An LSTM-based Machine Translation Approach for Question Answering over Knowledge Graphs.

Install git-lfs on your machine, then fetch all files and submodules:

git lfs fetch

git lfs checkout

git submodule update --init

Then install the Python dependencies:

pip install -r requirements.txt

You can extract the pre-generated data from data/monument_300.zip and data/monument_600.zip into folders with the respective names.
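The extraction step can also be scripted. The following is a minimal sketch using Python's standard zipfile module; extract_dataset is a hypothetical helper, not part of NSpM. Because the internal layout of the archives is an assumption here, the helper checks whether every entry already sits under a folder named after the archive and, if so, extracts next to the archive so that data/monument_300/monument_300/ is not created.

```python
import zipfile
from pathlib import Path


def extract_dataset(archive: str) -> Path:
    """Extract e.g. data/monument_300.zip into data/monument_300/ (hypothetical helper)."""
    archive_path = Path(archive)
    target = archive_path.with_suffix("")  # data/monument_300.zip -> data/monument_300
    with zipfile.ZipFile(archive_path) as zf:
        names = [n for n in zf.namelist() if n]
        prefix = target.name + "/"
        if names and all(n.startswith(prefix) for n in names):
            # Assumed layout: entries already live under monument_300/, so
            # extracting into data/ yields data/monument_300/ directly.
            zf.extractall(archive_path.parent)
        else:
            # Flat archive: create the folder and extract into it.
            target.mkdir(parents=True, exist_ok=True)
            zf.extractall(target)
    return target


if __name__ == "__main__":
    # Only touch archives that are actually present (run from the repository root).
    for name in ("data/monument_300.zip", "data/monument_600.zip"):
        if Path(name).exists():
            print("extracted to", extract_dataset(name))
```

Checking the layout instead of always extracting into a new folder keeps the helper correct for both flat and pre-nested archives.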

The template used in the paper can be found in a file such as annotations_monument.tsv. Throughout this tutorial, data/monument_300 is the ID of the working dataset. To generate the training data, launch the following commands:

mkdir data/monument_300

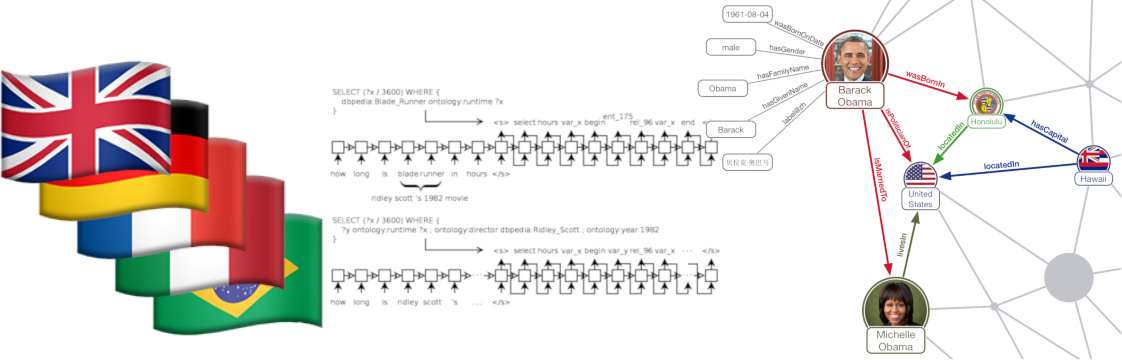

python generator.py --templates data/annotations_monument.csv --output data/monument_300

Next, build the vocabularies for the two languages (i.e., English and SPARQL) and split the data into train, dev, and test sets:

./generate.sh data/monument_300

Now go back to the initial directory and launch train.sh to train the model. The first parameter is the prefix of the data directory, and the second parameter is the number of training epochs:

./train.sh data/monument_300 12000

This command creates a model directory called data/monument_300_model.
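The whole sequence can be collected into one driver script. This is a hedged sketch: pipeline_commands, ask_command, run and model_dir are hypothetical names, not part of NSpM, and the script only reproduces the commands documented above. The one deliberate change is mkdir -p in place of mkdir, so that reruns do not fail when data/monument_300 already exists. By default it performs a dry run and only prints each command.

```python
import shlex
import subprocess
from typing import List


def model_dir(prefix: str) -> str:
    """Directory train.sh is documented to create, e.g. data/monument_300_model."""
    return prefix + "_model"


def pipeline_commands(prefix: str, templates: str, epochs: int) -> List[List[str]]:
    """Return the data-generation and training commands, in order."""
    return [
        ["mkdir", "-p", prefix],  # -p is an addition so reruns succeed
        ["python", "generator.py", "--templates", templates, "--output", prefix],
        ["./generate.sh", prefix],  # vocabularies + train/dev/test split
        ["./train.sh", prefix, str(epochs)],  # data prefix, number of epochs
    ]


def ask_command(prefix: str, question: str) -> List[str]:
    """Command that predicts a SPARQL query for a single question."""
    return ["./ask.sh", prefix, question]


def run(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            # check=True stops at the first failing step
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    prefix = "data/monument_300"
    run(pipeline_commands(prefix, "data/annotations_monument.csv", 12000))
    run([ask_command(prefix, "where is edward vii monument located in?")])
    print("model directory:", model_dir(prefix))
```

Passing lists to subprocess.run avoids shell quoting problems with questions that contain spaces or apostrophes. Calling run(..., dry_run=False) from the repository root would execute the steps for real.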

To predict the SPARQL query for a given question with a given model, run:

./ask.sh data/monument_300 "where is edward vii monument located in?"

Tests can only be run from the root directory:

py.test *.py

NSpM has been used in the following projects and integrations:

- Components of the Adam Medical platform, partly developed by Jose A. Alvarado at Graphen (including a humanoid robot called Dr Adam), rely on NSpM technology.

- The Telegram NSpM chatbot offers an integration of NSpM with the Telegram messaging platform.

- Since 2018, the Google Summer of Code program has supported 6 students working on the NSpM-backed project "A neural question answering model for DBpedia".

- A question answering system was implemented on top of NSpM by Muhammad Qasim.

@inproceedings{soru-marx-2017,
  author = "Tommaso Soru and Edgard Marx and Diego Moussallem and Gustavo Publio and Andr\'e Valdestilhas and Diego Esteves and Ciro Baron Neto",
  title = "{SPARQL} as a Foreign Language",
  year = "2017",
  booktitle = "13th International Conference on Semantic Systems (SEMANTiCS 2017) - Posters and Demos",
  url = "https://arxiv.org/abs/1708.07624",
}

- NAMPI Website: https://uclnlp.github.io/nampi/

- arXiv: https://arxiv.org/abs/1806.10478

@inproceedings{soru-marx-nampi2018,
  author = "Tommaso Soru and Edgard Marx and Andr\'e Valdestilhas and Diego Esteves and Diego Moussallem and Gustavo Publio",
  title = "Neural Machine Translation for Query Construction and Composition",
  year = "2018",
  booktitle = "ICML Workshop on Neural Abstract Machines \& Program Induction (NAMPI v2)",
  url = "https://arxiv.org/abs/1806.10478",
}

@inproceedings{panchbhai-2020,
  author = "Anand Panchbhai and Tommaso Soru and Edgard Marx",
  title = "Exploring Sequence-to-Sequence Models for {SPARQL} Pattern Composition",
  year = "2020",
  booktitle = "First Indo-American Knowledge Graph and Semantic Web Conference",
  url = "https://arxiv.org/abs/2010.10900",
}

- Primary contacts: Tommaso Soru and Edgard Marx.

- Neural SPARQL Machines mailing list.

- Join the conversation on Gitter.

- Follow the project on ResearchGate.

- Follow Liber AI Research on Twitter.