-

Notifications

You must be signed in to change notification settings - Fork 87

Logbook 2021 H1

Fixing issues with my Prometheus PR: The transaction map used to record confirmation time keeps growing and keys are never deleted so should fix that.

Trying to refactor code to use \case instead of pattern-matching on arguments, not sure if it's really better though. It spares couple lines of declaration for the function itself and removes the need for wildcards on unused arguments which is better, but might obscure some inner patterns potentially leading to more refactoring? -> Lead to proposed Coding standard.

While running through the demo we noticed that the nodes stop abruptly because there is a ReqTx coming in while in Closed state.

It's possible that some txs are in flight while closing the head so deifnitely a case we should handle gracefully. Yet there's the question of why we see a tx being in Wait state for a while.

- Writing a unit test to assert we don't crash in this case -> really, we should just simply not crash when we get an

InvalidEvent=> write a property test throwing events at a node and making sure it does not throw

Got annoyed by mock-chain not being configurable: Running the demo interacts with the tests.

We observe that when peers are not connected, messages are "lost" but the client behaviour is weird and does not say much about it. We might want to:

- Pass the list of known parties to the

Heartbeatso it knows which parties should be up from the get go - Get past outputs from the server so we can see the Connected/Disconnected

- Discussion about the

Waits: We should really not reenqueue all the time, but wait till the state change and reprocess the event.

How do we distinguish between transactions that are invalid now and ones that could be valid at some later point => This drags us into the weed of interpreting ledger's errors or crawling past txs/utxos to check which ones have been consumed.

- Providing some non-authoritative feeback when

NewTxis sent is better: Check tx is valid and provide aClientEffectreporting that

Adding TxValid / TxInvalid ServerOutput to provide feedback to the client when it submits a new transaction.

Noticed that the nix-build and with that also the docker build is not succeeding with this error:

error: builder for '/nix/store/5qzggg7ljjhmxr1jfvbfm48333vs76mm-hydra-prelude-lib-hydra-prelude-1.0.0.drv' failed with exit code 1;

last 10 log lines:

> Setup: Encountered missing or private dependencies:

> QuickCheck -any,

> aeson -any,

> cardano-binary -any,

> generic-random -any,

> network -any,

> quickcheck-instances -any,

> random-shuffle -any,

> warp -any

>

For full logs, run 'nix log /nix/store/5qzggg7ljjhmxr1jfvbfm48333vs76mm-hydra-prelude-lib-hydra-prelude-1.0.0.drv'.

This indicates that cabal's build plan is missing those dependencies. As we are somewhat "pinning" the whole build plan using nix to avoid long Resolving dependencies..., cabal does not automatically create a new build plan here. So errors like these can be resoled by updating the materialized dependencies:

Temporarily remove plan-sha256 (and materialized?) from default.nix and run

$ nix-build -A hydra-node.project.plan-nix.passthru.calculateMaterializedSha | bash

trace: To make project.plan-nix for hydra-poc a fixed-output derivation but not materialized, set `plan-sha256` to the output of the 'calculateMaterializedSha' script in 'passthru'.

trace: To materialize project.plan-nix for hydra-poc entirely, pass a writable path as the `materialized` argument and run the 'updateMaterialized' script in 'passthru'.

1ph1yazxqrrbh0q46mdyzzdpdgsvv9rrzl6zl2nmmrmd903a0805

The provided 1ph1yazxqrrbh0q46mdyzzdpdgsvv9rrzl6zl2nmmrmd903a0805 can be set as new plan-sha256 and then

$ nix-build -A hydra-node.project.plan-nix.passthru.updateMaterialized | bash

these 3 derivations will be built:

/nix/store/zfm0h0lfm4j025lsgrvhqwf2lirpqnp1-hydra-poc-plan-to-nix-pkgs.drv

/nix/store/90dk71nv40sppysrq35sqxsxhyx6wy9x-generateMaterialized.drv

/nix/store/dg34q3d7v2djmlrgwigybmj93z8rw330-updateMaterialized.drv

building '/nix/store/zfm0h0lfm4j025lsgrvhqwf2lirpqnp1-hydra-poc-plan-to-nix-pkgs.drv'...

Using index-state 2021-06-02T00:00:00Z

Warning: The package list for 'hackage.haskell.org-at-2021-06-02T000000Z' is

18807 days old.

Run 'cabal update' to get the latest list of available packages.

Warning: Requested index-state 2021-06-02T00:00:00Z is newer than

'hackage.haskell.org-at-2021-06-02T000000Z'! Falling back to older state

(2021-06-01T22:42:25Z).

Resolving dependencies...

Wrote freeze file: /build/tmp.E719eYkdj0/cabal.project.freeze

building '/nix/store/90dk71nv40sppysrq35sqxsxhyx6wy9x-generateMaterialized.drv'...

building '/nix/store/dg34q3d7v2djmlrgwigybmj93z8rw330-updateMaterialized.drv'...

Should have updated the materialized files, ready to be checked in.

Merging JSON PR with some changes in EndToEndSpec to make things more readable

- We noticed that instances of ClientInput / ServerOutput for JSON are handwritten, which is annoying to maintain.

Trying to find a way to have those generated automatically. It's annoying right now because

ServerOutputconstructors have no field names so we would get something like{"tag": "ReadyToCommit", "content": [ ...]}which is annoying. - Tried to use

genericShrinkfor defining shrinker forServerOutputbut it does not work because of overlapping instances. - Need to move types to own module and only export the type and the constructors, not accessors, so that the latter get used by generic JSON deriving but not usable for partial fields access. -> Some legwork, do it later.

Working on improving notifications to end users:

- Renaming

sendResponse->sendOutputfor consistency with the changes in types for API stuff. - Writing a test for API Server to check we get some error message when input cannot be serialised

- Implementing

Committedoutput message for clients to be notified of all commits - Got an unexpected test failure in the init -> commit test because we were not waiting for

ReadyToCommit. Now fixing more failures on BehaviorSpec because we are adding more messages in the mix -> Tests are very strict in the ordering of messgaes read and checked, unlikeHeadLogicSpecorEndToEndSpec.BehaviorSpecis somehow becoming a liability as tests are somewhat hard to change and very brittle to changes in ordering of messages and adding new messages => it this unavoidable?

Working on querying current ledger's state: Client send a GetUTxO input message and receives in return an output listing all consumed UTxOs, and possibly the list of transactions.

- Writing a predicate to wait until some message is seen, with intermediate messages being simply discarded. This seems not to work out of the box though, as there is a timeout thrown. The problem was that no node was doing snapshotting, so there was no way I could observe

SnapshotConfirmed! - Adding the capability to query Committed Utxo before head is open

Having a stab at integrating prometheus stats collector in our demo stack and possibly adding more metrics collection:

- Distribution of confirmation time for each tx?

- Number of confirmed tx

Noticed that running MonitoringSpec tests which use a warp server to run monitoring service ends with:

Hydra.Logging.Monitoring

Prometheus Metrics

tests: Thread killed by timeout manager

provides count of confirmed transactions from traces

tests: Thread killed by timeout manager

provides histogram of txs confirmation time from traces

This is apparently a recent issue

-

Review Heartbeat PR

- Discussion about Peers vs. Parties -> It makes sense to use

Partybecause we interested in having

- Discussion about Peers vs. Parties -> It makes sense to use

-

Review JSON PR Have "generic" in all sense of the term instance of

Arbitrarypossibly using generic-random -> Write a coding convention about it:- It makes sense to have one

Arbitraryinstance for all "API" types - We can use that in tests when we don't care about the actual value and get more coverage

- We can refine it through

newtypewrappers when needed - Does not preclude the use of specialised generators when testing properties

- It makes sense to have one

-

Missing from demo:

- remove snapshotted txs from the seen txs

- query current ledger state

- provide feedback upon commit

- provide feedback upon invalid tx

- latch client effects to allow clients to "catch up"

-

Discussed demo story:

- Three parties because this is not lightning

- Websocket clients connected to each node

- Init, no parameters because it's a "single head per node" right now

- Commit some UTxO (only numbers for simple ledger) taking turns, last node will also CollectCom

- use numbers indicating "ownership" of party (11, 12 for party 1), but Simple ledger has no notion of ownership or allowed to spend

- Submit a couple of transactions and occasionally query utxo setin between

- a valid tx

- an invalid tx -> because already spent (using the same utxo)

- a "not yet valid" tx -> because utxo not yet existing (gets put on hold)

- a tx "filling the gap" and making the one before also valid -> both are snapshotted

- Close the head, all hydra nodes should

NotContest, contestation period should be around 10 seconds - Fanout get's posted and is seen in by all nodes

We want to send a ReqSn after each (or window) ReqTx for the coordinated protocol

- Renamed and simplified

confirmedTxs->seenTxsas a list of TXs - Renamed

confirmedUTxO->seenUTxO - Changing

TxConfirmednotification toTxSeen=> There are notconfirmedTxsin theClosedoutput anymore, only UTxO => There are no hanging transactions anymore because transactions become really valid only after a snapshot is issued - Rip out

AckTxfrom the protocol - Check non leader does not send

ReqSn

There is a problem with leader "election" in the ReqTx: By default, we use number 1 but really we want to use the index of a party in a list of parties.

This list should not contain duplicate and the ordering should be the same for all nodes. And independent of the actual implementation of the ordering, like an Ord instance in Haskell. => this should be specified somewhere in our API? or the contracts actually...

- Replace

allPartieswithotherPartiesso that we make theEnvironmentmore fail proof - Slightly struggling to make the

isLeaderfunction right, sticking to hardcoded first element of the list of parties in the current head -

initEnvironmenthad snapshot strategy hardcoded toNoSnapshot=> predicate for leader fails. Also had an off-by-one error in determining the leader, using index 1 instead of 0

What happens when the leader concurrently emits 2 different snapshots with different content? This can happen if the leader receives 2 different ReqTx in "a row"?

In hydra-sim this is handled by having a NewSn message coming from a daemon that triggers the snapshotting: This message just takes all "confirmed" transactions accrued in the leader's state and create a new snapshot out of it, hence ReqTx can be processed concurrently as long as they cleanly apply to the current pending UTxO. We don't have such a mechanism in our current hydra-node implementation so the ReqSn emission is triggered by the ReqTx handling which leads to potentially conflicting situation. Possible solutions are:

- When leader emitted a

ReqSn, change the state andWaitif there are moreReqTxcoming in until the snapshot has been processed by all nodes? - Implement snapshotting thread that wraps the

HeadLogicinside the node and injectsNewSnmessages at the right time?

- From the ensemble session we identified, that we would be fine to just accrue

seenTxswhile the current snapshot is getting signed and a later snapshot would include them. - We also identified where changes are necessary in the protocol, but exercising this in the

HeadLogicSpecis unconfortable as this is ideally expressed in assertions on the whole node's behavior on processing multiple events - So I set off to revive the

Hydra.NodeSpec, which ought to be the right level of detail to express such scenarios - For example:

- Test that the node processes as the snapshot leader

[ReqTx, ReqTx, ReqTx]into[ReqSn, ReqSn, ReqSn]with a single tx each, and - (keeping the state) processes then

[ReqSn, ReqTx, ReqTx]into[AckSn]plus some internal book keeping onseenTxs

- Test that the node processes as the snapshot leader

- Trying to reduce

Resolving dependencies...time. This is slowing us down as it can take ages recently. - Likely this is coming from

cabalas it needs to solve all the dependencies and constraints. As we have added some more dependencies recently this time got longer (> 10mins for some of us) - Idea: side-step dependency solving by keeping the

plan.json; this is what.freezefiles are doing? Usinghaskell.nix, this is what materialization is doing - Followed the steps in the

haskell.nixmanual and it seems to be faster now for me.. but also onmaster, so maybe the fact, that a plan is cached is also cached in my/nix/storenow?

Troubleshooting NetworkSpec with Marcin, it appears the problem comes from the ipRetryDelay which is hardcoded to 10s in the Worker.hs code in Ouroboros. Increasing the test's timeout to 30s makes it pass as the worker thread now has the time to retry connecting. Here is the relevant part of the code:

-- We always wait at least 'ipRetryDelay' seconds between calls to

-- 'getTargets', and before trying to restart the subscriptions we also

-- wait 1 second so that if multiple subscription targets fail around the

-- same time we will try to restart with a valency

-- higher than 1.

Note that Subscriptions will be replaced by https://input-output-hk.github.io/ouroboros-network/ouroboros-network/Ouroboros-Network-PeerSelection-Governor.html so it does not make sense to try to make this delay configurable.

Working together on signing and validating messages. We now correctly pass the keys to the nodes at startup and when we initialise the head.

We won't care about AckTx because in the coordinated protocol, we don't sign individual transactions, only the snapshots.

Writing a unit test in the head to ensure we don't validate a snapshot if we get a wrong signature:

- Modify

AckSnto pass aSigned Snapshotwhich will contain the signature for the given data - Do we put the data in the signature? probably not usually

Why is there not a Generic instance for FromCBOR/ToCBOR?

There is a Typeable a constraint on FromCBOR for the sake of providing better error reporting. Typeable is special: https://ghc.gitlab.haskell.org/ghc/doc/users_guide/exts/deriving_extra.html#deriving-typeable-instances

Discussion around how to define "objects" we need for our test, esp. if we sould explicitly have a valid signature for the test which checks leadershipr -> this begs for having test builder to help us define "interesting" values

We need different tests for the verification of signature of snapshot:

- snapshot signed is not the right one but is signed by right party

- snapshot is the right one but signed by wrong party (unknown one or invalid key)

- check that when we received a valid signature from an unknown party, we don't use it as AckSn

Going to continue pairing beginning of afternoon to get to a green state, then working on our own:

- JSON interface

- Saner Heartbeat

- Merging NetworkSpec stuff

- Walk-through of recent

ExternalPABwork, made it finally pass tests and merge it tomasteras an "experiment covered by tests" - Discussed issues with

ouroboros-networking, re-/connection anderrorPolicies - Reflected on current work backlog, prototype scope (on the miro board), as well as what we think would be a meaningful scope for the next and following quarter (in Confluence)

- Started to write down instructions on how to use the

hydra-nodein a demo scenario here: https://github.com/input-output-hk/hydra-poc/tree/demo - Heartbeating is somewhat in the way right now

- The

--node-idis only used for the logs right now, maybe use change the command line options to just use 4000 +node-idwhen--api-portnot set explicitly etc?

Continued work on the "external" PAB chain client

- All kinds of dependency errors led me to copying the

cabal.projectfrom the latestplutus-starterverbatim (below our ownpackages) - This then also required an

allow-newerbecause ofattoparsecas dependency ofsnap-serveras dependency ofekgas dependency ofiohk-monitoring - Hit a wall as everything from the branch compiled but

masterwas still broken -> paired with AB to fix master - After merging

masterback in, ledger tests were still failing - Weirdly the (more recent?)

cardano-ledger-specsversion viaplutusis now havingapplyTxsTransitionagain as exported function. So I went on to change (back) theHydra.Ledger.MaryTestimplementation - One of

hydra-plutustests was failing- Investigated closer with AB and we identified that

assertFailedTransactionwas not providing our predicate with any failed transactions (but we expect one) - Asked in the #plutus channel, and the reason is, that the wallet now does validate txs before submitting them and they would not be part of

failedTransactionsanymore - However there is no alternative to assert this (right now - they are working on it), so I marked the tests as

expectFail

- Investigated closer with AB and we identified that

Still having troubles with master not building and tests failing: 0MQ based tests for mock chain are hanging

-

Found "faulty" commit to be the one replacing the

NaturalinPartywith an actual Verification key which is not unexpected. -

Replacing

arbitraryNaturalby agenPartywhich contains anundefinedvalue so that I can track what happens and where this is evalauted -

Looks like it's hanging in the

readsfunctioninstance Read Party where readsPrec = error "TODO: use json or cbor instead"is what we have, going to replace with a dummy value just for the sake of checking it's the issue

We are using

concurrently_which runs until all threads are finished, replacing withrace_to run until one of the threads finishes so that we catch exceptions earlier. I suspect one of the thread crashes due to the faulty read and the other ones are hanging.

Pairing with SN, we managed to get ZeroMQ test to stop hanging by implementing proper Read instance for Party and orphan for MockDSIGN

Still having test failures in options and serialisation

Working on PR https://github.com/input-output-hk/hydra-poc/pull/25

- Network tests are still flaky, esp. the Ouroboros ones which fail every so often in an odd way: the timeout that expires is the inner one waiting for the MVar and not the outer one

- Depending on hydra-node in local-cluster seems kinda wrong so trying to sever that link

Trying to remove some warnings in cabal build about incorrect versions, aparently coming from https://github.com/haskell/cabal/issues/5119 which is not fixed (last comment is from 11 days ago and is about looking for a good soul to make a PR...). Going to leave as it is now, unfortunately.

Trying to fix flackiness of network tests, possibly using traces as a synchronisation mechanism rather than waiting:

- Running the tests repeatedly does not reproduce the errors seen. The traces dumped are not very informative on what's going wrong unfortunately...

- Running the tests with

parallelbreaks, probably because the Ouroboros tests reuse the same ports? - Using local Unix sockets with unique names would make the tests more reliable but then it would not test the same thing.

Refactoring local-cluster code to remove Logging --> shaving yak moving Ports module which could also be useful in hydra-node. This lead to this PR to unify/simplify/clarify code in local-cluster project.

- Use random ports allocator in

NetworkSpectests to ensure tests use own ports - Ouroboros tests are now consistently failing when allocating random ports, seems like tests are either very fast, or timeout, which means there is a race condition somewhere.

- Oddly enough, test in CI fails on the inner wait for taking recevied value from MVar

- Worked together on adding keys for identifying parties

Quick discussion about https://github.com/input-output-hk/hydra-poc/pull/23 on how to make tests better, solution to test's synchronization problem is to do the same thing as in EndToEnd test:

- If client's connection fails, retry until some timeout fires

- Sync the sending of message with client's being connected

Then we did not actually program but went through MPT principles to understand what their use entails, and get some intuitions on how they work

Going through construction of MPT: https://miro.com/app/board/o9J_lRyWcSY=/?moveToWidget=3074457360345521692&cot=14, with some references articles in mind:

- https://medium.com/@chiqing/merkle-patricia-trie-explained-ae3ac6a7e123

- https://blog.ethereum.org/2015/11/15/merkling-in-ethereum/

- https://easythereentropy.wordpress.com/2014/06/04/understanding-the-ethereum-trie/

- https://eth.wiki/en/fundamentals/patricia-tree

Some conclusions:

- We need to bound the size of UTxO in the snapshot and the number of hanging transactions to make it sure honest nodes can post a

CloseorContestas a single transaction - The Fanout is not bounded in size because it can be made into several transactions, but the close is because it needs to be atomic otherwise adversaries could stall the head and prevent closing or contestation by exhausting the contestation period (something like slowloris?)

- Nodes should track the size of snapshots and hanging txs in the head state and refuse to sign more txs when reaching a limit

- MPTs are useful for 2 aspects: having a O(log(n)) bound on the siez of proof for each txout to add/remove, enabling splitting of fanout

- We need to bound the number of UTxO "in flight", which is what the concurrency level is about, but we need not to track the TX themselves as what counts in size is number of txout add/removed

- what about size of contracts? if adding MPT handling to contracts increase the size too much, this will be annoying

- size of types, even when church-encoded

Syncing for solo work:

- AB: Coordinated protocol, tying loose ends on PRs

- MB: docker

- SN: Key material configuration

Considering adding graphmod generated diagram of modules in the architecture documents.

Graph can be generated with:

find . -name \*.hs | grep -v dist-newstyle | graphmod -isrc/ -itest | tred | dot -Tpdf -o deps.pdf

Which could be run as part of the build and added to the docs/ directory

Removes MockTx everywhere in favor of SimpleTx, including in "documentation". This highlights once again that Read/Show are not ideal serialisation formats.

Improving WS ServerSpec tests, it's annoying that runServer cannot be given a Socket but always requires a Port.

-

Warp provides

openFreePortfunction to allocate a randomSocketand aPort, and it happens we have warp package in scope so let's use that. - Using a "Semaphore" to synchronize clients and responses sending but still having a race condition as the clients can start before the server so are unable to connect.

-

runClient rethrows an exception thrown by

connectto the socket, and concurrently_ rethrows it too, but withAsync provides anAsyncwhich "holds" the return value, or exception, thrown by inner thread. - When running the test client, I need to catch exceptions and retry with a timeout limit

- Had a quick look into the "Configure nodes with key pairs" topic and it is actually quite undefined:

- Do we really want to parameterize the node with other parties pubkeys or rather pass them with the other

HeadParametersfrom the client using theInitcommand? - Where would the key material come from? i.e. would we use the

cardano-cli, another cardano tool or something we create? - I'm a bit overwhelmed by the wealth of "crypto modules" providing key handling in the already existing cardano ecosystem, here some notes:

-

cardano-base/cardano-crypto-class/src/Cardano/Crypto/DSIGN/Class.hsalong with it'sEd25519seems to be the very basic de-/serialization of ed25519 keys and signing/verifying them - The cardano-cli seems to use

cardano-node/cardano-cli/src/Cardano/CLI/Shelley/Key.hsfor deserializing keys -

readVerificationKeyOrFileindicates that verification keys are stored in an "TextEnvelope" as Bech32 encoded values, also that there are multiplekeyroles -

cardano-node/cardano-api/src/Cardano/Api/KeysShelley.hscontains several key types (roles) - how different are they really? - Normal and extended keys are seemingly distinguished:

- normal are basically tagged

Shelley.VKeyfromcardano-ledger-specs - extended keys are newtype wrapped

Crypto.HD.XPubfromcardano-crypto

- normal are basically tagged

- The

Shelley.VKeyfrom above is defined incardano-ledger-specs/shelley/chain-and-ledger/executable-spec/src/Shelley/Spec/Ledger/Keys.hsand is a newtype-wrappedVerKeyDSIGNfromcardano-crypto-class, parameterized withStandardCryptodefined inouroboros-network/ouroboros-consensus-shelley/src/Ouroboros/Consensus/Shelley/Protocol/Crypto.hs.. which is selectingEd25519viatype DSIGN StandardCrypto = Ed25519DSIGN

-

- How deep into this stack 👆 do we want dive / how coupled to these packages do we want to be?

- Merkle Patricia Trees (MPT) are supposed to come as a potential solution to the problem w.r.t to the size and feasibility of close / contest / fanout transactions.

- Verifying the membership of an element to the tree requires the MPT root hash, the element itself, and all the nodes on the path from the element to the root. Note that the path is typically rather short, especially on "small" (e.g. < 1000) UTxO sets where the chances of finding common prefixes longer than 3 digits is already quite small.

- An MPT are bigger than a simple key:value map since they include hashes of each nodes. Although on the plus side, since they are trees, MPT can be split into subtrees.

- One thing is still a little bit unclear to me w.r.t the "security proof" coming with the verification of a tree. Unlike Merkle trees, which requires all the OTHER nodes' hashes to perform a verification, (that is, requires the missing information needed to re-hash the structure and compare it with the root), MPT require the nodes on the path and prove membership by simply walking the path from the root down to the element. How does one prove that the given hashes do indeed correspond to what they claim? In the case of Ethereum (which also uses MPT for storing various piece of information, like the balances of all accounts), a node maintain the entire MPT, so arguably, if someone provides a node with a path and ask for verification, then necessarily if the node finds a path down to the element then the proof was valid (provided that the node itself only maintain a valid MPT, but that's a given). In the case of Hydra and on-chain validator however, the MPT is meant to be provided by the user in the Close / Contest or Fanout transactions. There's therefore no guarantee that the tree will actually be valid and it must be verified, which requires the entire tree to re-compute the chain of hashes (or more exactly, like Merkle trees, it requires all the other nodes, but since each layer can have 16 branches... all the other nodes is almost all the tree...).

- Quick introduction to impact mapping technique by Arnaud

- We exercise a quick example on the miro board

- Starting discussion about our goal - start with "why"

- It should be SMART (the acronym)?

- Our vision is "Hydra is supporting growth and adoption of the cardano ecosystem"

- But that is not a goal, per se; it is not measurable

- There is also OKRs (in how to specify goals)

- Is our goal to reach X number of transactions done through hydra, or rather X percent of all Cardano transactions being done on hydra? i.e. should it be relative?

- We can imagine many ways to contribute to many different goals, but which one is most valuable to us, to the business, to the world?

- Is value locked or number of transactions a useful metric for adoption?

- What are people using Cardano right now?

- NFT sales since mary

- Oracle providers

- Metadata for tracking and recording things

- Are fees a problem right now for Cardano? i.e. is it a good goal to reduce them using Hydra (in some applications)

- We are building a solution for the community; in the spirit of open-source development

- We are not building a turn-key-solution for individual customers

- However, we could build one showcase-product using a more general platform

- Creating a reference implementation is all good & fine, also the theoretical possibility of other implementations interacting with our solution; but we should not rely on it for our success (seeing Cardano)

- We part by thinking about "the goal" and meet again next week

Implementing Wait for ReqTx

- We don't need to add more information to

Waitas we know the event it carries from the event processor - But we would need it for tracing/infomration/tracking purpose higher up in the stack?

Writing a BehaviorSpec to check we are indeed processing the Wait:

- Although there is a

panicin the event processing logic, it's not forced so we don't see it fails there - ⇒

waitForResponsetransaction confirmed - We see test failing on a timeout, still not panicking through -> Why?

- The test fails before reaching

Waitfailure -> removing verification inNewTxfor the moment in order to ensure we actuallypanic - Do we need

Waitor can we just return aDelayeffect? Seems like even though they are equivalent, they are actually 2 different concepts, we want theoutcometo express the fact the state in unchanged, and unchangeable, while waiting

What's next?

- there's a

panicin ackTx - there's another one in

ackSn-> need to check all transactions apply - There's no way we can currently express the paper's constraints for

AckSnbecause messages are always ordered and all nodes are supposedly honest - in the paper we receive a list of hashes for transactions instead of transactions again -> optimisation for later

Back to reqSn:

- we can only right now write unit tests and not higher-level ones, because we don't have a way to construct a ahead-of-time snapshot. possible solutions: * write an adversarial node * increase concurrency level of event processing to produce out-of-order (or lagging code) * remove the limitation on network messages ordering so that we can simulate reordering of messages?

- lifting the condition on snapshot number to a guard at the case level expressed as

requirein the paper - adding more unit tests with ill-constructed snapshots, distinguishing

Waitresults from returnError

Implementing leader check in ReqSn, got surprised by the behaviour of REqSn and isLeader:

- having declarations far from their use in tests is annoying

- snapshot leader is still hard-coded to one

Renaming SnapshotAfter to SnapshotAfterEachTx

Signing and verifying is the next interesting to do as this will lead us to replace the fake Party type with some actually interesting data for peers in the network, eg. pub/private keys, signing, multisigning, hashes...

Status update on Multi-Signature work by @Inigo and how to proceed with "verification over a non prime order group"

- MuSig2 is non defined about non-prime curves

- Ristretto: encode non-prime group specially to "protect security?"

- Goal is still that the verifier does not need to know about this

- Where to go frome here?

- Sandro, Peter and Matthias would be able to work through current findings, but need to find time for it

- How urgent? Not needed right now.. but in the next weeks/months (quarter) would be good

- There is a rust implementation of Ristretto-Ed25519 used by Jormungandr?

- https://github.com/input-output-hk/chain-libs/blob/efe489d1bafa34ab763a4bfdddb6057d0080033a/chain-vote/src/gang/ristretto255.rs

- Uses ristretto for all cryptographic operations, maybe not fully transparent for verifier.

- Inigo will have a look whether this is similar.

- In general we think this should be possible (without changing the verifier), but we noted that we would need to know if this plan does not work out rather earlier than later

We want to speak about the On-Chain-Verification (OCV) algorithms:

- Q: OCV close is confusing, does "apply a transaction to snapshot" needs to be really done on-chain? it is theoretically possible, but practically too expensive in terms of space and time

- Q: Leaving (hanging) transactions aside, would closing / contesting a head mean that the whole UTxO set need to be posted on chain?

- Verifying signatures is possibly expensive, can't be offloaded to the ledger as "parts" of the tx

- When closing a head, you do not need to provide the full tx on close -> Merkle trees allow to post only hashed information

- yet? maybe avenue of improvement

- For distributing outputs (fanout), splitting it in multiple txs ought to be used

- MPT are pretty essential to implement the protocol

- We would only provide proofs of transactions being applicable to a UTXO set

- What happens if all participants lose the pre-images (not enough info on chain)?

- The security requires that at least one participant behaves well

- Losing memory of everything is not well-behaved

- Blockchains are not databases!

- Simulations showed that snapshotting is not affecting performance, so number of hanging transactions could be bounded

- Snapshotting after every transaction would limit number of txs per snapshot naturally

- Does not require much data being submitted or time expensive things (e.g. tx validation)

- Whats the point of signing transactions in the first place?

- Helps in performance because of concurrency

- Especially when txs do not depend on each other

- How expensive is signature validation in plutus?

- Discussions around, not sure, rough guesses at first, but then:

- 42 microseconds on Duncan's desktop

- How would be find out whether something fits in space/time with plutus

- Ballpark: micro or milliseconds is ok, more is not

- In the plutus repository there is a benchmark/tests and we could compare it with that (also with marlowe scripts)

- Re-assure: When broadcasting Acks we don't need Confs, right? Yes

- Pushed some minor "Quality-of-life" changes to

master:- Use a

TQueueinstead ofTMVaras a channel to send responses to clients in test, because the latter makes the communication synchronous which blocks the node if a client does not read sent responses - Add Node's id to logs because logs in

BehaviorSpeccan come from different nodes - Remove one

panicfromupdate, return aLogicErrorand throw returned exception at the level ofeventHandlerbecause otherwise we fail to properly capture the log and don't know what sequence of action lead to the error

- Use a

- Implemented a

SimpleTxtransaction type that mimics UTxOs and pushed a PR

Going to modify HeadLogic to actually commit and collect UTxOs.

- Changed Commit and CommitTx to take UTxOs. To minimize change I tried to make

MockTxaNumbut this was really silly so I just bit the bullet and changed all occurences with a list of numbers to aUTxO MockTxwhich is jsut a[MockTx] - Tests are now inconsistent because we return empty snapshots and do not take into account the committed UTxOs. I modified

BehaviorSpecto reflect how close and finalise txs should look like and now they all fail of course, so I will need to fix the actual code. - Now facing the issue that UTxO tx is not a Monoid, which is the case only because UTxOs in Mary is not a monoid: https://input-output-rnd.slack.com/archives/CCRB7BU8Y/p1623748685431600 Looking at UTxO and TxIn definition, it seems defining a Monoid instance would be straightforward... I define an orphan one just for the sake of moving forward.

- We can now commit and collect properly UTxOs and all unit tests are passing, fixing ete test

- ETE test is failing after modification with a cryptic error: Seems like it fails to detect the

HeadIsOpenmessage with correct values, now digging into traces- Found the issue: The

Commitcommand requires a UTxO whihc in our case is a list of txs. This reveals the limits of using Read/Show instance for communicating with the client, as it makes the messages dependent on spaces and textual representation which is hard to parse https://miro.com/app/board/o9J_lRyWcSY=/?moveToWidget=3074457360210681480&cot=14

- Found the issue: The

- submitted PR #20

Started work on a more thorough property test that will hopefully exercise more logic, using SimpleTx:

-

Property test is hanging mysteriously, probably because the run keeps failing, trying to make sense of it by writing a single test with a single generated list of txs and initial UTxO

-

The list was geneated in reverse order and reversed when applied, now generating in the correct order which implies modifying the shrinker. I changed the generator for sequences to take a UTxO but did not change the maximum index. This works fine when starting with

memptybut of course not when starting with something... -

Implemented waiting for

HeadIsFinalisedmessage but I still get no Utxo, so most probably I am not waiting enough when injecting new transactions. Tried to add a wait forSnapshotConfirmedbut was not conclusive either ⇒ Going to capture the traces of each node and dump them in case of errors, captured through theIOSimtracing capabilities. -

Capturing traces in IOSim, but now some other tests are failing, namely the ones about capturing logs which totally makes sense.

-

Wrote a tracer for

IOSimbut I fail to see the logs usingtraceMwhich usesDynamicbased tracing. Well, the problem is "obvious": I am trying to get dynamicHydraLoglogs but I only haveNodelogs... -

Still having failing tests when trying to apply several transactions, but I now have a failure:

FailureException (HUnitFailure (Just (SrcLoc {srcLocPackage = "main", srcLocModule = "Hydra.BehaviorSpec", srcLocFile = "hydra-node/test/Hydra/BehaviorSpec.hs", srcLocStartLine = 229, srcLocStartCol = 31, srcLocEndLine = 229, srcLocEndCol = 110})) (ExpectedButGot Nothing "SnapshotConfirmed 7" "TxInvalid (SimpleTx {txId = 2, txInputs = fromList [5,6,9], txOutputs = fromList [10,11]})"))What happens is that I try to apply transactions to quickly when we get the

NewTxcommand, whereas it should actuallyWaitfor it to be applicable. I guess this points to the need of handlingWaitoutcome... -

Handling

Waitis easy enough as we already have aDelayeffect. The test now fails beacuse thewaitForResponsechecks the next response whereas we want to wait for some response. -

The test fails with a

HUnitFailurebeing thrown which is annoying because normally I would expect the failure to be caught by therunSimTraceso I can react on it later on. The problem is that theselectTraceEventsfunction actually throws aFailureExceptionwhen it encounters an exception in the event trace, which is annoying, so I need a custom selector. -

Wrote another function to actually retrieve the logs from the

EventLogbut it's choking on the amount of data and representing the logs. Trimming the number of transactions finally gives me the logs, which are somewhat huge:No Utxo computed, trace: [ProcessingEvent (ClientEvent (Init [1,2])) , ProcessingEffect (OnChainEffect (InitTx (fromList [ParticipationToken {totalTokens = 2, thisToken = 1},ParticipationToken {totalTokens = 2, thisToken = 2}]))) , ProcessingEvent (OnChainEvent (InitTx (fromList [ParticipationToken {totalTokens = 2, thisToken = 1},ParticipationToken {totalTokens = 2, thisToken = 2}]))) , ProcessedEffect (OnChainEffect (InitTx (fromList [ParticipationToken {totalTokens = 2, thisToken = 1},ParticipationToken {totalTokens = 2, thisToken = 2}]))) , ProcessedEvent (ClientEvent (Init [1,2])) .... , ProcessingEvent (NetworkEvent (ReqTx (SimpleTx {txId = 2, txInputs = fromList [3,6,7,8,11,12], txOutputs = fromList [13,14,15,16,17,18]}))) , ProcessingEffect (NetworkEffect (AckTx 1 (SimpleTx {txId = 2, txInputs = fromList [3,6,7,8,11,12], txOutputs = fromList [13,14,15,16,17,18]}))) , ProcessedEffect (NetworkEffect (AckTx 1 (SimpleTx {txId = 2, txInputs = fromList [3,6,7,8,11,12], txOutputs = fromList [13,14,15,16,17,18]}))) , ProcessedEvent (NetworkEvent (ReqTx (SimpleTx {txId = 2, txInputs = fromList [3,6,7,8,11,12], txOutputs = fromList [13,14,15,16,17,18]}))) , ProcessingEvent (NetworkEvent (AckTx 1 (SimpleTx {txId = 2, txInputs = fromList [3,6,7,8,11,12], txOutputs = fromList [13,14,15,16,17,18]}))) , ProcessedEvent (NetworkEvent (AckTx 1 (SimpleTx {txId = 2, txInputs = fromList [3,6,7,8,11,12], txOutputs = fromList [13,14,15,16,17,18]})))] -

While running a longer test to apply transactions, I got the following error:

FatalError {fatalErrorMessage = "UNHANDLED EVENT: on 1 of event NetworkEvent (AckSn (Snapshot {number = 1, utxo = fromList [1,3,4,5,6,7,8,9], confirmed = [SimpleTx {txId = 1, txInputs = fromList [2], txOutputs = fromList [3,4,5,6,7,8,9]}]})) in state ClosedState (fromList [1,3,4,5,6,7,8,9])"}Which is quite unexpected, indeed.

Reviewing what needs to be done on HeadLogic

- InitTx parameters should probably be PubKeyHash, participation tokens are not really used in the protocol itself, they are part of the OCV

- Is the result of observing init tx different than posting it -> not at this stage

- commits are not ok -> we should replace that with UTxOs

- collectCom is faked -> empty UTxO and does not care with committed values -> should be a parameter of CollectComTx that's observed

- do the chainclient knows about tx? -> it needs to in order to create/consumes UTxOs at least in the

collectComfanouttransactions

discussion about how to use the mainchain as an event sourced DB?

-

Is the chain the ultimate source of truth? Is our property (that all chain events should be valid in all states) about chain events true?

- committx are not only layer 2, they are observable on chain and not only in the head

- the committx event does not change the state, but for the last Commit which triggers the

CollectComTx - a node could bootstrap its state from the chain by observing the events there

-

Pb: How to fit a list of tx inside a tx?

- you might not care because you are not going to post a tx which contains those, because nodes contain some state that allow them to check

- we really want to check that with the research team: How does the closetx/contest really should work on-chain?

- if the closetx is not posting the full data, there is no way observing it from the chain one can reconstruct it

-

What would we be seeing on the chain? it should be ok to post only proofs

- verify the OCV code for Head

-

what's the safety guarantees after all?

Problems w/ current head protocol implementation:

- size of txs, putting list of txs and snapshots as part of Close and Context will probably overcome the limits of Tx size in cardano

- OCV requires validation transactions and this seems very hard to do in the blockchain, or at least computationally intensive

- size of UTxOs in the FanOut tranasction might be very large

A lot of complexity comes from the fact txs can be confirmed outside of snapshots and we need to account for those supernumerary txs on top of snapshots, what if we only cared about snapshots?

- Continue work on getting a

hydra-pabrunning- The fact that all our

Contracts are parameterized byHeadParametersis a bit annoying, going to hardcode it like in theContractTestfor now - Using it in a PAB seems to require

ToSchemainstances,TxOuthas no such instances -> orphan instances - Compiles now and available endpoints can be queried using HTTP:

curl localhost:8080/api/new/contract/definitions | jq '.[].csrSchemas[].endpointDescription.getEndpointDescription' - Added code which activates all (currently 2) wallets and writes

Wxxx.cidfiles similar as Lars has done it in the plutus pioneers examples - The de-/serialization seems to silently fail

- e.g.

initendpoint does have a()param -

curl -X POST http://localhost:8080/api/new/contract/instance/$(cat W1.cid)/endpoint/init -H 'content-type: application/json' --data '{}'does not work, but returns200 OKand[]as body -

curl -X POST http://localhost:8080/api/new/contract/instance/$(cat W1.cid)/endpoint/init -H 'content-type: application/json' --data '[]'does work and simulate submitting the init tx - Also, after failing like above, the next invocation will block!?

- Multiple correct invocations of

initdo work

- e.g.

- The fact that all our

- Messed around with getting ouroboros-network and the ledger things work again (..because of bumped dependencies..because of plutus)

- Created a first

ExternalPABwhich just usesreqto invoke the "init" endpoint

Read the artcile about Life and death of plasma

- gives a good overview of Ethereum scalability solutions

- starts explaining former Plasma ideas and more recent zk- and optimistic rollups

- more details about rollups

- the zero-knowledge (zk) stuff is still a bit strange, but is maybe just more fancy crypto in place to do the same as Hydra's multi-signatures incl. plutus validation?

- optimistic rollups remind me of collateralization / punishment mechanisms as they are discussed for the something like the Hydra tail

Looking into how to interact with / embed the PAB as ChainClient:

- Starting with "using" the PAB the normal way, i.e. launch it + the playground from the plutus repository?

- This PAB Architecture document mentions that "Additional configurations can be created in Haskell using the plutus-pab library."

- The plutus-starter repository seems to be a good starting point to running the PAB

- Using vscode with it's devcontainer support (for now)

-

pab/Main.hsseems to a stand-alone PAB with theGameContract"pre-loaded" - contract instances can be "activated" and endpoints can be invoked using HTTP, while websockets are available for observing state changes

- The stand-alone PAB runs a simulation by

Simulator.runSimulationWithwithmkSimulatorHandlers, which essentially does simulate multiple wallets / contract instances like theEmulatorTrace - Using this we should be able to define a chain client, which talks to such a stand-alone (simulated) PAB instance

- Is there also a way to run

PABActionin a non-simulated way against a mocked wallet / cardano-node?- Besides the Simulator, there is only

PAB.App.runAppwhich callsrunPAB, this time withappEffectHandlers - This seems to be the "proper" handle for interfacing with a wallet and cardano-node, notably:

- Starts

Client.runTxSender,Client.runChainSync'andBlockchainEnv.startNodeClient, seemingly connecting to a cardano-node given aMockServerConfig - Keeps contract state optionally in an

SqliteBackend - Calls a "contract exe" using

handleContractEffectContractExe-> this is not what we want, right? - Interacts with a real wallet using

handleWalletClient

- Starts

- The

App.Cliseems to be used for various things (refer toConfigCommandtype) - This definitely hints towards the possibility of using PAB as-a-library for realizing a chain client interface, but seems to be quite involved now and not ready yet.

- Besides the Simulator, there is only

- Set off to draft a

Hydra.Chain.ExternalPABwhich uses HTTP requests to talk to a PAB running our contract offchain code- The scenario is very similar to lectures 6 and 10 of the plutus pioneer program

- Created a

hydra-pabexecutable inhydra-plutusrepository - Required to change many

source-repository-packageandindex-state... this was a PITA

- Change fanout logic to use the

FanoutTxinstead of theClosedState - Discussion that

FanoutTxshould be always able to be handled - Define a more general property that

OnChainTxcan be handled in all states- Uncovered another short cut we took at the

CloseTx - Note to ourselves: We should revisit the "contestation phase"...

- What would we do when we see a

CommitTxwhen we are not inCollectingState?

- Uncovered another short cut we took at the

-

Fiddling w/ CI: Looking at way to run github actions locally, using https://github.com/nektos/act

Seems like https://github.com/cachix/install-nix-action does not work properly because it is running in a docker image and requires systemd to provide multi-user nix access. Adding

install_options: '--no-daemon'does not help, the option gets superseded by other options from the installer. Following suggestions from the README, trying to use a different ubuntu image but that's a desparate move anyhow, because a docker image will probably never provide systemd -> 💣 -

Fixing cache problem in GitHub actions: https://github.com/input-output-hk/hydra-poc/runs/2795848475?check_suite_focus=true#step:6:1377 shows that we are rebuilding all dependencies, all the time. however, when I run locally a build within

nix-shellthose packages are properly cached in `~/.cabal/store so they should be cached in the CI build tooSeems like the cache is actually not created: https://github.com/input-output-hk/hydra-poc/runs/2795848475?check_suite_focus=true#step:10:2 The cache key is computed thus:

key: ${{ runner.os }}-${{ hashFiles('cabal.project.freeze') }}but the

cabal.project.freezefile was removed in a previous commit. Adding it back should give us back better caching behavior.However: cabal.project.freeze is generated with flags which then conflicts with potential

cabal.project.localflags, and it's not really needed as long as theindex-stateis pinned down -> usecabal.projectas the key to the caching index -

Red Bin:

Setup a Red bin for defects in the software development process: https://miro.com/app/board/o9J_lRyWcSY=/?moveToWidget=3074457360036232067&cot=14, and added it as part of coding Standards

-

Looking at how to improve tests results report in the CI:

- There is a junit-report-action which provides checks fro PR reporting

- another one is test-reporter

- and yet another publish-unit-test-results

This means we need to publish the tests execution result in JUnit XML format:

- hspec-junit-formatter provides a way to do that but it requires some configuration in the Main file which kind of breaks hspec-discover mechanism in a way? I think it's possible to use hspec-discover with a non empty file though...

-

Wrapping up work on publishing Haddock documentation in CI.

Instead of having multiple steps with inline bash stuff in the CI job, I would rather have a

build-ci.shscript that's invoked from the step and contains everythingWe now have a website published at URL https://input-output-hk.github.io/hydra-poc 🍾

-

Some discussions about UTxO vs Ledger state in the context of the Head. The entire paper really only considers UTxO and only makes it possible to snapshot and fanout UTxOs. This means that other typical information in the ledger state (e.g. stake keys, pools, protocol params) are not representable inside the head; Should the head refuse to validate transactions which carry such additional information / certificates?

-

Discussion about closing / fanout and inception problem. Asking ledger team about possibilities of how to validate such transactions without actually providing the transactions. We need some clarity on this and will bring this up in the next Hydra engineering meeting.

Ensembling on snapshotting code:

- We need a snapshot number and can assume strategy is always

SnapshotAfter 1, ie. we don't yet handle the number of txs to snapshot - We can handle leadership by having a single node with a

SnapshotAfterstrategy - Failing test is easy: invert the UTxo set and list of Txs in the Closing message

- We should use tags instead of

Textfor CBOR serialisation

Meta: Can we really TDDrive development of a protocol such as the Hydra Head? Working on the snapshot part makes it clear that there are a lot of details to get right, and a small step inside-out TDD approach might not fit so well: We lose the "big picture" of the protocol

Representation of UTXO in Mock TX is a bit cumbersome because it's the same as a list of TXs

- TODO: Use a

newtypewrapper to disambiguate?

Improving network heartbeat [PR])https://github.com/input-output-hk/hydra-poc/pull/15) following code review.

Reviewing test refactoring PR together

- need to introduce io-sim-classes lifted versions for various hspec combinators

- first step towards a

Test.Prelude - still not strict shutdown, there are dangling threads which are not properlu closed/bracketed

- we could have a more polymorphic describe/it combinators to allow running in somehitng else than IO

- for tracing, we could create a tracer that piggybacks on dynamic tracing in IOSim

Reviewing network heartbeat PR together:

- Current heartbeat is not very useful, a more useful one would be a

heartbeat that only sends a ping if last message was sent more

than

ping_intervalbefore - rename

HydraNetwork->Network

At least 2 shortcomings in current snapshotting:

- Leader never changes and is hardcoded to 1

- Actually tally Acks before confirming the snapshot

- Have a better representation for MockTx

- Replace single tx

applyTransactionwith applying a list of txs

Implementing BroadcastToSelf network transformer that will ensure all sent messages are also sent back to the node

Interestingly, when I wire in withBroadcastToSelf into Main at the outer layer, the test for prometheus events in ETE test fails with one less event: This is so because we now only send back network events that are actually sent by the logic, and so we are missing the Ping 1 which the node sends initially for heartbeat.

Inverting the order of withHeartbeat and withBroadcastToSelf fixes the issue and we get back the same number of events than before

Created PR for BroadcastToSelf

Want to publish haddock to github pages

Generating documentation for all our packages amounts to

$ cabal haddock -fdefer-plugin-errors all

@mpj put together some nix hack to combine the haddocks for a bunch of modules: https://github.com/input-output-hk/plutus/pull/484

The problem is that all docs are generated individually into packages' build directory which makes it somewhat painful to publish. I could hack something with hardcoded links to a root index.html for each project in a single page?

There is an updated nix expression at https://github.com/input-output-hk/plutus/blob/master/nix/lib/haddock-combine.nix

- Review PR #13 and made a lot improvements to wordings. Some

follow-up actions we might want to undertake to better align code

and architecture description:

- Rename

ClientRequestandClientResponsetoInputandOutputrespectively - Add some juicy bits from Nygard's article to ADR 0001

- Rename

OnChain->ChainClientand check consistency for this component - Check links in Github docs/ directory, could be done in an action

- publish haddock as github pages

- publish

docs/content alongside Haddock documentation

- Rename

- Left

ADR0007

as

Proposedpending some concrete example with the network layer

- Kept working and mostly done with working on Network Heartbeat as a kind of show case for ADR0007, PR should follow soon

- In the spirit of Living Documentation I have also started

exploring the using GHC

Annotations

to identify architecturally significant TLDs. Currently only scaffolding things and annotating elements with

Componentto identify the things which are components in our architecture.

I managed to have a working plugin that list all annotated elements along with their annotation into a file. The idea would be to generate a markdown-formatted file containing entries for each annotated element, possibly with comments and linking to the source code. This file would be generated during build and published to the documentation's site thus ensuring up-to-date and accurate information about each architecturally significant element in the source code.

Some more references on this :

- There is even a study on the impact of architectural annotations on software archiecture comprehension and understanding.

- Structurizr leverages annotations for documenting C4 architecture

- Nick Tune discusses the issues with living documentation and self-documenting architecture

When closing a head, a head member must provide:

- A set of transactions not yet in a previous snapshot

- A set of transaction signatures

- A snapshot number

- A snapshot signature

The on-chain code must then check that:

- All the signatures for transactions are valid

- The signature of the snapshot is valid

- All transactions can be applied to the current snapshot's UTxO

The Ledger has a mechanism for verifying additional signatures of the transaction body, but not for verifying signatures of any arbitrary piece of metadata in the transaction. Which pushes the signature verification onto the contract itself which is likely not realistic given (a) the complexity of such primitive and (b) the execution budget necessary to verify not one, but many signatures.

Besides, each transaction must figure in the close transaction, with their signature. Running some rapid calculation and considering a typical 250 bytes transaction on the mainchain, it would mean that around 60 transactions can fit in a close, not even considering the snapshot itself and other constituents such as, the contract.

Likely, transaction inside the head shall be more limited in size than mainnets, transactions, or, we must find a way to produce commits or snapshots which does not involve passing full transactions (ideally, passing only the resulting UTxO and providing a signature for each UTxO consumption could save a lot of space).

We managed to make the BehaviorSpec use io-sim and I continued a bit on refactoring, notably the startHydraNode is now a withHydraNode. https://github.com/input-output-hk/hydra-poc/pull/14

Multiple things though:

- I could not get rid of HydraProcess completely, as the test still needs a way to capture ClientResponse and waitForResponse, so I renamed it to TestHydraNode at least

- The

capturedLogcould be delegated toIOSim/Traceinstead of the custom handle /withHydraNode - This

withHydraNodelooks a hell like the more general with-pattern and we probably should refactor thehydra-node/exe/Main.hsto use such awithHydraNodeas well (with the API server attached), while the test requires the simplesendResponse = atomically . putTMVar responsedecoration

Discussed ADRs and the Architecture PR

- Add a README.md into

docs/adrscould help navigate it and serve as an index of important (non-superseeded) ADRs - Move component description to Haddocks of modules as they are very detailed and source code centric

- The component diagram could serve as an overview and serve as an index of important modules linking to the

.hsfile or ideally the rendered module documentation (later) - This way we have a minimal overhead and as-close-to-code architecture documentation

- We might not keep the Haddocks (when we also throw away code), but the ADRs definitely qualify as a deliverable and will serve us well for developing the "real" product

Paired on making the ETE tests green

- Initialize the

partiesinHeadParametersfromInitTxinstead of theInitclient request - Re-use

Set ParticipationTokenfor this, although we might not be able to do this anymore in a later, more realistic setting

Fixed the NetworkSpec property tests as they were failing with ArithUnderflow, by ensuring we generate Positive Int

Worked on refactoring the UTxO and LedgerState type families into a single class Tx

- This class also collects all

EqandShowconstraints as super classes - Makes the standalone

deriving instancea bit simpler as they only require aTx txconstraint - Possible next step: Get rid of the

txtype variable using existential types, eg.data HydraMessage = forall tx. Tx tx => ReqTx tx

Started work on driving protocol logic to snapshotting

- We changed

HeadIsClosedto report on snapshottedUTxO, aSnapshotNumberand a list of confirmed (hanging) transactions - We introduced

data SnapshotStrategy = NoSnapshots | SnapshotAfter Natural - Updated

BehaviorSpecto have this new format forHeadIsClosedand aSnapshotStrategy, but no actual assertion of the txs being in the snapshottedUTxOset or a non-zero snapshot number - Interesting question: What happens if nodes have different snapshot strategies?

- Continued in fleshing out the actual protocol by extending the

EndToEndSpec, following the user journey - Added a

NewTxclient request and assert that the submittedtx(only an integer inMockTx) is in the closed and finalizedUTxOset (only a list of txs forMockTx) - We could make that test green by simply taking the first

AckTxand treat it as enough to update theconfirmedLedger+nubwhen applying transactions to the mock ledger - This is obviously faking the real logic, so we wrote a

HeadLogicSpecunit test, which asserts that only after seeign anAckTxfrom eachparty, the tx is added to theHeadStateas confirmed transaction - We keep a

Map tx (Set Party)as a data structure for all seensignaturesand made this test pass- This hinted that we likely want to have a

TxId txtype family and forced us to add some moreEq txandShow txconstraints - We all think that refactoring all the type families into a type class is long overdue!

- This hinted that we likely want to have a

- When trying the full end-to-end test again, we realize that our

Hydra.Network.Ouroborosis not broadcasting messages to itself, but the logic relies on this fact- We add this semantics and a

pendingtest, which should assert this properly

- We add this semantics and a

Today's TDD pattern was interesting and we should reflect on how to improve it:

- We started with a (failing) ETE test that injects a new transaction and expect it to be seen confirmed eventually at all nodes

- We made the test pass naively by confirming it on the first

AckTxmessage received - We then wrote a unit test at the level of the

HeadLogic'supdatefunction to assert a more stringent condition, namely that a node confirms a transaction iff. it has receivedAckTxfrom all the parties in the Head, including itself - To make this unit test pass we had to modify the state of the Head by:

- adding the list of parties in the

HeadParameters, which lead to a discussion on whether or not this was the right thing to do as theHeadParametersbeing part ofHeadStateimplies the head's logic can change them which does not make sense for the list of parties - adding a map from transactions (ideally TxIDs) to a Set of signatures in the

SimpleHeadState, which is thetransactionObjectspart of the state in the paper - Then we tally the "signatures" from the

AckTxwe receive, until we get all of them and then we confirm the tx

- adding the list of parties in the

- The EndToEnd test was still failing though. Analysing the trace we noticed that nodes were not receiving their own

ReqTxandAckTxmessages which means the tx could never be confirmed- => This is an assumption we made but never concretised through a test

- This should be a property of the network layer and tested there

- Fixing that (without a test) was easy enough but the EndToEnd test still fails

- Going through the logs again, everything seemed fine, all messages were there but we simply did not see the expected

TxConfirmedmessage- Increasing timeouts did not help

- Only node 1 ever confirmed a transaction

- the issue was that the list of known parties is only initialised as a consequence of the

Initcommand, which contains this list, so the other nodes never receive it

- On the plus side:

- Our high-level EndToEnd test caught two errors

- The traces are relatively straighforward to analyse and provide accurate information about a node's behaviour (not so much for the network traces which are somewhat too noisy)

- On the minus side:

- Troubleshooting protocol errors from the traces is hard

- Our HeadLogic unit test somehow relied on the assumption the network would relay the node's own messages, an assumption which is true in the mock network in

BehaviorSpecbut not tested and hence false in concreteOuroborosandZeroMQimplementations.

- More work on Technical Architecture document, adding sections on networking and on-chain messaging

- Started extraction of principles section into Architecture Decision Records, which are currently available in a branch

- Also started work on generifying the

HydraNetworklayer in order to be able to implement network related features without relying on specific technological implementation. This is also in a branch

We discussed again what we learned from the engineering meeting:

- We don't need the buffer, a simple outbound queue suffices

- Mixing of business logic and network part we feel uneasy, although we get the point of the benefits

- The tx submission protocol is still different

- The 2-state part of it at least

- The size of our messages are not always big

- Separation of "events to handle" and "buffer"

- We got the point of robustness and are sold to it

- Resilience in presence of (network) crashes and less often need to close the head

- Main difference to tx submission:

- We maybe not have a problem with ordering

- As long as we relay all messages in order

- And do not drop some messages in the application

- Snapshots provide the same natural pruning

- Do we need Relaying?

- It complicates things, but eventually is required

- So we leave it out for now and refined the drawing for a fully connected network without relaying

What did the team achieve this week?

- Discussed the pull-based approach to networking using ouroboros-network, internally and in the Hydra engineering meeting

- Took our preliminary user journey, extended our end-to-end tests based on this and drove implementation of the "closing" of a Head using that

- Build Hydra-the-project using Hydra-the-CI, primarily to cache derivations used for our nix-shell

- Provide first metrics from the hydra-node using prometheus

- Provided feedback about data and tail simulation results to researchers, adapted the simulations with updates to the protocol and ran more simulations

What are the main goals to achieve next week?

- Welcome Ahmad as Product manager to the team and onboard him

- Fill in the gaps in our Head logic and implement the coordinated Head protocol in a test-driven way

- Finalize tail simulations for the paper

Worked on completing the user journey as an End-to-End test:

- Add closing logic of the head

- Add a

UTxOtype family in Ledge. This propagates constraints everywhere, we really need a proper typeclass as an interface to ledger and transaction related types and functions, while keeping a handle for validation and confirmation of transactions - We need to post fanout transaction after some contestation period, which requires a way to

Delaythis effect- Trying to model this as a special case of

postTxhighlights the fact this is putting too much responsibility on theOnChainClientinterface - Option 1 would be to handle the delay in the event loop: provide a

Delayeffect which enqueues an event, and do not process the event before it times out - Option 2 is to

Delayan effect and spawn anasyncto do the effect later - In the particular case at hand, we just handle a single effect which is posting a FanoutTx

- Trying to model this as a special case of

- Got a passing test with hardcoded contestation period and delay effect (option 2)

- Trying the other option (delay event) and keeping it as it seems "better":

- applying an effect unconditionally after some time is probably wrong in general

- when delaying an event, we make this delayed action dependent on the state at the time of its handling and not at the time of its initiation which makes more sense: Some planned for effects might have become irrelevant because of concurrent changes

- We also move

Waitout ofEffectand intoOutcomealthough it's not used yet

-

Implement

--versionflag forhydra-node, see PR- this reuses what is done in the cardano-wallet with a minor tweak to use the SHA1 as a label in the sense of semver. The version number is extracted from

hydra-node.cabalfile viaPaths_hydra_nodemodule and the git SHA1 is injected using TH from commandgit rev-parse HEAD - We could also use

git describe HEADas a way to get both tag and commit info from git but then this information would be redundant with what cabal provides - When running this command in

ouroboros-networkfor example, I get the following result:This is so because a git repo can contain several packages, each with an executable or library at a different version so version tags alone might not give enough information and one needs to namespace tags.$ git describe --tags HEAD node/1.18.0-1169-gba062897f

- this reuses what is done in the cardano-wallet with a minor tweak to use the SHA1 as a label in the sense of semver. The version number is extracted from

-

Working on Technical Architecture Document

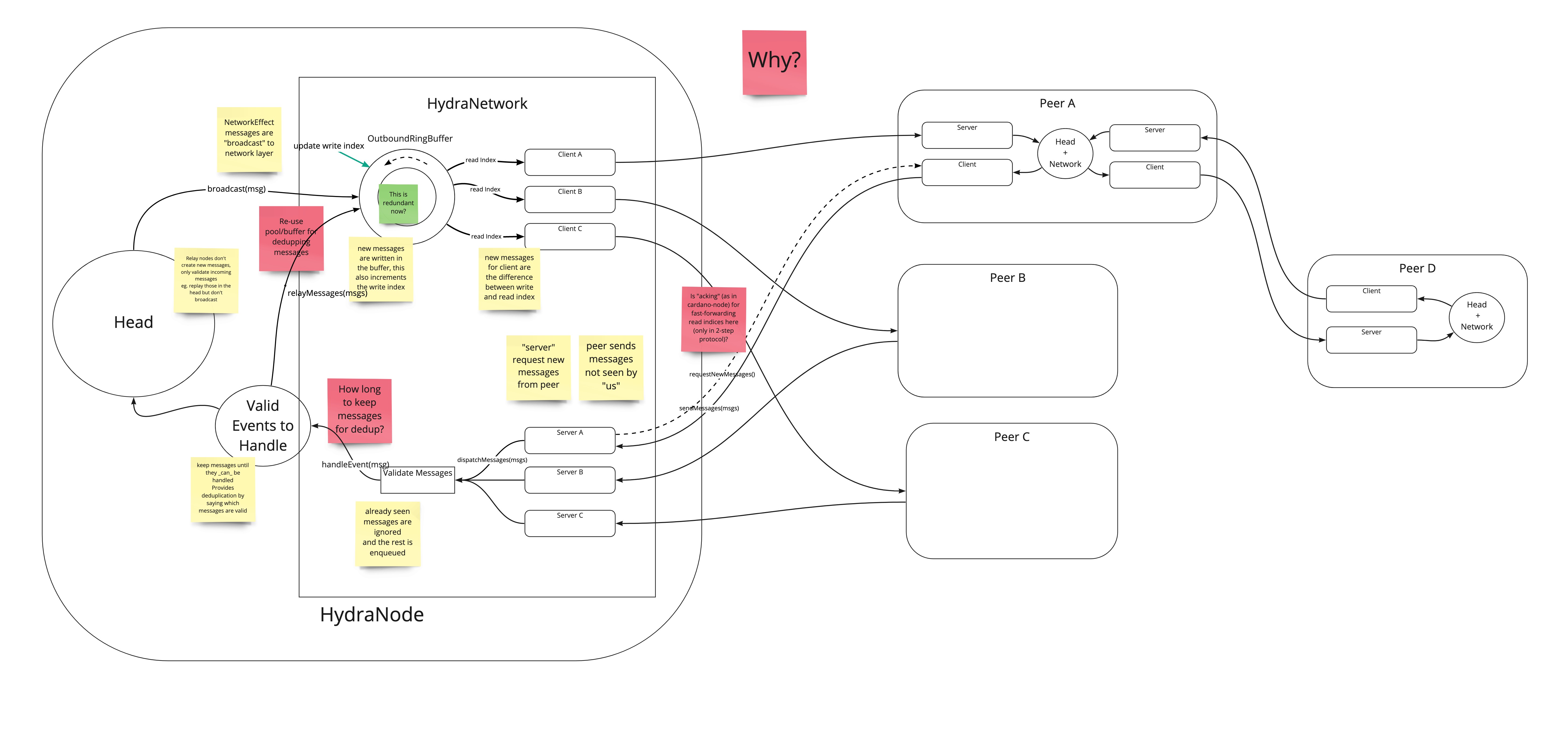

The following diagram was drawn to provide some more concrete grounds for the Engineering meeting (see below for minutes).

Agenda

- We showcase our current understanding of how Hydra head messaging could be done using a pull-based ouroboros-network layer (after we had a quick look on the cardano-node tx submission in-/outbound parts)

- Discussion on things we misunderstood or have not considered, as well as where to look in the cardano codebase for stealing code

Minutes

- Intro & Arnaud walks through the pull-based message flow image

- Did we understand it properly? Duncan starts to highlight differences to the cardano-node

- Why a ring-buffer?

- Tx validation does side-step deduplication, invalid txs can just be dropped

- Keep positive information instead of negative, i.e. keep track of expectations / whitelist instead of a blacklist9

- Use application knowledge to now what to keep -> is this possible?

- Maybe we can decide in the "application" level better "how long to keep" some messages

- 2-step protocol is to flood the network only with ids, not the actual transactions

- We might have big messages as well

- Adds latency, but not too much (because of pipelining)

- Mempool holds transactions and is flushed when there is a new block

- Implicitly because txs become invalid when they are incorporated in the ledger state

- Provides a natural point when to collect garbage by clearing the mempool

- Mempool code is using finger trees

- Mempool provides back-pressure because it has a bounded size

- similar idea as depicted ring buffer

- What is our positive tracking state? It is expecting AckTx upon seeing a ReqTx?

- Discuss "Events to handle"

- How long would the protocol wait for a ReqTx after seeing an AckTx -> application level decision

- Can we get stuck to this?

- How would relaying work? A relay node would not pick the ReqTx and keep it in a buffer, but still relay it

- Seeing a snapshot confirmed would flush the buffer in a relay as well

- Caveat in ouroboros-network right now:

- Possibility of multiple equal messages received at the same time

- Sharing some state between servers (inbound) could work around that

- We could use simulations to also simulate multiple hop broadcast

- What is bandwidth saturation property?

- Aligning the window of in-flight transaction + pipelining to saturate

- Why? What is the benefit of this kind of network architecture?

- very robust and very flexible

- everything is bounded

- deals with back-pressure cleanly

- deals with coming and going of (relaying) nodes

- it's efficient (maybe only using 2-step broadcast)

Our connection check for ZMQ-based networking was naive and the test is failing in CI. We need a proper protocol-level test that's based on actual messages sent and received between the nodes, but this is more involved as it requires some modifications to the logic itself: NetworkEffect should not be handled until the network is actually connected so there is a handshake phase before that.

Goal for pair session:

- move network check from HydraNode to the Network layer

- note that the

NetworkMutableStatelayer in Ouroboros network contains already information about the peers so we could use that, but this mean we need some monitoring thread to check changes in this state and send some notification

Discussing pull-based protocol:

- ask each peer what is "new"? => we need a global counter on messages, but how do you get one?

- Request for new messages

- -> How to provide new messages -> snapshot based synchronization point? => there is an intertwining of network and application layer

- locally incrementing counter -> vector of numbers?

- we would like to avoid having having a link between network layer which exchange messages and application layer which takes care of confirmation/snapshotting

- msgids are unique => "give me all msgids you have" then merge with what you have

- having multiple heads over a single network? -> we need prefix-based namespacing

Potential solution inspired by Ouroboros.Network.TxSubmission.Outbound/Inbound protocols:

- each message has a unique id (hash of message?)

- node maintains one outbound messages buffer (ring buffer?) which is fed by

NetworkEffect- => guarantees proper ordering of messages from the Head's logic

- server maintains an index for each peer about messages the peer has requested (similar to acknowledged txs)

- this index is advanced when peer requests "new" messages => we just send X messages from its index and advance it

- buffer can be flushed (write index advanced) to index Y when all peers are passed Y

- node has one inbound messages buffer

- it maintains an index of seen messages for each peer it knows

- it periodically polls the peers for new messages and retrieves those not seen, advancing its index

- messages received are sent to an inbound buffer

- head pull from inbound buffer and tries to apply message to its state

- if OK => message is put in the outbound queue, eg. it's a

NetworkEffect - if

Wait=> message is put back in inbound buffer to be reprocessed later

- if OK => message is put in the outbound queue, eg. it's a

- Problem: how do we prevent messages from looping?

- with unique message ids => only put in inbound buffer message with unknown ids => we need to keep an index of all seen message ids so far

- we could also link messages from a single peer in the same way a git commit is linked to its predecessor => we only have to keep "branches" per peer and prune branches as soon as we have received messages with IDs => instead of an index of all messages, we have an ordered index by peer

See also Miro board

- We got our

hydra-pocrepository enabled as a jobset on theCardanoproject: https://hydra.iohk.io/jobset/Cardano/hydra-poc#tabs-configuration - Goal: Have our shell derivation be built by Hydra (CI) so we can use the cached outputs from

hyrda.iohk.io - Try to get the canonical simple

pkgs.hellobuilt using<nixpkgs>fails, also pinning the nixpkgs usingbuiltins.fetchTarballseems not to work - Seems like only

masteris built (although there is some mention of "githubpulls" here) - Pinning using

bultings.fetchTarballresulted in

"access to URI 'https://github.com/nixos/nixpkgs/archive/1232484e2bbd1c84f968010ea5043a73111f7549.tar.gz' is forbidden in restricted mode"

- Adding a

sha256to thefetchTarballdoes not seem to help either - Using

nix-build -I release.nix ./release.nix -A hello --restrict-evalthe same error can be reproduced locally - This begs the question now, how upstream sources are fetched? niv?

- Setting off to re-creating the sources.nix/.json structure of similar projects using

niv - Seems like fetching

iohk-nixand using thatnixpkgsdid the trick andpkgs.hellocould be built now! - Verifying by changing the

hellojob (no rebuild if evaluation resulted in same derivation) - Adding a job to build the shell derivation of

shell.nix; this will definitely fail becausemkShellprevents build, but doing it to check whether fetching dependencies is allowed this time within restricted eval -> nice, got the expected error

nobuildPhase