List of Project Ideas for GSoC 2020

If you haven't read it already, please start with our How to Apply guide.

Below is a list of high-impact projects that we think are of appropriate scope and complexity for the program.

There should be something for anyone fluent in C++ and interested in writing clean, performant code in a modern and well-maintained codebase.

Projects are first sorted by application:

- appleseed: the core rendering library

- appleseed.studio: a graphical application to build, edit, render and debug scenes

- appleseed-max: a native plugin for Autodesk 3ds Max [1]

- appleseed-maya: a native plugin for Autodesk Maya [2]

[1] Student versions of Autodesk 3ds Max can be downloaded freely from the Autodesk website.

[2] Student versions of Autodesk Maya can be downloaded freely from the Autodesk website.

Then, for each application, projects are sorted by ascending difficulty. Medium/hard difficulties are not necessarily harder in a scientific sense (though they may be), but may simply require more refactoring or effort to integrate the feature into the software.

Similarly, easy difficulty doesn't necessarily mean the project will be a walk in the park. As in all software projects there may be unexpected difficulties that you will need to identify and overcome with your mentor.

Several projects are only starting points for bigger adventures. For those, we give an overview of possible avenues to expand on them after the summer.

appleseed projects:

- Easy difficulty:

- Medium difficulty:

- Hard difficulty:

appleseed.studio projects:

- Easy difficulty:

- Medium difficulty:

appleseed-max projects:

- Easy difficulty:

- Medium difficulty:

- Hard difficulty:

appleseed-maya projects:

- Hard difficulty:

(Renders and photographs below used with permission of the authors.)

- Required skills: C++, Reading and understanding scientific papers

- Challenges: None in particular

- Primary mentor: Franz

- Secondary mentor: Esteban

(Cornell Box rendered with false colors and relative luminance isolines.)

appleseed implements a stack of post-processing stages which are applied to the rendered image once rendering is complete. Two post-processing stages are currently available: a false color visualization stage, and a render stamp stage that adds an appleseed logo and various text information to a render.

The goal of this project is to add post-processing stages often requested by artists. In particular, two come to mind: tonemapping and bloom.

Tasks:

- Figure out by discussing with artists and users which post-processing stages are most often requested.

- Explore what post-processing features other renderers are offering.

- Find out which state-of-the-art algorithms and techniques are available to implement these stages.

- Implement the stages.

- Create test scenes for the automatic test suite.

- Create one or more eye candy renders to showcase the results and to illustrate future release notes.
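As an illustration of what a tonemapping stage computes, here is a minimal sketch of the global Reinhard operator applied to a single linear RGB pixel. The `Color3` type and the function names are hypothetical and are not part of appleseed's post-processing API; a real stage would work on appleseed's own image types and would likely expose user-tunable parameters.

```cpp
#include <cassert>

// Hypothetical pixel type used for illustration; not an appleseed type.
struct Color3
{
    float r, g, b;
};

// Relative luminance of a linear RGB color (Rec. 709 weights).
inline float luminance(const Color3& c)
{
    return 0.2126f * c.r + 0.7152f * c.g + 0.0722f * c.b;
}

// Global Reinhard operator: compress luminance L to L / (1 + L)
// and rescale the color uniformly so that its hue is preserved.
inline Color3 tonemap_reinhard(const Color3& c)
{
    assert(c.r >= 0.0f && c.g >= 0.0f && c.b >= 0.0f);

    const float l = luminance(c);
    if (l <= 0.0f)
        return Color3{0.0f, 0.0f, 0.0f};

    // (l / (1 + l)) / l simplifies to 1 / (1 + l).
    const float scale = 1.0f / (1.0f + l);
    return Color3{c.r * scale, c.g * scale, c.b * scale};
}
```

With this operator a pixel of luminance 1 is mapped to luminance 0.5, and arbitrarily bright pixels approach, but never reach, 1.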

- Required skills: C++

- Challenges: None in particular

- Primary mentor: Franz

- Secondary mentor: Esteban

IES light profiles describe light distribution in luminaires. This page has more information on the topic. IES light profile specifications can be found on this page. Many IES profiles can be downloaded from this page.

We already have the parsing code (iesparser.h, iesparser.cpp).

Tasks:

- Learn about IES profiles and understand the concepts involved.

- Review the parsing code: Does it work? Is it complete? Is it accurate?

- Finish/fix/refactor the parsing code, as necessary.

- Add a new light type that implements sampling of IES profiles.

- Create a few test scenes demonstrating the results.

- Create an eye candy scene to illustrate the feature in future release notes.
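An IES profile tabulates luminous intensity (in candela) over a grid of angles, so a light that uses one has to look up intensities between tabulated angles. The sketch below shows linear interpolation over vertical angles only, which assumes an axially symmetric profile. The function name and the clamping behavior outside the tabulated range are assumptions made for illustration; this is not the interface of the existing parsing code.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Linearly interpolate a tabulated intensity (in candela) at a vertical angle (in degrees).
// `angles` must be sorted in strictly increasing order and have the same size as `candelas`.
// Queries outside the tabulated range are clamped to the nearest entry (an assumption).
inline double interpolate_candela(
    const std::vector<double>&  angles,
    const std::vector<double>&  candelas,
    const double                angle)
{
    assert(!angles.empty());
    assert(angles.size() == candelas.size());

    if (angle <= angles.front()) return candelas.front();
    if (angle >= angles.back()) return candelas.back();

    // First tabulated angle strictly greater than the query.
    const auto it = std::upper_bound(angles.begin(), angles.end(), angle);
    const std::size_t hi = static_cast<std::size_t>(it - angles.begin());
    const std::size_t lo = hi - 1;

    const double t = (angle - angles[lo]) / (angles[hi] - angles[lo]);
    return (1.0 - t) * candelas[lo] + t * candelas[hi];
}
```

A full implementation would interpolate over horizontal angles as well and would build a sampling distribution from the table.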

- Required skills: C++, Reading and understanding scientific papers

- Challenges: The science of colors and color spaces

- Primary mentor: Franz

- Secondary mentor: Esteban

(Solar Disk Renders from The Sky Dome Appearance Project.)

appleseed implements two physically-based models for daylight (the classic but fairly outdated Preetham model, and the more recent Hosek & Wilkie model) and one for the Sun (also from the Preetham paper).

Unfortunately, results from these models aren't entirely satisfactory (results tend to have strong blue/green tints), at least the way they are implemented in appleseed. Either we have some bugs, or we're missing some important tweaks, or there are issues with the way we convert spectral values to RGB (or any combination of the three). We are also missing some features such as a visible solar disk, and the ability to configure the Sun and the sky model with a geographic location, a date and a time.

Tasks:

- Get familiar with the topic and survey the state-of-the-art in physically-based Sun and sky rendering.

- Read the Preetham and Hosek & Wilkie papers.

- Check our implementations of these papers, compare our results with those from literature and from other renderers.

- Fix and improve the existing implementations.

- Implement visible solar disk.

- Implement the ability to configure the Sun and the sky with a geographic location, a date and a time.

- Create a couple of eye candy renders to show the improvements and to illustrate future release notes.
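For the visible solar disk, the basic geometric test is whether a viewing direction falls within the Sun's angular radius around the Sun direction. The following is a minimal sketch under stated assumptions: the `Dir3` type and function names are hypothetical, both directions are assumed to be unit length, and the default angular radius of 0.2665 degrees is an approximate mean value, not a value taken from appleseed.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical direction type; vectors are assumed to be unit length.
struct Dir3
{
    double x, y, z;
};

inline double dot(const Dir3& a, const Dir3& b)
{
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

// Return true if view_dir points inside the solar disk centered on sun_dir.
// The default angular radius is an approximate mean value for the Sun seen from Earth.
inline bool hits_solar_disk(
    const Dir3&     view_dir,
    const Dir3&     sun_dir,
    const double    angular_radius_deg = 0.2665)
{
    assert(angular_radius_deg > 0.0 && angular_radius_deg < 90.0);

    const double pi = 3.14159265358979323846;
    const double cos_radius = std::cos(angular_radius_deg * pi / 180.0);

    // The angle between the two directions is below the radius
    // exactly when its cosine is above the cosine of the radius.
    return dot(view_dir, sun_dir) >= cos_radius;
}
```

A renderer would return the Sun's radiance for directions that pass this test and fall back to the sky model otherwise.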

- Required skills: C++, Reading and understanding scientific papers

- Challenges: Rendering efficiency

- Primary mentor: Kevin

- Secondary mentor: Esteban

appleseed allows you to use physical objects as light sources to render a scene. Currently, these can be either triangles or analytical shapes (rectangles, disks and spheres).

One of the steps that most affects quality when rendering a scene is light sampling: the process of choosing points on a light that illuminate a given surface. Improving this process has a direct impact on image quality.

As of today, appleseed uses uniform sampling to sample physical lights. This technique is easy to implement and to understand, but it doesn't take advantage of the shape's properties. By knowing what kind of light we have in the scene, whether it's a triangle or an analytical shape, we can generate better samples using its geometric properties.
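For a spherical light, one standard form of solid angle sampling is to sample uniformly inside the cone of directions that the sphere subtends as seen from the shading point. With uniform area sampling, roughly half of the generated points lie on the side of the sphere facing away from the shading point; with cone sampling, every direction hits the visible part of the light. The sketch below is illustrative only: the `ConeSample` type and function name are hypothetical and do not reflect appleseed's light sampling interfaces.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical result type: a direction expressed relative to the cone axis.
struct ConeSample
{
    float cos_theta;    // cosine of the angle to the cone axis
    float phi;          // azimuth around the cone axis, in radians
    float pdf;          // probability density with respect to solid angle
};

// Sample a direction uniformly inside the cone subtended by a sphere of the given
// radius whose center lies at the given distance from the shading point.
// u1 and u2 are uniform random numbers in [0, 1).
inline ConeSample sample_sphere_solid_angle(
    const float radius,
    const float distance,
    const float u1,
    const float u2)
{
    assert(radius > 0.0f);
    assert(distance > radius);  // the shading point must be outside the sphere
    assert(u1 >= 0.0f && u1 < 1.0f);
    assert(u2 >= 0.0f && u2 < 1.0f);

    const float pi = 3.14159265358979f;
    const float sin_theta_max = radius / distance;
    const float cos_theta_max = std::sqrt(1.0f - sin_theta_max * sin_theta_max);

    ConeSample s;
    s.cos_theta = 1.0f - u1 * (1.0f - cos_theta_max);
    s.phi = 2.0f * pi * u2;
    s.pdf = 1.0f / (2.0f * pi * (1.0f - cos_theta_max));    // 1 / (solid angle of the cone)
    return s;
}
```

The density is constant over the cone, so it does not depend on the sampled direction. Triangles, rectangles and disks need their own, more involved, solid angle parameterizations.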

Tasks:

- Get familiar with the topic of sampling lights and the solid angle sampling technique.

- Implement solid angle sampling for triangles.

- Analyse the technique and choose when solid angle sampling should be chosen over uniform sampling.

- Provide comparison renders and convergence graphs.

- Implement solid angle sampling for analytic shapes (rectangle, sphere and disk).

- Analyse the technique and choose when solid angle sampling should be chosen over uniform sampling.

- Provide renders and test scenes for the new features.

Additional tasks:

- Get familiar with more advanced sampling techniques such as Stratified sampling of projected spherical caps.

- Read the Carlos Ureña & Iliyan Georgiev paper.

- Implement Stratified sampling of projected spherical caps in appleseed.

- Study the technique and choose carefully when to use it or not.

- Do some renders and convergence comparisons to illustrate future release notes.

- Required skills: C++, solid understanding of light transport, reading and understanding scientific papers

- Challenges: Getting it right and fast

- Primary mentor: Franz

- Secondary mentor: Esteban

(Illustration from Practical Path Guiding for Efficient Light-Transport Simulation.)

One way to improve the efficiency of path tracing is to perform better importance sampling. This in turn requires building (and potentially iteratively improving) a data structure that helps figure out which paths are the most relevant and promising (i.e. carry the most energy). This class of techniques is called Path Guiding.

The goal of this project is to introduce path guiding to appleseed to improve its ability to render scenes with complicated light paths which are fairly typical in architecture and product visualization.
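To make the idea of a guiding data structure concrete, here is a deliberately simplified sketch: a histogram over a fixed set of direction bins that accumulates the energy carried by previous paths and is then sampled proportionally to that energy. The class and its methods are hypothetical. Published path guiding methods use much richer spatial and directional structures, and they combine guided sampling with BSDF sampling; none of that is shown here.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical, simplified guiding structure: one weight per direction bin.
class GuidingHistogram
{
  public:
    // Start with uniform weights so that every bin can be sampled.
    explicit GuidingHistogram(const std::size_t bin_count)
      : m_weights(bin_count, 1.0f)
    {
        assert(bin_count > 0);
    }

    // Accumulate the energy carried by a path that left through the given bin.
    void record(const std::size_t bin, const float energy)
    {
        assert(bin < m_weights.size());
        assert(energy >= 0.0f);
        m_weights[bin] += energy;
    }

    // Probability of selecting the given bin.
    float pdf(const std::size_t bin) const
    {
        assert(bin < m_weights.size());
        return m_weights[bin] / total();
    }

    // Pick a bin with probability proportional to its weight; u is uniform in [0, 1).
    std::size_t sample(const float u) const
    {
        assert(u >= 0.0f && u < 1.0f);

        const float target = u * total();
        float cumulated = 0.0f;

        for (std::size_t i = 0; i < m_weights.size(); ++i)
        {
            cumulated += m_weights[i];
            if (target < cumulated)
                return i;
        }

        return m_weights.size() - 1;    // guard against rounding errors
    }

  private:
    std::vector<float> m_weights;

    float total() const
    {
        float sum = 0.0f;
        for (const float w : m_weights)
            sum += w;
        return sum;
    }
};
```

After recording a few high-energy paths in one bin, that bin is selected more often, and dividing each contribution by `pdf()` keeps the estimator unbiased as long as every bin keeps a nonzero weight.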

Tasks:

- Get familiar with the topic and survey the state-of-the-art in path guiding. Two good starting points are Adjoint-Driven Russian Roulette and Splitting in Light Transport Simulation and Practical Path Guiding for Efficient Light-Transport Simulation.

- Choose the most relevant algorithm for our needs and implement it in appleseed.

- Carefully compare convergence rates with and without the help of the algorithm.

- Create test scenes to ensure that the algorithm performs as expected, now and in the future.

- Create one or more eye candy renders to showcase the improvement and to illustrate future release notes.

- Required skills: C++, reading and understanding scientific papers

- Challenges: Getting it right

- Primary mentor: Franz

- Secondary mentor: Esteban

Light transport is the central problem solved by a physically-based global illumination renderer. It implies finding ways to connect, with straight lines (in the absence of scattering media such as smoke or fog), light sources with the camera, taking into account how light is reflected by objects. Efficient light transport is one of the most difficult problems in physically-based rendering.

The paper Unbiased Photon Gathering for Light Transport Simulation proposes to trace photons from light sources (like it is done in the photon mapping algorithm) and use these photons to establish paths from lights to the camera in an unbiased manner. This is unlike traditional photon mapping which performs photon density estimation.

Since appleseed already features an advanced photon tracer it should be possible to implement this algorithm, and play with it, with reasonable effort.

Tasks:

- Figure out what we need to store in photons in order to allow reconstruction of a light path.

- Add a new lighting engine, drawing inspiration from the SPPM lighting engine which also needs to trace both photons from lights, and paths from the camera.

- Trace camera paths. At the extreme end of these paths, look up the nearest photon and establish a connection.

- Render simple scenes, such as the built-in Cornell Box, for which we know exactly what to expect, and carefully compare results of the new algorithm with ground truth images.

- Required skills: C++, reading and understanding scientific papers

- Challenges: Refactoring

- Primary mentor: Esteban

- Secondary mentor: Franz

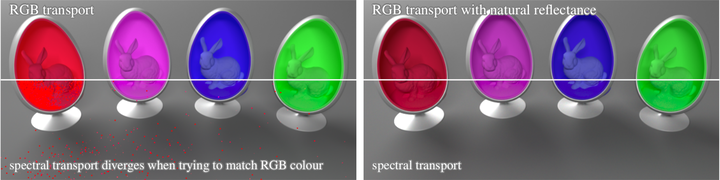

One of the most unique features of appleseed is the ability to render in both RGB (3 bands) and spectral (31 bands) color spaces and to mix both color representations in the same scene.

This feature requires the ability to convert colors from RGB to a spectral representation. The conversion is not well defined in a mathematical sense, since many different spectra map to the same RGB color, but there are algorithms that approximate it.

The goal of this project would be to implement an improved RGB to spectral color conversion method based on this paper: Physically Meaningful Rendering using Tristimulus Colours.

This would improve the correctness of our renders for scenes containing both RGB and spectral colors.

Tasks:

- Implement the improved RGB to spectral color conversion method.

- Add unit tests and test scenes.

- Render the whole test suite, identify differences due to the new color conversion code and update reference images as appropriate.

- Required skills: C++, Qt

- Challenges: None in particular

- Primary mentor: Franz

- Secondary mentor: Esteban

We need to allow appleseed.studio users to choose pre-made, high quality materials from a material library, as well as to save their own materials into a material library, instead of forcing them to recreate all materials in every scene. Moreover, even tiny scenes can have many materials. Artists can't rely on names to know which material is applied to an object. A material library with material previews would be a tremendous help to appleseed.studio users.

A prerequisite to this project (which may be taken care of by the mentor) is to enable import/export of all material types from/to files. This is already implemented for Disney materials (which is one particular type of materials in appleseed); this support should be extended to all types of materials.

Ideally, the material library/browser would have at least the following features:

- Show a visual collection of materials, with a preview of each material and some metadata (name, author, date and time of creation)

- Allow filtering materials based on metadata

- Allow dragging and dropping a material onto an object instance

- Highlight the material under the mouse cursor when clicking in the scene (material picking)

- Allow replacing an existing material from the project with a material from the library

Possible follow-ups:

- Allow for adding "material library sources" (e.g., GitHub repositories) to a material library. This would allow people to publish and share their own collections of materials, in a decentralized manner.

- Integrate the material library from appleseed.studio into appleseed plugins for 3ds Max and Maya.

- Required skills: C++, Qt

- Challenges: None in particular

- Primary mentor: Franz

- Secondary mentor: Esteban

Finding the right trade-off between render time and quality, lighting setup, or material parameters can involve a large number of "proof" renders. A tool that saves renders to some kind of render history and compares renders from the history would be very helpful to both users and developers.

When saved to the history, renders should be tagged with the date and time of the render, total render time, render settings, and possibly even the rendering log. In addition, the user should be able to attach comments to renders.
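The metadata listed above maps naturally onto a small record type. The following is one possible shape, given only as a sketch: `RenderHistoryEntry` and `RenderHistory` are hypothetical names, the render settings are assumed to be serialized to a string, and the rendered image itself is left out. None of this exists in appleseed.studio today.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical record describing one saved render; not an existing appleseed.studio type.
struct RenderHistoryEntry
{
    std::chrono::system_clock::time_point   timestamp;          // date and time of the render
    std::chrono::seconds                    render_time;        // total render time
    std::string                             render_settings;    // serialized render settings (assumed format)
    std::string                             log;                // rendering log, possibly empty
    std::vector<std::string>                comments;           // comments attached by the user
};

// Hypothetical in-memory history of saved renders.
class RenderHistory
{
  public:
    // Append an entry and return its index in the history.
    std::size_t save(const RenderHistoryEntry& entry)
    {
        m_entries.push_back(entry);
        return m_entries.size() - 1;
    }

    // Attach a comment to a previously saved render.
    void add_comment(const std::size_t index, const std::string& comment)
    {
        assert(index < m_entries.size());
        m_entries[index].comments.push_back(comment);
    }

    const RenderHistoryEntry& get(const std::size_t index) const
    {
        assert(index < m_entries.size());
        return m_entries[index];
    }

    std::size_t size() const
    {
        return m_entries.size();
    }

  private:
    std::vector<RenderHistoryEntry> m_entries;
};
```

Keeping the entries in a plain value type like this would also make it straightforward to serialize the history along with the project.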

Tasks:

- Build the user interface for the render history.

- Allow for saving a render to the history.

- Allow simple comparisons between two renders from the history.

- Allow comparing a render from the history with the current render (even during rendering).

- Allow for saving the render history along with the project.

Possible follow-ups:

- Add more comparison modes (side-by-side, toggle, overlay, slider).

- Add a way to attach arbitrary comments to renders.

- Required skills: C++, Qt, OpenGL

- Challenges: Interacting with the Project Explorer

- Primary mentor: Franz

- Secondary mentor: Esteban

The goal of this project is to allow users to translate, rotate and scale assembly instances, object instances and lights using visual widgets rendered with OpenGL on top of the raytraced output.

For example, here are the manipulator widgets of Autodesk 3ds Max 2019:

Tasks:

- Add a way to layer a framebuffer rendered with OpenGL on top of the raytraced output with as little impact on performance as possible.

- Draw translation/rotation/scaling widgets when a transformable entity (assembly instance, object instance, light) is selected.

- Handle user interaction with these widgets.

- Update the entity's transformation during interaction.

- Required skills: C++

- Challenges: Using the 3ds Max API

- Primary mentor: Franz

- Secondary mentor: Esteban

We almost have transformation (rigid) motion blur support in the 3ds Max plugin; the pull request is currently in review: https://github.com/appleseedhq/appleseed-max/pull/260.

The goal of this project is to add deformation motion blur support to the plugin. Deformation motion blur happens when an object changes its shape (but never its topology), i.e. when its vertices move from one frame to the other. A typical example of deformation motion blur is a walking human character.
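Because the topology never changes, deformation motion blur can be thought of as having one set of vertex positions per time sample and blending between them. The sketch below assumes two poses and linear interpolation; the `Vec3` type and function name are hypothetical, and this shows neither how appleseed specifies motion keys nor how the 3ds Max SDK exposes geometry over time.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical vertex position type.
struct Vec3
{
    float x, y, z;
};

// Linearly interpolate every vertex between two poses of the same mesh.
// t = 0 returns pose0 and t = 1 returns pose1.
inline std::vector<Vec3> interpolate_pose(
    const std::vector<Vec3>&    pose0,
    const std::vector<Vec3>&    pose1,
    const float                 t)
{
    assert(pose0.size() == pose1.size());   // the topology must not change
    assert(t >= 0.0f && t <= 1.0f);

    std::vector<Vec3> result(pose0.size());

    for (std::size_t i = 0; i < pose0.size(); ++i)
    {
        result[i].x = (1.0f - t) * pose0[i].x + t * pose1[i].x;
        result[i].y = (1.0f - t) * pose0[i].y + t * pose1[i].y;
        result[i].z = (1.0f - t) * pose0[i].z + t * pose1[i].z;
    }

    return result;
}
```

On the plugin side, the main work is evaluating the mesh at the required times and making sure each evaluation yields the same vertex count and ordering.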

Tasks:

- Understand how deformation motion blur is specified in appleseed.

- Understand how to access geometry at various points in time using the 3ds Max SDK.

- Implement support for deformation motion blur.

- Create a few test scenes demonstrating the results.

Possible follow-ups:

- Create an eye candy scene to demonstrate the feature in future release notes.

- Required skills: C++

- Challenges: Using the 3ds Max API

- Primary mentor: Herbert

- Secondary mentor: Franz

Currently, the appleseed plugin for Autodesk 3ds Max translates native 3ds Max cameras into appleseed cameras when rendering begins or when the scene is exported. While this works and is enough for simple scenes, the native 3ds Max camera lacks many features that are required for realistic renderings, such as depth of field and custom bokeh shapes.

The idea of this project is to allow 3ds Max users to instantiate, and manipulate, an appleseed camera entity that exposes all functionalities of appleseed's native cameras.

The principal difficulty of this project will be to determine how to write a camera plugin for 3ds Max as there appears to be little documentation on the topic. One alternative to creating a new camera plugin would be to simply inject appleseed settings into the 3ds Max camera's user interface, if that's possible. Another alternative solution would be to place appleseed camera settings in appleseed's render settings panel.

Tasks:

- Determine how to create a camera plugin in 3ds Max. If necessary, ask on CGTalk's 3dsMax SDK and MaxScript forum.

- Expose settings from native appleseed cameras.

Possible follow-ups:

- Add user interface widgets to adjust depth of field settings.

- Required skills: C++

- Challenges: Using the 3ds Max API

- Primary mentor: Franz

- Secondary mentor: Esteban

At the moment, the appleseed plugin for Autodesk 3ds Max only supports the "tiled" or "final" rendering mode of 3ds Max: you hit render, the render starts and begins to appear tile after tile. Meanwhile, 3ds Max does not allow any user input apart from a way to stop the render.

With the vast amount of computational power in modern workstations, it is now possible to render a scene interactively, while moving the camera, manipulating objects and light sources or modifying material parameters.

In fact, appleseed and appleseed.studio already both have native support for interactive rendering.

The goal of this project is to enable interactive appleseed rendering inside 3ds Max via 3ds Max's ActiveShade functionality. Implementing interactive rendering in appleseed should be even easier with 3ds Max 2017 due to new APIs.

Tasks:

- Investigate the ActiveShade API.

- Investigate the new API related to interactive rendering in 3ds Max 2017.

- Implement a first version of interactive rendering limited to camera movements.

- Add support for live material and light adjustments.

- Add support for object movements and deformations.

- Required skills: C++

- Challenges: Using the Maya API

- Primary mentor: Esteban

- Secondary mentor: Franz

At the moment, the appleseed plugin for Autodesk Maya only supports the "tiled" or "final" rendering mode of Maya: you hit render, the render starts and begins to appear tile after tile. Meanwhile, Maya does not allow any user input apart from a way to stop the render.

With the vast amount of computational power in modern workstations, it is now possible to render a scene interactively, while moving the camera, manipulating objects and light sources or modifying material parameters.

In fact, appleseed and appleseed.studio already both have native support for interactive rendering.

The goal of this project is to enable interactive appleseed rendering inside Maya.

Tasks:

- Investigate the Maya API.

- Implement a first version of interactive rendering limited to camera movements.

- Add support for live material and light adjustments.

- Add support for object movements and deformations.