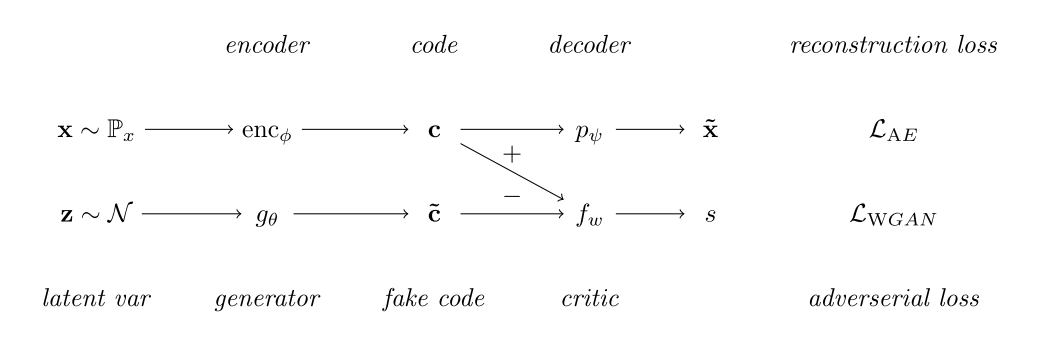

A TensorFlow implementation of *Adversarially Regularized Autoencoders for Generating Discrete Structures* (ARAE).

While the paper uses the Stanford Natural Language Inference (SNLI) dataset for text generation, this implementation uses only the MNIST dataset. I implemented the continuous version here; the discrete version is included as a footnote in the code.
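To make the continuous setup concrete, here is a minimal NumPy sketch of the three per-batch losses in ARAE's training loop: autoencoder reconstruction, the critic (WGAN-style) loss between codes of real images and codes produced by the generator from noise, and the adversarial loss for the encoder and generator. Everything here is an illustrative assumption, not this repository's code: the single-layer `encode`/`decode`/`generate`/`critic` functions with random weights, the layer sizes, and binary cross-entropy as the reconstruction loss. It only computes losses; it does not train, and it omits details such as critic weight clipping.

```python
import numpy as np

# Hypothetical sizes: 784-pixel MNIST images, 32-dim code, 16-dim noise.
X_DIM, CODE_DIM, NOISE_DIM, BATCH = 784, 32, 16, 8
rng = np.random.RandomState(0)

def linear(in_dim, out_dim):
    """Random weights and zero bias for one dense layer (stand-in for a trained net)."""
    return rng.normal(scale=0.05, size=(in_dim, out_dim)), np.zeros(out_dim)

enc_W, enc_b = linear(X_DIM, CODE_DIM)      # encoder: x -> z
dec_W, dec_b = linear(CODE_DIM, X_DIM)      # decoder: z -> x_hat
gen_W, gen_b = linear(NOISE_DIM, CODE_DIM)  # generator: s -> z_tilde
cri_W, cri_b = linear(CODE_DIM, 1)          # critic: code -> scalar score

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def encode(x):
    return np.tanh(x @ enc_W + enc_b)

def decode(z):
    # Pixel intensities in (0, 1).
    return sigmoid(z @ dec_W + dec_b)

def generate(s):
    return np.tanh(s @ gen_W + gen_b)

def critic(z):
    return (z @ cri_W + cri_b).ravel()

def arae_losses(x, s, eps=1e-7):
    z = encode(x)          # codes of real images
    z_tilde = generate(s)  # codes generated from noise
    x_hat = decode(z)
    # (1) Reconstruction: binary cross-entropy summed over pixels, averaged over the batch.
    rec = -np.mean(np.sum(x * np.log(x_hat + eps)
                          + (1 - x) * np.log(1 - x_hat + eps), axis=1))
    # (2) Critic loss: the critic tries to score real codes high and fake codes low.
    cri = -np.mean(critic(z)) + np.mean(critic(z_tilde))
    # (3) Adversarial loss minimized by encoder and generator: the negation of (2).
    adv = np.mean(critic(z)) - np.mean(critic(z_tilde))
    return rec, cri, adv

if __name__ == "__main__":
    x = rng.rand(BATCH, X_DIM)                 # fake "images" with values in [0, 1)
    s = rng.normal(size=(BATCH, NOISE_DIM))    # Gaussian noise for the generator
    print(arae_losses(x, s))
```

In a real training step each loss is minimized by its own optimizer over its own variables: (1) over encoder and decoder, (2) over the critic, (3) over encoder and generator.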

Requirements:

- tensorflow == 1.0.0
- numpy == 1.12.0
- matplotlib == 1.3.1

To train the model and reconstruct images, run:

    python train.py

The model was trained for 100,000 steps.

- Generated from fake (noise) data
- Generated from real data
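The two kinds of samples above come from different paths through the model: "fake" samples decode a code that the generator produced from random noise, while "real" samples encode an actual image and decode it again (a reconstruction). A minimal NumPy sketch of the two paths follows; the single-layer `encode`, `decode`, and `generate` functions and their random weights are hypothetical stand-ins for the trained networks in `train.py`.

```python
import numpy as np

rng = np.random.RandomState(0)
X_DIM, CODE_DIM, NOISE_DIM = 784, 32, 16  # hypothetical sizes (28x28 MNIST)

# Hypothetical single-layer networks with random weights, standing in for trained models.
enc_W = rng.normal(scale=0.05, size=(X_DIM, CODE_DIM))
dec_W = rng.normal(scale=0.05, size=(CODE_DIM, X_DIM))
gen_W = rng.normal(scale=0.05, size=(NOISE_DIM, CODE_DIM))

def encode(x):
    return np.tanh(x @ enc_W)

def decode(z):
    return 1.0 / (1.0 + np.exp(-(z @ dec_W)))  # pixel intensities in (0, 1)

def generate(s):
    return np.tanh(s @ gen_W)

def samples_from_noise(n):
    """Fake data: noise -> generator -> code -> decoder."""
    s = rng.normal(size=(n, NOISE_DIM))
    return decode(generate(s))

def samples_from_real(x):
    """Real data: image -> encoder -> code -> decoder (a reconstruction)."""
    return decode(encode(x))

if __name__ == "__main__":
    fake = samples_from_noise(4).reshape(-1, 28, 28)   # ready for plt.imshow
    real = samples_from_real(rng.rand(4, X_DIM)).reshape(-1, 28, 28)
    print(fake.shape, real.shape)
```

Only the noise path exercises the generator, so it is the one that shows whether the adversarial regularization has matched the generator's codes to the encoder's.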

Note: I did not multiply (scale) the critic's gradient before backpropagating it to the encoder.
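For comparison, here is a minimal NumPy sketch of what scaling would look like at the encoder's code `z`, where the reconstruction gradient and the critic gradient meet. The function `encoder_code_grad` and its parameters `lam` and `match_norm` are hypothetical; the norm-matching option is just one possible scaling rule, not necessarily the one used by the paper's authors. With the defaults it reproduces the unscaled behavior described above.

```python
import numpy as np

def encoder_code_grad(grad_rec, grad_critic, lam=1.0, match_norm=False, eps=1e-8):
    """Total gradient flowing into the encoder's code z.

    grad_rec:    d(reconstruction loss)/dz, shape (batch, code_dim)
    grad_critic: d(adversarial loss)/dz,    shape (batch, code_dim)
    lam=1.0 with match_norm=False leaves the critic gradient unscaled,
    which is the choice this implementation makes.
    """
    g = grad_critic
    if match_norm:
        # Rescale so the critic gradient has the same average per-example
        # L2 norm as the reconstruction gradient.
        rec_norm = np.mean(np.linalg.norm(grad_rec, axis=1))
        cri_norm = np.mean(np.linalg.norm(grad_critic, axis=1))
        g = g * rec_norm / (cri_norm + eps)
    # Multiply the (possibly normalized) critic gradient by lam before summing.
    return grad_rec + lam * g

if __name__ == "__main__":
    grad_rec = np.ones((2, 3))
    grad_critic = np.full((2, 3), 4.0)
    print(encoder_code_grad(grad_rec, grad_critic))           # unscaled
    print(encoder_code_grad(grad_rec, grad_critic, lam=0.1))  # scaled by 0.1
```

In TensorFlow 1.x, a similar effect can be obtained without touching the optimizer by feeding the critic `lam * z + (1 - lam) * tf.stop_gradient(z)` instead of `z`: the forward value is unchanged, while the gradient reaching `z` through the critic path is multiplied by `lam`.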