Features • Installation • Quick Start • Tutorial • Contributing • Citation • License

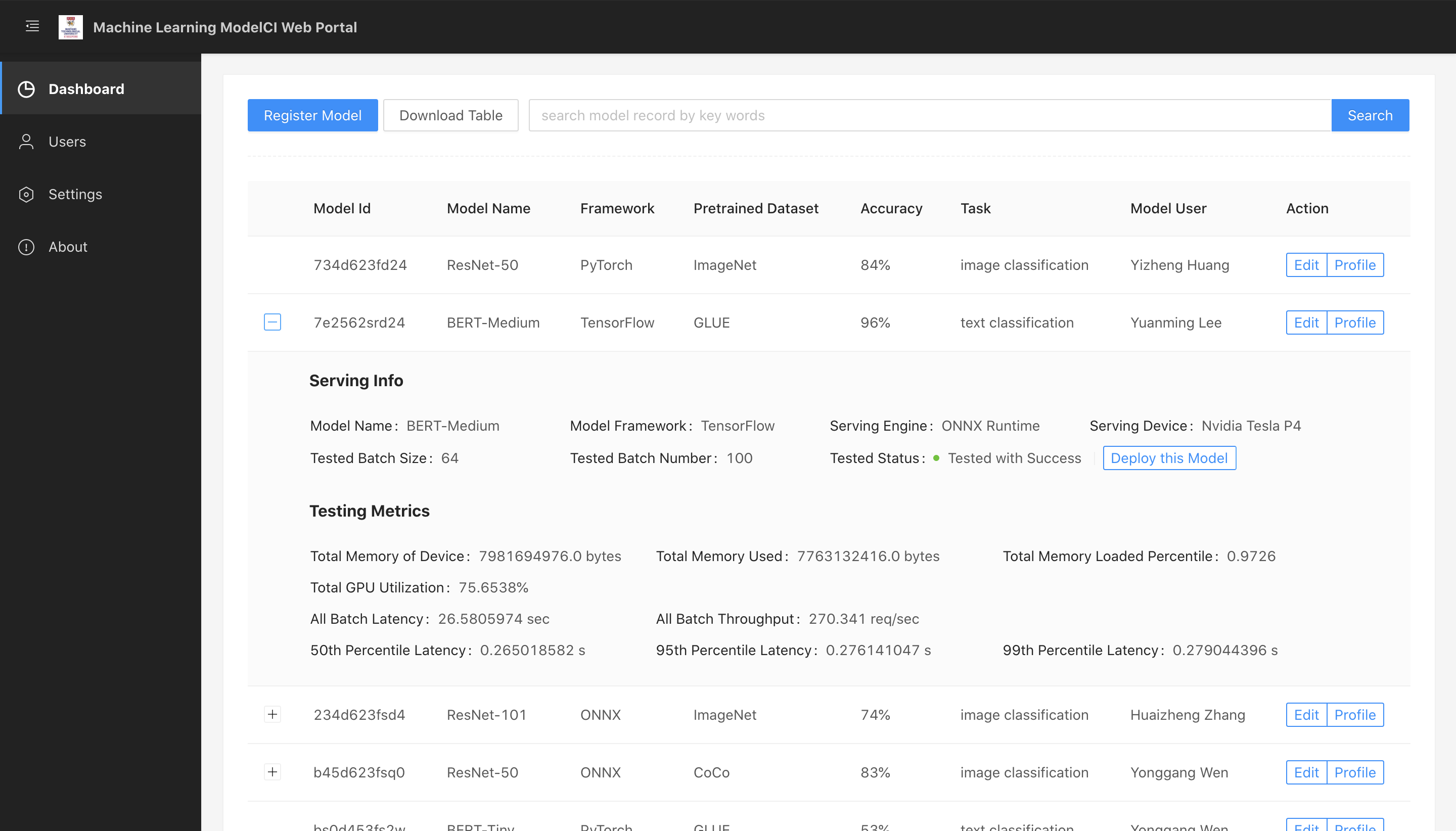

- Housekeeper provides fine-grained management of model (service) registration, deletion, update, and selection.

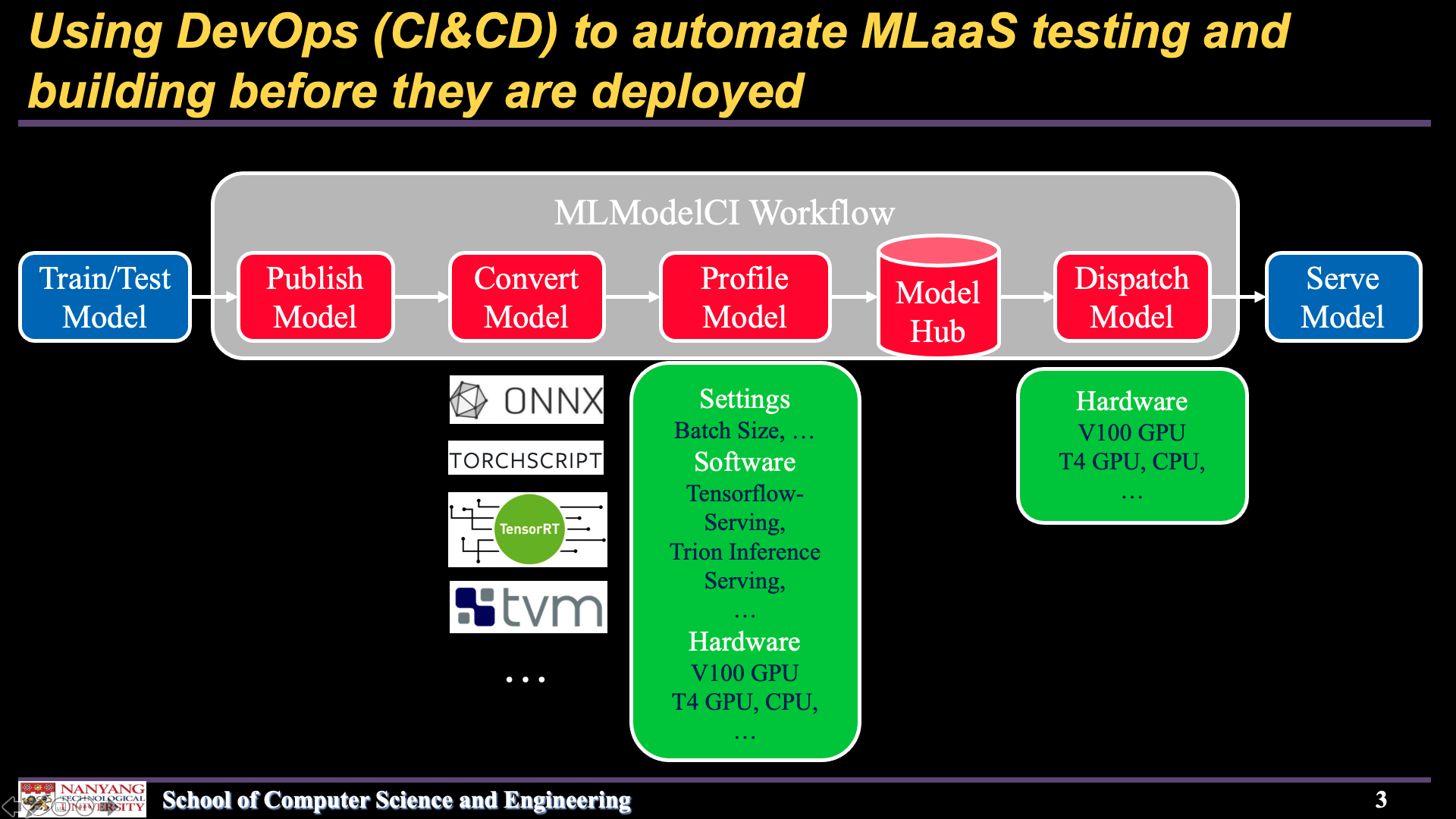

- Converter converts models to serialized, optimized formats so that they can be deployed to the cloud. Supported formats: TensorFlow SavedModel, ONNX, TorchScript, and TensorRT.

- Profiler simulates real service behavior by invoking a gRPC client and a model service, and provides a detailed report on model runtime performance (e.g., P99 latency and throughput) in a production environment.

- Dispatcher launches a serving system to load a model in a containerized manner and dispatches the MLaaS to a device. Supported serving systems: TensorFlow Serving, Triton Inference Server, ONNX Runtime, and web frameworks (e.g., FastAPI).

- Controller receives data from the monitor and node exporter, and controls the whole workflow of our system.
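To make the Profiler metrics above concrete, here is a minimal sketch of how P99 latency and throughput could be derived from per-request latencies. It is not MLModelCI's implementation: the function names `percentile` and `summarize_latencies`, the nearest-rank percentile method, and the assumption that requests are sent one after another are all illustrative choices.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of samples <= it."""
    if not samples:
        raise ValueError("samples must be non-empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize_latencies(latencies_s, batch_size):
    """Summarize per-request latencies (in seconds) for a fixed batch size.

    Assumes synchronous, back-to-back requests, so the total wall time
    is the sum of the individual latencies (a simplifying assumption).
    """
    total_time = sum(latencies_s)
    return {
        "p50_latency_s": percentile(latencies_s, 50),
        "p99_latency_s": percentile(latencies_s, 99),
        "throughput_items_per_s": len(latencies_s) * batch_size / total_time,
    }


if __name__ == "__main__":
    # Synthetic latencies of 1 ms to 100 ms, for illustration only.
    samples = [i / 1000 for i in range(1, 101)]
    print(summarize_latencies(samples, batch_size=8))
```

P99 is reported alongside the median because tail latency, rather than the average, usually decides whether a service meets its latency target.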

The system is still under active development. Please see [CHANGELOG.md] for the latest updates. If you want to join our development team, please contact huaizhen001 @ e.ntu.edu.sg

News

- (2020-10) You can install the system with pip.

- (2020-08) The work has been accepted as an open-source competition paper at ACM MM 2020! The full paper is available at https://arxiv.org/abs/2006.05096.

- (2020-06) The docker image has been built.

- (2020-05) cAdvisor is used to monitor the running containers.

# need to install requests package first

pip install setuptools requests==2.23.0

# then install modelci

pip install git+https://github.com/cap-ntu/ML-Model-CI.git@master --use-feature=2020-resolver

Alternatively, use the installation script:

bash scripts/install.sh

Note

- Conda and Docker are required to run this installation script.

- To use TensorRT, you have to install TensorRT manually (sudo is required). See the instructions here.

docker pull mlmodelci/mlmodelci

We have built a demo; check here to run it.

| Web frontend | Workflow |
|---|---|
| | |

MLModelCI provides a complete platform for managing, converting, profiling, and deploying models as cloud services (MLaaS). You only need to register your models with the platform, and it takes care of the remaining tasks. To give a clearer start, we present the whole pipeline step by step below.

Once installation is complete, start the ModelCI service with:

modelci start

Assume you have a ResNet50 model trained with PyTorch. To deploy it as a cloud service, the first step is to publish the model to our system.

from modelci.hub.manager import register_model

from modelci.types.bo import IOShape, Task, Metric

# Register a Trained ResNet50 Model to ModelHub.

register_model(

'home/ResNet50/pytorch/1.zip',

dataset='ImageNet',

metric={Metric.ACC: 0.76},

task=Task.IMAGE_CLASSIFICATION,

inputs=[IOShape([-1, 3, 224, 224], float)],

outputs=[IOShape([-1, 1000], float)],

convert=True,

profile=True

)

Because a newly trained model cannot be deployed to the cloud, MLModelCI automatically converts it to optimized formats (e.g., TorchScript and ONNX).

You can also run the conversion yourself:

from modelci.hub.converter import ONNXConverter

from modelci.types.bo import IOShape

ONNXConverter.from_torch_module(

'<path to torch model>',

'<path to export onnx model>',

inputs=[IOShape([-1, 3, 224, 224], float)],

)

Before deploying an optimized model as a cloud service, developers need to understand its runtime performance (e.g., latency and throughput) in order to set up a more cost-effective solution (which batch size, device, and serving system to use). MLModelCI provides a profiler to automate this process.
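As an illustration of how profiling results can feed the choice of batch size and device, the sketch below picks the highest-throughput configuration whose P99 latency stays within a budget. Everything here is hypothetical: `ProfileRecord`, `pick_config`, and the numbers are made up for the example and are not part of the MLModelCI API or real measurements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProfileRecord:
    """One hypothetical profiling result for a (device, batch size) pair."""
    device: str
    batch_size: int
    p99_latency_ms: float
    throughput: float  # items per second


def pick_config(records, latency_budget_ms):
    """Return the highest-throughput record within the latency budget, or None."""
    feasible = [r for r in records if r.p99_latency_ms <= latency_budget_ms]
    return max(feasible, key=lambda r: r.throughput, default=None)


# Made-up numbers for illustration only, not measurements.
records = [
    ProfileRecord("cuda:0", 1, 8.0, 125.0),
    ProfileRecord("cuda:0", 16, 40.0, 400.0),
    ProfileRecord("cuda:0", 64, 150.0, 430.0),
]

best = pick_config(records, latency_budget_ms=50.0)
print(best)
```

With these example records, the largest batch size offers the best throughput but exceeds the 50 ms budget, so the selection falls back to the next-best configuration that satisfies it.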

You can manually profile your models as follows:

from modelci.hub.client.torch_client import CVTorchClient

from modelci.hub.profiler import Profiler

test_data_item = ...

batch_num = ...

batch_size = ...

model_info = ...

# create a client

torch_client = CVTorchClient(test_data_item, batch_num, batch_size, asynchronous=False)

# init the profiler

profiler = Profiler(model_info=model_info, server_name='name of your server', inspector=torch_client)

# start profiling model

profiler.diagnose('device name')

MLModelCI provides a dispatcher to deploy a model as a cloud service. The dispatcher launches a serving system (e.g., TensorFlow Serving) to load a model in a containerized manner and dispatches the MLaaS to a device.

We search for a converted model and then dispatch it to a device with a specific batch size.

from modelci.hub.deployer.dispatcher import serve

from modelci.hub.manager import retrieve_model

from modelci.types.bo import Framework, Engine

model_info = ...

# get saved model information

model_info = retrieve_model(architecture_name='ResNet50', framework=Framework.PYTORCH, engine=Engine.TORCHSCRIPT)

# deploy the model to cuda device 0.

serve(save_path=model_info[0].saved_path, device='cuda:0', name='torchscript-serving', batch_size=16)

Now your model is an efficient cloud service!

For more information please take a look at our tutorials.

- Installation, Converting and Registering Image Classification Model by ModelCI

- Converting and Registering Object Detection model by ModelCI

After the Quick Start, we provide detailed tutorials for users to understand our system.

- Register a Model in ModelHub

- Convert a Model to Optimized Formats

- Profile a Model for Cost-Effective MLaaS

- Dispatch a Model as a Cloud Service

- Manage Models with Housekeeper

MLModelCI welcomes your contributions! Please refer to here to get started.

If you use MLModelCI in your work or use any functions published in MLModelCI, we would appreciate it if you could cite:

@inproceedings{10.1145/3394171.3414535,

author = {Zhang, Huaizheng and Li, Yuanming and Huang, Yizheng and Wen, Yonggang and Yin, Jianxiong and Guan, Kyle},

title = {MLModelCI: An Automatic Cloud Platform for Efficient MLaaS},

year = {2020},

url = {https://doi.org/10.1145/3394171.3414535},

doi = {10.1145/3394171.3414535},

booktitle = {Proceedings of the 28th ACM International Conference on Multimedia},

pages = {4453–4456},

numpages = {4},

location = {Seattle, WA, USA},

series = {MM '20}

}

Please feel free to contact our team if you run into any problems when using this source code. We are glad to upgrade the code to meet your requirements where reasonable.

We are also open to collaboration based on this elementary system and research idea.

huaizhen001 AT e.ntu.edu.sg

Copyright 2020 Nanyang Technological University, Singapore

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.