mpcrl is a library for training model-based Reinforcement Learning (RL) agents that use Model Predictive Control (MPC) as the function approximator. This framework, also known as MPC-based RL, was first proposed in [1] and has since been shown to be effective in various applications and with different learning algorithms, e.g., [2,3].

This framework merges two powerful control techniques into a single data-driven one:

- MPC, a well-known control methodology that uses a prediction model to forecast the future behaviour of the environment and compute the optimal action, and
- RL, a Machine Learning paradigm that has achieved many successes in recent years (e.g., in games such as chess and Go) and is highly adaptable to unknown and complex-to-model environments.
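To make the idea concrete, here is a minimal sketch, not the mpcrl API. It assumes an unconstrained scalar LTI model with quadratic costs and a discounted horizon cost, so the MPC problem can be solved exactly by a backward Riccati recursion. The same parametrized problem then yields a policy π_θ(s), a value function V_θ(s) and an action-value function Q_θ(s, u), which is what lets MPC act as the function approximator in RL. The class `ScalarLQMPC` and its parameters are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ScalarLQMPC:
    """Hypothetical toy MPC for the scalar LTI model x+ = a*x + b*u.

    theta = (a, b, q, r, p): model coefficients, stage-cost weights
    (q*x**2 + r*u**2) and terminal-cost weight (p*x**2). Without
    constraints, this discounted MPC problem is solved exactly by a
    backward Riccati recursion over the horizon.
    """

    a: float
    b: float
    q: float
    r: float
    p: float
    horizon: int = 10
    gamma: float = 1.0

    def _cost_to_go(self, steps: int) -> float:
        """Quadratic cost-to-go weight after `steps` Riccati steps back from p."""
        P = self.p
        for _ in range(steps):
            c = self.gamma * P
            P = self.q + c * self.a**2 - (c * self.a * self.b) ** 2 / (self.r + c * self.b**2)
        return P

    def policy(self, s: float) -> float:
        """pi_theta(s): the first optimal action of the MPC problem."""
        c = self.gamma * self._cost_to_go(self.horizon - 1)  # discounted weight on x_1
        return -c * self.a * self.b / (self.r + c * self.b**2) * s

    def value(self, s: float) -> float:
        """V_theta(s): the optimal MPC cost from initial state s."""
        return self._cost_to_go(self.horizon) * s**2

    def q_value(self, s: float, u: float) -> float:
        """Q_theta(s, u): the optimal MPC cost with the first action fixed to u."""
        x1 = self.a * s + self.b * u
        c = self.gamma * self._cost_to_go(self.horizon - 1)
        return self.q * s**2 + self.r * u**2 + c * x1**2


mpc = ScalarLQMPC(a=1.0, b=1.0, q=1.0, r=1.0, p=0.0, horizon=5, gamma=0.9)
s = 2.0
```

Since π_θ(s) minimizes Q_θ(s, ·), `mpc.q_value(s, mpc.policy(s))` equals `mpc.value(s)` up to rounding, and any other first action gives a higher cost.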

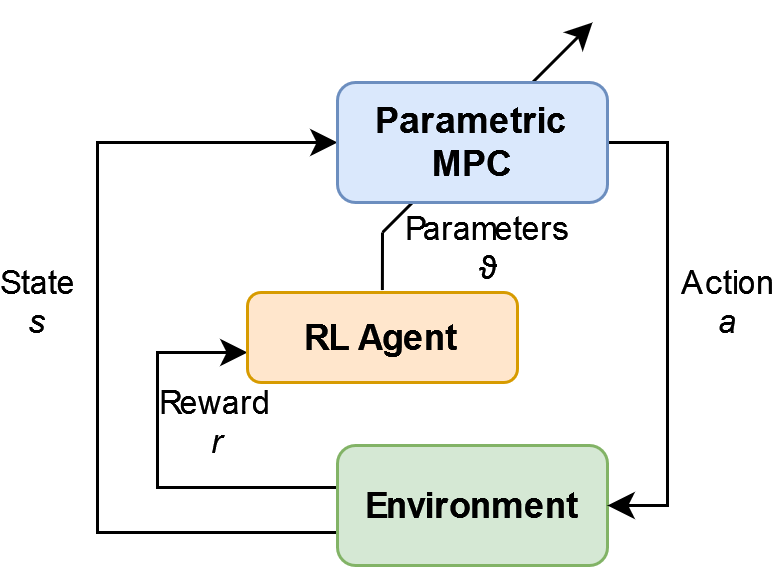

The figure shows the main idea behind this learning-based control approach. The MPC controller, parametrized in

To install the package, run

```bash
pip install mpcrl
```

mpcrl has the following dependencies

To experiment with the source code instead, run

```bash
git clone https://github.com/FilippoAiraldi/mpc-reinforcement-learning.git
```

The `examples` subdirectory contains an example application on a small linear time-invariant (LTI) system, tackled with both Q-learning and Deterministic Policy Gradient (DPG).
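As a rough, self-contained illustration of the Q-learning side (DPG is not shown), the sketch below learns the terminal-cost weight θ of an unconstrained scalar LQ MPC with the semi-gradient update θ ← θ + α δ ∇_θ Q_θ(s, u), where δ = L(s, u) + γ V_θ(s') − Q_θ(s, u). It is not mpcrl's implementation: the system, the parametrization (only the terminal weight is learned and the MPC model is assumed exact), the step size and all function names are hypothetical. In [1] the gradient ∇_θ Q_θ comes from the sensitivity of the MPC optimization problem; here a central finite difference is swapped in for simplicity.

```python
import random


def riccati_step(P, a, b, q, r, gamma):
    """One backward step of the discounted scalar Riccati recursion."""
    c = gamma * P
    return q + c * a**2 - (c * a * b) ** 2 / (r + c * b**2)


def mpc_q(theta, s, u, model, horizon):
    """Q_theta(s, u) of an unconstrained scalar LQ MPC with terminal weight theta."""
    a, b, q, r, gamma = model
    P = theta
    for _ in range(horizon - 1):
        P = riccati_step(P, a, b, q, r, gamma)
    return q * s**2 + r * u**2 + gamma * P * (a * s + b * u) ** 2


def mpc_v(theta, s, model, horizon):
    """V_theta(s) = min_u Q_theta(s, u), i.e., one more Riccati step."""
    P = theta
    for _ in range(horizon):
        P = riccati_step(P, *model)
    return P * s**2


def q_learning(model, horizon=1, theta=0.0, lr=0.05, steps=2000, seed=0):
    """Semi-gradient Q-learning of the terminal-cost weight theta."""
    a, b, q, r, gamma = model
    rng = random.Random(seed)
    eps = 1e-6  # step of the central finite-difference gradient
    for _ in range(steps):
        s, u = rng.uniform(-1, 1), rng.uniform(-1, 1)  # random exploration (off-policy)
        s_next = a * s + b * u  # simulated true system (here identical to the MPC model)
        cost = q * s**2 + r * u**2  # stage cost L(s, u)
        td_error = cost + gamma * mpc_v(theta, s_next, model, horizon) - mpc_q(theta, s, u, model, horizon)
        dq_dtheta = (mpc_q(theta + eps, s, u, model, horizon) - mpc_q(theta - eps, s, u, model, horizon)) / (2 * eps)
        theta += lr * td_error * dq_dtheta
    return theta


model = (1.0, 1.0, 1.0, 1.0, 0.9)  # hypothetical (a, b, q, r, gamma)
theta = q_learning(model)
```

With these numbers, `theta` should settle near the fixed point of `riccati_step` (about 1.588), where the TD error vanishes for every transition. The code also runs with `horizon > 1`, but in this toy setting the terminal weight then influences Q_θ less, so learning it is slower.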

The repository is provided under the MIT License. See the LICENSE file included with this repository.

Filippo Airaldi, PhD Candidate

Delft Center for Systems and Control in Delft University of Technology

Copyright (c) 2023 Filippo Airaldi.

Copyright notice: Technische Universiteit Delft hereby disclaims all copyright interest in the program “mpcrl” (Reinforcement Learning with Model Predictive Control) written by the Author(s). Prof. Dr. Ir. Fred van Keulen, Dean of 3mE.

[1] S. Gros and M. Zanon, "Data-Driven Economic NMPC Using Reinforcement Learning," in IEEE Transactions on Automatic Control, vol. 65, no. 2, pp. 636-648, Feb. 2020, doi: 10.1109/TAC.2019.2913768.

[2] H. N. Esfahani, A. B. Kordabad and S. Gros, "Approximate Robust NMPC using Reinforcement Learning," 2021 European Control Conference (ECC), 2021, pp. 132-137, doi: 10.23919/ECC54610.2021.9655129.

[3] W. Cai, A. B. Kordabad, H. N. Esfahani, A. M. Lekkas and S. Gros, "MPC-based Reinforcement Learning for a Simplified Freight Mission of Autonomous Surface Vehicles," 2021 60th IEEE Conference on Decision and Control (CDC), 2021, pp. 2990-2995, doi: 10.1109/CDC45484.2021.9683750.