
Object Detection

TensorFlow's Object Detection API provides several pre-trained models, such as EfficientDet and ssd_mobilenet_v2_fpnlite.

- We use the TF2 version; step-by-step installation instructions can be found here.

- Pre-trained models can be found here.

- Our training script: ai/training/tfod/main.py.

- CLI for training:

python main.py

tfod/config.py

...

TRAIN_IMAGES_DIR = "../data/raw/Images/"

TRAIN_ANNOTS_DIR = "../data/raw/Annotations/"

ANNOTATION_PATTERN = "xywh" # YOLO format

HAS_TEST_FOLDER = False

TEST_IMAGES_DIR = TRAIN_IMAGES_DIR

TEST_ANNOTS_DIR = TRAIN_ANNOTS_DIR

LABELMAP_PATH = "labelmap.pbtxt"

...

PRETRAINED_MODEL = "models/ssd_mobilenet_v2_fpnlite_320x320_coco17_tpu-8"

CONFIG_PATH = os.path.join(PRETRAINED_MODEL, "pipeline.config")

CKPT_PATH = os.path.join(PRETRAINED_MODEL, "checkpoint")

SUMMARY_PATH = f"{PRETRAINED_MODEL}/results"

...

Please check config.py for more fine-tuning settings.
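The `ANNOTATION_PATTERN = "xywh"` setting refers to YOLO-style annotations. As a minimal sketch, assuming each annotation line follows the usual YOLO layout `class_id x_center y_center width height` with coordinates normalized to [0, 1], the conversion to pixel corner coordinates could look like this. The function name `yolo_line_to_box` is hypothetical and is not taken from config.py or main.py.

```python
def yolo_line_to_box(line, img_width, img_height):
    """Convert one YOLO annotation line to (class_id, xmin, ymin, xmax, ymax) in pixels.

    Assumes the line is 'class_id x_center y_center width height' with
    normalized coordinates; this layout is an assumption, not read from the repo.
    """
    class_id, xc, yc, w, h = line.split()
    # Scale the normalized values to pixel units.
    xc, w = float(xc) * img_width, float(w) * img_width
    yc, h = float(yc) * img_height, float(h) * img_height
    # Move from center/size to corner coordinates.
    return (int(class_id), xc - w / 2, yc - h / 2, xc + w / 2, yc + h / 2)


print(yolo_line_to_box("1 0.5 0.5 0.25 0.5", img_width=320, img_height=320))
```

Corner coordinates are a common input for detection pipelines, which is why a conversion step of this kind is often needed when the raw data is stored in YOLO format.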

labelmap.pbtxt

item {

id: 1

name: 'person'

}

item {

id: 2

name: 'gun'

}

item {

id: 3

name: 'helmet'

}

params.txt

0.01;0.5

We can change learning_rate and confidence_threshold online, that is, while training is in progress.
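One way to pick up such changes is to re-read params.txt periodically during training. The sketch below assumes the file holds a single line of the form `learning_rate;confidence_threshold` (inferred from the example `0.01;0.5` and the order in which the two values are named above). `read_params` is a hypothetical helper, not the function used in main.py.

```python
from pathlib import Path


def read_params(path, default=(0.01, 0.5)):
    """Return (learning_rate, confidence_threshold) parsed from params.txt.

    Falls back to `default` if the file is missing or malformed, so a
    half-written edit does not interrupt training.
    """
    try:
        lr_text, conf_text = Path(path).read_text().strip().split(";")
        return float(lr_text), float(conf_text)
    except (OSError, ValueError):
        return default
```

Calling `read_params("params.txt")` every few training steps would let edits to the file take effect without restarting the run.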

DETR (DEtection TRansformer) treats object detection as a set prediction problem: the model predicts a fixed-size collection of objects.

Unlike traditional object detection methods, it uses no anchors and does not filter bounding boxes via non-maximum suppression. DETR applies a standard transformer architecture to the feature map produced by a CNN. The output of the transformer is the final set of predictions.
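To illustrate what a fixed-size prediction set means in practice: the model always emits the same number of slots (for example 30 with `--num_queries 30`), and each slot is either an object or a special "no object" class. The following is a minimal sketch in plain Python, assuming the convention of the reference implementation in which the last class index stands for "no object". The function names and the 0.7 threshold are illustrative.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def select_detections(query_logits, query_boxes, threshold=0.7):
    """Keep the slots whose best real-class probability exceeds `threshold`.

    query_logits: one list of class logits per query; the last entry is
                  assumed to be the "no object" class.
    query_boxes:  one box per query, passed through unchanged.
    No non-maximum suppression is applied: each slot is judged on its own.
    """
    detections = []
    for logits, box in zip(query_logits, query_boxes):
        probs = softmax(logits)[:-1]  # drop the "no object" class
        score = max(probs)
        if score > threshold:
            detections.append((probs.index(score), score, box))
    return detections
```

Each returned tuple is `(class_index, score, box)`. Slots dominated by the "no object" class simply drop out, which is why no separate suppression step is needed.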

- Repository: https://github.com/facebookresearch/detr/

- Pre-trained model: https://dl.fbaipublicfiles.com/detr/detr-r50-e632da11.pth

- Our training script: ai/training/detr/main.py

- CLI -- training from scratch:

python main.py --dataset_file hsc --coco_path ../data/yolo --epochs 300 --lr=1e-4 --batch_size=1 --num_workers=4 --output_dir="outputs" --num_queries 30

- CLI -- fine tuning:

python main.py --dataset_file hsc --coco_path ../data/yolo --epochs 300 --lr=1e-4 --batch_size=1 --num_workers=4 --output_dir="outputs" --resume="detr-r50-e632da11.pth"

datasets/__init__.py

def build_dataset(image_set, args):

    ...

    if args.dataset_file == 'hsc':

        return build_hsc(image_set, args)

    ...

datasets/coco.py

def build_hsc(image_set, args):

    ...

    return dataset

...

CenterNet models an object as a single point: the center of its bounding box. Other properties, such as the object's size, are then regressed directly from the image features at that center location.
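The idea can be sketched in a few lines of plain Python. This is a simplified illustration, not the CenterNet code: it assumes a single-class center heatmap and a per-location size map given as nested lists, and it leaves out details of the real model such as the local offset prediction and the reduced output resolution. The name `decode_centers` and the threshold value are illustrative.

```python
def decode_centers(heatmap, sizes, threshold=0.5):
    """Turn a center heatmap and a size map into (score, xmin, ymin, xmax, ymax) boxes.

    heatmap: 2D list of center scores in [0, 1] for one class.
    sizes:   2D list of (width, height) pairs regressed at each location.
    A location counts as a center if its score is at least `threshold`
    and it is the maximum of its 3x3 neighbourhood.
    """
    rows, cols = len(heatmap), len(heatmap[0])
    boxes = []
    for y in range(rows):
        for x in range(cols):
            score = heatmap[y][x]
            if score < threshold:
                continue
            neighbours = [
                heatmap[ny][nx]
                for ny in range(max(0, y - 1), min(rows, y + 2))
                for nx in range(max(0, x - 1), min(cols, x + 2))
            ]
            if score < max(neighbours):
                continue  # a stronger peak is adjacent
            # Read the regressed size at the peak and build the box around it.
            w, h = sizes[y][x]
            boxes.append((score, x - w / 2, y - h / 2, x + w / 2, y + h / 2))
    return boxes
```

Because each object is represented by one peak, the local-maximum check plays the role that box-level filtering plays in anchor-based detectors.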

- Repository: https://github.com/xingyizhou/CenterNet

- Training script: ai/training/CenterNet/src/main.py

- CLI:

python main.py ctdet --exp_id hsc --dataset hsc --batch_size 1 --lr 1.25e-4 --gpus 0 --debug 5 --val_intervals 1 --center_thresh 0.5 --scores_thresh 0.5

See HSC dataset.

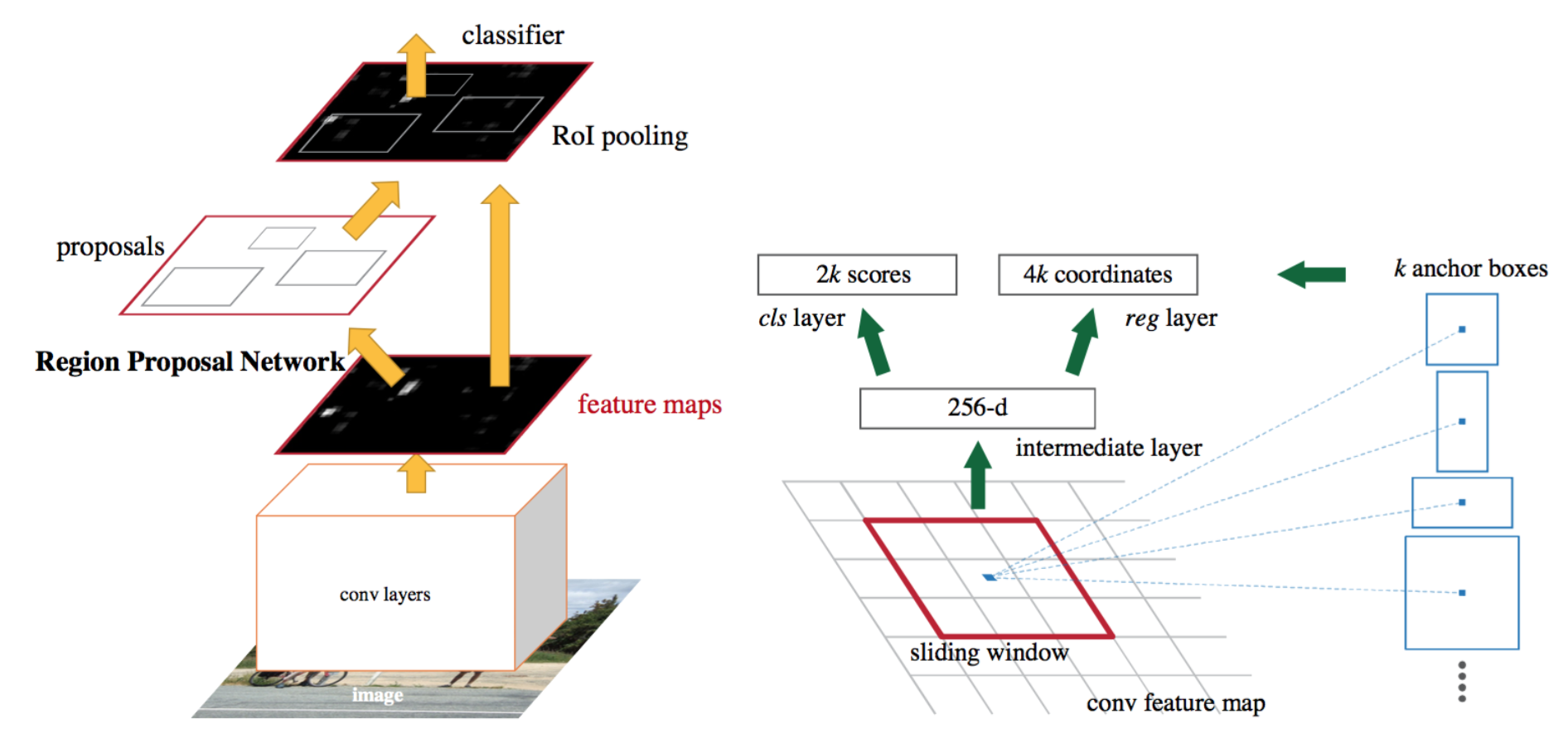

Faster R-CNN is a two-stage detector: object detection happens after a region proposal stage. It succeeds R-CNN and Fast R-CNN, and its performance gains come from improvements in how regions of interest are proposed (a learned Region Proposal Network replaces the external proposal method used by its predecessors).

- Repository: TODO -- implemented here following https://pytorch.org/tutorials/intermediate/torchvision_tutorial.html.

- CLI:

python train.py --dataset hsc --bs 2 --epochs 1

See scripts in ai/training/frcnn.

Models in the YOLO (You Only Look Once) family are single-stage detectors, meaning that localization and classification of objects are performed in a single pass through the network. Other popular single-stage detectors are SSD (which we used with the TF Object Detection API) and RetinaNet.
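Single-stage detectors such as YOLO and SSD typically produce many overlapping candidate boxes in that one pass and then filter them with non-maximum suppression, the post-processing step that DETR avoids. As a minimal sketch of that step in plain Python (a generic greedy NMS, not the code used by YOLOv5 or the TF Object Detection API; function names and the 0.5 threshold are illustrative):

```python
def iou(a, b):
    """Intersection over union of two (xmin, ymin, xmax, ymax) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    kept = []
    for i in order:
        # Keep a box only if it does not overlap too much with a better one.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in kept):
            kept.append(i)
    return kept
```

For example, two heavily overlapping boxes around the same object collapse to the higher-scoring one, while a distant box is kept.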

Among the YOLO models, we evaluated two: YOLOv4 and YOLOv5. We had difficulty getting YOLOv4 to converge, so we moved on to YOLOv5. Although YOLOv5 was not implemented by the original YOLO authors, it proved equivalent to YOLOv4 on COCO val2017. Its advantages were support for a higher input resolution, several architecture sizes, and a set of scripts around the model, such as export to ONNX.

- Repository: https://github.com/Tianxiaomo/pytorch-YOLOv4

-- Source: https://github.com/ultralytics/yolov5/issues/280#issuecomment-1001850116

- Repository: https://github.com/ultralytics/yolov5

- CLI:

- Training:

python train.py --img 384 --batch 8 --epochs 100 --data ../data/hsc.yaml --weights yolov5x.pt --project hsc --name v1_yolov5x

Check hsc.yaml and the YOLOv5 documentation.

Check the sidebar menu to navigate through the wiki.
