This repo contains the official PyTorch code for ActiveNeRF [paper].

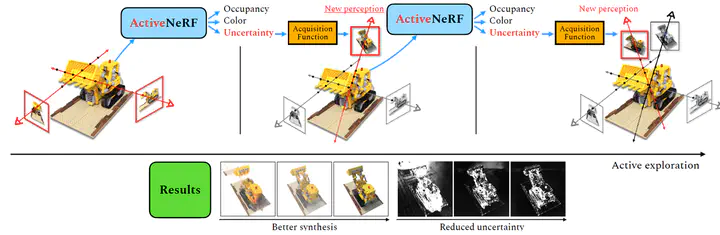

We present a novel learning framework, ActiveNeRF, aiming to model a 3D scene with a constrained input budget. We first incorporate uncertainty estimation into a NeRF model, which ensures robustness under few observations and provides an interpretation of how NeRF understands the scene. On this basis, we propose to supplement the existing training set with newly captured samples based on an active learning scheme. By evaluating the reduction of uncertainty given new inputs, we select the samples that bring the most information gain. In this way, the quality of novel view synthesis can be improved with minimal additional resources.
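The selection step described above can be illustrated with a minimal sketch. It is not the code from this repo: the function name `select_views`, the list-of-lists inputs, and the use of a plain sum of per-ray variances as the "information gain" score are all assumptions made for illustration.

```python
def select_views(prior_var, post_var, k):
    """Return the indices of the k candidate views with the largest
    total reduction in variance (hypothetical scoring, for illustration).

    prior_var[i]: per-ray variances for candidate i before it is added.
    post_var[i]:  estimated per-ray variances after it is added.
    """
    gains = []
    for i, (prior, post) in enumerate(zip(prior_var, post_var)):
        # Assumed score: summed uncertainty before minus summed uncertainty after.
        gains.append((sum(prior) - sum(post), i))
    # Largest reduction first; ties keep their original candidate order.
    gains.sort(key=lambda g: g[0], reverse=True)
    return [i for _, i in gains[:k]]
```

For example, with three candidate views where the second reduces variance the most, `select_views(prior, post, 2)` would return that candidate first, followed by the next most informative one.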

git clone https://github.com/abubake/ActiveNeRF.git

cd ActiveNeRF

We provide a conda environment setup file as an alternative to installing dependencies via requirements.txt. Create the conda environment activenerf by running:

conda env create -f environment.yml

conda activate activenerf

Or install the dependencies with pip:

pip install -r requirements.txt

Download the data for the example dataset (lego):

bash download_example_data.sh

Then move "transforms_holdout.json" from "./data/lego" into "./data/nerf_synthetic/lego", which is created when the data is downloaded.
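If you prefer to script this step, the move can be sketched in Python as follows. The helper name `move_holdout` is hypothetical and not part of this repo; the default paths are the ones given above.

```python
import shutil
from pathlib import Path


def move_holdout(src_dir="./data/lego",
                 dst_dir="./data/nerf_synthetic/lego",
                 name="transforms_holdout.json"):
    """Move the holdout transforms file into the downloaded dataset folder."""
    src = Path(src_dir) / name
    dst_dir = Path(dst_dir)
    if not src.is_file():
        raise FileNotFoundError(f"{src} not found")
    if not dst_dir.is_dir():
        # The target folder only exists after the data has been downloaded.
        raise NotADirectoryError(f"{dst_dir} not found; download the data first")
    return Path(shutil.move(str(src), str(dst_dir / name)))
```

Calling `move_holdout()` from the repo root after the download has finished performs the move with the default paths.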

Train ActiveNeRF:

python run_nerf.py --config configs/lego_active.txt --expname active_lego --datadir ./data/nerf_synthetic/lego

If you have any questions, please feel free to contact the authors. Xuran Pan: [email protected].

Our code is based on NeRF-Pytorch and NeRF-Tensorflow.

If you find our work useful in your research, please consider citing:

@inproceedings{pan2022activenerf,

title={ActiveNeRF: Learning Where to See with Uncertainty Estimation},

author={Pan, Xuran and Lai, Zihang and Song, Shiji and Huang, Gao},

booktitle={Computer Vision--ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23--27, 2022, Proceedings, Part XXXIII},

pages={230--246},

year={2022},

organization={Springer}

}