-

Notifications

You must be signed in to change notification settings - Fork 69

Creating Handlers

LTLMoP allows us to use a language very close to structured English in order to control a robot in a region-based environment. However, different environments and different robots involve the use of different motion planning techniques, robot dynamics and kinematics, sensor/actuator information, and different scenarios under which to perform.

DISCLAIMER: This tutorial is designed to guide you through how to use LTLMoP to integrate a new environment into the framework. That is, after reading this tutorial you should know exactly which files you will need to modify for your own experiment and which files to leave as they are. You should already be familiar with what LTLMoP is and have it installed on your computer ready for development.

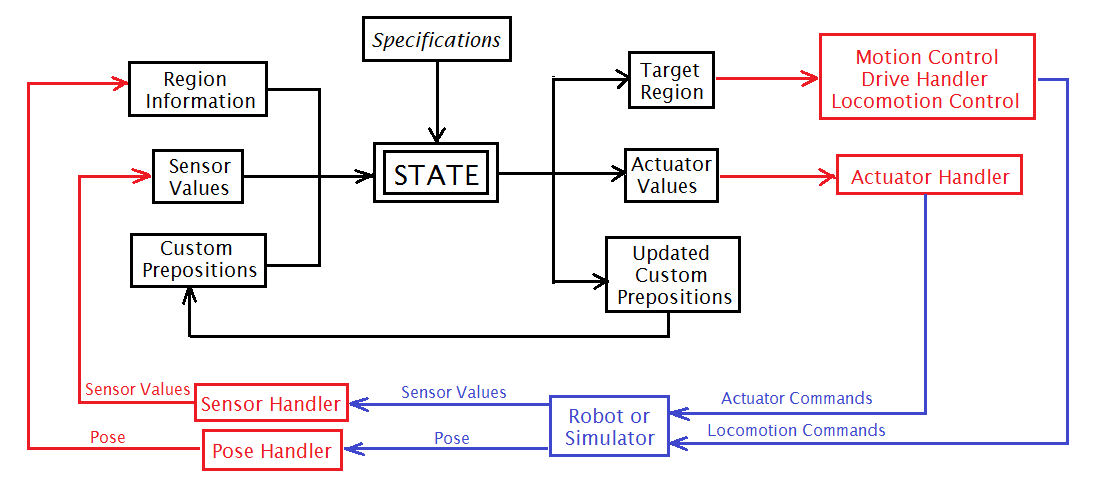

The general state-based architecture for LTLMoP is shown below. Information about the region the robot is in, its sensor values and custom prepositions will generate a state for the robot. Based on this state, LTLMoP then looks at the structured English specifications we have written and instructs the robot to transition to a new region, turn actuators on and off, and modifies the custom prepositions. Custom prepositions are user-defined Boolean (True/False) values that can make writing new specifications easier (much like abbreviating something that would perhaps be too long to type multiple times). For instance, we could write a custom preposition "AllOff" to denote that a robot's sensors are currently all reading false. Think about how much time we could save for a robot with 100 sensors in this case!

LTLMoP Architecture Schematic

LTLMoP Architecture Schematic

These commands then must pass through the Handlers, which are all shown in red. These handlers are what we need to modify as software developers in order to tailor the functionality of LTLMoP to our experiment's collection of robots and laboratory environments. These handlers, and the robot/lab configuration files that define them, will be explained in detail in the following sections.

LTLMoP is a modular framework which allows users to write code specific to any experiment which may use Linear Temporal Logic. By defining a set of handler, robot and lab files we can integrate a multitude of robots (whether real or simulated) into LTLMoP. We can then specify this collection of files in LTLMoP and run an entire scenario which uses the LTL language specification and generates an automaton. There are seven (7) different types of handlers, each in their own subfolder in the "Handlers" directory of the LTLMoP code package.

The schematic below shows the relationship between the handler files and an arbitrary robotic platform.

LTLMoP Handler Architecture

LTLMoP Handler Architecture

First we will look at the Initialization handler. This is the only handler in the framework that runs only once as soon as you begin simulation in LTLMoP. As shown in the system architecture diagram, this handler serves the purpose of populating the Project with shared data that will be used by other handlers, and starting any processes necessary, such as external calls to activate robots or simulators or to begin collecting data from a pose provider (such as Vicon).

The skeleton initHandler looks as follows:

import sys, time

class initHandler:

def __init__(self, proj, calib=False):

# TODO: Do any initialization that needs to occur before other handlers are

# instantiated. This also includes the opening of any sockets that need to be

# shared between handlers.

pass

def getSharedData(self):

# TODO: Return a dictionary of any objects that will need to be shared with

# other handlers

return {}

For example, the CKBot Simulator initialization handler has the following shared information under getSharedData().

return {'Simulator': self.simulator}

Then, if we need the field 'simulator' for later use, such as to get Pose, we use the following commands, where 'get2DPose(num)' is a function of this simulator class. If we want this simulator to be referenced in another handler, we must ensure that we use this first line in the constructor of the handler. For all LTLMoP handlers, the variable 'shared_data' is an input (it's simply InitHandler.getSharedData()).

# In any function of this handler class: pose = self.simulator.get2DPose(0)

Next is the Pose Handler. This handler is in charge of retrieving pose from the pose provider used in a given scenario. For a real robot, this will extract pose from a Vicon broadcast and (possibly) manipulate it somewhat to get it in the right format for LTLMoP. LTLMoP represents pose as a 2-Dimensional set of (x, y, theta) in the form of a NumPy array. This is why, in the file below, we must import the numpy package.

class poseHandler:

def __init__(self, proj, shared_data):

# TODO: Initialize any network connections that might be necessary.

#

# This is only called once at the beginning of execution.

#

# Hostnames/port numbers can be loaded as proj.robot_data['XXXX_PORT'][0]

# if you stick them in the robot configuration file

#

# Note that if the same network socket needs to be shared with another handlers,

# you should initialize it in an initializationHandler and stick a reference

# to the socket in shared_data.

pass

def getPose(self):

# TODO: Find out current pose from the localization system

[pos_x, pos_y, theta] = [0, 0, 0]

return array([pos_x, pos_y, theta])

The Motion Control handler is slightly more complicated than the two above, so we will break down the skeleton code and go through it in more detail. This first block shows how we instantiate the project's motion handler. Note that we are using the project's data to extract the drive handler and pose handler. This is because we need the robot's current pose to figure out a drive command, and then we need the drive handler to send this command to. Finally, we need to load the region information to figure out where to find reference points, and to keep track of the transformation between "real" pose information and the simulation pose information which is consistent with the regions image file.

class motionControlHandler:

def __init__(self, proj, shared_data):

# Get references to handlers we'll need to communicate with

self.drive_handler = proj.drive_handler

self.pose_handler = proj.pose_handler

# Get information about regions

self.rfi = proj.rfi

self.coordmap_map2lab = proj.coordmap_map2lab

In this next part we command a robot to go to a region. Note that the function keeps track of the current and next regions to go to, and the controller behaves differently depending on the relationship between these regions. As shown in the comments below, this function should output whether or not we have arrived at the target region. Based on which region we need to go to, the positions of the region boundaries and the robot's current pose, we must then figure out a way to command the robot to move accordingly.

if current_reg == next_reg and not last:

# No need to move!

self.drive_handler.setVelocity(0, 0) # So let's stop

return True

# Find our current configuration

pose = self.pose_handler.getPose()

# TODO: Calculate a velocity vector in the *GLOBAL REFERENCE FRAME*

# that will get us on our way to the next region

# NOTE: Information about region geometry can be found in self.rfi.regions:

pointArray = [x for x in self.rfi.regions[current].getPoints()]

pointArray = map(self.coordmap_map2lab, pointArray)

[vx, vy, w] = [0, 0, 0]

Finally, we take the velocity vector computed (in a real handler, it shouldn't be all zeros) and send its two linear and one angular components to the drive handler.

# TODO: Figure out whether we've reached the destination region

arrived = False

return arrived

The drive handler would be trivial for a holonomic robot, as the exact command from the motion controller could be fed directly to the locomotion handler and the robot would move accordingly. Unfortunately for all of us, robots are non-holonomic and we must find a way for them to move as best as they can in a given direction. In the case of a two-wheeled differential drive robot (like the Pioneer), we could convert linear speed to the speeds of the two individual wheels. In the case of a legged robot we would have to come up with specialized gaits that can get it to move in several directions.

The skeleton code below finds the locomotion handler for the project and sends this manipulated control input to the handler in the last line.

def setVelocity(self, x, y, theta=0):

# TODO: Given desired translational [X,Y] velocity specified in the

# *GLOBAL REFERENCE FRAME* from the motion controller, and current robot

# orientation theta (measured counter-clockwise from the positive X-axis),

# construct a drive command for our robot that will get us as close as

# possible and send it on to the locomotion command handler

cmd = [x,y]

self.loco.sendCommand(cmd)

The locomotion command handler serves the purpose of interfacing between LTLMoP and the hardware (or software) necessary to communicate with a robot and command it to move with the control input determined by the drive handler. The block of code below shows that we can include any Hostnames or Port numbers necessary for communication as part of the robot configuration file (see next section). This way, the locomotion handler can send commands via these numbers if that is the way to communicate between them. Ideally, we would like to use the DEASL framework to communicate between these platforms.

class locomotionCommandHandler:

pass

def sendCommand(self, cmd):

# TODO: Send locomotion command ``cmd`` to the robot

pass

The sensor and actuator handlers deal with sensing and actuation of robots, not to be confused with the pose sensing and drive command actuation we have described before. For example, we can use sensor and actuator handlers to change the behavior of a robot based on extraneous information. With the original LTLMoP example that used Pioneers, these handlers dealt with a camera that could observe red or blue spheres and actuators that represented a "radio" (we could imagine this as a blinking light or sound).

The skeleton code below shows that, like the locomotion or pose handlers, we must establish any connections necessary to get this information at every iteration of the LTLMoP state machine. We can then use the sensor handler's getSensorValue function to determine the boolean (True/False) value of each sensor. This would affect the state of our experiment as defined by the LTL specifications written in the specEditor.

pass

def getSensorValue(self, sensor_name):

# TODO: Return a boolean value corresponding to the state of the sensor

# with name ``sensor_name``

#

# If such a sensor does not exist, return ``None``

return None

Similarly to the other interfacing handlers, we must keep track of any network connections necessary to send commands to the actuators of the robot. These actuators, just like the sensors, are also Boolean (True/False) variables. We set actuators based on other parameters in the state machine, as specified by the LTL specifications in specEditor. Whenever we transition to a new state that dictates the turning on/off of an actuator we will call the actuator handler's setActuator function with either a true or false argument. In this function we must therefore specify how to physically control each actuator.

class actuatorHandler:

def __init__(self, proj, shared_data):

# TODO: Initialize any network connections that might be necessary.

#

# This is only called once at the beginning of execution.

#

# Hostnames/port numbers can be loaded as proj.robot_data['XXXX_PORT'][0]

# if you stick them in the robot configuration file

#

# Note that if the same network socket needs to be shared with another handlers,

# you should initialize it in an initializationHandler and stick a reference

# to the socket in shared_data.

pass

def setActuator(self, name, val):

# TODO: Set actuator of name ``name`` to be in state ``val`` (binary).

print "(ACT) Actuator %s is now %s!" % tuple(map(str, (name, val)))

We must now tell LTLMoP which handlers to use for the experiment we wish to run. Every experiment that you save in the "Examples" folder of the LTLMoP directory has its own Region and Specification information (which we assume you are familiar with now); however, we now need a robot and lab configuration file to define which handlers we will be using. The reason why these files are detached from the handlers is that we may need to use different robots within the same laboratory, so we would only have to swap our robot file and keep our lab file intact.

The robot configuration file keeps track of the sensors and actuators of a robot, and the motion control and drive handlers which are defined by the dynamics and kinematics of the robot. In addition to specifying which handlers to use, we also incorporate any extra parameters like Hostnames or Port numbers necessary to communicate between LTLMoP and the robot. In the example file below, the Orca framework (which is now replaced by DEASL) is used to communicate between LTLMoP and the Vicon Pose information, camera sensing information and to command the Pioneer to move its wheels.

Sensors: # Available binary sensor propositions fire person hazardous_item Actions: # Available binary actuator propositions pick_up radio extinguish drop MotionControlHandler: # Module with continuous controller for moving between regions handlers.motionControl.heatController DriveHandler: # Module for converting a desired velocity vector to realistic motor commands handlers.drive.differentialDrive ### Below are settings for Scorpion08 OrcaVelocityHost: 10.0.0.195 OrcaVelocityPort: 13339 OrcaPositionGroup: 239.255.255.0 OrcaPositionPort: 40976 ColorDetectionGroup: 239.255.255.3 ColorDetectionPort: 13332

This comment, found in the skeleton Pose handler, eloquently explains how we can reference these additional parameters.

# Hostnames/port numbers can be loaded as proj.robot_data['XXXX_PORT'][0] # if you stick them in the robot configuration file

In other words, if we wanted to access, say, 'ColorDetectionGroup' and 'ColorDetectionPort' from the example above, we would need to type 'proj.robot_data['ColorDetectionGroup'][0]' and 'proj.robot_data['ColorDetectionPort'][0]' respectively. This extra '[0]' is just saying that we want the first String in the relevant line of the text file; for instance, 'proj.robot_data['ColorDetectionGroup'][0]' should return the String "239.255.255.3".

Robot files can be selected by opening "specEditor.py", then under the drop-down menu select "File -> Import -> Robot Description File". These files can be found (and should be placed) in the "Robots" folder in the LTLMoP code package.

The lab configuration file defines the rest of the information that the robot configuration file did not (that is, the remaining handlers). The sample lab configuration file below shows the information for the CKBot simulator that was integrated into LTLMoP. Note that all the handlers were named "CKBotSim[handler]" to facilitate development.

Name: # Name for this lab configuration CKBot-ODE (Local) InitializationHandler: # Initializes ODE Simulator handlers.init.CKBotSimInit PoseHandler: # Pulls Base Object's Pose from ODE handlers.pose.CKBotSimPose SensorHandler: handlers.sensor.dummySensor ActuatorHandler: handlers.actuator.CKBotSimActuator LocomotionCommandHandler: # Commands joint angles to ODE Simulator handlers.locomotionCommand.CKBotSimLocomotionCommand

In order to import this information into LTLMoP, we should (again) be running "specEditor.py". Then, select under the drop-down menu "Run -> Configure Simulation". Here you will be able to select the lab, robot, starting pose and real pose-to-LTLMoP image pose calibration parameters.