🌟 Get 120 Leads in 2.5 Minutes! 🤖

Welcome to the Google Maps Scraper, a powerful scraper designed to scrape up to 1,200 Google Maps leads in just 25 minutes. Its top features are:

- Scrapes 1,200 Google Maps leads in just 25 minutes, giving you plenty of prospects for potential sales.

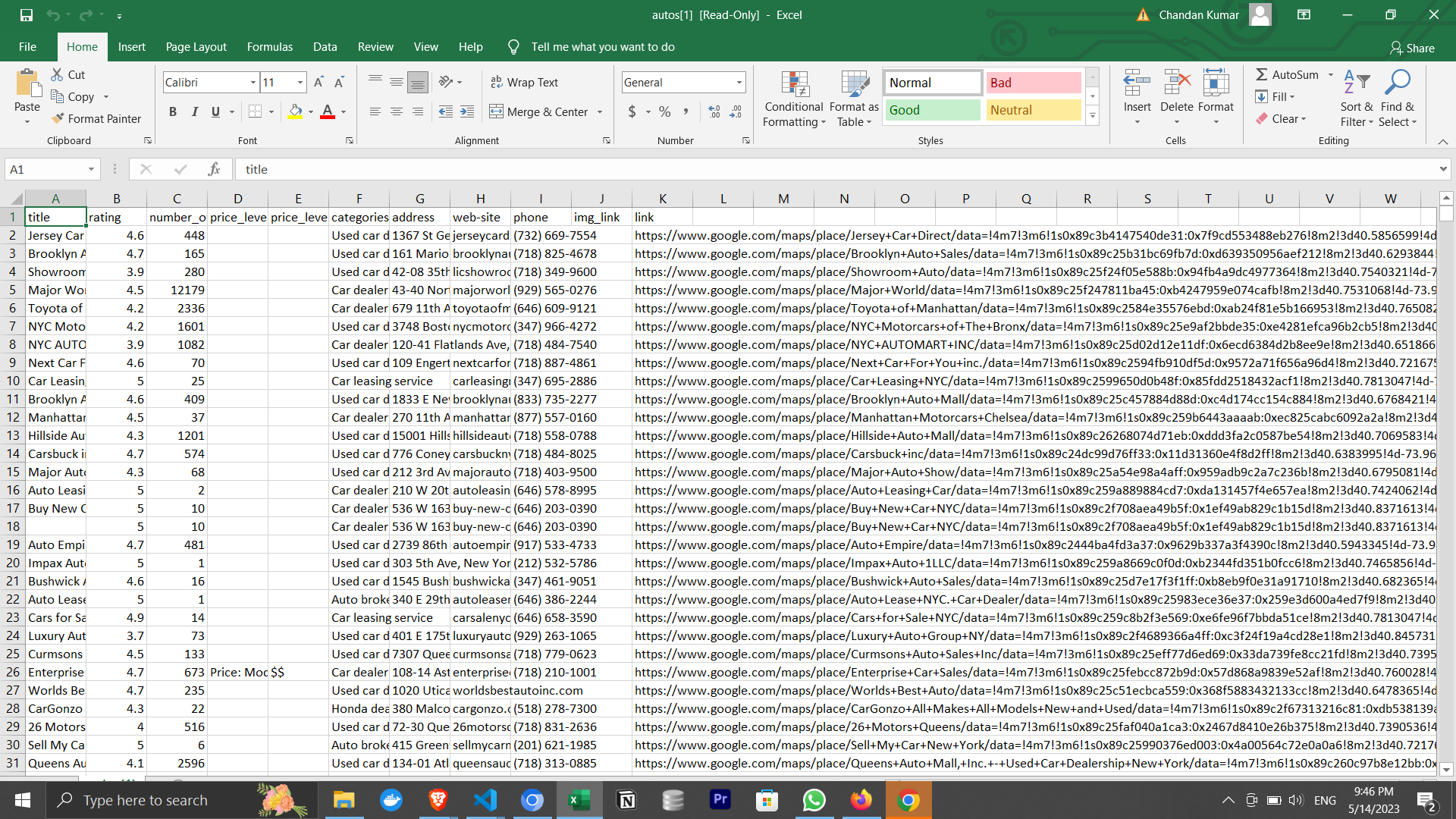

- Scrapes 30 data points, including website, phone, category, owner, geo-coordinates, and 25 more. Some of these are not publicly shown in Google Maps, so you have all the data you need.

- You can sort, select, and filter the leads to keep only those relevant to your business.

- You can scrape multiple queries in one go.

- Scrapes data in real time, so you always have the latest data.

- Saves data as both JSON and CSV for easy use.

The table below shows the most important data points generated in both the CSV and JSON formats:

If you are not a technical person or don't know how to use Git, you can follow this video to get the bot running.

Let's get started generating Google Maps leads by following these simple steps:

1️⃣ Clone the Magic 🧙‍♀️:

```bash
git clone https://github.com/omkarcloud/google-maps-scraper
cd google-maps-scraper
```

2️⃣ Install Dependencies 📦:

```bash
python -m pip install -r requirements.txt
```

3️⃣ Let the Rain of Google Maps Leads Begin 😎:

```bash
python main.py
```

Once the scraping process is complete, you can find your leads in the output directory.
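If you want to work with the leads in Python, a minimal sketch like the one below can load every CSV file found under the output directory into a list of dictionaries. It assumes the CSV files live somewhere inside `output/` and have a header row; the exact file names the scraper writes are not covered here, so the sketch simply searches recursively. The function name `load_leads` is hypothetical.

```python
import csv
from pathlib import Path


def load_leads(output_dir="output"):
    """Read every CSV file under output_dir (recursively) into a list of dicts.

    Assumption: each CSV file has a header row. The folder layout inside
    output_dir is not assumed, so all *.csv files are picked up.
    """
    leads = []
    for csv_path in sorted(Path(output_dir).rglob("*.csv")):
        # The csv module docs recommend newline="" when opening CSV files.
        with csv_path.open(newline="", encoding="utf-8") as f:
            leads.extend(csv.DictReader(f))
    return leads


if __name__ == "__main__":
    leads = load_leads()
    print(f"Loaded {len(leads)} leads")
```

Each row comes back as a dict keyed by the CSV column names, so you can filter or export it further with plain Python.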

Watch this video to see the bot in action!

A: Open the file src/config.py and comment out the line that sets the max_results parameter.

By doing so, you can scrape all the search results from Google Maps. For example, to scrape all restaurants in Delhi, modify the code as follows:

```python
queries = [
    {
        "keyword": "restaurants in delhi",
        # "max_results": 5,
    },
]
```

Note: You will be able to scrape up to 120 leads per search due to Google's scrolling limitation.

A: Open the file src/config.py and update the keyword with your desired search query.

For example, if you want to scrape data about stupas in Kathmandu 🇳🇵, modify the code as follows:

```python
queries = [
    {
        "keyword": "stupas in kathmandu",
    },
]
```

A: You have the option to apply filters to your Google Maps search results using the following parameters:

- min_rating

- min_reviews

- max_reviews

- has_phone

- has_website
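To make the meaning of these filters concrete, here is a minimal sketch of how they could be applied to a single scraped listing. It is not the scraper's actual implementation: it assumes each listing is a dict with `rating`, `reviews`, `phone`, and `website` keys (names taken from the examples in this README), that a filter left out of the query is simply skipped, and that `has_phone`/`has_website` set to `True` mean "must have a non-empty value". The function name `passes_filters` is hypothetical.

```python
def passes_filters(listing, query):
    """Return True if a listing dict satisfies the optional filters in a query.

    Hypothetical sketch: filters missing from the query are skipped, and a
    listing without a rating or review count is treated as having 0.
    """
    rating = listing.get("rating") or 0
    reviews = listing.get("reviews") or 0

    if "min_rating" in query and rating < query["min_rating"]:
        return False
    if "min_reviews" in query and reviews < query["min_reviews"]:
        return False
    if "max_reviews" in query and reviews > query["max_reviews"]:
        return False
    # has_phone / has_website: True means the field must be non-empty.
    if query.get("has_phone") and not listing.get("phone"):
        return False
    if query.get("has_website") and not listing.get("website"):
        return False
    return True


# Made-up sample listings, for illustration only.
query = {"keyword": "restaurants in delhi", "min_reviews": 5, "max_reviews": 100, "has_phone": True}
listings = [
    {"title": "Place A", "reviews": 40, "phone": "555-0100"},
    {"title": "Place B", "reviews": 3, "phone": "555-0101"},
    {"title": "Place C", "reviews": 250, "phone": None},
]
print([l["title"] for l in listings if passes_filters(l, query)])  # ['Place A']
```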

To apply filters, open src/config.py and add them to your query.

The following example scrapes only listings with at least 5 reviews, at most 100 reviews, and a phone number:

```python
queries = [
    {
        "keyword": "restaurants in delhi",
        "min_reviews": 5,
        "max_reviews": 100,
        "has_phone": True,
    },
]
```

To sort your results by reviews, rating, or title, follow these steps:

Open the file src/config.py and set the sort key.
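Conceptually, the `sort` key orders the scraped listings much like Python's built-in sorting. The sketch below only illustrates that idea under our own assumptions and is not the scraper's code: listings are dicts, `order` is `"asc"` or `"desc"`, and listings missing the sort field are placed last (that choice is ours). The function name `sort_listings` is hypothetical.

```python
def sort_listings(listings, by, order="asc"):
    """Sort listing dicts by one field; listings missing that field go last.

    Hypothetical sketch of the "sort": {"by": ..., "order": ...} option.
    """
    present = [l for l in listings if l.get(by) is not None]
    missing = [l for l in listings if l.get(by) is None]
    # list.sort is stable, so ties keep their original order.
    present.sort(key=lambda l: l[by], reverse=(order == "desc"))
    return present + missing


# Made-up sample listings, for illustration only.
listings = [
    {"title": "Place A", "reviews": 900},
    {"title": "Place B", "reviews": 1500},
    {"title": "Place C"},
]
for l in sort_listings(listings, by="reviews", order="desc"):
    print(l["title"], l.get("reviews"))
```

Splitting out listings with no value avoids comparing `None` with numbers, which would raise a `TypeError` in Python 3.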

For example, to sort by reviews in descending order, modify the code as follows:

```python
queries = [
    {
        "keyword": "stupas in kathmandu",
        "sort": {
            "by": "reviews",
            "order": "desc",
        },
    },
]
```

You can sort by any field, such as reviews, main_category, title, rating, or any other field. Here are some common sort examples:

- Sort by `reviews` count in descending order:

```python
"sort": {
    "by": "reviews",
    "order": "desc",
},
```

- Sort by `main_category` in ascending order:

```python
"sort": {
    "by": "main_category",
    "order": "asc",
},
```

- Sort by `title` in ascending order:

```python
"sort": {
    "by": "title",
    "order": "asc",
},
```

- Sort by `rating` in descending order:

```python
"sort": {
    "by": "rating",
    "order": "desc",
},
```

If you want to select specific fields to be output in your CSV and JSON files, you can do so by following these steps:

- Open `src/config.py`.

- Modify the `select` key to include the fields you want to select.
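The effect of `select` can be pictured as keeping only the named keys of each listing. This is a minimal sketch under assumptions, not the scraper's implementation: listings are dicts, `"ALL"` keeps everything, and a requested field that a listing lacks comes out as `None` (our choice). The function name `select_fields` is hypothetical.

```python
def select_fields(listing, select="ALL"):
    """Keep only the requested fields of a listing dict.

    Hypothetical sketch of the "select" option: "ALL" returns a copy of every
    field; requested fields missing from the listing are set to None.
    """
    if select == "ALL":
        return dict(listing)
    return {field: listing.get(field) for field in select}


# Made-up sample listing, for illustration only.
listing = {"title": "Place A", "rating": 4.6, "phone": "555-0100", "main_category": "Stupa"}
print(select_fields(listing, ["title", "rating", "website"]))
# {'title': 'Place A', 'rating': 4.6, 'website': None}
```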

For example, if you want to select "title", "link", "main_category", "rating", "reviews", "website", "phone", and "address", you should adjust the code as follows:

```python
queries = [
    {
        "keyword": "stupas in kathmandu",
        "select": ["title", "link", "main_category", "rating", "reviews", "website", "phone", "address"],
    },
]
```

You are free to select any field. Here are a couple of common field selections:

- Standard field selection:

```python
"select": ["title", "link", "main_category", "rating", "reviews", "website", "phone", "address"],
```

- Selection of all fields (default):

```python
"select": "ALL",
```

To boost scraping speed, the scraper launches multiple browsers simultaneously. Here's how you can increase the speed further:

- Adjust the `number_of_scrapers` variable in the configuration file. Recommended values are:
  - Standard laptop: 4 or 8
  - Powerful laptop: 12 or 16

Note: Avoid setting `number_of_scrapers` above 16. Google Maps typically returns only 120 results per search, so with more than 16 scrapers you may spend more time launching the scrapers than scraping the places. It is best to stick to 4, 8, 12, or 16.
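If you'd rather compute the value than pick it by hand, the sketch below applies the guidance above: it rounds down to a multiple of 4 and keeps the result between 4 and 16. Starting from `os.cpu_count()` (one scraper per logical CPU) is purely our own assumption, not a rule from the scraper, and the helper name `recommended_scrapers` is hypothetical; tune the value to your machine.

```python
import os


def recommended_scrapers(requested=None):
    """Clamp a scraper count to one of 4, 8, 12, or 16.

    Assumption: with no explicit request, start from the number of logical
    CPUs reported by os.cpu_count(), which can return None.
    """
    if requested is None:
        requested = os.cpu_count() or 4
    rounded = (requested // 4) * 4    # round down to a multiple of 4
    return max(4, min(16, rounded))   # keep within the 4..16 range


number_of_scrapers = recommended_scrapers()
print(number_of_scrapers)
```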

If you run into issues, such as the scraper crashing on a low-end PC, follow these recommendations:

- Reduce `number_of_scrapers` by 4.

- Ensure you have sufficient storage (at least 4 GB) available, as running multiple browser instances consumes storage space.

- Close other applications on your computer to free up memory.

Additionally, consider improving your internet speed to further enhance the scraping process.

A: Absolutely! Open src/config.py and add as many queries as you like.
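If you have a long list of locations, note that src/config.py is a regular Python file, so the `queries` list can also be generated rather than typed entry by entry. A minimal sketch, assuming each query only needs a `keyword` (the city names are just examples):

```python
# Build one query per city instead of writing each dict by hand.
cities = ["delhi", "bangalore", "mumbai"]

queries = [
    {
        "keyword": f"restaurants in {city}",
        # Other per-query options from this README (e.g. "max_results",
        # "min_reviews", "sort", "select") could be added here too.
    }
    for city in cities
]
```

The result is the same kind of list of dicts as the hand-written example, just built with a list comprehension.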

For example, if you want to scrape restaurants in both Delhi 😎 and Bangalore 👨‍💻, use the following code:

```python
queries = [
    {
        "keyword": "restaurants in delhi",
    },
    {
        "keyword": "restaurants in bangalore",
    },
]
```

Google Maps does not display the emails and social links of shop owners. However, it does show the owner's Google profile, which we scrape and store in the owner field as shown below:

```json
"owner": {
    "id": "102395794819517641473",
    "link": "https://www.google.com/maps/contrib/102395794819517641473",
    "name": "38 Barracks Restaurant and Bar (Owner)"
},
```

To obtain the shop owner's emails and social links, you have two options:

- Visit the website of each shop and manually find the contact details.

- Use the Contact Details Scraper by vdrmota, available here, to automatically scrape the contact details.

If you are using the Google Maps Scraper for prospecting and selling your products or services, we recommend manually visiting the websites of the first 100 leads. This will give you a better understanding of your prospects. Finding contact details on 100 websites can be done within 2 hours if you work with focus and speed.
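A small helper like the one below can turn your results into a checklist of the first 100 leads that actually have a website, along with the owner profile link. This is a sketch, not part of the scraper: it assumes you have already loaded the scraper's JSON output into a list of dicts (for example with `json.load`) and that listings use the `title`, `website`, and `owner` fields described in this README. The function name `websites_to_visit` is hypothetical.

```python
def websites_to_visit(listings, limit=100):
    """Return (title, website, owner profile link) tuples for the first
    `limit` listings that have a non-empty website.

    Hypothetical helper; field names follow the examples in this README.
    """
    rows = []
    for listing in listings:
        if len(rows) >= limit:
            break
        if not listing.get("website"):
            continue  # nothing to visit manually
        owner = listing.get("owner") or {}
        rows.append((listing.get("title"), listing["website"], owner.get("link")))
    return rows


# Made-up sample listings, for illustration only.
sample = [
    {"title": "Place A", "website": "https://example.com",
     "owner": {"link": "https://www.google.com/maps/contrib/123"}},
    {"title": "Place B", "website": None},
]
for title, website, owner_link in websites_to_visit(sample):
    print(title, website, owner_link)
```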

Once you have validated the success of your Google Maps scraper strategy, you can scale up your efforts using the Contact Details Scraper, which is also quite affordable.

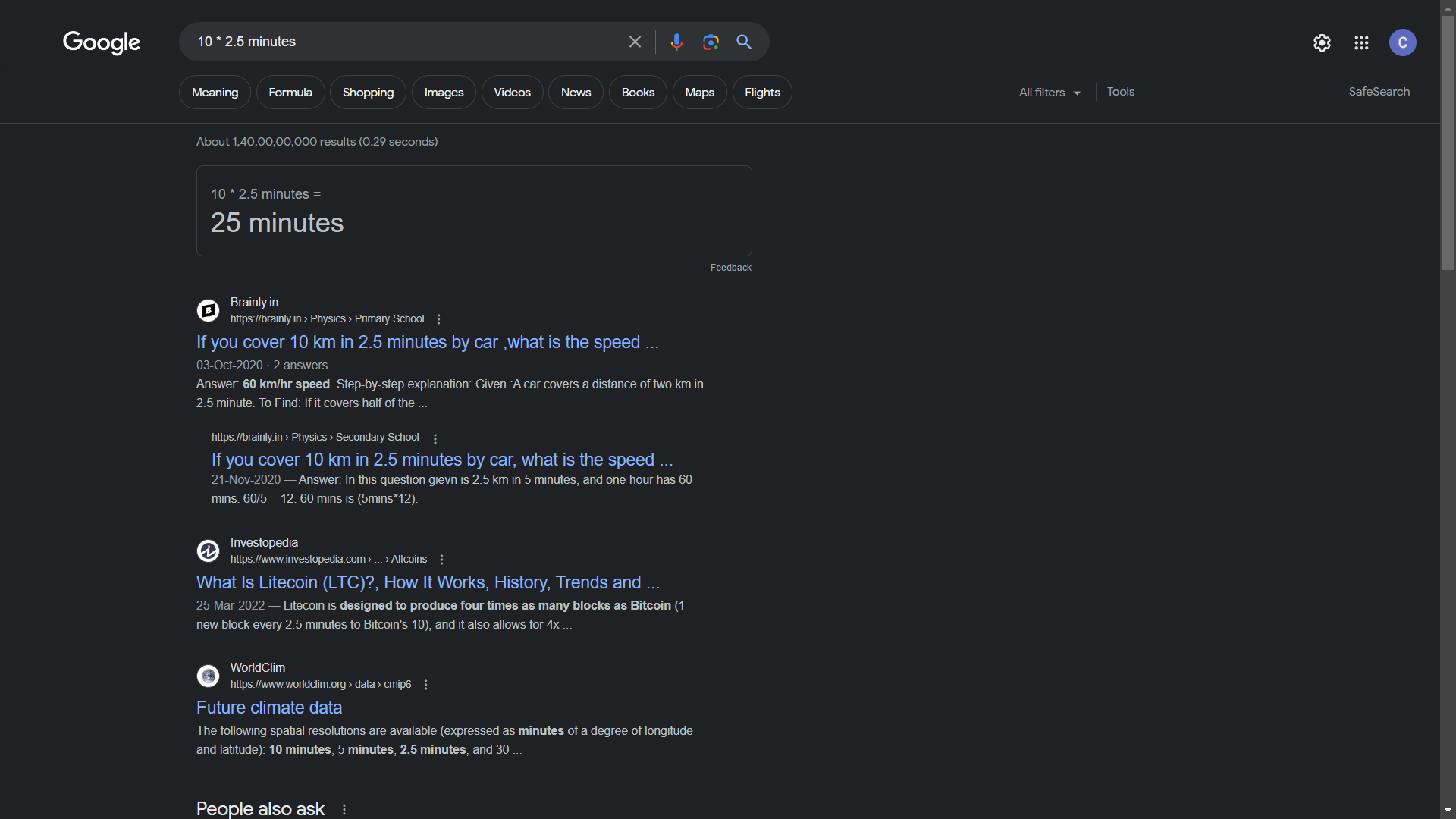

On average, each Google Maps search gives 120 listings. It takes approximately 2.5 minutes to scrape these 120 listings.

To estimate how long it takes to scrape n searches, substitute n with the number of searches you want to run in the formula below (you can paste it into Google Search to calculate it):

n * 2.5 minutes

For example, scraping 10 Google Maps queries (about 1,200 listings) takes around 25 minutes.
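The same estimate in Python, as a small sketch. The 2.5-minute figure is the average quoted above; actual time depends on your machine, internet speed, and scraper settings. The helper name `estimate_scrape_time` is hypothetical.

```python
MINUTES_PER_SEARCH = 2.5  # average quoted in this README; real runs vary


def estimate_scrape_time(n_searches):
    """Return (minutes, hours) needed for n searches at ~2.5 minutes each."""
    minutes = n_searches * MINUTES_PER_SEARCH
    return minutes, minutes / 60


minutes, hours = estimate_scrape_time(10)
print(f"{minutes:g} minutes (~{hours:.2f} hours)")  # 25 minutes (~0.42 hours)
```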

1. Run the following command to build the Docker image:

```bash
docker-compose build
```

2. Run the following command to start the container:

```bash
docker-compose up
```

The scraped data will be stored in the output folder. If you make changes to config.py, such as changing the search query, repeat steps 1 and 2.

A: Most people scrape Google Maps listings to sell things!

For example, you can search for restaurants in Amritsar and pitch your web development services to them.

You can also find real estate listings in Delhi and promote your exceptional real estate software.

Google Maps is seriously a great platform to find B2B customers to sell things to!

Would you help a complete stranger if it didn’t cost money and you didn’t get any credit?

If you answered yes, please star our repository ⭐ at https://github.com/omkarcloud/google-maps-scraper/. It will help one more entrepreneur like you change their life for the better :)