Suppose a hypothetical music streaming company has decided that it is time to introduce more automation and monitoring to their data warehouse ETL pipelines. In this project, I use Apache Airflow to create a dynamic data pipeline that is built from reusable tasks, can be monitored, and allow easy backfills. The resulting tasks and DAG structure is shown below.

The source data resides in S3 and needs to be processed in a data warehouse in Amazon Redshift. The source datasets consist of JSON logs that tell about user activity in the application and JSON metadata about the songs the users listen to.

The chosen database structure is a star schema with songplays as its fact table, and users, songs, artist, and time, as its dimension tables. A star schema is a denormalized database design which allows for simplified queries and faster aggregations on the data. This structure is ideal for OLAP operations such as rolling-up, drilling-down, slicing, and dicing.

The data used comes from two datasets that reside in S3:

Song data: s3://udacity-dend/song_data

Log data: s3://udacity-dend/log_data

The first dataset is a subset of real data from the Million Song Dataset. Each file is in JSON format and contains metadata about a song and the artist of that song. The files are partitioned by the first three letters of each song's track ID. For example, here are filepaths to two files in this dataset.

song_data/A/B/C/TRABCEI128F424C983.json

song_data/A/A/B/TRAABJL12903CDCF1A.json

And below is an example of what a single song file, TRAABJL12903CDCF1A.json, looks like.

{"num_songs": 1, "artist_id": "ARJIE2Y1187B994AB7", "artist_latitude": null, "artist_longitude": null, "artist_location": "", "artist_name": "Line Renaud", "song_id": "SOUPIRU12A6D4FA1E1", "title": "Der Kleine Dompfaff", "duration": 152.92036, "year": 0}

The second dataset consists of listener log files in JSON format generated by this event simulator based on the songs in the dataset above. These simulate app activity logs from an imaginary music streaming app based on configuration settings.

The log files in the dataset are partitioned by year and month. For example, here are filepaths to two files in this dataset.

log_data/2018/11/2018-11-12-events.json

log_data/2018/11/2018-11-13-events.json

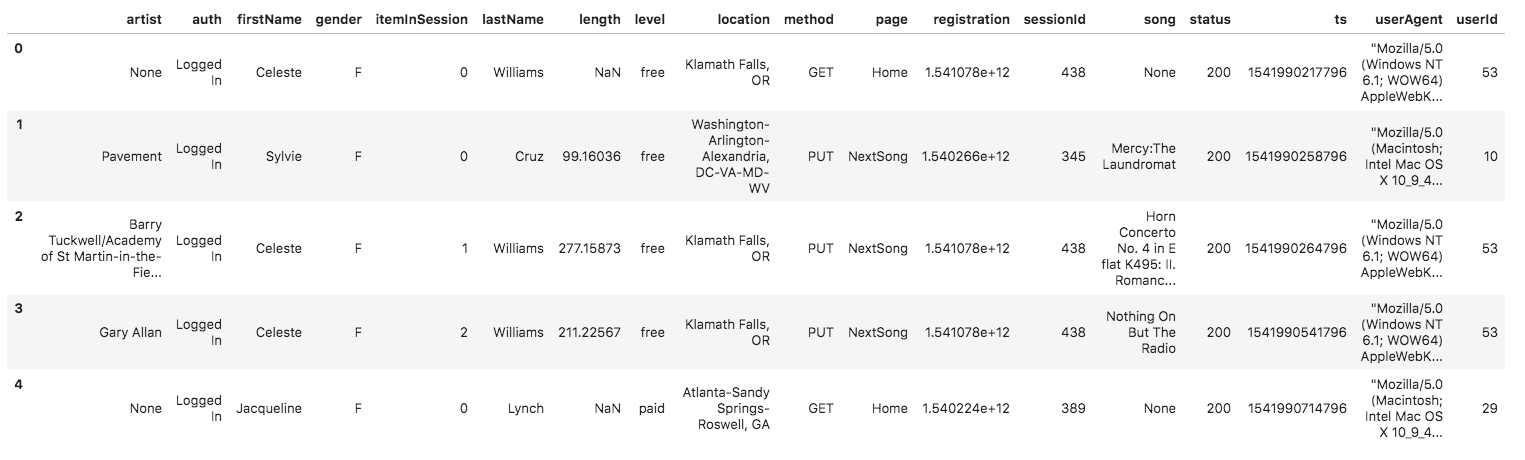

And below is an example of what the data in a log file, 2018-11-12-events.json, looks like.

In order to setup this pipeline, several steps must be taken. An AWS Redshift cluster must be configured and running. Airflow must be configured with the necessary connections to connect to the Redshift cluster. The initial tables for the database must be created using the Redshift Query Editor. The SQL commands to create all the necessary tables are provided in create_tables.sql.