
Can't run a single linear layer #128

Comments

Can you post the ONNX file here?

The 1 and 2 in the file names correspond to the two different errors mentioned above.

@pixelspark Any luck replicating?

As for the first problem, long story short: WONNX does not implement Gemm when transA/transB != 0. The error seems to be the result of this check:

```rust
let dim_n = output_shape.dim(1);
//...
if dim_n != input_right_shape.dim(1) || dim_k != input_right_shape.dim(0) {
    return Err(CompileError::InvalidInputShape {
        input_index: 1,
        input_shape: input_right_shape.clone(),
    });
}
```

This check follows from the spec. Note that when transA or transB is non-zero (as it is in your example), the constraints are transposed. WONNX should throw an error earlier if it detects a non-zero transA or transB, but for some reason it doesn't in this case (according to Netron, transB == 1).
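A minimal sketch of the shape rule described above, assuming 2-D inputs: transA/transB are applied first, and only then are the inner dimensions compared. `gemm_output_shape` is a hypothetical helper, not WONNX's API, and the batch size of 1 for input A is an assumption (Error 1 only reports B's shape, `[10, 784]`).

```python
# Hypothetical helper (not WONNX code): validates Gemm input shapes per the
# ONNX spec by applying transA/transB before comparing dimensions.

def gemm_output_shape(a_shape, b_shape, trans_a=0, trans_b=0):
    """Return (M, N) for Y = A' * B', where A' and B' are A and B,
    transposed when trans_a / trans_b are non-zero."""
    if len(a_shape) != 2 or len(b_shape) != 2:
        raise ValueError("Gemm inputs A and B must be 2-D")
    # A' must have shape (M, K).
    m, k = (a_shape[1], a_shape[0]) if trans_a else (a_shape[0], a_shape[1])
    # B' must have shape (K, N).
    k_b, n = (b_shape[1], b_shape[0]) if trans_b else (b_shape[0], b_shape[1])
    if k != k_b:
        raise ValueError(f"inner dimensions differ: A' has K={k}, B' has K={k_b}")
    return (m, n)


# B from Error 1 is [10, 784]; per Netron, transB == 1. Batch size 1 is assumed.
print(gemm_output_shape([1, 784], [10, 784], trans_b=1))  # (1, 10)

# Ignoring transB reproduces the kind of mismatch WONNX reports for input 1.
try:
    gemm_output_shape([1, 784], [10, 784], trans_b=0)
except ValueError as err:
    print("rejected:", err)  # rejected: inner dimensions differ: A' has K=784, B' has K=10
```

With transB honoured, the `[10, 784]` weight is a valid 784-to-10 projection; read without the transpose, the same tensor fails the check.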

|

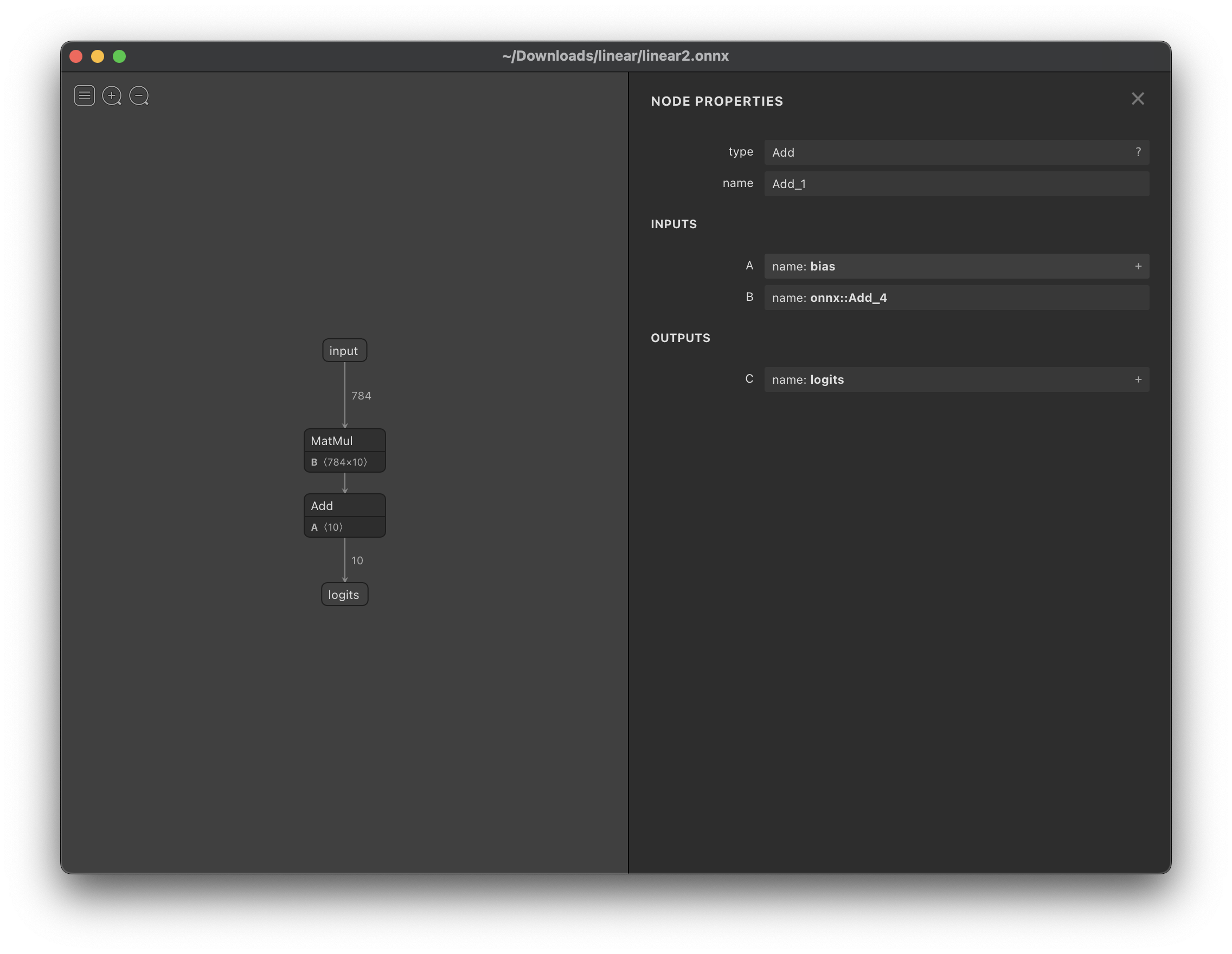

As for the second error: it seems something is just wrong with the ONNX file. Netron also has difficulty finding the type for IIUC the name |

@pixelspark Thanks for the explanation, but I'm still not sure how exactly to resolve the issue. Do you have any idea why PyTorch might be exporting these incorrectly? Is there something wrong in my export script's configuration, for example?

I am having the same issue. Any ideas?

@JulienSiems I got the same issue as @Ryul0rd. I think this is due to PyTorch's implementation of

```python
def __init__(self, ...) -> None:
    ...
    self.weight = Parameter(
        torch.empty((out_features, in_features), **factory_kwargs)
    )
    ...
```

and its

```python
def forward(self, input: Tensor) -> Tensor:
    return F.linear(input, self.weight, self.bias)
```

which is equivalent to:

```python
import math

import torch
from torch import Tensor
from torch.nn import Parameter, init


class LinearCustom(torch.nn.Module):
    __constants__ = ["in_features", "out_features"]
    in_features: int
    out_features: int
    weight: Tensor

    def __init__(
        self,
        in_features: int,
        out_features: int,
        bias: bool = True,
        device=None,
        dtype=None,
    ) -> None:
        factory_kwargs = {"device": device, "dtype": dtype}
        super().__init__()
        self.in_features = in_features
        self.out_features = out_features
        self.weight = Parameter(
            # torch.empty((out_features, in_features), **factory_kwargs)  <-- change this
            torch.empty((in_features, out_features), **factory_kwargs)  # <-- to this
        )
        if bias:
            self.bias = Parameter(torch.empty(out_features, **factory_kwargs))
        else:
            self.register_parameter("bias", None)
        self.reset_parameters()

    def reset_parameters(self) -> None:
        # The weight is now stored as (in_features, out_features), so its fan-in
        # is dim 0; mode="fan_out" makes PyTorch read dim 0, matching nn.Linear's init.
        init.kaiming_uniform_(self.weight, a=math.sqrt(5), mode="fan_out")
        if self.bias is not None:
            fan_in = self.in_features
            bound = 1 / math.sqrt(fan_in) if fan_in > 0 else 0
            init.uniform_(self.bias, -bound, bound)

    def forward(self, input: Tensor) -> Tensor:
        # return F.linear(input, self.weight, self.bias)  <-- change this
        output = torch.matmul(input, self.weight)  # <-- to this
        # Only add the bias when the layer was created with bias=True.
        return output + self.bias if self.bias is not None else output

    def extra_repr(self) -> str:
        return "in_features={}, out_features={}, bias={}".format(
            self.in_features, self.out_features, self.bias is not None
        )
```

I still need to run …

@pixelspark I'm wondering how many changes would be required to implement handling for the case of …
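The idea behind `LinearCustom` is that storing the weight transposed does not change the math: `F.linear` computes x·Wᵀ + b with W shaped (out_features, in_features), while `matmul(x, Wᵀ) + b` with the weight stored as (in_features, out_features) gives the same result. The plain-Python sketch below (no PyTorch needed, small made-up matrices, hypothetical helper names) checks that identity. The same identity, Gemm(A, B, transB=1) = Gemm(A, Bᵀ, transB=0), suggests one possible way a runtime could handle transB when B is a constant initializer: transpose the stored weights once at load time. That is an assumption about a possible approach, not something WONNX is known to do.

```python
# Plain-Python sketch: F.linear-style layout vs. LinearCustom-style layout.

def transpose(m):
    """Swap rows and columns of a list-of-lists matrix."""
    return [list(row) for row in zip(*m)]


def matmul(a, b):
    """Naive matrix product of list-of-lists matrices a (M x K) and b (K x N)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def add_bias(y, bias):
    """Add a length-N bias vector to every row of y (M x N)."""
    return [[v + bv for v, bv in zip(row, bias)] for row in y]


def linear(x, weight, bias):
    """Mirrors torch.nn.functional.linear: x @ weight.T + bias,
    with weight shaped (out_features, in_features)."""
    return add_bias(matmul(x, transpose(weight)), bias)


def linear_custom(x, weight_t, bias):
    """Mirrors LinearCustom.forward: x @ weight_t + bias,
    with weight_t shaped (in_features, out_features)."""
    return add_bias(matmul(x, weight_t), bias)


x = [[1, 2, 3]]                # batch of 1, in_features = 3
w = [[1, 0, -1], [2, 1, 0]]    # (out_features = 2, in_features = 3)
b = [0.5, -1]

print(linear(x, w, b))                                      # [[-1.5, 3]]
print(linear_custom(x, transpose(w), b) == linear(x, w, b))  # True
```

One consequence of this design: weights trained with a regular `nn.Linear` would need to be transposed (for example with `.t()`) before being copied into `LinearCustom`.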

Describe the bug

I tried to export a single linear layer from PyTorch and got one of the following errors.

Error 1:

```
GpuError(CompileError { node: "Gemm_0", error: InvalidInputShape { input_index: 1, input_shape: Shape { dims: [10, 784], data_type: F32 } } })
```

Error 2:

```
IrError(OutputNodeNotFound("onnx::Add_4"))
```

I viewed the resulting ONNX file at netron.app and it appeared to be correct.
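For reference, the ONNX specification defines Gemm as below, with transA/transB transposing the inputs before the shape constraints apply (α and β default to 1):

$$
Y = \alpha\, A' B' + \beta\, C,\qquad
A' = \begin{cases} A^{\top} & \text{if } \mathrm{transA} \neq 0 \\ A & \text{otherwise} \end{cases},\qquad
B' = \begin{cases} B^{\top} & \text{if } \mathrm{transB} \neq 0 \\ B & \text{otherwise} \end{cases}
$$

$$
A' \in \mathbb{R}^{M \times K},\quad B' \in \mathbb{R}^{K \times N},\quad Y \in \mathbb{R}^{M \times N}
$$

Taking A = x, B = W, C = b and transB = 1 recovers the linear layer, with W stored as (out_features, in_features). Using the shape from Error 1 (the batch size M is not given in the issue):

$$
y = x\,W^{\top} + b,\qquad x \in \mathbb{R}^{M \times 784},\quad W \in \mathbb{R}^{10 \times 784},\quad y \in \mathbb{R}^{M \times 10}
$$

So `[10, 784]` is a valid B for transB = 1 (K = 784, N = 10); a check that skips the transpose reads K = 10 and rejects input 1.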

To Reproduce

Expected behavior

The model should load successfully.

Desktop

PopOS 20.04
