
Can it work with a single image? #17

Comments

I'm running into the same issue: the output is grayscale when a single frame is the input. Have you solved this?

I'm running into this issue too. Has anyone gotten it to work?

Dr. Zhao, have you solved this problem?

You have to emulate multiple frames by duplicating the image so that the temporal convolutions work. Even then, the network cannot use colors from the reference images if they differ significantly from the grayscale image.
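The duplication trick above can be sketched with NumPy as follows. This is a minimal illustration, not the project's own preprocessing: it assumes the model takes a 5-D tensor laid out as (batch, channel, time, height, width) and that 5 frames are enough to cover the temporal kernels. Both the layout and the helper name `make_pseudo_clip` are hypothetical, so check them against the model's actual input code.

```python
import numpy as np


def make_pseudo_clip(frame: np.ndarray, num_frames: int = 5) -> np.ndarray:
    """Repeat one grayscale frame along a new time axis.

    frame: 2-D array of shape (H, W).
    Returns shape (1, 1, T, H, W), i.e. (batch, channel, time, height, width).
    ASSUMPTION: this layout and the default num_frames are illustrative only.
    """
    if frame.ndim != 2:
        raise ValueError("expected a single-channel (H, W) frame")
    # (H, W) -> (1, H, W) -> (T, H, W): every time step is the same image
    clip = np.repeat(frame[np.newaxis, :, :], num_frames, axis=0)
    # Add batch and channel axes in front: (1, 1, T, H, W)
    return clip[np.newaxis, np.newaxis]


gray = np.random.rand(64, 48).astype(np.float32)
clip = make_pseudo_clip(gray, num_frames=5)
print(clip.shape)  # (1, 1, 5, 64, 48)
```

After running the model on such a clip, one would typically keep a single output frame (for example the middle one, which is least affected by temporal padding) as the colorized result.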

I wrote some simple code to run the model on a single image, but the result is still gray!

Minimal demo:

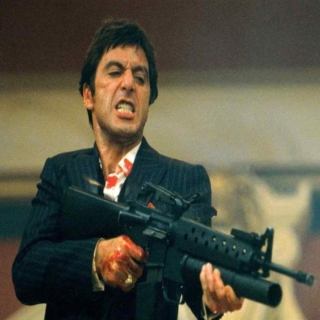

Input image:

Output:

**Note:** the reference image is the same as the input.
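To check whether an output is truly gray rather than faintly tinted, one option is to measure how far apart the color channels are. The sketch below is a hypothetical diagnostic, not part of the project: it assumes the output has been loaded as a float RGB array of shape (H, W, 3) with values in [0, 1], and the tolerance of `1e-2` is an arbitrary choice.

```python
import numpy as np


def chroma_spread(rgb: np.ndarray) -> float:
    """Largest absolute difference between any two color channels.

    rgb: float array of shape (H, W, 3), values in [0, 1] (assumed).
    A value near 0 means R == G == B at every pixel, i.e. a gray image.
    """
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    return float(max(np.abs(r - g).max(),
                     np.abs(g - b).max(),
                     np.abs(r - b).max()))


def looks_grayscale(rgb: np.ndarray, tol: float = 1e-2) -> bool:
    # tol is a hypothetical threshold; tune it for your data
    return chroma_spread(rgb) < tol


# A gray image: the same single channel copied into R, G and B
gray_rgb = np.repeat(np.random.rand(4, 4, 1), 3, axis=2)
# The same image with the red channel shifted, so it is no longer gray
colored = gray_rgb.copy()
colored[..., 0] += 0.5

print(looks_grayscale(gray_rgb), looks_grayscale(colored))  # True False
```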
