
HowTo: fasterq dump

The fasterq-dump tool uses temporary files and multi-threading to speed up the extraction of FASTQ-files or FASTA-files from SRA-accessions. Be aware that the commandline-parameters are similar to the older fastq-dump, but not identical.

If a minimal commandline is given:

$fasterq-dump SRR000001

The tool produces output files named 'SRR000001.fastq', 'SRR000001_1.fastq' and 'SRR000001_2.fastq' in the current directory. It also creates a temporary directory named 'fasterq.tmp.host.procid' in the current directory, where 'host' and 'procid' are replaced by the hostname of your computer and the process ID. After the extraction is finished, this directory and its contents are deleted. The temporary directory can take up to approximately 10 times the size of the final output file. If the current directory does not have enough space for both the output files and the temporary files, the tool will fail.

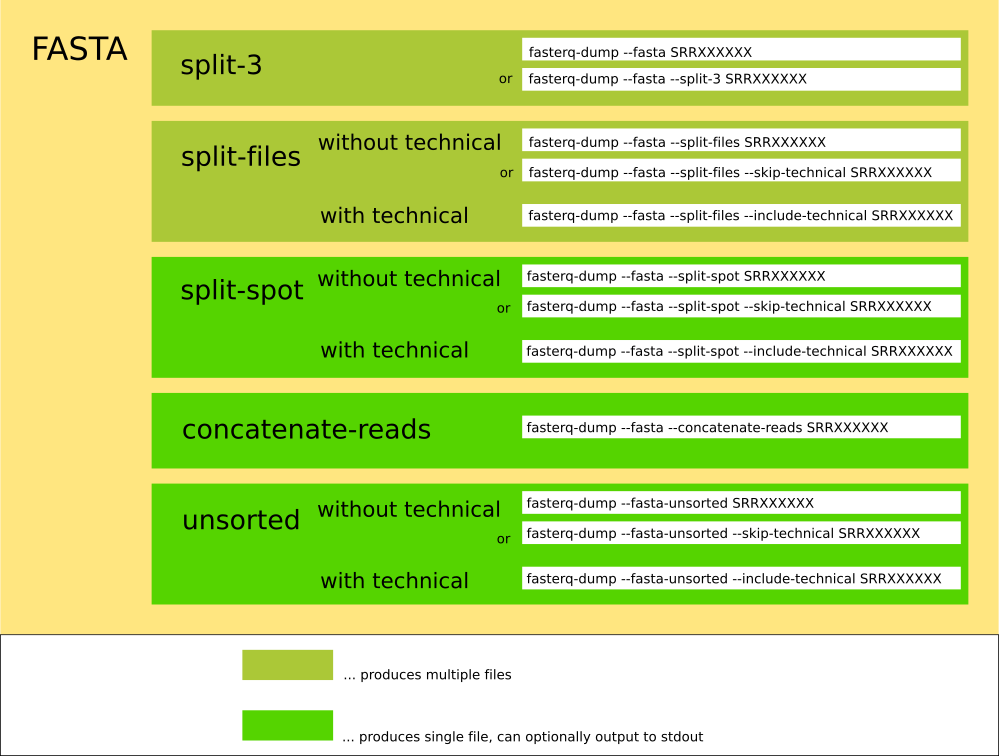

Fasterq-dump can operate in different modes:

[Figures: fasterq-dump operating modes]

The location (output directory) of the output-files can be changed:

$fasterq-dump SRR000001 -O /mnt/big_hdd

If parts of the output-path do not exist, they will be created. If the output-files already exist, the tool will not overwrite them, but fail instead. If you want already existing output-files to be overwritten, use the force option -f.

The location of the temporary directory can be changed too:

$fasterq-dump SRR000001 -O /mnt/big_hdd -t /tmp/scratch

Now the temporary files will be created in the '/tmp/scratch' directory. These temporary files will be deleted on finish, but the directory itself will not be deleted. If the temporary directory does not exist, it will be created.

It helps speed if the output path and the scratch path are on different file systems. For instance, it is a good idea to point the temporary directory to an SSD if one is available, or to a RAM-disk like /dev/shm if enough RAM is available.
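To check whether the output and scratch directories really are on different file systems, you can compare their mount points. The following is a minimal POSIX sh sketch; mount_point and same_filesystem are hypothetical helper names, not part of sra-tools. It assumes mount points without spaces (it reads column 6 of the portable df -P output), and both directories must already exist, so create them first.

```shell
#!/bin/sh
# Hypothetical helpers: detect whether two existing directories share a file system.

# mount_point DIR: print the mount point of the file system holding DIR.
# `df -P` prints one line per file system; column 6 is "Mounted on"
# (assumes the mount point contains no spaces).
mount_point() {
    df -P "$1" | awk 'NR == 2 { print $6 }'
}

# same_filesystem DIR1 DIR2: succeed if both directories have the same mount point.
same_filesystem() {
    [ "$(mount_point "$1")" = "$(mount_point "$2")" ]
}

# Example (not run here):
#   mkdir -p /mnt/big_hdd /tmp/scratch
#   same_filesystem /mnt/big_hdd /tmp/scratch && echo "output and scratch share a file system" >&2
#   fasterq-dump SRR000001 -O /mnt/big_hdd -t /tmp/scratch
```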

Another factor is the number of threads. If no option is given (as above), the tool uses 6 threads. If you have more CPU cores, it might help to increase this number with the -e option; for instance, -e 8 increases the thread count to 8. However, even on a computer with many more CPU cores, increasing the thread count can give diminishing returns, because the I/O bandwidth becomes the limit. You can test your speed by measuring how long it takes to convert a smaller accession, like this:

$time fasterq-dump SRR000001 -t /dev/shm

$time fasterq-dump SRR000001 -t /dev/shm -e 8

$time fasterq-dump SRR000001 -t /dev/shm -e 10

Don't forget to repeat the commands at least 2 times, to exclude other influences like caching or network load.
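A loop can automate these timing runs. This is a minimal POSIX sh sketch, not an official benchmark script: bench is a hypothetical helper, the timing uses whole seconds from date +%s (available in GNU and BSD date, coarse but adequate for runs that take minutes), and -f is passed because repeated runs would otherwise fail on the already existing output files. Setting FASTERQ_DUMP to another command (for example true) gives a dry run.

```shell
#!/bin/sh
# Sketch: time fasterq-dump with several thread counts, repeating each run.
# FASTERQ_DUMP can be overridden, e.g. FASTERQ_DUMP=true for a dry run.
FASTERQ_DUMP=${FASTERQ_DUMP:-fasterq-dump}

# bench ACCESSION OUTDIR SCRATCH REPEATS THREADS...
bench() {
    acc=$1; out=$2; scratch=$3; repeats=$4
    shift 4
    for e in "$@"; do
        i=1
        while [ "$i" -le "$repeats" ]; do
            start=$(date +%s)
            # -f overwrites the output files left by the previous run
            "$FASTERQ_DUMP" "$acc" -O "$out" -t "$scratch" -e "$e" -f >/dev/null 2>&1
            status=$?
            end=$(date +%s)
            echo "threads=$e run=$i seconds=$((end - start)) exit=$status"
            i=$((i + 1))
        done
    done
}
```

For example, bench SRR000001 . /dev/shm 2 6 8 10 runs each of the thread counts 6, 8 and 10 twice.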

To detect how many cpu-cores your machine has:

on Linux: $nproc --all

on Mac: $/usr/sbin/sysctl -n hw.ncpu
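The two commands can be combined into one portable helper, for example to choose the thread counts to try with -e. A minimal POSIX sh sketch; cpu_cores is a hypothetical name, and it falls back to 1 if neither command works.

```shell
#!/bin/sh
# cpu_cores: print the number of CPU cores.
# Tries `nproc --all` (Linux) first, then `sysctl -n hw.ncpu` (Mac);
# prints 1 if neither command succeeds.
cpu_cores() {
    nproc --all 2>/dev/null || /usr/sbin/sysctl -n hw.ncpu 2>/dev/null || echo 1
}

echo "CPU cores: $(cpu_cores)"
```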

The tool can create different formats:

| Output format | Option |
|---|---|
| The spots are split into (biological) reads; for each read, 4 lines of FASTQ are written. For spots with 2 reads, the reads are written into the \*_1.fastq and \*_2.fastq files. Unmated reads are placed in \*.fastq. If the accession has no spots with a single read, the \*.fastq file is not created. | This is the default (no option is necessary) |
| The spots are split into reads; for each read, 4 lines of FASTQ are written into one output file. | --split-spot ( -s ) |
| The spots are split into reads; for each read, 4 lines of FASTQ are written, with each n-th read going into a different file. | --split-files ( -S ) |
| The spots are not split; for each spot, 4 lines of FASTQ are written into one output file. | --concatenate-reads |
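Because every record (a read, or a whole spot with --concatenate-reads) takes exactly 4 lines of FASTQ, the number of records in an output file is its line count divided by 4. A minimal POSIX sh sketch; count_records is a hypothetical helper, and it assumes unwrapped FASTQ (one sequence line and one quality line per record), as the 4-lines-per-read description above implies.

```shell
#!/bin/sh
# count_records FILE: print the number of FASTQ records in FILE.
# Each record is exactly 4 lines (header, sequence, '+', quality), so
# records = lines / 4. Assumes no line-wrapping inside a record.
count_records() {
    awk 'END { print NR / 4 }' "$1"
}
```

For the default mode on a paired accession, the counts of the \*_1.fastq and \*_2.fastq files together would be expected to match the 'reads written' value printed with the progress bar; for SRR341578 (described below) that would mean 7,549,706 records per file.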

It is possible to exhaust the space on your file system while converting large accessions. This happens more often with this tool because it uses additional scratch space to increase speed. It is a good idea to perform some simple checks before you start the conversion. First, you should know how big an accession is. Let us use the accession SRR341578 as an example:

$vdb-dump --info SRR341578

will give you a lot of information about this accession. The important line is the 3rd one: 'size : 932,308,473'. After running fasterq-dump without any other options, you will have these FASTQ files in your current directory: 'SRR341578_1.fastq' and 'SRR341578_2.fastq', each with a file size of 2,109,473,264 bytes. In this case the accession has been inflated by a factor of approximately 4.5 (2 × 2,109,473,264 bytes of output from a 932,308,473-byte accession). But that is not all: the tool will need approximately the same amount again as scratch space. As a rule of thumb, you should have about 8x to 10x the size of the accession available on your file system. How do you know how much space is available? Just run this command on Linux or Mac:

$df -h .

Under the 4th column ( 'Avail' ), you see the amount of space you have available.

Filesystem Size Used Avail Use% Mounted on

server:/vol/export/user 20G 15G 5.9G 71% /home/user

This user has only 5.9 gigabytes available, which is not enough space in their home directory. Either delete some files, or perform the conversion in a different location with more space.

If you want to use a virtual 'RAM-drive' as scratch space, for instance, check how much space it has. (Whether you have such a device, and how big it is, depends on your system administrator!)

$df -h /dev/shm

If you have enough space there, run the tool:

$fasterq-dump SRR341578 -t /dev/shm
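The space checks above can be scripted. This is a minimal POSIX sh sketch under stated assumptions: the 'size : ...' line of vdb-dump --info is assumed to look exactly as shown above, the 10x factor is the rule of thumb from this page, and accession_size, needed_bytes and enough_space are hypothetical helpers, not part of sra-tools. It uses df -Pk instead of df -h so the available space comes out as a plain number of KiB that can be compared.

```shell
#!/bin/sh
# accession_size: read `vdb-dump --info` output on stdin and print the number
# from the 'size : 932,308,473' line without commas (line format assumed).
accession_size() {
    awk -F: '$1 ~ /^[ \t]*size[ \t]*$/ { gsub(/[ ,]/, "", $2); print $2; exit }'
}

# needed_bytes SIZE [FACTOR]: rule of thumb, FACTOR (default 10) x accession size.
needed_bytes() {
    echo $(( $1 * ${2:-10} ))
}

# enough_space DIR BYTES: succeed if DIR has at least BYTES available.
# `df -Pk` column 4 is the available space in KiB.
enough_space() {
    kb=$(df -Pk "$1" | awk 'NR == 2 { print $4 }')
    [ $(( kb * 1024 )) -ge "$2" ]
}
```

For SRR341578 the estimate is 10 × 932,308,473 = 9,323,084,730 bytes, so the 5.9G home directory from the df example above would fail this check:

$enough_space . "$(needed_bytes "$(vdb-dump --info SRR341578 | accession_size)")" || echo "not enough space" >&2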

To show information about the progress of the conversion, you can activate a progress bar with the -p option:

$fasterq-dump SRR341578 -t /dev/shm -p

The conversion happens in multiple steps, depending on the internal type of the accession. You will see either 2 or 3 progress bars, one after the other. The full output with progress bars for a cSRA accession like SRR341578 looks like this:

lookup :|-------------------------------------------------- 100.00%

merge : 13255208

join :|-------------------------------------------------- 100.00%

concat :|-------------------------------------------------- 100.00%

spots read : 7,549,706

reads read : 15,099,412

reads written : 15,099,412

for a flat table like SRR000001 it looks like this:

join :|-------------------------------------------------- 100.00%

concat :|-------------------------------------------------- 100.00%

spots read : 470,985

reads read : 470,985

reads written : 470,985

Because the defaults of fasterq-dump differ from those of fastq-dump (and are more meaningful), here is a list of equivalent command lines; in each pair, the fasterq-dump version will be faster:

| fastq-dump | fasterq-dump |
|---|---|
| fastq-dump SRRXXXXXX --split-3 --skip-technical | fasterq-dump SRRXXXXXX |
| fastq-dump SRRXXXXXX --split-spot --skip-technical | fasterq-dump SRRXXXXXX --split-spot |
| fastq-dump SRRXXXXXX --split-files --skip-technical | fasterq-dump SRRXXXXXX --split-files |
| fastq-dump SRRXXXXXX | fasterq-dump SRRXXXXXX --concatenate-reads --include-technical |

Here are some important differences from fastq-dump:

- The -Z|--stdout option does not work for split-3 and split-files. The tool falls back to producing files in these cases.
- There is no --gzip|--bzip2 option; you have to compress your files explicitly after they have been written.
- There is no -A option for the accession; just specify the accession or the absolute path directly.
- fasterq-dump does not take multiple accessions, just one.
- There are no -N|--minSpotId and -X|--maxSpotId options. fasterq-dump version 2.9.1 always processes the whole accession, although it may support partial access in future versions.
- fasterq-dump is available for all 3 platforms: Linux, MacOS, and Windows.
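Since fasterq-dump takes one accession at a time and has no --gzip option, a small loop can process several accessions and compress the results afterwards. This is a minimal POSIX sh sketch: dump_and_gzip is a hypothetical helper, it assumes the default output names (ACC.fastq, ACC_1.fastq, ACC_2.fastq, ...) in the output directory, and FASTERQ_DUMP can be set to another command for a dry run.

```shell
#!/bin/sh
# Sketch: convert several accessions one by one, then gzip their FASTQ files.
# FASTERQ_DUMP can be overridden for testing.
FASTERQ_DUMP=${FASTERQ_DUMP:-fasterq-dump}

# dump_and_gzip OUTDIR ACCESSION...
dump_and_gzip() {
    outdir=$1
    shift
    for acc in "$@"; do
        # one accession per call; stop at the first failure
        "$FASTERQ_DUMP" "$acc" -O "$outdir" || return 1
        # explicit patterns avoid matching longer accessions with the same prefix
        for f in "$outdir/$acc".fastq "$outdir/$acc"_*.fastq; do
            if [ -f "$f" ]; then
                gzip -f "$f" || return 1
            fi
        done
    done
}
```

For example: $dump_and_gzip /mnt/big_hdd SRR000001 SRR341578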