diff --git a/datahub-frontend/app/auth/AuthModule.java b/datahub-frontend/app/auth/AuthModule.java

index d0d17fda26392..7bb2547890701 100644

--- a/datahub-frontend/app/auth/AuthModule.java

+++ b/datahub-frontend/app/auth/AuthModule.java

@@ -21,6 +21,7 @@

import com.linkedin.util.Configuration;

import config.ConfigurationProvider;

import controllers.SsoCallbackController;

+import io.datahubproject.metadata.context.ValidationContext;

import java.nio.charset.StandardCharsets;

import java.util.Collections;

@@ -187,6 +188,7 @@ protected OperationContext provideOperationContext(

.authorizationContext(AuthorizationContext.builder().authorizer(Authorizer.EMPTY).build())

.searchContext(SearchContext.EMPTY)

.entityRegistryContext(EntityRegistryContext.builder().build(EmptyEntityRegistry.EMPTY))

+ .validationContext(ValidationContext.builder().alternateValidation(false).build())

.build(systemAuthentication);

}

diff --git a/datahub-upgrade/src/main/java/com/linkedin/datahub/upgrade/config/SystemUpdateConfig.java b/datahub-upgrade/src/main/java/com/linkedin/datahub/upgrade/config/SystemUpdateConfig.java

index f3a4c47c59f0b..661717c6309cf 100644

--- a/datahub-upgrade/src/main/java/com/linkedin/datahub/upgrade/config/SystemUpdateConfig.java

+++ b/datahub-upgrade/src/main/java/com/linkedin/datahub/upgrade/config/SystemUpdateConfig.java

@@ -29,6 +29,7 @@

import io.datahubproject.metadata.context.OperationContextConfig;

import io.datahubproject.metadata.context.RetrieverContext;

import io.datahubproject.metadata.context.ServicesRegistryContext;

+import io.datahubproject.metadata.context.ValidationContext;

import io.datahubproject.metadata.services.RestrictedService;

import java.util.List;

import javax.annotation.Nonnull;

@@ -161,7 +162,8 @@ protected OperationContext javaSystemOperationContext(

@Nonnull final GraphService graphService,

@Nonnull final SearchService searchService,

@Qualifier("baseElasticSearchComponents")

- BaseElasticSearchComponentsFactory.BaseElasticSearchComponents components) {

+ BaseElasticSearchComponentsFactory.BaseElasticSearchComponents components,

+ @Nonnull final ConfigurationProvider configurationProvider) {

EntityServiceAspectRetriever entityServiceAspectRetriever =

EntityServiceAspectRetriever.builder()

@@ -186,6 +188,10 @@ protected OperationContext javaSystemOperationContext(

.aspectRetriever(entityServiceAspectRetriever)

.graphRetriever(systemGraphRetriever)

.searchRetriever(searchServiceSearchRetriever)

+ .build(),

+ ValidationContext.builder()

+ .alternateValidation(

+ configurationProvider.getFeatureFlags().isAlternateMCPValidation())

.build());

entityServiceAspectRetriever.setSystemOperationContext(systemOperationContext);

diff --git a/docker/kafka-setup/Dockerfile b/docker/kafka-setup/Dockerfile

index dd88060cd7165..a11f823f5efa5 100644

--- a/docker/kafka-setup/Dockerfile

+++ b/docker/kafka-setup/Dockerfile

@@ -1,4 +1,4 @@

-ARG KAFKA_DOCKER_VERSION=7.4.6

+ARG KAFKA_DOCKER_VERSION=7.7.1

# Defining custom repo urls for use in enterprise environments. Re-used between stages below.

ARG ALPINE_REPO_URL=http://dl-cdn.alpinelinux.org/alpine

diff --git a/docker/profiles/docker-compose.gms.yml b/docker/profiles/docker-compose.gms.yml

index 2683734c2d5e5..147bbd35ff646 100644

--- a/docker/profiles/docker-compose.gms.yml

+++ b/docker/profiles/docker-compose.gms.yml

@@ -101,6 +101,7 @@ x-datahub-gms-service: &datahub-gms-service

environment: &datahub-gms-env

<<: [*primary-datastore-mysql-env, *graph-datastore-search-env, *search-datastore-env, *datahub-quickstart-telemetry-env, *kafka-env]

ELASTICSEARCH_QUERY_CUSTOM_CONFIG_FILE: ${ELASTICSEARCH_QUERY_CUSTOM_CONFIG_FILE:-search_config.yaml}

+ ALTERNATE_MCP_VALIDATION: ${ALTERNATE_MCP_VALIDATION:-true}

healthcheck:

test: curl -sS --fail http://datahub-gms:${DATAHUB_GMS_PORT:-8080}/health

start_period: 90s

@@ -182,6 +183,7 @@ x-datahub-mce-consumer-service: &datahub-mce-consumer-service

- ${DATAHUB_LOCAL_MCE_ENV:-empty2.env}

environment: &datahub-mce-consumer-env

<<: [*primary-datastore-mysql-env, *graph-datastore-search-env, *search-datastore-env, *datahub-quickstart-telemetry-env, *kafka-env]

+ ALTERNATE_MCP_VALIDATION: ${ALTERNATE_MCP_VALIDATION:-true}

x-datahub-mce-consumer-service-dev: &datahub-mce-consumer-service-dev

<<: *datahub-mce-consumer-service

diff --git a/docs-website/docusaurus.config.js b/docs-website/docusaurus.config.js

index cc421748557be..a3315ee071339 100644

--- a/docs-website/docusaurus.config.js

+++ b/docs-website/docusaurus.config.js

@@ -68,7 +68,7 @@ module.exports = {

announcementBar: {

id: "announcement-2",

content:

- 'NEW

Join us at Metadata & AI Summit, Oct. 29 & 30!

Register →NEW

Join us at Metadata & AI Summit, Oct. 29 & 30!

Register →

`bigquery.taxonomies.update` |

+| Assign/remove policy tags from columns | `bigquery.tables.updateTag` |

+| Edit table description | `bigquery.tables.update` |

+| Edit column description | `bigquery.tables.update` |

+| Assign/remove labels from tables | `bigquery.tables.update` |

+

+## Enabling BigQuery Sync Automation

+

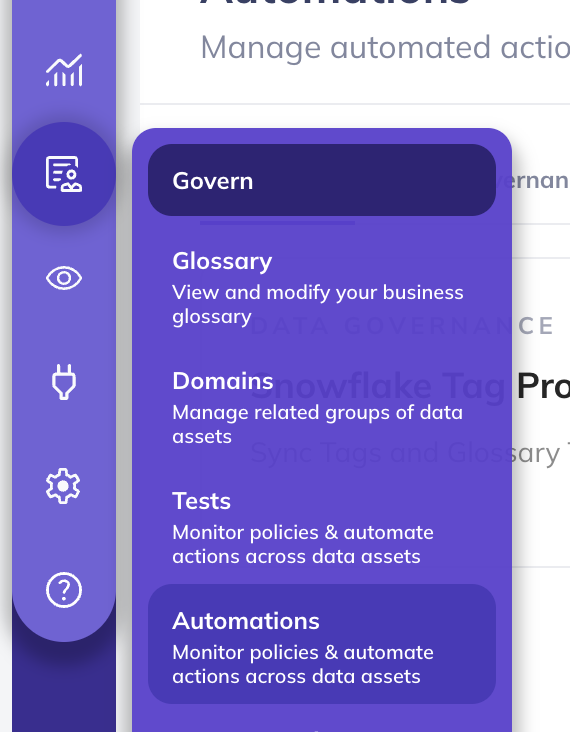

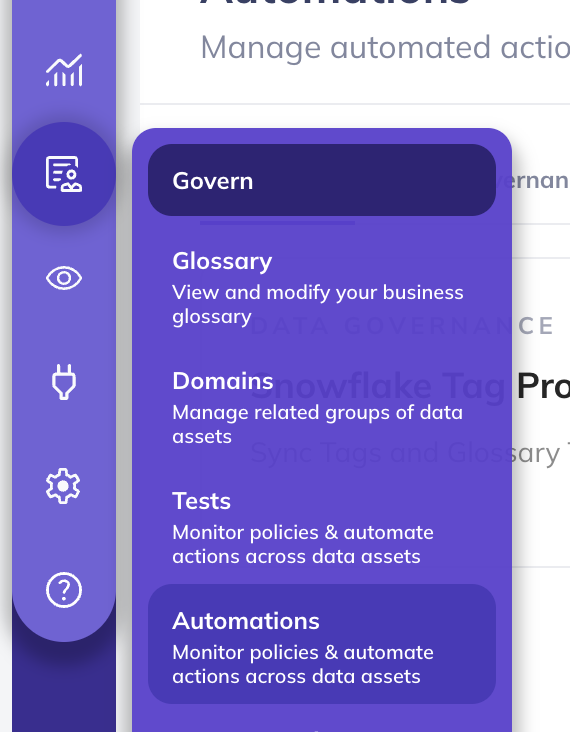

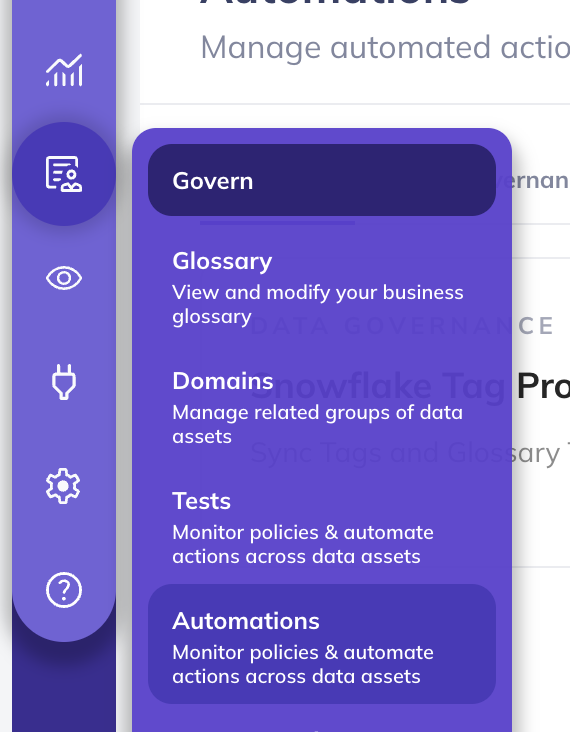

+1. **Navigate to Automations**: Click on 'Govern' > 'Automations' in the navigation bar.

+

+

+  +

+

+

+2. **Create An Automation**: Click on 'Create' and select 'BigQuery Tag Propagation'.

+

+

+  +

+

+

+3. **Configure Automation**:

+

+ 1. **Select a Propagation Action**

+

+

+  +

+

+

+ | Propagation Type | DataHub Entity | BigQuery Entity | Note |

+ | -------- | ------- | ------- | ------- |

+ | Table Tags as Labels | [Table Tag](https://datahubproject.io/docs/tags/) | [BigQuery Label](https://cloud.google.com/bigquery/docs/labels-intro) | - |

+ | Column Glossary Terms as Policy Tags | [Glossary Term on Table Column](https://datahubproject.io/docs/0.14.0/glossary/business-glossary/) | [Policy Tag](https://cloud.google.com/bigquery/docs/best-practices-policy-tags) | - Assigned Policy tags are created under DataHub taxonomy.

- Only the latest assigned glossary term set as policy tag. BigQuery only supports one assigned policy tag.

- Policy Tags are not synced to DataHub as glossary term from BigQuery.

+ | Table Descriptions | [Table Description](https://datahubproject.io/docs/api/tutorials/descriptions/) | Table Description | - |

+ | Column Descriptions | [Column Description](https://datahubproject.io/docs/api/tutorials/descriptions/) | Column Description | - |

+

+ :::note

+

+ You can limit propagation based on specific Tags and Glossary Terms. If none are selected, ALL Tags or Glossary Terms will be automatically propagated to BigQuery tables and columns. (The recommended approach is to not specify a filter to avoid inconsistent states.)

+

+ :::

+

+ :::note

+

+ - BigQuery supports only one Policy Tag per table field. Consequently, the most recently assigned Glossary Term will be set as the Policy Tag for that field.

+ - Policy Tags cannot be applied to fields in External tables. Therefore, if a Glossary Term is assigned to a field in an External table, it will not be applied.

+

+ :::

+

+ 2. **Fill in the required fields to connect to BigQuery, along with the name, description, and category**

+

+

+  +

+

+

+ 3. **Finally, click 'Save and Run' to start the automation**

+

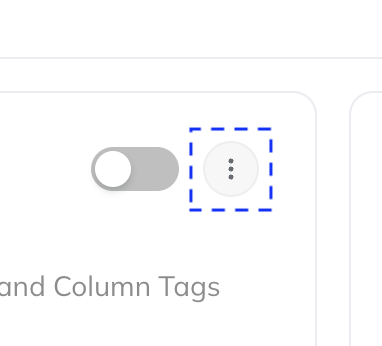

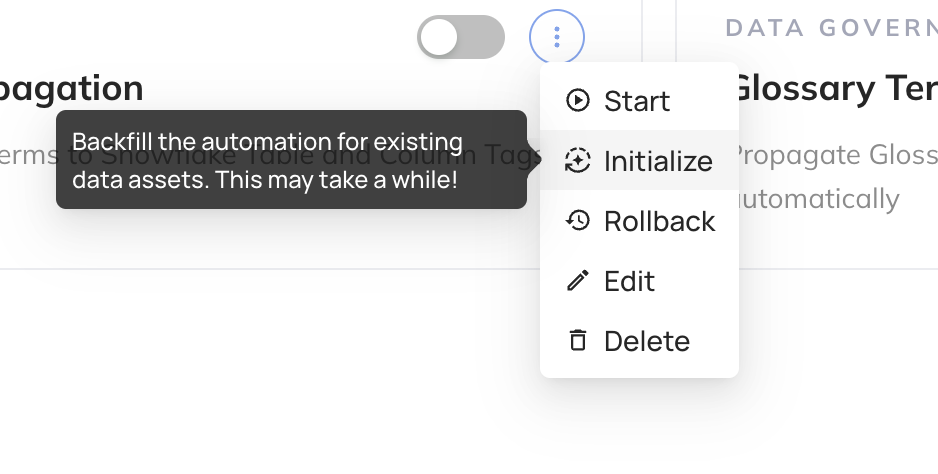

+## Propagating for Existing Assets

+

+To ensure that all existing table Tags and Column Glossary Terms are propagated to BigQuery, you can back-fill historical data for existing assets. Note that the initial back-filling process may take some time, depending on the number of BigQuery assets you have.

+

+To do so, follow these steps:

+

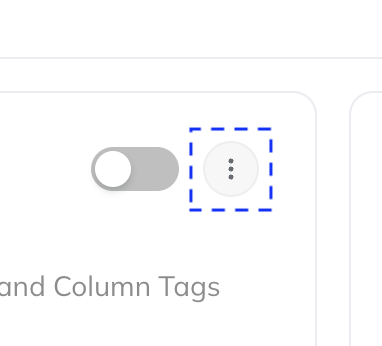

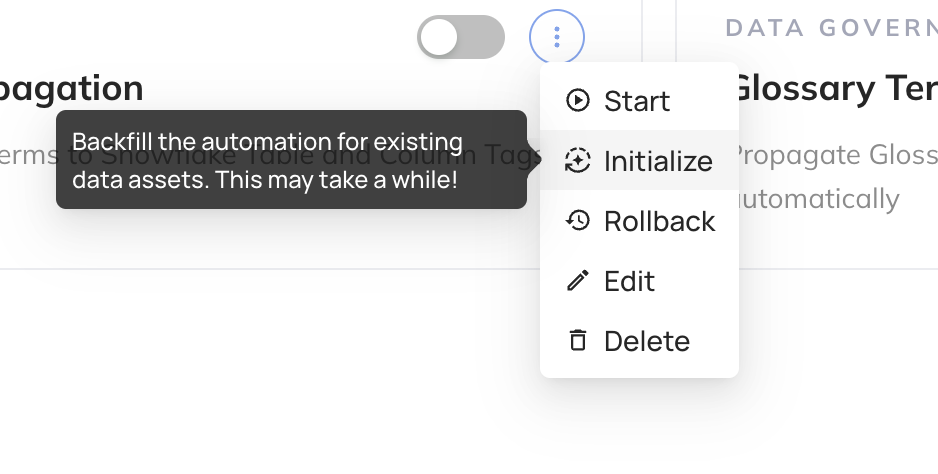

+1. Navigate to the Automation you created in Step 3 above

+2. Click the 3-dot "More" menu

+

+

+  +

+

+

+3. Click "Initialize"

+

+

+  +

+

+

+This one-time step will kick off the back-filling process for existing descriptions. If you only want to begin propagating descriptions going forward, you can skip this step.

+

+## Viewing Propagated Tags

+

+You can view propagated Tags inside the BigQuery UI to confirm the automation is working as expected.

+

+

+  +

+

+

+## Troubleshooting BigQuery Propagation

+

+### Q: What metadata elements support bi-directional syncing between DataHub and BigQuery?

+

+A: The following metadata elements support bi-directional syncing:

+

+- Tags (via BigQuery Labels): Changes made in either DataHub Table Tags or BigQuery Table Labels will be reflected in the other system.

+- Descriptions: Both table and column descriptions are synced bi-directionally.

+

+### Q: Are Policy Tags bi-directionally synced?

+

+A: No, BigQuery Policy Tags are only propagated from DataHub to BigQuery, not vice versa. This means that Policy Tags should be mastered in DataHub using the [Business Glossary](https://datahubproject.io/docs/glossary/business-glossary/).

+

+It is recommended to avoid enabling `extract_policy_tags_from_catalog` during

+ingestion, as this will ingest policy tags as BigQuery labels. Our sync process

+propagates Glossary Term assignments to BigQuery as Policy Tags.

+

+In a future release, we plan to remove this restriction to support full bi-directional syncing.

+

+### Q: What metadata is synced from BigQuery to DataHub during ingestion?

+

+A: During ingestion from BigQuery:

+

+- Tags and descriptions from BigQuery will be ingested into DataHub.

+- Existing Policy Tags in BigQuery will not overwrite or create Business Glossary Terms in DataHub. It only syncs assigned column Glossary Terms from DataHub to BigQuery.

+

+### Q: Where should I manage my Business Glossary?

+

+A: The expectation is that you author and manage the glossary in DataHub. Policy tags in BigQuery should be treated as a reflection of the DataHub glossary, not as the primary source of truth.

+

+### Q: Are there any limitations with Policy Tags in BigQuery?

+

+A: Yes, BigQuery only supports one Policy Tag per column. If multiple glossary

+terms are assigned to a column in DataHub, only the most recently assigned term

+will be set as the policy tag in BigQuery. To reduce the scope of conflicts, you

+can set up filters in the BigQuery Metadata Sync to only synchronize terms from

+a specific area of the Business Glossary.

+

+### Q: How frequently are changes synced between DataHub and BigQuery?

+

+A: From DataHub to BigQuery, the sync happens instantly (within a few seconds)

+when the change occurs in DataHub.

+

+From BigQuery to DataHub, changes are synced when ingestion occurs, and the frequency depends on your custom ingestion schedule. (Visible on the **Integrations** page)

+

+### Q: What happens if there's a conflict between DataHub and BigQuery metadata?

+

+A: In case of conflicts (e.g., a tag is modified in both systems between syncs), the DataHub version will typically take precedence. However, it's best to make changes in one system consistently to avoid potential conflicts.

+

+### Q: What permissions are required for bi-directional syncing?

+

+A: Ensure that the service account used for the automation has the necessary permissions in both DataHub and BigQuery to read and write metadata. See the required BigQuery permissions at the top of the page.

+

+## Related Documentation

+

+- [DataHub Tags Documentation](https://datahubproject.io/docs/tags/)

+- [DataHub Glossary Documentation](https://datahubproject.io/docs/glossary/business-glossary/)

+- [BigQuery Labels Documentation](https://cloud.google.com/bigquery/docs/labels-intro)

+- [BigQuery Policy Tags Documentation](https://cloud.google.com/bigquery/docs/best-practices-policy-tags)

diff --git a/entity-registry/src/main/java/com/linkedin/metadata/aspect/plugins/PluginSpec.java b/entity-registry/src/main/java/com/linkedin/metadata/aspect/plugins/PluginSpec.java

index f99dd18d3c9c1..54ccd3877395f 100644

--- a/entity-registry/src/main/java/com/linkedin/metadata/aspect/plugins/PluginSpec.java

+++ b/entity-registry/src/main/java/com/linkedin/metadata/aspect/plugins/PluginSpec.java

@@ -12,7 +12,7 @@

@AllArgsConstructor

@EqualsAndHashCode

public abstract class PluginSpec {

- protected static String ENTITY_WILDCARD = "*";

+ protected static String WILDCARD = "*";

@Nonnull

public abstract AspectPluginConfig getConfig();

@@ -50,7 +50,7 @@ protected boolean isEntityAspectSupported(

return (getConfig().getSupportedEntityAspectNames().stream()

.anyMatch(

supported ->

- ENTITY_WILDCARD.equals(supported.getEntityName())

+ WILDCARD.equals(supported.getEntityName())

|| supported.getEntityName().equals(entityName)))

&& isAspectSupported(aspectName);

}

@@ -59,13 +59,16 @@ protected boolean isAspectSupported(@Nonnull String aspectName) {

return getConfig().getSupportedEntityAspectNames().stream()

.anyMatch(

supported ->

- ENTITY_WILDCARD.equals(supported.getAspectName())

+ WILDCARD.equals(supported.getAspectName())

|| supported.getAspectName().equals(aspectName));

}

protected boolean isChangeTypeSupported(@Nullable ChangeType changeType) {

return (changeType == null && getConfig().getSupportedOperations().isEmpty())

|| getConfig().getSupportedOperations().stream()

- .anyMatch(supported -> supported.equalsIgnoreCase(String.valueOf(changeType)));

+ .anyMatch(

+ supported ->

+ WILDCARD.equals(supported)

+ || supported.equalsIgnoreCase(String.valueOf(changeType)));

}

}

diff --git a/metadata-ingestion-modules/dagster-plugin/setup.py b/metadata-ingestion-modules/dagster-plugin/setup.py

index 50450ddd5917a..660dbb2981c51 100644

--- a/metadata-ingestion-modules/dagster-plugin/setup.py

+++ b/metadata-ingestion-modules/dagster-plugin/setup.py

@@ -13,14 +13,6 @@ def get_long_description():

return pathlib.Path(os.path.join(root, "README.md")).read_text()

-rest_common = {"requests", "requests_file"}

-

-sqlglot_lib = {

- # Using an Acryl fork of sqlglot.

- # https://github.com/tobymao/sqlglot/compare/main...hsheth2:sqlglot:main?expand=1

- "acryl-sqlglot[rs]==24.0.1.dev7",

-}

-

_version: str = package_metadata["__version__"]

_self_pin = (

f"=={_version}"

@@ -32,11 +24,7 @@ def get_long_description():

# Actual dependencies.

"dagster >= 1.3.3",

"dagit >= 1.3.3",

- *rest_common,

- # Ignoring the dependency below because it causes issues with the vercel built wheel install

- # f"acryl-datahub[datahub-rest]{_self_pin}",

- "acryl-datahub[datahub-rest]",

- *sqlglot_lib,

+ f"acryl-datahub[datahub-rest,sql-parser]{_self_pin}",

}

mypy_stubs = {

diff --git a/metadata-ingestion-modules/prefect-plugin/src/prefect_datahub/datahub_emitter.py b/metadata-ingestion-modules/prefect-plugin/src/prefect_datahub/datahub_emitter.py

index 5991503416aec..8617381cf1613 100644

--- a/metadata-ingestion-modules/prefect-plugin/src/prefect_datahub/datahub_emitter.py

+++ b/metadata-ingestion-modules/prefect-plugin/src/prefect_datahub/datahub_emitter.py

@@ -155,12 +155,11 @@ async def _get_flow_run_graph(self, flow_run_id: str) -> Optional[List[Dict]]:

The flow run graph in json format.

"""

try:

- response = orchestration.get_client()._client.get(

+ response_coroutine = orchestration.get_client()._client.get(

f"/flow_runs/{flow_run_id}/graph"

)

- if asyncio.iscoroutine(response):

- response = await response

+ response = await response_coroutine

if hasattr(response, "json"):

response_json = response.json()

@@ -410,10 +409,9 @@ async def get_flow_run(flow_run_id: UUID) -> FlowRun:

if not hasattr(client, "read_flow_run"):

raise ValueError("Client does not support async read_flow_run method")

- response = client.read_flow_run(flow_run_id=flow_run_id)

+ response_coroutine = client.read_flow_run(flow_run_id=flow_run_id)

- if asyncio.iscoroutine(response):

- response = await response

+ response = await response_coroutine

return FlowRun.parse_obj(response)

@@ -477,10 +475,9 @@ async def get_task_run(task_run_id: UUID) -> TaskRun:

if not hasattr(client, "read_task_run"):

raise ValueError("Client does not support async read_task_run method")

- response = client.read_task_run(task_run_id=task_run_id)

+ response_coroutine = client.read_task_run(task_run_id=task_run_id)

- if asyncio.iscoroutine(response):

- response = await response

+ response = await response_coroutine

return TaskRun.parse_obj(response)

diff --git a/metadata-ingestion/pyproject.toml b/metadata-ingestion/pyproject.toml

index 2b6c87926c6c4..94e06fd53a70e 100644

--- a/metadata-ingestion/pyproject.toml

+++ b/metadata-ingestion/pyproject.toml

@@ -14,7 +14,7 @@ target-version = ['py37', 'py38', 'py39', 'py310']

[tool.isort]

combine_as_imports = true

indent = ' '

-known_future_library = ['__future__', 'datahub.utilities._markupsafe_compat', 'datahub_provider._airflow_compat']

+known_future_library = ['__future__', 'datahub.utilities._markupsafe_compat', 'datahub.sql_parsing._sqlglot_patch']

profile = 'black'

sections = 'FUTURE,STDLIB,THIRDPARTY,FIRSTPARTY,LOCALFOLDER'

skip_glob = 'src/datahub/metadata'

diff --git a/metadata-ingestion/scripts/avro_codegen.py b/metadata-ingestion/scripts/avro_codegen.py

index 5be8b6ed4cc21..e2dd515143992 100644

--- a/metadata-ingestion/scripts/avro_codegen.py

+++ b/metadata-ingestion/scripts/avro_codegen.py

@@ -361,9 +361,6 @@ def write_urn_classes(key_aspects: List[dict], urn_dir: Path) -> None:

for aspect in key_aspects:

entity_type = aspect["Aspect"]["keyForEntity"]

- if aspect["Aspect"]["entityCategory"] == "internal":

- continue

-

code += generate_urn_class(entity_type, aspect)

(urn_dir / "urn_defs.py").write_text(code)

diff --git a/metadata-ingestion/setup.py b/metadata-ingestion/setup.py

index 365da21208ecc..35dbff5cc2c71 100644

--- a/metadata-ingestion/setup.py

+++ b/metadata-ingestion/setup.py

@@ -99,9 +99,11 @@

}

sqlglot_lib = {

- # Using an Acryl fork of sqlglot.

+ # We heavily monkeypatch sqlglot.

+ # Prior to the patching, we originally maintained an acryl-sqlglot fork:

# https://github.com/tobymao/sqlglot/compare/main...hsheth2:sqlglot:main?expand=1

- "acryl-sqlglot[rs]==25.25.2.dev9",

+ "sqlglot[rs]==25.26.0",

+ "patchy==2.8.0",

}

classification_lib = {

@@ -122,6 +124,10 @@

"more_itertools",

}

+cachetools_lib = {

+ "cachetools",

+}

+

sql_common = (

{

# Required for all SQL sources.

@@ -138,6 +144,7 @@

# https://github.com/ipython/traitlets/issues/741

"traitlets<5.2.2",

"greenlet",

+ *cachetools_lib,

}

| usage_common

| sqlglot_lib

@@ -213,7 +220,7 @@

"pandas",

"cryptography",

"msal",

- "cachetools",

+ *cachetools_lib,

} | classification_lib

trino = {

@@ -457,7 +464,7 @@

| sqlglot_lib

| classification_lib

| {"db-dtypes"} # Pandas extension data types

- | {"cachetools"},

+ | cachetools_lib,

"s3": {*s3_base, *data_lake_profiling},

"gcs": {*s3_base, *data_lake_profiling},

"abs": {*abs_base, *data_lake_profiling},

diff --git a/metadata-ingestion/src/datahub/api/entities/platformresource/platform_resource.py b/metadata-ingestion/src/datahub/api/entities/platformresource/platform_resource.py

index 0f7b10a067053..0ba43d7b101e5 100644

--- a/metadata-ingestion/src/datahub/api/entities/platformresource/platform_resource.py

+++ b/metadata-ingestion/src/datahub/api/entities/platformresource/platform_resource.py

@@ -14,7 +14,12 @@

from datahub.emitter.mcp import MetadataChangeProposalWrapper

from datahub.emitter.mcp_builder import DatahubKey

from datahub.ingestion.graph.client import DataHubGraph

-from datahub.metadata.urns import DataPlatformUrn, PlatformResourceUrn, Urn

+from datahub.metadata.urns import (

+ DataPlatformInstanceUrn,

+ DataPlatformUrn,

+ PlatformResourceUrn,

+ Urn,

+)

from datahub.utilities.openapi_utils import OpenAPIGraphClient

from datahub.utilities.search_utils import (

ElasticDocumentQuery,

@@ -76,21 +81,6 @@ def to_resource_info(self) -> models.PlatformResourceInfoClass:

)

-class DataPlatformInstanceUrn:

- """

- A simple implementation of a URN class for DataPlatformInstance.

- Since this is not present in the URN registry, we need to implement it here.

- """

-

- @staticmethod

- def create_from_id(platform_instance_urn: str) -> Urn:

- if platform_instance_urn.startswith("urn:li:platformInstance:"):

- string_urn = platform_instance_urn

- else:

- string_urn = f"urn:li:platformInstance:{platform_instance_urn}"

- return Urn.from_string(string_urn)

-

-

class UrnSearchField(SearchField):

"""

A search field that supports URN values.

@@ -130,7 +120,7 @@ class PlatformResourceSearchFields:

PLATFORM_INSTANCE = PlatformResourceSearchField.from_search_field(

UrnSearchField(

field_name="platformInstance.keyword",

- urn_value_extractor=DataPlatformInstanceUrn.create_from_id,

+ urn_value_extractor=DataPlatformInstanceUrn.from_string,

)

)

diff --git a/metadata-ingestion/src/datahub/ingestion/source/bigquery_v2/bigquery_schema_gen.py b/metadata-ingestion/src/datahub/ingestion/source/bigquery_v2/bigquery_schema_gen.py

index 1235f638f68ff..f53642d1fead2 100644

--- a/metadata-ingestion/src/datahub/ingestion/source/bigquery_v2/bigquery_schema_gen.py

+++ b/metadata-ingestion/src/datahub/ingestion/source/bigquery_v2/bigquery_schema_gen.py

@@ -310,7 +310,7 @@ def gen_dataset_containers(

logger.warning(

f"Failed to generate platform resource for label {k}:{v}: {e}"

)

- tags_joined.append(tag_urn.urn())

+ tags_joined.append(tag_urn.name)

database_container_key = self.gen_project_id_key(database=project_id)

diff --git a/metadata-ingestion/src/datahub/ingestion/source/sql/sql_config.py b/metadata-ingestion/src/datahub/ingestion/source/sql/sql_config.py

index 3ead59eed2d39..7d82d99412ffe 100644

--- a/metadata-ingestion/src/datahub/ingestion/source/sql/sql_config.py

+++ b/metadata-ingestion/src/datahub/ingestion/source/sql/sql_config.py

@@ -2,6 +2,8 @@

from abc import abstractmethod

from typing import Any, Dict, Optional

+import cachetools

+import cachetools.keys

import pydantic

from pydantic import Field

from sqlalchemy.engine import URL

@@ -27,6 +29,7 @@

StatefulIngestionConfigBase,

)

from datahub.ingestion.source_config.operation_config import is_profiling_enabled

+from datahub.utilities.cachetools_keys import self_methodkey

logger: logging.Logger = logging.getLogger(__name__)

@@ -115,6 +118,13 @@ class SQLCommonConfig(

# Custom Stateful Ingestion settings

stateful_ingestion: Optional[StatefulStaleMetadataRemovalConfig] = None

+ # TRICKY: The operation_config is time-dependent. Because we don't want to change

+ # whether or not we're running profiling mid-ingestion, we cache the result of this method.

+ # TODO: This decorator should be moved to the is_profiling_enabled(operation_config) method.

+ @cachetools.cached(

+ cache=cachetools.LRUCache(maxsize=1),

+ key=self_methodkey,

+ )

def is_profiling_enabled(self) -> bool:

return self.profiling.enabled and is_profiling_enabled(

self.profiling.operation_config

diff --git a/metadata-ingestion/src/datahub/sql_parsing/_sqlglot_patch.py b/metadata-ingestion/src/datahub/sql_parsing/_sqlglot_patch.py

new file mode 100644

index 0000000000000..fc3f877ede629

--- /dev/null

+++ b/metadata-ingestion/src/datahub/sql_parsing/_sqlglot_patch.py

@@ -0,0 +1,215 @@

+import dataclasses

+import difflib

+import logging

+

+import patchy.api

+import sqlglot

+import sqlglot.expressions

+import sqlglot.lineage

+import sqlglot.optimizer.scope

+import sqlglot.optimizer.unnest_subqueries

+

+from datahub.utilities.is_pytest import is_pytest_running

+from datahub.utilities.unified_diff import apply_diff

+

+# This injects a few patches into sqlglot to add features and mitigate

+# some bugs and performance issues.

+# The diffs in this file should match the diffs declared in our fork.

+# https://github.com/tobymao/sqlglot/compare/main...hsheth2:sqlglot:main

+# For a diff-formatted view, see:

+# https://github.com/tobymao/sqlglot/compare/main...hsheth2:sqlglot:main.diff

+

+_DEBUG_PATCHER = is_pytest_running() or True

+logger = logging.getLogger(__name__)

+

+_apply_diff_subprocess = patchy.api._apply_patch

+

+

+def _new_apply_patch(source: str, patch_text: str, forwards: bool, name: str) -> str:

+ assert forwards, "Only forward patches are supported"

+

+ result = apply_diff(source, patch_text)

+

+ # TODO: When in testing mode, still run the subprocess and check that the

+ # results line up.

+ if _DEBUG_PATCHER:

+ result_subprocess = _apply_diff_subprocess(source, patch_text, forwards, name)

+ if result_subprocess != result:

+ logger.info("Results from subprocess and _apply_diff do not match")

+ logger.debug(f"Subprocess result:\n{result_subprocess}")

+ logger.debug(f"Our result:\n{result}")

+ diff = difflib.unified_diff(

+ result_subprocess.splitlines(), result.splitlines()

+ )

+ logger.debug("Diff:\n" + "\n".join(diff))

+ raise ValueError("Results from subprocess and _apply_diff do not match")

+

+ return result

+

+

+patchy.api._apply_patch = _new_apply_patch

+

+

+def _patch_deepcopy() -> None:

+ patchy.patch(

+ sqlglot.expressions.Expression.__deepcopy__,

+ """\

+@@ -1,4 +1,7 @@ def meta(self) -> t.Dict[str, t.Any]:

+ def __deepcopy__(self, memo):

++ import datahub.utilities.cooperative_timeout

++ datahub.utilities.cooperative_timeout.cooperate()

++

+ root = self.__class__()

+ stack = [(self, root)]

+""",

+ )

+

+

+def _patch_scope_traverse() -> None:

+ # Circular scope dependencies can happen in somewhat specific circumstances

+ # due to our usage of sqlglot.

+ # See https://github.com/tobymao/sqlglot/pull/4244

+ patchy.patch(

+ sqlglot.optimizer.scope.Scope.traverse,

+ """\

+@@ -5,9 +5,16 @@ def traverse(self):

+ Scope: scope instances in depth-first-search post-order

+ \"""

+ stack = [self]

++ seen_scopes = set()

+ result = []

+ while stack:

+ scope = stack.pop()

++

++ # Scopes aren't hashable, so we use id(scope) instead.

++ if id(scope) in seen_scopes:

++ raise OptimizeError(f"Scope {scope} has a circular scope dependency")

++ seen_scopes.add(id(scope))

++

+ result.append(scope)

+ stack.extend(

+ itertools.chain(

+""",

+ )

+

+

+def _patch_unnest_subqueries() -> None:

+ patchy.patch(

+ sqlglot.optimizer.unnest_subqueries.decorrelate,

+ """\

+@@ -261,16 +261,19 @@ def remove_aggs(node):

+ if key in group_by:

+ key.replace(nested)

+ elif isinstance(predicate, exp.EQ):

+- parent_predicate = _replace(

+- parent_predicate,

+- f"({parent_predicate} AND ARRAY_CONTAINS({nested}, {column}))",

+- )

++ if parent_predicate:

++ parent_predicate = _replace(

++ parent_predicate,

++ f"({parent_predicate} AND ARRAY_CONTAINS({nested}, {column}))",

++ )

+ else:

+ key.replace(exp.to_identifier("_x"))

+- parent_predicate = _replace(

+- parent_predicate,

+- f"({parent_predicate} AND ARRAY_ANY({nested}, _x -> {predicate}))",

+- )

++

++ if parent_predicate:

++ parent_predicate = _replace(

++ parent_predicate,

++ f"({parent_predicate} AND ARRAY_ANY({nested}, _x -> {predicate}))",

++ )

+""",

+ )

+

+

+def _patch_lineage() -> None:

+ # Add the "subfield" attribute to sqlglot.lineage.Node.

+ # With dataclasses, the easiest way to do this is with inheritance.

+ # Unfortunately, mypy won't pick up on the new field, so we need to

+ # use type ignores everywhere we use subfield.

+ @dataclasses.dataclass(frozen=True)

+ class Node(sqlglot.lineage.Node):

+ subfield: str = ""

+

+ sqlglot.lineage.Node = Node # type: ignore

+

+ patchy.patch(

+ sqlglot.lineage.lineage,

+ """\

+@@ -12,7 +12,8 @@ def lineage(

+ \"""

+

+ expression = maybe_parse(sql, dialect=dialect)

+- column = normalize_identifiers.normalize_identifiers(column, dialect=dialect).name

++ # column = normalize_identifiers.normalize_identifiers(column, dialect=dialect).name

++ assert isinstance(column, str)

+

+ if sources:

+ expression = exp.expand(

+""",

+ )

+

+ patchy.patch(

+ sqlglot.lineage.to_node,

+ """\

+@@ -235,11 +237,12 @@ def to_node(

+ )

+

+ # Find all columns that went into creating this one to list their lineage nodes.

+- source_columns = set(find_all_in_scope(select, exp.Column))

++ source_columns = list(find_all_in_scope(select, exp.Column))

+

+- # If the source is a UDTF find columns used in the UTDF to generate the table

++ # If the source is a UDTF find columns used in the UDTF to generate the table

++ source = scope.expression

+ if isinstance(source, exp.UDTF):

+- source_columns |= set(source.find_all(exp.Column))

++ source_columns += list(source.find_all(exp.Column))

+ derived_tables = [

+ source.expression.parent

+ for source in scope.sources.values()

+@@ -254,6 +257,7 @@ def to_node(

+ if dt.comments and dt.comments[0].startswith("source: ")

+ }

+

++ c: exp.Column

+ for c in source_columns:

+ table = c.table

+ source = scope.sources.get(table)

+@@ -281,8 +285,21 @@ def to_node(

+ # it means this column's lineage is unknown. This can happen if the definition of a source used in a query

+ # is not passed into the `sources` map.

+ source = source or exp.Placeholder()

++

++ subfields = []

++ field: exp.Expression = c

++ while isinstance(field.parent, exp.Dot):

++ field = field.parent

++ subfields.append(field.name)

++ subfield = ".".join(subfields)

++

+ node.downstream.append(

+- Node(name=c.sql(comments=False), source=source, expression=source)

++ Node(

++ name=c.sql(comments=False),

++ source=source,

++ expression=source,

++ subfield=subfield,

++ )

+ )

+

+ return node

+""",

+ )

+

+

+_patch_deepcopy()

+_patch_scope_traverse()

+_patch_unnest_subqueries()

+_patch_lineage()

+

+SQLGLOT_PATCHED = True

diff --git a/metadata-ingestion/src/datahub/sql_parsing/sqlglot_lineage.py b/metadata-ingestion/src/datahub/sql_parsing/sqlglot_lineage.py

index 6a7ff5be6d1ea..b635f8cb47b6d 100644

--- a/metadata-ingestion/src/datahub/sql_parsing/sqlglot_lineage.py

+++ b/metadata-ingestion/src/datahub/sql_parsing/sqlglot_lineage.py

@@ -1,3 +1,5 @@

+from datahub.sql_parsing._sqlglot_patch import SQLGLOT_PATCHED

+

import dataclasses

import functools

import logging

@@ -53,6 +55,8 @@

cooperative_timeout,

)

+assert SQLGLOT_PATCHED

+

logger = logging.getLogger(__name__)

Urn = str

diff --git a/metadata-ingestion/src/datahub/sql_parsing/sqlglot_utils.py b/metadata-ingestion/src/datahub/sql_parsing/sqlglot_utils.py

index 71245353101f6..c62312c9004cd 100644

--- a/metadata-ingestion/src/datahub/sql_parsing/sqlglot_utils.py

+++ b/metadata-ingestion/src/datahub/sql_parsing/sqlglot_utils.py

@@ -1,3 +1,5 @@

+from datahub.sql_parsing._sqlglot_patch import SQLGLOT_PATCHED

+

import functools

import hashlib

import logging

@@ -8,6 +10,8 @@

import sqlglot.errors

import sqlglot.optimizer.eliminate_ctes

+assert SQLGLOT_PATCHED

+

logger = logging.getLogger(__name__)

DialectOrStr = Union[sqlglot.Dialect, str]

SQL_PARSE_CACHE_SIZE = 1000

diff --git a/metadata-ingestion/src/datahub/utilities/cachetools_keys.py b/metadata-ingestion/src/datahub/utilities/cachetools_keys.py

new file mode 100644

index 0000000000000..e3c7d67c81cd3

--- /dev/null

+++ b/metadata-ingestion/src/datahub/utilities/cachetools_keys.py

@@ -0,0 +1,8 @@

+from typing import Any

+

+import cachetools.keys

+

+

+def self_methodkey(self: Any, *args: Any, **kwargs: Any) -> Any:

+ # Keeps the id of self around

+ return cachetools.keys.hashkey(id(self), *args, **kwargs)

diff --git a/metadata-ingestion/src/datahub/utilities/is_pytest.py b/metadata-ingestion/src/datahub/utilities/is_pytest.py

new file mode 100644

index 0000000000000..68bb1b285a50e

--- /dev/null

+++ b/metadata-ingestion/src/datahub/utilities/is_pytest.py

@@ -0,0 +1,5 @@

+import sys

+

+

+def is_pytest_running() -> bool:

+ return "pytest" in sys.modules

diff --git a/metadata-ingestion/src/datahub/utilities/unified_diff.py b/metadata-ingestion/src/datahub/utilities/unified_diff.py

new file mode 100644

index 0000000000000..c896fd4df4d8f

--- /dev/null

+++ b/metadata-ingestion/src/datahub/utilities/unified_diff.py

@@ -0,0 +1,236 @@

+import logging

+from dataclasses import dataclass

+from typing import List, Tuple

+

+logger = logging.getLogger(__name__)

+logger.setLevel(logging.INFO)

+

+_LOOKAROUND_LINES = 300

+

+# The Python difflib library can generate unified diffs, but it cannot apply them.

+# There weren't any well-maintained and easy-to-use libraries for applying

+# unified diffs, so I wrote my own.

+#

+# My implementation is focused on ensuring correctness, and will throw

+# an exception whenever it detects an issue.

+#

+# Alternatives considered:

+# - diff-match-patch: This was the most promising since it's from Google.

+# Unfortunately, they deprecated the library in Aug 2024. That may not have

+# been a dealbreaker, since a somewhat greenfield community fork exists:

+# https://github.com/dmsnell/diff-match-patch

+# However, there's also a long-standing bug in the library around the

+# handling of line breaks when parsing diffs. See:

+# https://github.com/google/diff-match-patch/issues/157

+# - python-patch: Seems abandoned.

+# - patch-ng: Fork of python-patch, but mainly targeted at applying patches to trees.

+# It did not have simple "apply patch to string" abstractions.

+# - unidiff: Parses diffs, but cannot apply them.

+

+

+class InvalidDiffError(Exception):

+ pass

+

+

+class DiffApplyError(Exception):

+ pass

+

+

+@dataclass

+class Hunk:

+ source_start: int

+ source_lines: int

+ target_start: int

+ target_lines: int

+ lines: List[Tuple[str, str]]

+

+

+def parse_patch(patch_text: str) -> List[Hunk]:

+ """

+ Parses a unified diff patch into a list of Hunk objects.

+

+ Args:

+ patch_text: Unified diff format patch text

+

+ Returns:

+ List of parsed Hunk objects

+

+ Raises:

+ InvalidDiffError: If the patch is in an invalid format

+ """

+ hunks = []

+ patch_lines = patch_text.splitlines()

+ i = 0

+

+ while i < len(patch_lines):

+ line = patch_lines[i]

+

+ if line.startswith("@@"):

+ try:

+ header_parts = line.split()

+ if len(header_parts) < 3:

+ raise ValueError(f"Invalid hunk header format: {line}")

+

+ source_changes, target_changes = header_parts[1:3]

+ source_start, source_lines = map(int, source_changes[1:].split(","))

+ target_start, target_lines = map(int, target_changes[1:].split(","))

+

+ hunk = Hunk(source_start, source_lines, target_start, target_lines, [])

+ i += 1

+

+ while i < len(patch_lines) and not patch_lines[i].startswith("@@"):

+ hunk_line = patch_lines[i]

+ if hunk_line:

+ hunk.lines.append((hunk_line[0], hunk_line[1:]))

+ else:

+ # Fully empty lines usually means an empty context line that was

+ # trimmed by trailing whitespace removal.

+ hunk.lines.append((" ", ""))

+ i += 1

+

+ hunks.append(hunk)

+ except (IndexError, ValueError) as e:

+ raise InvalidDiffError(f"Failed to parse hunk: {str(e)}") from e

+ else:

+ raise InvalidDiffError(f"Invalid line format: {line}")

+

+ return hunks

+

+

+def find_hunk_start(source_lines: List[str], hunk: Hunk) -> int:

+ """

+ Finds the actual starting line of a hunk in the source lines.

+

+ Args:

+ source_lines: The original source lines

+ hunk: The hunk to locate

+

+ Returns:

+ The actual line number where the hunk starts

+

+ Raises:

+ DiffApplyError: If the hunk's context cannot be found in the source lines

+ """

+

+ # Extract context lines from the hunk, stopping at the first non-context line

+ context_lines = []

+ for prefix, line in hunk.lines:

+ if prefix == " ":

+ context_lines.append(line)

+ else:

+ break

+

+ if not context_lines:

+ logger.debug("No context lines found in hunk.")

+ return hunk.source_start - 1 # Default to the original start if no context

+

+ logger.debug(

+ f"Searching for {len(context_lines)} context lines, starting with {context_lines[0]}"

+ )

+

+ # Define the range to search for the context lines

+ search_start = max(0, hunk.source_start - _LOOKAROUND_LINES)

+ search_end = min(len(source_lines), hunk.source_start + _LOOKAROUND_LINES)

+

+ # Iterate over the possible starting positions in the source lines

+ for i in range(search_start, search_end):

+ # Check if the context lines match the source lines starting at position i

+ match = True

+ for j, context_line in enumerate(context_lines):

+ if (i + j >= len(source_lines)) or source_lines[i + j] != context_line:

+ match = False

+ break

+ if match:

+ # logger.debug(f"Context match found at line: {i}")

+ return i

+

+ logger.debug(f"Could not find match for hunk context lines: {context_lines}")

+ raise DiffApplyError("Could not find match for hunk context.")

+

+

+def apply_hunk(result_lines: List[str], hunk: Hunk, hunk_index: int) -> None:

+ """

+ Applies a single hunk to the result lines.

+

+ Args:

+ result_lines: The current state of the patched file

+ hunk: The hunk to apply

+ hunk_index: The index of the hunk (for logging purposes)

+

+ Raises:

+ DiffApplyError: If the hunk cannot be applied correctly

+ """

+ current_line = find_hunk_start(result_lines, hunk)

+ logger.debug(f"Hunk {hunk_index + 1} start line: {current_line}")

+

+ for line_index, (prefix, content) in enumerate(hunk.lines):

+ # logger.debug(f"Processing line {line_index + 1} of hunk {hunk_index + 1}")

+ # logger.debug(f"Current line: {current_line}, Total lines: {len(result_lines)}")

+ # logger.debug(f"Prefix: {prefix}, Content: {content}")

+

+ if current_line >= len(result_lines):

+ logger.debug(f"Reached end of file while applying hunk {hunk_index + 1}")

+ while line_index < len(hunk.lines) and hunk.lines[line_index][0] == "+":

+ result_lines.append(hunk.lines[line_index][1])

+ line_index += 1

+

+ # If there's context or deletions past the end of the file, that's an error.

+ if line_index < len(hunk.lines):

+ raise DiffApplyError(

+ f"Found context or deletions after end of file in hunk {hunk_index + 1}"

+ )

+ break

+

+ if prefix == "-":

+ if result_lines[current_line].strip() != content.strip():

+ raise DiffApplyError(

+ f"Removing line that doesn't exactly match. Expected: '{content.strip()}', Found: '{result_lines[current_line].strip()}'"

+ )

+ result_lines.pop(current_line)

+ elif prefix == "+":

+ result_lines.insert(current_line, content)

+ current_line += 1

+ elif prefix == " ":

+ if result_lines[current_line].strip() != content.strip():

+ raise DiffApplyError(

+ f"Context line doesn't exactly match. Expected: '{content.strip()}', Found: '{result_lines[current_line].strip()}'"

+ )

+ current_line += 1

+ else:

+ raise DiffApplyError(

+ f"Invalid line prefix '{prefix}' in hunk {hunk_index + 1}, line {line_index + 1}"

+ )

+

+

+def apply_diff(source: str, patch_text: str) -> str:

+ """

+ Applies a unified diff patch to source text and returns the patched result.

+

+ Args:

+ source: Original source text to be patched

+ patch_text: Unified diff format patch text (with @@ markers and hunks)

+

+ Returns:

+ The patched text result

+

+ Raises:

+ InvalidDiffError: If the patch is in an invalid format

+ DiffApplyError: If the patch cannot be applied correctly

+ """

+

+ # logger.debug(f"Original source:\n{source}")

+ # logger.debug(f"Patch text:\n{patch_text}")

+

+ hunks = parse_patch(patch_text)

+ logger.debug(f"Parsed into {len(hunks)} hunks")

+

+ source_lines = source.splitlines()

+ result_lines = source_lines.copy()

+

+ for hunk_index, hunk in enumerate(hunks):

+ logger.debug(f"Processing hunk {hunk_index + 1}")

+ apply_hunk(result_lines, hunk, hunk_index)

+

+ result = "\n".join(result_lines) + "\n"

+ # logger.debug(f"Patched result:\n{result}")

+ return result

diff --git a/metadata-ingestion/tests/integration/bigquery_v2/bigquery_mcp_golden.json b/metadata-ingestion/tests/integration/bigquery_v2/bigquery_mcp_golden.json

index 640ee1bf436b0..5e091596cc0f7 100644

--- a/metadata-ingestion/tests/integration/bigquery_v2/bigquery_mcp_golden.json

+++ b/metadata-ingestion/tests/integration/bigquery_v2/bigquery_mcp_golden.json

@@ -112,6 +112,26 @@

"lastRunId": "no-run-id-provided"

}

},

+{

+ "entityType": "container",

+ "entityUrn": "urn:li:container:8df46c5e3ded05a3122b0015822c0ef0",

+ "changeType": "UPSERT",

+ "aspectName": "globalTags",

+ "aspect": {

+ "json": {

+ "tags": [

+ {

+ "tag": "urn:li:tag:priority:medium:test"

+ }

+ ]

+ }

+ },

+ "systemMetadata": {

+ "lastObserved": 1643871600000,

+ "runId": "bigquery-2022_02_03-07_00_00",

+ "lastRunId": "no-run-id-provided"

+ }

+},

{

"entityType": "container",

"entityUrn": "urn:li:container:8df46c5e3ded05a3122b0015822c0ef0",

@@ -257,6 +277,64 @@

"lastRunId": "no-run-id-provided"

}

},

+{

+ "entityType": "platformResource",

+ "entityUrn": "urn:li:platformResource:7fbbf79fb726422dc2434222a8e30630",

+ "changeType": "UPSERT",

+ "aspectName": "platformResourceInfo",

+ "aspect": {

+ "json": {

+ "resourceType": "BigQueryLabelInfo",

+ "primaryKey": "priority/medium:test",

+ "secondaryKeys": [

+ "urn:li:tag:priority:medium:test"

+ ],

+ "value": {

+ "blob": "{\"datahub_urn\": \"urn:li:tag:priority:medium:test\", \"managed_by_datahub\": false, \"key\": \"priority\", \"value\": \"medium:test\"}",

+ "contentType": "JSON",

+ "schemaType": "JSON",

+ "schemaRef": "BigQueryLabelInfo"

+ }

+ }

+ },

+ "systemMetadata": {

+ "lastObserved": 1643871600000,

+ "runId": "bigquery-2022_02_03-07_00_00-2j2qqv",

+ "lastRunId": "no-run-id-provided"

+ }

+},

+{

+ "entityType": "platformResource",

+ "entityUrn": "urn:li:platformResource:7fbbf79fb726422dc2434222a8e30630",

+ "changeType": "UPSERT",

+ "aspectName": "dataPlatformInstance",

+ "aspect": {

+ "json": {

+ "platform": "urn:li:dataPlatform:bigquery"

+ }

+ },

+ "systemMetadata": {

+ "lastObserved": 1643871600000,

+ "runId": "bigquery-2022_02_03-07_00_00-2j2qqv",

+ "lastRunId": "no-run-id-provided"

+ }

+},

+{

+ "entityType": "platformResource",

+ "entityUrn": "urn:li:platformResource:7fbbf79fb726422dc2434222a8e30630",

+ "changeType": "UPSERT",

+ "aspectName": "status",

+ "aspect": {

+ "json": {

+ "removed": false

+ }

+ },

+ "systemMetadata": {

+ "lastObserved": 1643871600000,

+ "runId": "bigquery-2022_02_03-07_00_00-2j2qqv",

+ "lastRunId": "no-run-id-provided"

+ }

+},

{

"entityType": "platformResource",

"entityUrn": "urn:li:platformResource:99b34051bd90d28d922b0e107277a916",

@@ -1241,6 +1319,22 @@

"lastRunId": "no-run-id-provided"

}

},

+{

+ "entityType": "tag",

+ "entityUrn": "urn:li:tag:priority:medium:test",

+ "changeType": "UPSERT",

+ "aspectName": "tagKey",

+ "aspect": {

+ "json": {

+ "name": "priority:medium:test"

+ }

+ },

+ "systemMetadata": {

+ "lastObserved": 1643871600000,

+ "runId": "bigquery-2022_02_03-07_00_00",

+ "lastRunId": "no-run-id-provided"

+ }

+},

{

"entityType": "tag",

"entityUrn": "urn:li:tag:purchase",

diff --git a/metadata-ingestion/tests/integration/bigquery_v2/test_bigquery.py b/metadata-ingestion/tests/integration/bigquery_v2/test_bigquery.py

index 39cefcb42f360..1f14688636161 100644

--- a/metadata-ingestion/tests/integration/bigquery_v2/test_bigquery.py

+++ b/metadata-ingestion/tests/integration/bigquery_v2/test_bigquery.py

@@ -70,6 +70,7 @@ def recipe(mcp_output_path: str, source_config_override: dict = {}) -> dict:

"include_table_lineage": True,

"include_data_platform_instance": True,

"capture_table_label_as_tag": True,

+ "capture_dataset_label_as_tag": True,

"classification": ClassificationConfig(

enabled=True,

classifiers=[

@@ -141,7 +142,10 @@ def side_effect(*args: Any) -> Optional[PlatformResource]:

get_platform_resource.side_effect = side_effect

get_datasets_for_project_id.return_value = [

- BigqueryDataset(name=dataset_name, location="US")

+ # BigqueryDataset(name=dataset_name, location="US")

+ BigqueryDataset(

+ name=dataset_name, location="US", labels={"priority": "medium:test"}

+ )

]

table_list_item = TableListItem(

diff --git a/metadata-ingestion/tests/unit/sql_parsing/test_sqlglot_patch.py b/metadata-ingestion/tests/unit/sql_parsing/test_sqlglot_patch.py

new file mode 100644

index 0000000000000..dee6d9630c12e

--- /dev/null

+++ b/metadata-ingestion/tests/unit/sql_parsing/test_sqlglot_patch.py

@@ -0,0 +1,48 @@

+from datahub.sql_parsing._sqlglot_patch import SQLGLOT_PATCHED

+

+import time

+

+import pytest

+import sqlglot

+import sqlglot.errors

+import sqlglot.lineage

+import sqlglot.optimizer

+

+from datahub.utilities.cooperative_timeout import (

+ CooperativeTimeoutError,

+ cooperative_timeout,

+)

+from datahub.utilities.perf_timer import PerfTimer

+

+assert SQLGLOT_PATCHED

+

+

+def test_cooperative_timeout_sql() -> None:

+ statement = sqlglot.parse_one("SELECT pg_sleep(3)", dialect="postgres")

+ with pytest.raises(

+ CooperativeTimeoutError

+ ), PerfTimer() as timer, cooperative_timeout(timeout=0.6):

+ while True:

+ # sql() implicitly calls copy(), which is where we check for the timeout.

+ assert statement.sql() is not None

+ time.sleep(0.0001)

+ assert 0.6 <= timer.elapsed_seconds() <= 1.0

+

+

+def test_scope_circular_dependency() -> None:

+ scope = sqlglot.optimizer.build_scope(

+ sqlglot.parse_one("WITH w AS (SELECT * FROM q) SELECT * FROM w")

+ )

+ assert scope is not None

+

+ cte_scope = scope.cte_scopes[0]

+ cte_scope.cte_scopes.append(cte_scope)

+

+ with pytest.raises(sqlglot.errors.OptimizeError, match="circular scope dependency"):

+ list(scope.traverse())

+

+

+def test_lineage_node_subfield() -> None:

+ expression = sqlglot.parse_one("SELECT 1 AS test")

+ node = sqlglot.lineage.Node("test", expression, expression, subfield="subfield") # type: ignore

+ assert node.subfield == "subfield" # type: ignore

diff --git a/metadata-ingestion/tests/unit/utilities/test_unified_diff.py b/metadata-ingestion/tests/unit/utilities/test_unified_diff.py

new file mode 100644

index 0000000000000..05277ec3fa0ab

--- /dev/null

+++ b/metadata-ingestion/tests/unit/utilities/test_unified_diff.py

@@ -0,0 +1,191 @@

+import pytest

+

+from datahub.utilities.unified_diff import (

+ DiffApplyError,

+ Hunk,

+ InvalidDiffError,

+ apply_diff,

+ apply_hunk,

+ find_hunk_start,

+ parse_patch,

+)

+

+

+def test_parse_patch():

+ patch_text = """@@ -1,3 +1,4 @@

+ Line 1

+-Line 2

++Line 2 modified

++Line 2.5

+ Line 3"""

+ hunks = parse_patch(patch_text)

+ assert len(hunks) == 1

+ assert hunks[0].source_start == 1

+ assert hunks[0].source_lines == 3

+ assert hunks[0].target_start == 1

+ assert hunks[0].target_lines == 4

+ assert hunks[0].lines == [

+ (" ", "Line 1"),

+ ("-", "Line 2"),

+ ("+", "Line 2 modified"),

+ ("+", "Line 2.5"),

+ (" ", "Line 3"),

+ ]

+

+

+def test_parse_patch_invalid():

+ with pytest.raises(InvalidDiffError):

+ parse_patch("Invalid patch")

+

+

+def test_parse_patch_bad_header():

+ # A patch with a malformed header

+ bad_patch_text = """@@ -1,3

+ Line 1

+-Line 2

++Line 2 modified

+ Line 3"""

+ with pytest.raises(InvalidDiffError):

+ parse_patch(bad_patch_text)

+

+

+def test_find_hunk_start():

+ source_lines = ["Line 1", "Line 2", "Line 3", "Line 4"]

+ hunk = Hunk(2, 2, 2, 2, [(" ", "Line 2"), (" ", "Line 3")])

+ assert find_hunk_start(source_lines, hunk) == 1

+

+

+def test_find_hunk_start_not_found():

+ source_lines = ["Line 1", "Line 2", "Line 3", "Line 4"]

+ hunk = Hunk(2, 2, 2, 2, [(" ", "Line X"), (" ", "Line Y")])

+ with pytest.raises(DiffApplyError, match="Could not find match for hunk context."):

+ find_hunk_start(source_lines, hunk)

+

+

+def test_apply_hunk_success():

+ result_lines = ["Line 1", "Line 2", "Line 3"]

+ hunk = Hunk(

+ 2,

+ 2,

+ 2,

+ 3,

+ [(" ", "Line 2"), ("-", "Line 3"), ("+", "Line 3 modified"), ("+", "Line 3.5")],

+ )

+ apply_hunk(result_lines, hunk, 0)

+ assert result_lines == ["Line 1", "Line 2", "Line 3 modified", "Line 3.5"]

+

+

+def test_apply_hunk_mismatch():

+ result_lines = ["Line 1", "Line 2", "Line X"]

+ hunk = Hunk(

+ 2, 2, 2, 2, [(" ", "Line 2"), ("-", "Line 3"), ("+", "Line 3 modified")]

+ )

+ with pytest.raises(

+ DiffApplyError, match="Removing line that doesn't exactly match"

+ ):

+ apply_hunk(result_lines, hunk, 0)

+

+

+def test_apply_hunk_context_mismatch():

+ result_lines = ["Line 1", "Line 3"]

+ hunk = Hunk(2, 2, 2, 2, [(" ", "Line 1"), ("+", "Line 2"), (" ", "Line 4")])

+ with pytest.raises(DiffApplyError, match="Context line doesn't exactly match"):

+ apply_hunk(result_lines, hunk, 0)

+

+

+def test_apply_hunk_invalid_prefix():

+ result_lines = ["Line 1", "Line 2", "Line 3"]

+ hunk = Hunk(

+ 2, 2, 2, 2, [(" ", "Line 2"), ("*", "Line 3"), ("+", "Line 3 modified")]

+ )

+ with pytest.raises(DiffApplyError, match="Invalid line prefix"):

+ apply_hunk(result_lines, hunk, 0)

+

+

+def test_apply_hunk_end_of_file():

+ result_lines = ["Line 1", "Line 2"]

+ hunk = Hunk(

+ 2, 2, 2, 3, [(" ", "Line 2"), ("-", "Line 3"), ("+", "Line 3 modified")]

+ )

+ with pytest.raises(

+ DiffApplyError, match="Found context or deletions after end of file"

+ ):

+ apply_hunk(result_lines, hunk, 0)

+

+

+def test_apply_hunk_context_beyond_end_of_file():

+ result_lines = ["Line 1", "Line 3"]

+ hunk = Hunk(

+ 2, 2, 2, 3, [(" ", "Line 1"), ("+", "Line 2"), (" ", "Line 3"), (" ", "Line 4")]

+ )

+ with pytest.raises(

+ DiffApplyError, match="Found context or deletions after end of file"

+ ):

+ apply_hunk(result_lines, hunk, 0)

+

+

+def test_apply_hunk_remove_non_existent_line():

+ result_lines = ["Line 1", "Line 2", "Line 4"]

+ hunk = Hunk(

+ 2, 2, 2, 3, [(" ", "Line 2"), ("-", "Line 3"), ("+", "Line 3 modified")]

+ )

+ with pytest.raises(

+ DiffApplyError, match="Removing line that doesn't exactly match"

+ ):

+ apply_hunk(result_lines, hunk, 0)

+

+

+def test_apply_hunk_addition_beyond_end_of_file():

+ result_lines = ["Line 1", "Line 2"]

+ hunk = Hunk(

+ 2, 2, 2, 3, [(" ", "Line 2"), ("+", "Line 3 modified"), ("+", "Line 4")]

+ )

+ apply_hunk(result_lines, hunk, 0)

+ assert result_lines == ["Line 1", "Line 2", "Line 3 modified", "Line 4"]

+

+

+def test_apply_diff():

+ source = """Line 1

+Line 2

+Line 3

+Line 4"""

+ patch = """@@ -1,4 +1,5 @@

+ Line 1

+-Line 2

++Line 2 modified

++Line 2.5

+ Line 3

+ Line 4"""

+ result = apply_diff(source, patch)

+ expected = """Line 1

+Line 2 modified

+Line 2.5

+Line 3

+Line 4

+"""

+ assert result == expected

+

+

+def test_apply_diff_invalid_patch():

+ source = "Line 1\nLine 2\n"

+ patch = "Invalid patch"

+ with pytest.raises(InvalidDiffError):

+ apply_diff(source, patch)

+

+

+def test_apply_diff_unapplicable_patch():

+ source = "Line 1\nLine 2\n"

+ patch = "@@ -1,2 +1,2 @@\n Line 1\n-Line X\n+Line 2 modified\n"

+ with pytest.raises(DiffApplyError):

+ apply_diff(source, patch)

+

+

+def test_apply_diff_add_to_empty_file():

+ source = ""

+ patch = """\

+@@ -1,0 +1,1 @@

++Line 1

++Line 2

+"""

+ result = apply_diff(source, patch)

+ assert result == "Line 1\nLine 2\n"

diff --git a/metadata-ingestion/tests/unit/utilities/test_utilities.py b/metadata-ingestion/tests/unit/utilities/test_utilities.py

index fc2aa27f70b43..68da1bc1c01be 100644

--- a/metadata-ingestion/tests/unit/utilities/test_utilities.py

+++ b/metadata-ingestion/tests/unit/utilities/test_utilities.py

@@ -1,6 +1,7 @@

import doctest

from datahub.utilities.delayed_iter import delayed_iter

+from datahub.utilities.is_pytest import is_pytest_running

from datahub.utilities.sql_parser import SqlLineageSQLParser

@@ -295,3 +296,7 @@ def test_logging_name_extraction():

).attempted

> 0

)

+

+

+def test_is_pytest_running() -> None:

+ assert is_pytest_running()

diff --git a/metadata-io/metadata-io-api/src/main/java/com/linkedin/metadata/entity/EntityAspect.java b/metadata-io/metadata-io-api/src/main/java/com/linkedin/metadata/entity/EntityAspect.java

index cba770d841b94..976db4133c004 100644

--- a/metadata-io/metadata-io-api/src/main/java/com/linkedin/metadata/entity/EntityAspect.java

+++ b/metadata-io/metadata-io-api/src/main/java/com/linkedin/metadata/entity/EntityAspect.java

@@ -9,6 +9,7 @@

import com.linkedin.metadata.aspect.SystemAspect;

import com.linkedin.metadata.models.AspectSpec;

import com.linkedin.metadata.models.EntitySpec;

+import com.linkedin.mxe.GenericAspect;

import com.linkedin.mxe.SystemMetadata;

import java.sql.Timestamp;

import javax.annotation.Nonnull;

@@ -65,7 +66,7 @@ public static class EntitySystemAspect implements SystemAspect {

@Nullable private final RecordTemplate recordTemplate;

@Nonnull private final EntitySpec entitySpec;

- @Nonnull private final AspectSpec aspectSpec;

+ @Nullable private final AspectSpec aspectSpec;

@Nonnull

public String getUrnRaw() {

@@ -151,7 +152,7 @@ private EntityAspect.EntitySystemAspect build() {

public EntityAspect.EntitySystemAspect build(

@Nonnull EntitySpec entitySpec,

- @Nonnull AspectSpec aspectSpec,

+ @Nullable AspectSpec aspectSpec,

@Nonnull EntityAspect entityAspect) {

this.entityAspect = entityAspect;

this.urn = UrnUtils.getUrn(entityAspect.getUrn());

@@ -159,7 +160,11 @@ public EntityAspect.EntitySystemAspect build(

if (entityAspect.getMetadata() != null) {

this.recordTemplate =

RecordUtils.toRecordTemplate(

- aspectSpec.getDataTemplateClass(), entityAspect.getMetadata());

+ (Class)

+ (aspectSpec == null

+ ? GenericAspect.class

+ : aspectSpec.getDataTemplateClass()),

+ entityAspect.getMetadata());

}

return new EntitySystemAspect(entityAspect, urn, recordTemplate, entitySpec, aspectSpec);

diff --git a/metadata-io/metadata-io-api/src/main/java/com/linkedin/metadata/entity/ebean/batch/AspectsBatchImpl.java b/metadata-io/metadata-io-api/src/main/java/com/linkedin/metadata/entity/ebean/batch/AspectsBatchImpl.java

index 1fba842631720..7f56abe64f9a7 100644

--- a/metadata-io/metadata-io-api/src/main/java/com/linkedin/metadata/entity/ebean/batch/AspectsBatchImpl.java

+++ b/metadata-io/metadata-io-api/src/main/java/com/linkedin/metadata/entity/ebean/batch/AspectsBatchImpl.java

@@ -3,6 +3,7 @@

import com.linkedin.common.AuditStamp;

import com.linkedin.data.template.RecordTemplate;

import com.linkedin.events.metadata.ChangeType;

+import com.linkedin.metadata.aspect.AspectRetriever;

import com.linkedin.metadata.aspect.RetrieverContext;

import com.linkedin.metadata.aspect.SystemAspect;

import com.linkedin.metadata.aspect.batch.AspectsBatch;

@@ -11,6 +12,7 @@

import com.linkedin.metadata.aspect.batch.MCPItem;

import com.linkedin.metadata.aspect.plugins.hooks.MutationHook;

import com.linkedin.metadata.aspect.plugins.validation.ValidationExceptionCollection;

+import com.linkedin.metadata.models.EntitySpec;

import com.linkedin.mxe.MetadataChangeProposal;

import com.linkedin.util.Pair;

import java.util.Collection;

@@ -114,19 +116,7 @@ private Stream proposedItemsToChangeItemStream(List {

- if (ChangeType.PATCH.equals(mcpItem.getChangeType())) {

- return PatchItemImpl.PatchItemImplBuilder.build(

- mcpItem.getMetadataChangeProposal(),

- mcpItem.getAuditStamp(),

- retrieverContext.getAspectRetriever().getEntityRegistry());

- }

- return ChangeItemImpl.ChangeItemImplBuilder.build(

- mcpItem.getMetadataChangeProposal(),

- mcpItem.getAuditStamp(),

- retrieverContext.getAspectRetriever());

- });

+ .map(mcpItem -> patchDiscriminator(mcpItem, retrieverContext.getAspectRetriever()));

List mutatedItems =

applyProposalMutationHooks(proposedItems, retrieverContext).collect(Collectors.toList());

Stream proposedItemsToChangeItems =

@@ -134,12 +124,7 @@ private Stream proposedItemsToChangeItemStream(List mcpItem.getMetadataChangeProposal() != null)

// Filter on proposed items again to avoid applying builder to Patch Item side effects

.filter(mcpItem -> mcpItem instanceof ProposedItem)

- .map(

- mcpItem ->

- ChangeItemImpl.ChangeItemImplBuilder.build(

- mcpItem.getMetadataChangeProposal(),

- mcpItem.getAuditStamp(),

- retrieverContext.getAspectRetriever()));

+ .map(mcpItem -> patchDiscriminator(mcpItem, retrieverContext.getAspectRetriever()));

Stream sideEffectItems =

mutatedItems.stream().filter(mcpItem -> !(mcpItem instanceof ProposedItem));

Stream combinedChangeItems =

@@ -147,6 +132,17 @@ private Stream proposedItemsToChangeItemStream(List mcps,

AuditStamp auditStamp,

RetrieverContext retrieverContext) {

+ return mcps(mcps, auditStamp, retrieverContext, false);

+ }

+

+ public AspectsBatchImplBuilder mcps(

+ Collection mcps,

+ AuditStamp auditStamp,

+ RetrieverContext retrieverContext,

+ boolean alternateMCPValidation) {

retrieverContext(retrieverContext);

items(

@@ -171,6 +175,18 @@ public AspectsBatchImplBuilder mcps(

.map(

mcp -> {

try {

+ if (alternateMCPValidation) {

+ EntitySpec entitySpec =

+ retrieverContext

+ .getAspectRetriever()

+ .getEntityRegistry()

+ .getEntitySpec(mcp.getEntityType());

+ return ProposedItem.builder()

+ .metadataChangeProposal(mcp)

+ .entitySpec(entitySpec)

+ .auditStamp(auditStamp)

+ .build();

+ }

if (mcp.getChangeType().equals(ChangeType.PATCH)) {

return PatchItemImpl.PatchItemImplBuilder.build(

mcp,

diff --git a/metadata-io/metadata-io-api/src/main/java/com/linkedin/metadata/entity/ebean/batch/PatchItemImpl.java b/metadata-io/metadata-io-api/src/main/java/com/linkedin/metadata/entity/ebean/batch/PatchItemImpl.java

index 43a7d00248a22..ec0a8422e3c4a 100644

--- a/metadata-io/metadata-io-api/src/main/java/com/linkedin/metadata/entity/ebean/batch/PatchItemImpl.java

+++ b/metadata-io/metadata-io-api/src/main/java/com/linkedin/metadata/entity/ebean/batch/PatchItemImpl.java

@@ -203,7 +203,7 @@ public static PatchItemImpl build(

.build(entityRegistry);

}

- private static JsonPatch convertToJsonPatch(MetadataChangeProposal mcp) {

+ public static JsonPatch convertToJsonPatch(MetadataChangeProposal mcp) {

JsonNode json;

try {

return Json.createPatch(

diff --git a/metadata-io/metadata-io-api/src/main/java/com/linkedin/metadata/entity/ebean/batch/ProposedItem.java b/metadata-io/metadata-io-api/src/main/java/com/linkedin/metadata/entity/ebean/batch/ProposedItem.java

index 132a731d278af..88187ef159f23 100644

--- a/metadata-io/metadata-io-api/src/main/java/com/linkedin/metadata/entity/ebean/batch/ProposedItem.java

+++ b/metadata-io/metadata-io-api/src/main/java/com/linkedin/metadata/entity/ebean/batch/ProposedItem.java

@@ -9,8 +9,10 @@

import com.linkedin.metadata.models.EntitySpec;

import com.linkedin.metadata.utils.EntityKeyUtils;

import com.linkedin.metadata.utils.GenericRecordUtils;

+import com.linkedin.metadata.utils.SystemMetadataUtils;

import com.linkedin.mxe.MetadataChangeProposal;

import com.linkedin.mxe.SystemMetadata;

+import java.util.Objects;

import javax.annotation.Nonnull;

import javax.annotation.Nullable;

import lombok.Builder;

@@ -83,4 +85,18 @@ public SystemMetadata getSystemMetadata() {

public ChangeType getChangeType() {

return metadataChangeProposal.getChangeType();

}

+

+ public static class ProposedItemBuilder {

+ public ProposedItem build() {

+ // Ensure systemMetadata

+ return new ProposedItem(

+ Objects.requireNonNull(this.metadataChangeProposal)

+ .setSystemMetadata(

+ SystemMetadataUtils.generateSystemMetadataIfEmpty(

+ this.metadataChangeProposal.getSystemMetadata())),

+ this.auditStamp,

+ this.entitySpec,

+ this.aspectSpec);

+ }

+ }

}

diff --git a/metadata-io/src/main/java/com/linkedin/metadata/aspect/hooks/IgnoreUnknownMutator.java b/metadata-io/src/main/java/com/linkedin/metadata/aspect/hooks/IgnoreUnknownMutator.java

index 8d6bdffceacb9..f5cc421042e36 100644

--- a/metadata-io/src/main/java/com/linkedin/metadata/aspect/hooks/IgnoreUnknownMutator.java

+++ b/metadata-io/src/main/java/com/linkedin/metadata/aspect/hooks/IgnoreUnknownMutator.java

@@ -1,8 +1,14 @@

package com.linkedin.metadata.aspect.hooks;

+import static com.linkedin.events.metadata.ChangeType.CREATE;

+import static com.linkedin.events.metadata.ChangeType.CREATE_ENTITY;

+import static com.linkedin.events.metadata.ChangeType.UPDATE;

+import static com.linkedin.events.metadata.ChangeType.UPSERT;

+

import com.datahub.util.exception.ModelConversionException;

import com.linkedin.data.template.RecordTemplate;

import com.linkedin.data.transform.filter.request.MaskTree;

+import com.linkedin.events.metadata.ChangeType;

import com.linkedin.metadata.aspect.RetrieverContext;

import com.linkedin.metadata.aspect.batch.MCPItem;

import com.linkedin.metadata.aspect.plugins.config.AspectPluginConfig;

@@ -14,6 +20,7 @@

import com.linkedin.mxe.GenericAspect;

import com.linkedin.restli.internal.server.util.RestUtils;

import java.util.Collection;

+import java.util.Set;

import java.util.stream.Stream;

import javax.annotation.Nonnull;

import lombok.Getter;

@@ -27,6 +34,11 @@

@Getter

@Accessors(chain = true)

public class IgnoreUnknownMutator extends MutationHook {

+ private static final Set SUPPORTED_MIME_TYPES =

+ Set.of("application/json", "application/json-patch+json");

+ private static final Set MUTATION_TYPES =

+ Set.of(CREATE, CREATE_ENTITY, UPSERT, UPDATE);

+

@Nonnull private AspectPluginConfig config;

@Override

@@ -42,8 +54,8 @@ protected Stream proposalMutation(

item.getAspectSpec().getName());

return false;

}

- if (!"application/json"

- .equals(item.getMetadataChangeProposal().getAspect().getContentType())) {

+ if (!SUPPORTED_MIME_TYPES.contains(

+ item.getMetadataChangeProposal().getAspect().getContentType())) {

log.warn(

"Dropping unknown content type {} for aspect {} on entity {}",

item.getMetadataChangeProposal().getAspect().getContentType(),

@@ -55,25 +67,27 @@ protected Stream proposalMutation(

})

.peek(

item -> {

- try {

- AspectSpec aspectSpec = item.getEntitySpec().getAspectSpec(item.getAspectName());

- GenericAspect aspect = item.getMetadataChangeProposal().getAspect();

- RecordTemplate recordTemplate =

- GenericRecordUtils.deserializeAspect(

- aspect.getValue(), aspect.getContentType(), aspectSpec);

+ if (MUTATION_TYPES.contains(item.getChangeType())) {

try {

- ValidationApiUtils.validateOrThrow(recordTemplate);

- } catch (ValidationException | ModelConversionException e) {

- log.warn(

- "Failed to validate aspect. Coercing aspect {} on entity {}",

- item.getAspectName(),

- item.getEntitySpec().getName());

- RestUtils.trimRecordTemplate(recordTemplate, new MaskTree(), false);

- item.getMetadataChangeProposal()

- .setAspect(GenericRecordUtils.serializeAspect(recordTemplate));

+ AspectSpec aspectSpec = item.getEntitySpec().getAspectSpec(item.getAspectName());

+ GenericAspect aspect = item.getMetadataChangeProposal().getAspect();

+ RecordTemplate recordTemplate =

+ GenericRecordUtils.deserializeAspect(

+ aspect.getValue(), aspect.getContentType(), aspectSpec);

+ try {

+ ValidationApiUtils.validateOrThrow(recordTemplate);

+ } catch (ValidationException | ModelConversionException e) {

+ log.warn(

+ "Failed to validate aspect. Coercing aspect {} on entity {}",

+ item.getAspectName(),

+ item.getEntitySpec().getName());

+ RestUtils.trimRecordTemplate(recordTemplate, new MaskTree(), false);

+ item.getMetadataChangeProposal()

+ .setAspect(GenericRecordUtils.serializeAspect(recordTemplate));

+ }

+ } catch (Exception e) {

+ throw new RuntimeException(e);

}

- } catch (Exception e) {

- throw new RuntimeException(e);

}

});

}

diff --git a/metadata-io/src/main/java/com/linkedin/metadata/client/JavaEntityClient.java b/metadata-io/src/main/java/com/linkedin/metadata/client/JavaEntityClient.java

index 60a991c19ae8b..8b625b3ae2289 100644

--- a/metadata-io/src/main/java/com/linkedin/metadata/client/JavaEntityClient.java

+++ b/metadata-io/src/main/java/com/linkedin/metadata/client/JavaEntityClient.java

@@ -23,7 +23,6 @@

import com.linkedin.metadata.aspect.EnvelopedAspectArray;

import com.linkedin.metadata.aspect.VersionedAspect;

import com.linkedin.metadata.aspect.batch.AspectsBatch;

-import com.linkedin.metadata.aspect.batch.BatchItem;

import com.linkedin.metadata.browse.BrowseResult;

import com.linkedin.metadata.browse.BrowseResultV2;

import com.linkedin.metadata.entity.DeleteEntityService;

@@ -56,6 +55,7 @@

import com.linkedin.parseq.retry.backoff.BackoffPolicy;

import com.linkedin.parseq.retry.backoff.ExponentialBackoff;

import com.linkedin.r2.RemoteInvocationException;

+import com.linkedin.util.Pair;

import io.datahubproject.metadata.context.OperationContext;

import io.opentelemetry.extension.annotations.WithSpan;

import java.net.URISyntaxException;

@@ -762,38 +762,41 @@ public List batchIngestProposals(

AspectsBatch batch =

AspectsBatchImpl.builder()

- .mcps(metadataChangeProposals, auditStamp, opContext.getRetrieverContext().get())

+ .mcps(

+ metadataChangeProposals,

+ auditStamp,

+ opContext.getRetrieverContext().get(),

+ opContext.getValidationContext().isAlternateValidation())

.build();

- Map> resultMap =

+ Map, List> resultMap =

entityService.ingestProposal(opContext, batch, async).stream()

- .collect(Collectors.groupingBy(IngestResult::getRequest));

-

- // Update runIds

- batch.getItems().stream()

- .filter(resultMap::containsKey)

- .forEach(

- requestItem -> {

- List results = resultMap.get(requestItem);

- Optional resultUrn =

- results.stream().map(IngestResult::getUrn).filter(Objects::nonNull).findFirst();

- resultUrn.ifPresent(

- urn -> tryIndexRunId(opContext, urn, requestItem.getSystemMetadata()));

- });

+ .collect(

+ Collectors.groupingBy(

+ result ->

+ Pair.of(

+ result.getRequest().getUrn(), result.getRequest().getAspectName())));

// Preserve ordering

return batch.getItems().stream()

.map(

requestItem -> {

- if (resultMap.containsKey(requestItem)) {

- List results = resultMap.get(requestItem);

- return results.stream()

- .filter(r -> r.getUrn() != null)

- .findFirst()

- .map(r -> r.getUrn().toString())

- .orElse(null);

- }

- return null;

+ // Urns generated

+ List urnsForRequest =

+ resultMap

+ .getOrDefault(

+ Pair.of(requestItem.getUrn(), requestItem.getAspectName()), List.of())

+ .stream()

+ .map(IngestResult::getUrn)

+ .filter(Objects::nonNull)

+ .distinct()

+ .collect(Collectors.toList());

+

+ // Update runIds

+ urnsForRequest.forEach(

+ urn -> tryIndexRunId(opContext, urn, requestItem.getSystemMetadata()));

+

+ return urnsForRequest.isEmpty() ? null : urnsForRequest.get(0).toString();

})

.collect(Collectors.toList());

}

diff --git a/metadata-io/src/main/java/com/linkedin/metadata/entity/EntityServiceImpl.java b/metadata-io/src/main/java/com/linkedin/metadata/entity/EntityServiceImpl.java

index 00feb547ca330..9f608be4f3d18 100644

--- a/metadata-io/src/main/java/com/linkedin/metadata/entity/EntityServiceImpl.java

+++ b/metadata-io/src/main/java/com/linkedin/metadata/entity/EntityServiceImpl.java

@@ -1173,15 +1173,15 @@ public IngestResult ingestProposal(

* @return an {@link IngestResult} containing the results

*/

@Override

- public Set ingestProposal(

+ public List ingestProposal(

@Nonnull OperationContext opContext, AspectsBatch aspectsBatch, final boolean async) {

Stream timeseriesIngestResults =

ingestTimeseriesProposal(opContext, aspectsBatch, async);

Stream nonTimeseriesIngestResults =

async ? ingestProposalAsync(aspectsBatch) : ingestProposalSync(opContext, aspectsBatch);

- return Stream.concat(timeseriesIngestResults, nonTimeseriesIngestResults)

- .collect(Collectors.toSet());

+ return Stream.concat(nonTimeseriesIngestResults, timeseriesIngestResults)

+ .collect(Collectors.toList());

}

/**

@@ -1192,11 +1192,13 @@ public Set ingestProposal(

*/

private Stream ingestTimeseriesProposal(

@Nonnull OperationContext opContext, AspectsBatch aspectsBatch, final boolean async) {

+

List unsupported =

aspectsBatch.getItems().stream()

.filter(

item ->

- item.getAspectSpec().isTimeseries()

+ item.getAspectSpec() != null

+ && item.getAspectSpec().isTimeseries()

&& item.getChangeType() != ChangeType.UPSERT)

.collect(Collectors.toList());

if (!unsupported.isEmpty()) {

@@ -1212,7 +1214,7 @@ private Stream ingestTimeseriesProposal(

// Create default non-timeseries aspects for timeseries aspects

List timeseriesKeyAspects =

aspectsBatch.getMCPItems().stream()

- .filter(item -> item.getAspectSpec().isTimeseries())

+ .filter(item -> item.getAspectSpec() != null && item.getAspectSpec().isTimeseries())

.map(

item ->

ChangeItemImpl.builder()

@@ -1238,10 +1240,10 @@ private Stream ingestTimeseriesProposal(

}

// Emit timeseries MCLs

- List, Boolean>>>> timeseriesResults =

+ List, Boolean>>>> timeseriesResults =

aspectsBatch.getItems().stream()

- .filter(item -> item.getAspectSpec().isTimeseries())

- .map(item -> (ChangeItemImpl) item)

+ .filter(item -> item.getAspectSpec() != null && item.getAspectSpec().isTimeseries())

+ .map(item -> (MCPItem) item)

.map(

item ->

Pair.of(

@@ -1272,7 +1274,7 @@ private Stream ingestTimeseriesProposal(

}

});

- ChangeItemImpl request = result.getFirst();

+ MCPItem request = result.getFirst();

return IngestResult.builder()

.urn(request.getUrn())

.request(request)

@@ -1292,7 +1294,7 @@ private Stream ingestTimeseriesProposal(

private Stream ingestProposalAsync(AspectsBatch aspectsBatch) {

List nonTimeseries =

aspectsBatch.getMCPItems().stream()

- .filter(item -> !item.getAspectSpec().isTimeseries())

+ .filter(item -> item.getAspectSpec() == null || !item.getAspectSpec().isTimeseries())

.collect(Collectors.toList());

List> futures =

@@ -1328,6 +1330,7 @@ private Stream ingestProposalAsync(AspectsBatch aspectsBatch) {

private Stream ingestProposalSync(

@Nonnull OperationContext opContext, AspectsBatch aspectsBatch) {

+

AspectsBatchImpl nonTimeseries =

AspectsBatchImpl.builder()

.retrieverContext(aspectsBatch.getRetrieverContext())

diff --git a/metadata-io/src/main/java/com/linkedin/metadata/search/elasticsearch/query/filter/BaseQueryFilterRewriter.java b/metadata-io/src/main/java/com/linkedin/metadata/search/elasticsearch/query/filter/BaseQueryFilterRewriter.java

index d545f60a1ee8f..367705d369c7c 100644