diff --git a/datahub-web-react/package.json b/datahub-web-react/package.json

index ca53932eba5189..f641706c7661e8 100644

--- a/datahub-web-react/package.json

+++ b/datahub-web-react/package.json

@@ -89,7 +89,7 @@

"test": "vitest",

"generate": "graphql-codegen --config codegen.yml",

"lint": "eslint . --ext .ts,.tsx --quiet && yarn format-check && yarn type-check",

- "lint-fix": "eslint '*/**/*.{ts,tsx}' --quiet --fix",

+ "lint-fix": "eslint '*/**/*.{ts,tsx}' --quiet --fix && yarn format",

"format-check": "prettier --check src",

"format": "prettier --write src",

"type-check": "tsc --noEmit",

diff --git a/docs-website/sidebars.js b/docs-website/sidebars.js

index e58dbd4d99b0b3..8e48062af6d4d8 100644

--- a/docs-website/sidebars.js

+++ b/docs-website/sidebars.js

@@ -209,11 +209,6 @@ module.exports = {

},

items: [

"docs/managed-datahub/welcome-acryl",

- {

- type: "doc",

- id: "docs/managed-datahub/saas-slack-setup",

- className: "saasOnly",

- },

{

type: "doc",

id: "docs/managed-datahub/approval-workflows",

@@ -247,6 +242,20 @@ module.exports = {

},

],

},

+ {

+ Slack: [

+ {

+ type: "doc",

+ id: "docs/managed-datahub/slack/saas-slack-setup",

+ className: "saasOnly",

+ },

+ {

+ type: "doc",

+ id: "docs/managed-datahub/slack/saas-slack-app",

+ className: "saasOnly",

+ },

+ ],

+ },

{

"Operator Guide": [

{

diff --git a/docs/actions/actions/slack.md b/docs/actions/actions/slack.md

index bdea1c479e8aa6..a89439825d2da1 100644

--- a/docs/actions/actions/slack.md

+++ b/docs/actions/actions/slack.md

@@ -138,7 +138,7 @@ In the next steps, we'll show you how to configure the Slack Action based on the

#### Managed DataHub

-Head over to the [Configuring Notifications](../../managed-datahub/saas-slack-setup.md#configuring-notifications) section in the Managed DataHub guide to configure Slack notifications for your Managed DataHub instance.

+Head over to the [Configuring Notifications](../../managed-datahub/slack/saas-slack-setup.md#configuring-notifications) section in the Managed DataHub guide to configure Slack notifications for your Managed DataHub instance.

#### Quickstart

diff --git a/docs/incidents/incidents.md b/docs/incidents/incidents.md

index 578571289cd2ea..41b4df10b78281 100644

--- a/docs/incidents/incidents.md

+++ b/docs/incidents/incidents.md

@@ -427,5 +427,5 @@ These notifications are also able to tag the immediate asset's owners, along wit

-To do so, simply follow the [Slack Integration Guide](docs/managed-datahub/saas-slack-setup.md) and contact your Acryl customer success team to enable the feature!

+To do so, simply follow the [Slack Integration Guide](docs/managed-datahub/slack/saas-slack-setup.md) and contact your Acryl customer success team to enable the feature!

diff --git a/docs/managed-datahub/managed-datahub-overview.md b/docs/managed-datahub/managed-datahub-overview.md

index 087238097dd9f4..4efc96eaf17a7c 100644

--- a/docs/managed-datahub/managed-datahub-overview.md

+++ b/docs/managed-datahub/managed-datahub-overview.md

@@ -56,7 +56,8 @@ know.

| Monitor Freshness SLAs | ❌ | ✅ |

| Monitor Table Schemas | ❌ | ✅ |

| Monitor Table Volume | ❌ | ✅ |

-| Validate Table Columns | ❌ | ✅ |

+| Monitor Table Column Integrity | ❌ | ✅ |

+| Monitor Table with Custom SQL | ❌ | ✅ |

| Receive Notifications via Email & Slack | ❌ | ✅ |

| Manage Data Incidents via Slack | ❌ | ✅ |

| View Data Health Dashboard | ❌ | ✅ |

@@ -115,7 +116,7 @@ Fill out

## Additional Integrations

-- [Slack Integration](docs/managed-datahub/saas-slack-setup.md)

+- [Slack Integration](docs/managed-datahub/slack/saas-slack-setup.md)

- [Remote Ingestion Executor](docs/managed-datahub/operator-guide/setting-up-remote-ingestion-executor.md)

- [AWS Privatelink](docs/managed-datahub/integrations/aws-privatelink.md)

- [AWS Eventbridge](docs/managed-datahub/operator-guide/setting-up-events-api-on-aws-eventbridge.md)

diff --git a/docs/managed-datahub/observe/assertions.md b/docs/managed-datahub/observe/assertions.md

index b74d524dff1bd7..e63d051a0096b2 100644

--- a/docs/managed-datahub/observe/assertions.md

+++ b/docs/managed-datahub/observe/assertions.md

@@ -38,7 +38,7 @@ If you opt for a 3rd party tool, it will be your responsibility to ensure the as

## Alerts

-Beyond the ability to see the results of the assertion checks (and history of the results) both on the physical asset’s page in the DataHub UI and as the result of DataHub API calls, you can also get notified via [slack messages](/docs/managed-datahub/saas-slack-setup.md) (DMs or to a team channel) based on your [subscription](https://youtu.be/VNNZpkjHG_I?t=79) to an assertion change event. In the future, we’ll also provide the ability to subscribe directly to contracts.

+Beyond the ability to see the results of the assertion checks (and history of the results) both on the physical asset’s page in the DataHub UI and as the result of DataHub API calls, you can also get notified via [Slack messages](/docs/managed-datahub/slack/saas-slack-setup.md) (DMs or to a team channel) based on your [subscription](https://youtu.be/VNNZpkjHG_I?t=79) to an assertion change event. In the future, we’ll also provide the ability to subscribe directly to contracts.

With Acryl Observe, you can get the Assertion Change event by getting API events via [AWS EventBridge](/docs/managed-datahub/operator-guide/setting-up-events-api-on-aws-eventbridge.md) (the availability and simplicity of setup of each solution dependent on your current Acryl setup – chat with your Acryl representative to learn more).

diff --git a/docs/managed-datahub/saas-slack-setup.md b/docs/managed-datahub/saas-slack-setup.md

deleted file mode 100644

index 1b98f3a30773a0..00000000000000

--- a/docs/managed-datahub/saas-slack-setup.md

+++ /dev/null

@@ -1,113 +0,0 @@

-import FeatureAvailability from '@site/src/components/FeatureAvailability';

-

-# Configure Slack For Notifications

-

-

-

-## Install the DataHub Slack App into your Slack workspace

-

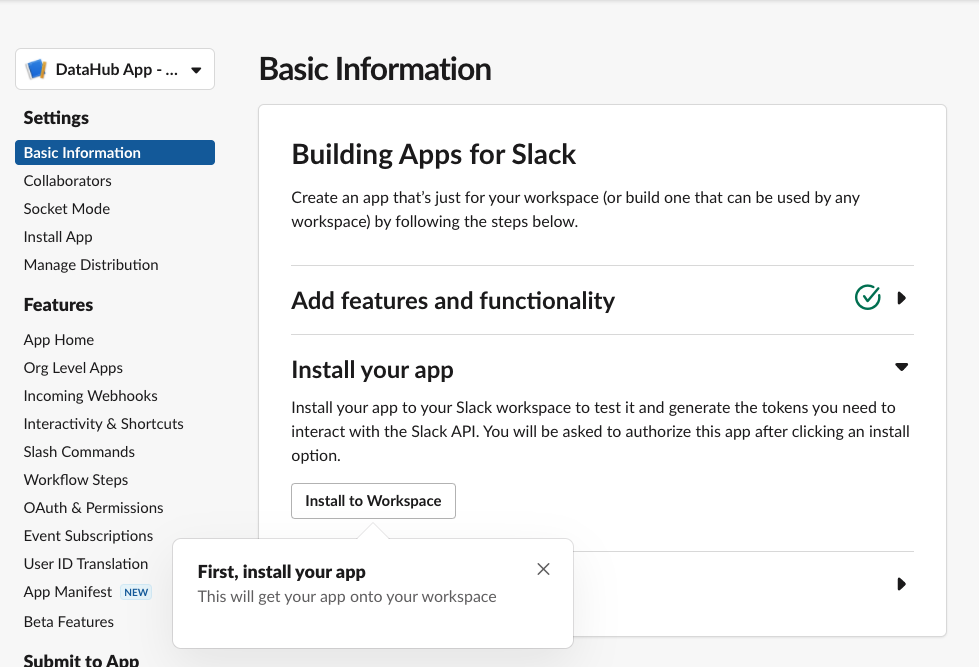

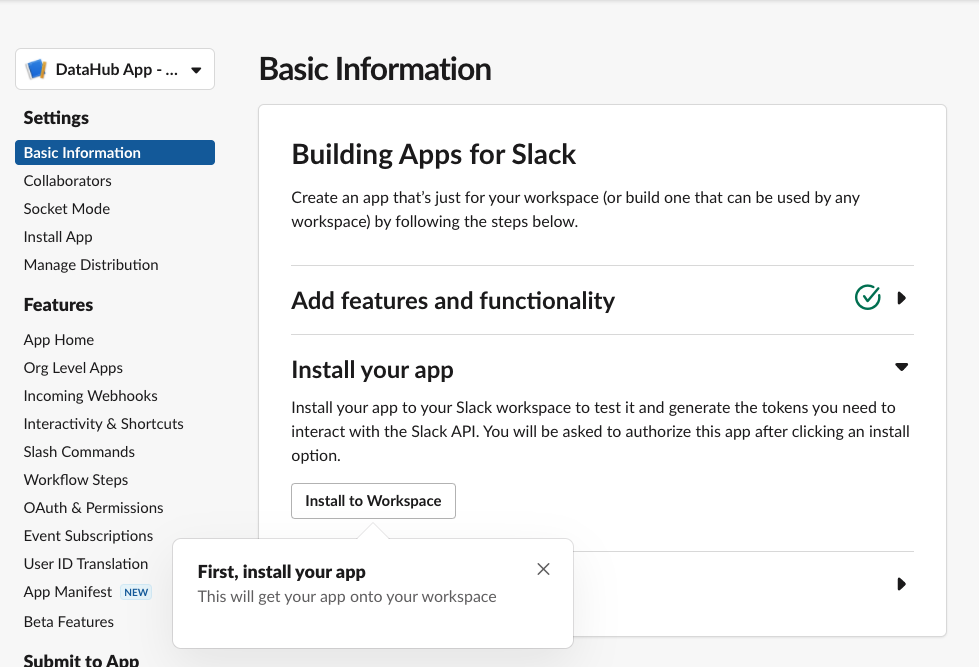

-The following steps should be performed by a Slack Workspace Admin.

-- Navigate to https://api.slack.com/apps/

-- Click Create New App

-- Use “From an app manifest” option

-- Select your workspace

-- Paste this Manifest in YAML. Suggest changing name and `display_name` to be `DataHub App YOUR_TEAM_NAME` but not required. This name will show up in your slack workspace

-```yml

-display_information:

- name: DataHub App

- description: An app to integrate DataHub with Slack

- background_color: "#000000"

-features:

- bot_user:

- display_name: DataHub App

- always_online: false

-oauth_config:

- scopes:

- bot:

- - channels:read

- - chat:write

- - commands

- - groups:read

- - im:read

- - mpim:read

- - team:read

- - users:read

- - users:read.email

-settings:

- org_deploy_enabled: false

- socket_mode_enabled: false

- token_rotation_enabled: false

-```

-

-Confirm you see the Basic Information Tab

-

-

-

-- Click **Install to Workspace**

-- It will show you permissions the Slack App is asking for, what they mean and a default channel in which you want to add the slack app

- - Note that the Slack App will only be able to post in channels that the app has been added to. This is made clear by slack’s Authentication screen also.

-- Select the channel you'd like notifications to go to and click **Allow**

-- Go to DataHub App page

- - You can find your workspace's list of apps at https://api.slack.com/apps/

-

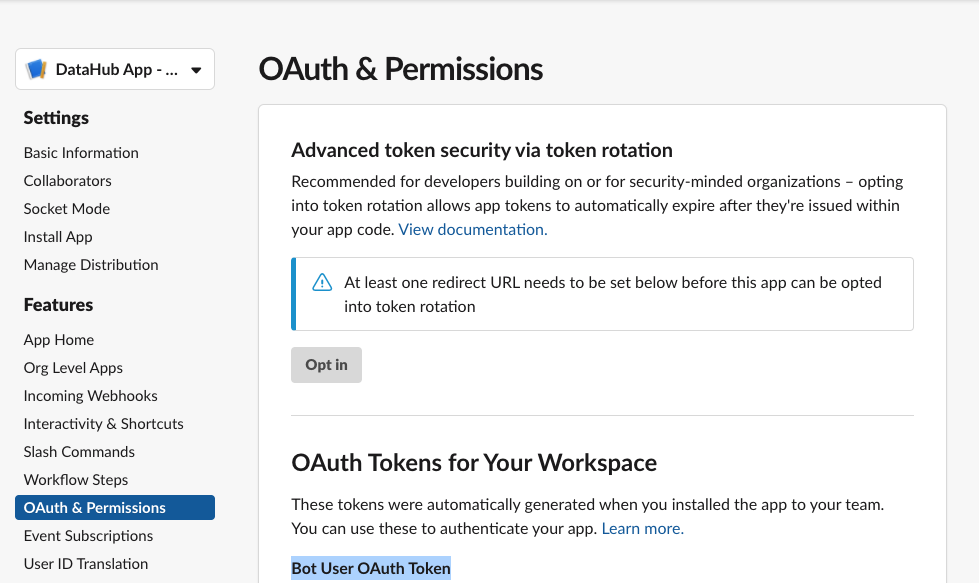

-## Generating a Bot Token

-

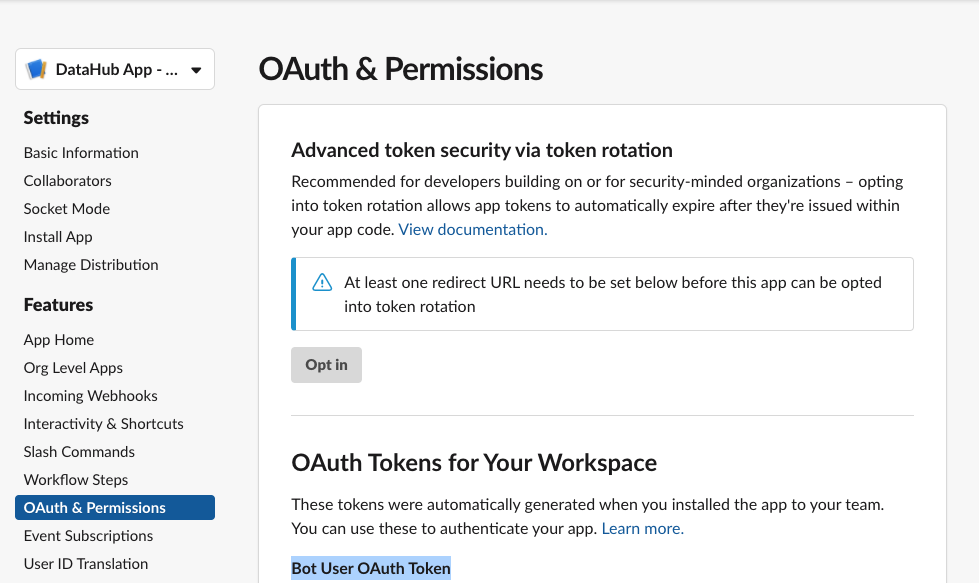

-- Go to **OAuth & Permissions** Tab

-

-

-

-Here you'll find a “Bot User OAuth Token” which DataHub will need to communicate with your slack through the bot.

-In the next steps, we'll show you how to configure the Slack Integration inside of Acryl DataHub.

-

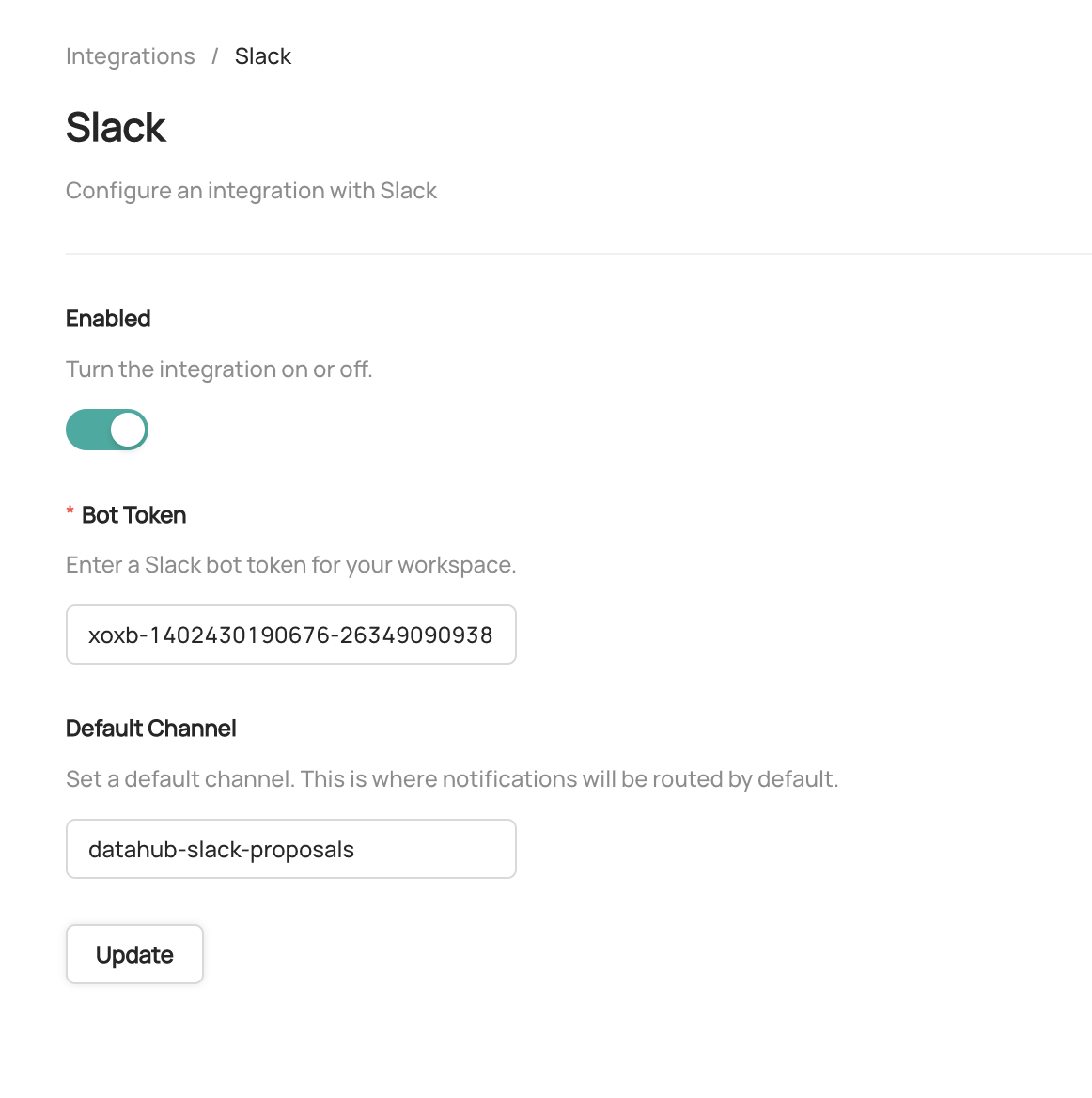

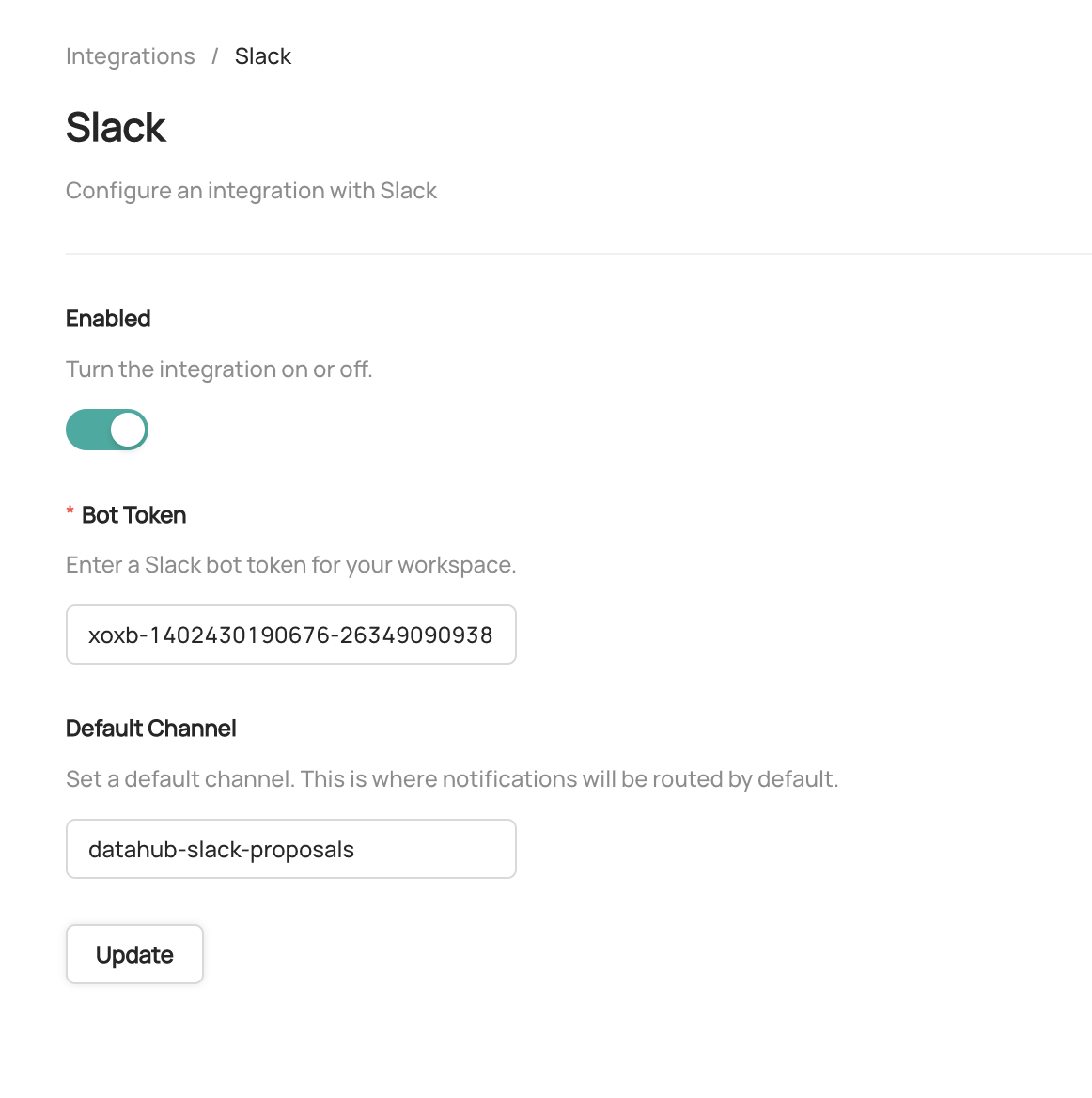

-## Configuring Notifications

-

-> In order to set up the Slack integration, the user must have the `Manage Platform Settings` privilege.

-

-To enable the integration with slack

-- Navigate to **Settings > Integrations**

-- Click **Slack**

-- Enable the Integration

-- Enter the **Bot Token** obtained in the previous steps

-- Enter a **Default Slack Channel** - this is where all notifications will be routed unless

-- Click **Update** to save your settings

-

-

-To do so, simply follow the [Slack Integration Guide](docs/managed-datahub/saas-slack-setup.md) and contact your Acryl customer success team to enable the feature!

+To do so, simply follow the [Slack Integration Guide](docs/managed-datahub/slack/saas-slack-setup.md) and contact your Acryl customer success team to enable the feature!

diff --git a/docs/managed-datahub/managed-datahub-overview.md b/docs/managed-datahub/managed-datahub-overview.md

index 087238097dd9f4..4efc96eaf17a7c 100644

--- a/docs/managed-datahub/managed-datahub-overview.md

+++ b/docs/managed-datahub/managed-datahub-overview.md

@@ -56,7 +56,8 @@ know.

| Monitor Freshness SLAs | ❌ | ✅ |

| Monitor Table Schemas | ❌ | ✅ |

| Monitor Table Volume | ❌ | ✅ |

-| Validate Table Columns | ❌ | ✅ |

+| Monitor Table Column Integrity | ❌ | ✅ |

+| Monitor Table with Custom SQL | ❌ | ✅ |

| Receive Notifications via Email & Slack | ❌ | ✅ |

| Manage Data Incidents via Slack | ❌ | ✅ |

| View Data Health Dashboard | ❌ | ✅ |

@@ -115,7 +116,7 @@ Fill out

## Additional Integrations

-- [Slack Integration](docs/managed-datahub/saas-slack-setup.md)

+- [Slack Integration](docs/managed-datahub/slack/saas-slack-setup.md)

- [Remote Ingestion Executor](docs/managed-datahub/operator-guide/setting-up-remote-ingestion-executor.md)

- [AWS Privatelink](docs/managed-datahub/integrations/aws-privatelink.md)

- [AWS Eventbridge](docs/managed-datahub/operator-guide/setting-up-events-api-on-aws-eventbridge.md)

diff --git a/docs/managed-datahub/observe/assertions.md b/docs/managed-datahub/observe/assertions.md

index b74d524dff1bd7..e63d051a0096b2 100644

--- a/docs/managed-datahub/observe/assertions.md

+++ b/docs/managed-datahub/observe/assertions.md

@@ -38,7 +38,7 @@ If you opt for a 3rd party tool, it will be your responsibility to ensure the as

## Alerts

-Beyond the ability to see the results of the assertion checks (and history of the results) both on the physical asset’s page in the DataHub UI and as the result of DataHub API calls, you can also get notified via [slack messages](/docs/managed-datahub/saas-slack-setup.md) (DMs or to a team channel) based on your [subscription](https://youtu.be/VNNZpkjHG_I?t=79) to an assertion change event. In the future, we’ll also provide the ability to subscribe directly to contracts.

+Beyond the ability to see the results of the assertion checks (and history of the results) both on the physical asset’s page in the DataHub UI and as the result of DataHub API calls, you can also get notified via [Slack messages](/docs/managed-datahub/slack/saas-slack-setup.md) (DMs or to a team channel) based on your [subscription](https://youtu.be/VNNZpkjHG_I?t=79) to an assertion change event. In the future, we’ll also provide the ability to subscribe directly to contracts.

With Acryl Observe, you can get the Assertion Change event by getting API events via [AWS EventBridge](/docs/managed-datahub/operator-guide/setting-up-events-api-on-aws-eventbridge.md) (the availability and simplicity of setup of each solution dependent on your current Acryl setup – chat with your Acryl representative to learn more).

diff --git a/docs/managed-datahub/saas-slack-setup.md b/docs/managed-datahub/saas-slack-setup.md

deleted file mode 100644

index 1b98f3a30773a0..00000000000000

--- a/docs/managed-datahub/saas-slack-setup.md

+++ /dev/null

@@ -1,113 +0,0 @@

-import FeatureAvailability from '@site/src/components/FeatureAvailability';

-

-# Configure Slack For Notifications

-

-

-

-## Install the DataHub Slack App into your Slack workspace

-

-The following steps should be performed by a Slack Workspace Admin.

-- Navigate to https://api.slack.com/apps/

-- Click Create New App

-- Use “From an app manifest” option

-- Select your workspace

-- Paste this Manifest in YAML. Suggest changing name and `display_name` to be `DataHub App YOUR_TEAM_NAME` but not required. This name will show up in your slack workspace

-```yml

-display_information:

- name: DataHub App

- description: An app to integrate DataHub with Slack

- background_color: "#000000"

-features:

- bot_user:

- display_name: DataHub App

- always_online: false

-oauth_config:

- scopes:

- bot:

- - channels:read

- - chat:write

- - commands

- - groups:read

- - im:read

- - mpim:read

- - team:read

- - users:read

- - users:read.email

-settings:

- org_deploy_enabled: false

- socket_mode_enabled: false

- token_rotation_enabled: false

-```

-

-Confirm you see the Basic Information Tab

-

-

-

-- Click **Install to Workspace**

-- It will show you permissions the Slack App is asking for, what they mean and a default channel in which you want to add the slack app

- - Note that the Slack App will only be able to post in channels that the app has been added to. This is made clear by slack’s Authentication screen also.

-- Select the channel you'd like notifications to go to and click **Allow**

-- Go to DataHub App page

- - You can find your workspace's list of apps at https://api.slack.com/apps/

-

-## Generating a Bot Token

-

-- Go to **OAuth & Permissions** Tab

-

-

-

-Here you'll find a “Bot User OAuth Token” which DataHub will need to communicate with your slack through the bot.

-In the next steps, we'll show you how to configure the Slack Integration inside of Acryl DataHub.

-

-## Configuring Notifications

-

-> In order to set up the Slack integration, the user must have the `Manage Platform Settings` privilege.

-

-To enable the integration with slack

-- Navigate to **Settings > Integrations**

-- Click **Slack**

-- Enable the Integration

-- Enter the **Bot Token** obtained in the previous steps

-- Enter a **Default Slack Channel** - this is where all notifications will be routed unless

-- Click **Update** to save your settings

-

- -

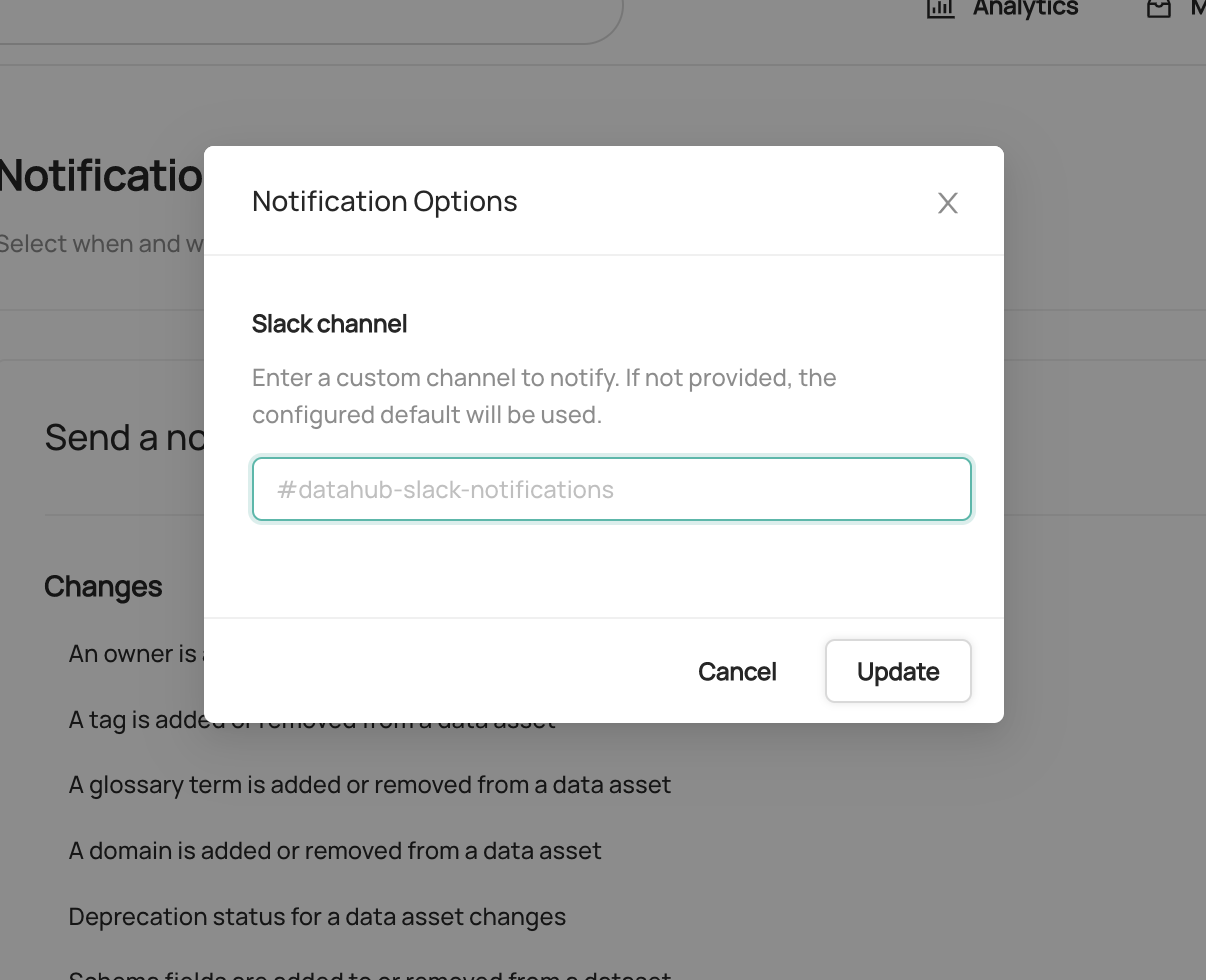

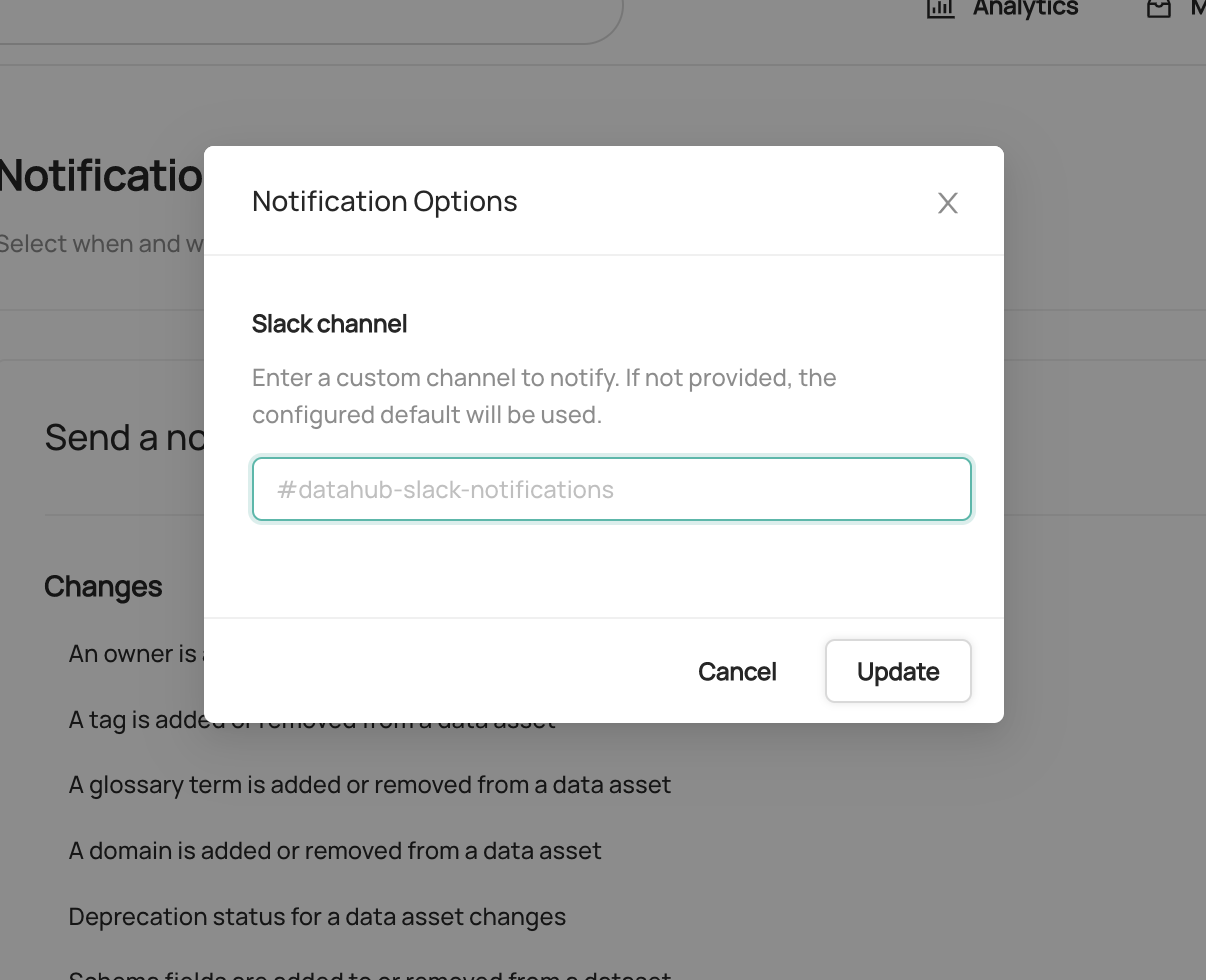

-To enable and disable specific types of notifications, or configure custom routing for notifications, start by navigating to **Settings > Notifications**.

-To enable or disable a specific notification type in Slack, simply click the check mark. By default, all notification types are enabled.

-To customize the channel where notifications are send, click the button to the right of the check box.

-

-

-

-To enable and disable specific types of notifications, or configure custom routing for notifications, start by navigating to **Settings > Notifications**.

-To enable or disable a specific notification type in Slack, simply click the check mark. By default, all notification types are enabled.

-To customize the channel where notifications are send, click the button to the right of the check box.

-

- -

-If provided, a custom channel will be used to route notifications of the given type. If not provided, the default channel will be used.

-That's it! You should begin to receive notifications on Slack. Note that it may take up to 1 minute for notification settings to take effect after saving.

-

-## Sending Notifications

-

-For now we support sending notifications to

-- Slack Channel ID (e.g. `C029A3M079U`)

-- Slack Channel Name (e.g. `#troubleshoot`)

-- Specific Users (aka Direct Messages or DMs) via user ID

-

-By default, the Slack app will be able to send notifications to public channels. If you want to send notifications to private channels or DMs, you will need to invite the Slack app to those channels.

-

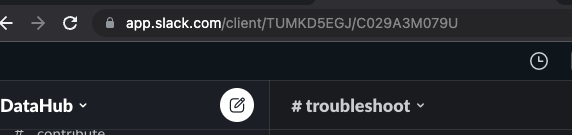

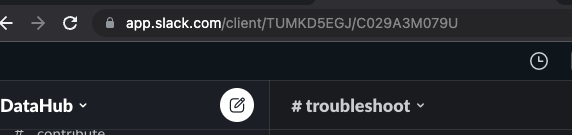

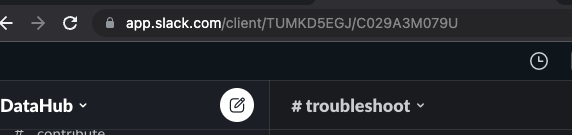

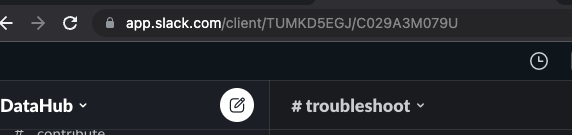

-## How to find Team ID and Channel ID in Slack

-

-- Go to the Slack channel for which you want to get channel ID

-- Check the URL e.g. for the troubleshoot channel in OSS DataHub slack

-

-

-

-- Notice `TUMKD5EGJ/C029A3M079U` in the URL

- - Team ID = `TUMKD5EGJ` from above

- - Channel ID = `C029A3M079U` from above

-

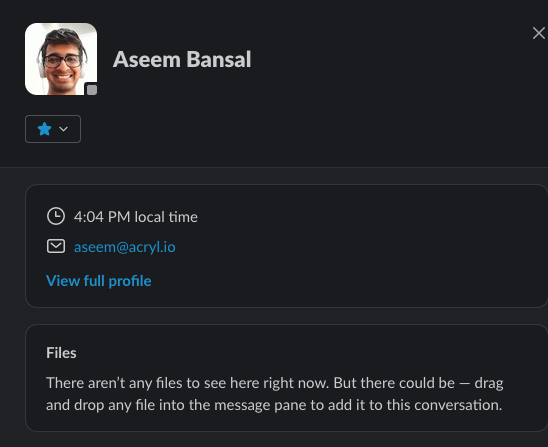

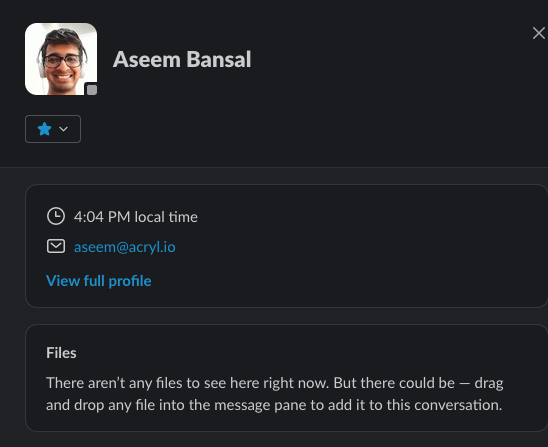

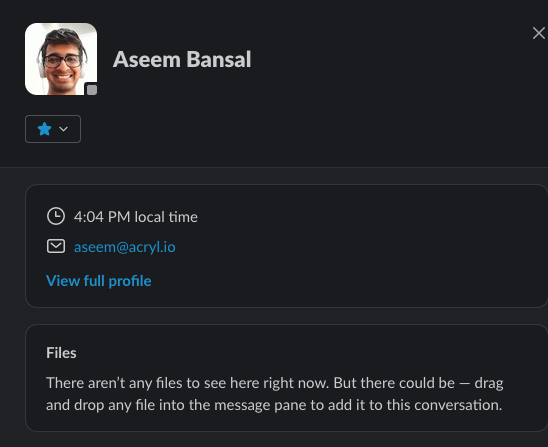

-## How to find User ID in Slack

-

-- Go to user DM

-- Click on their profile picture

-- Click on View Full Profile

-- Click on “More”

-- Click on “Copy member ID”

-

-

\ No newline at end of file

diff --git a/docs/managed-datahub/slack/saas-slack-app.md b/docs/managed-datahub/slack/saas-slack-app.md

new file mode 100644

index 00000000000000..5e16fed901e723

--- /dev/null

+++ b/docs/managed-datahub/slack/saas-slack-app.md

@@ -0,0 +1,59 @@

+import FeatureAvailability from '@site/src/components/FeatureAvailability';

+

+# Slack App Features

+

+

+

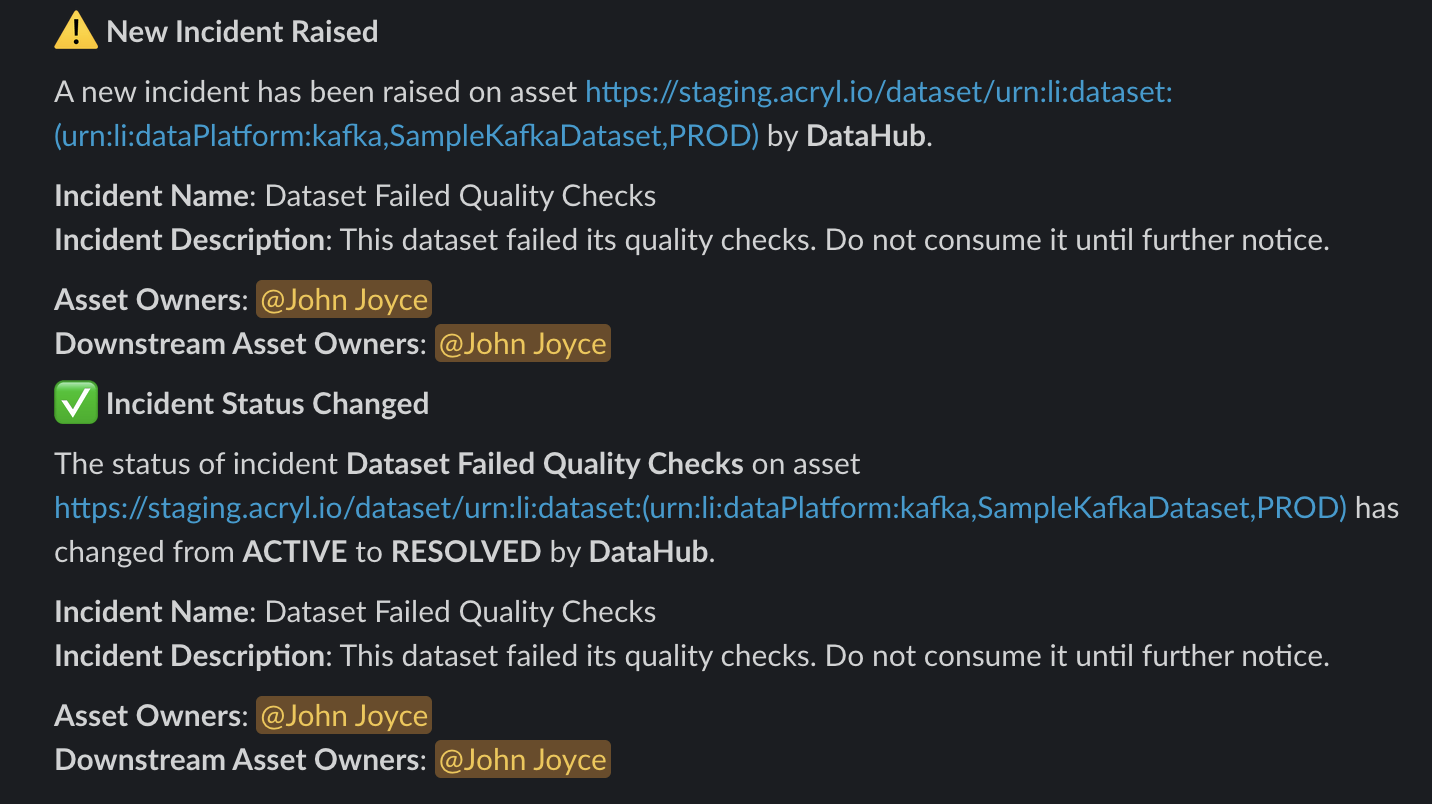

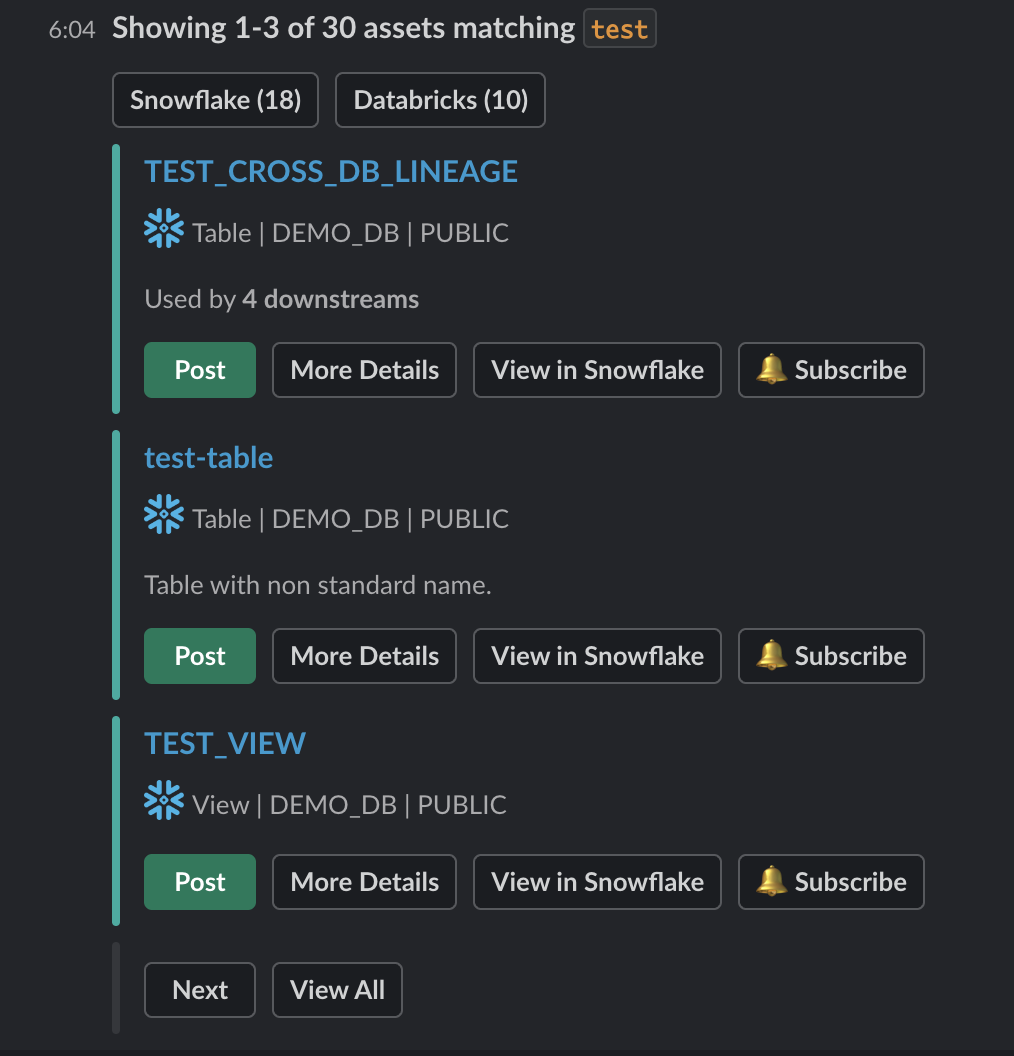

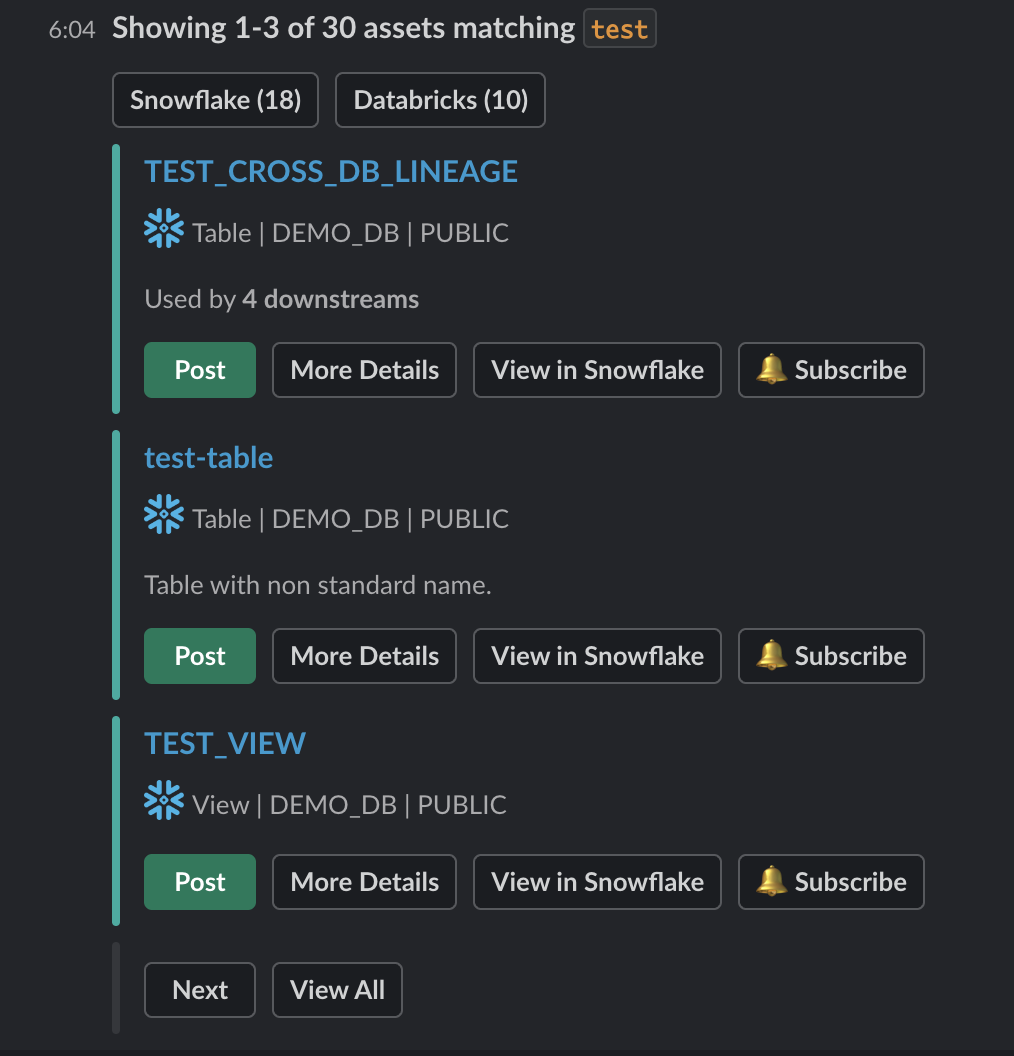

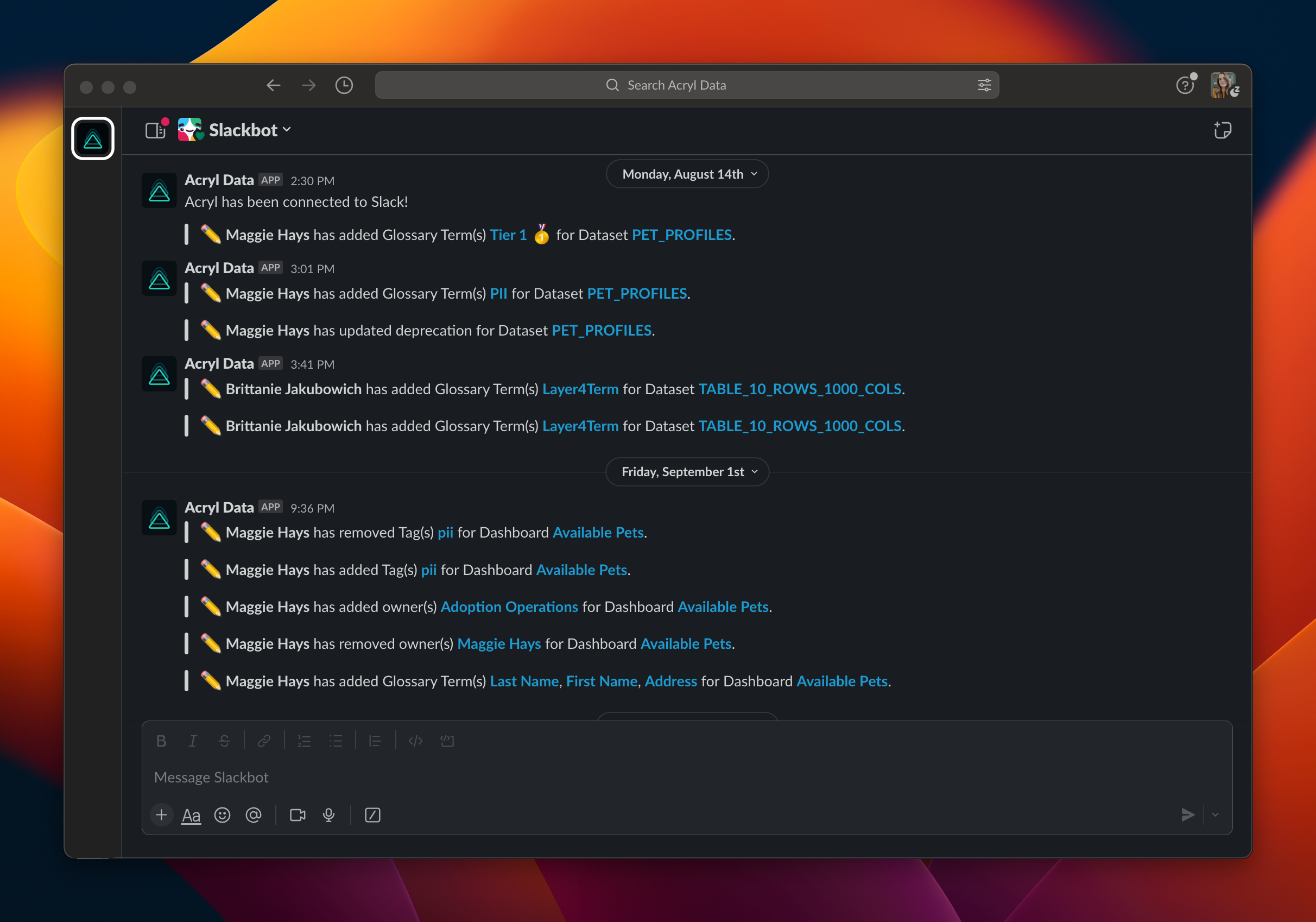

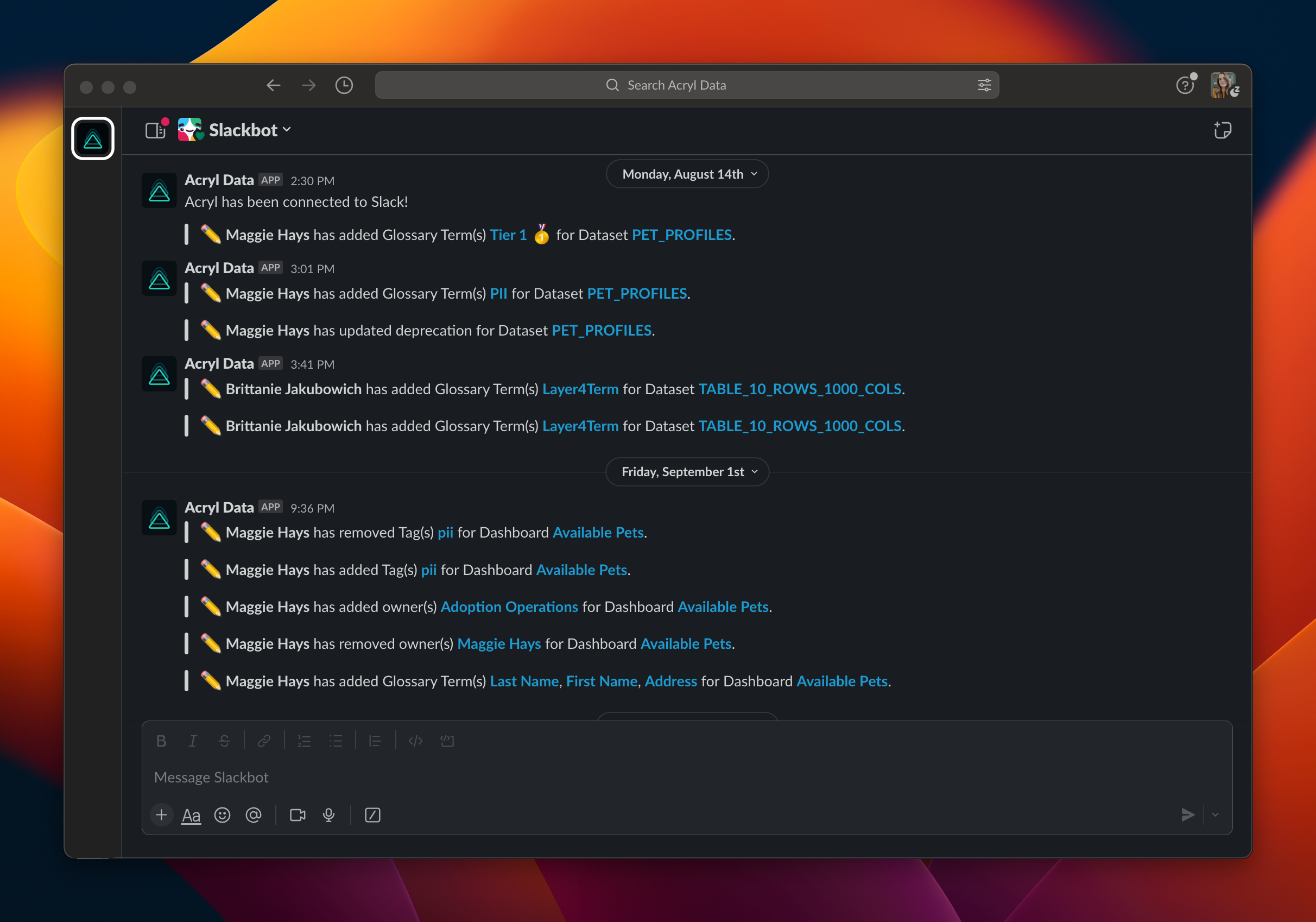

+## Overview

+The DataHub Slack App brings several of DataHub's key capabilities directly into your Slack experience. These include:

+1. Searching for Data Assets

+2. Subscribing to notifications for Data Assets

+3. Managing Data Incidents

+

+*Our goal with the Slack app is to make data discovery easier and more accessible for you.*

+

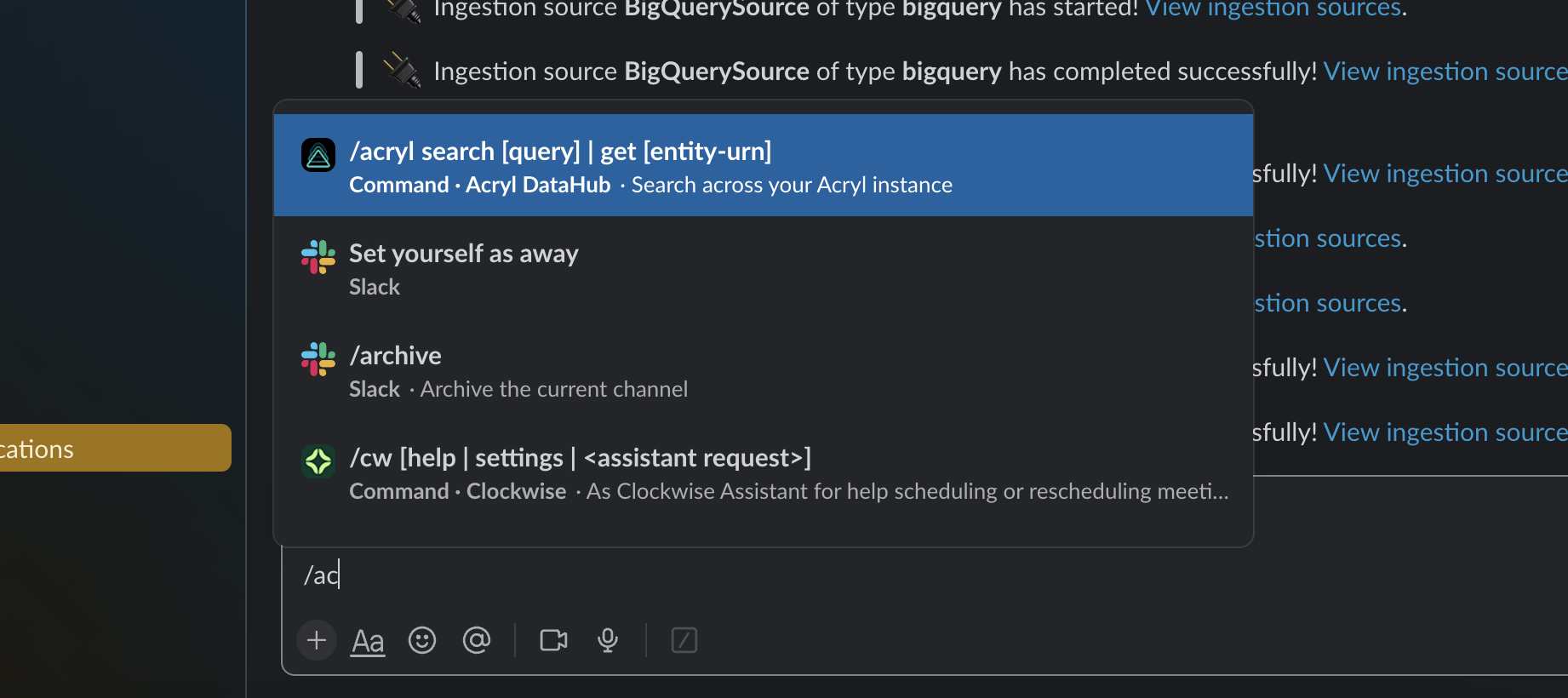

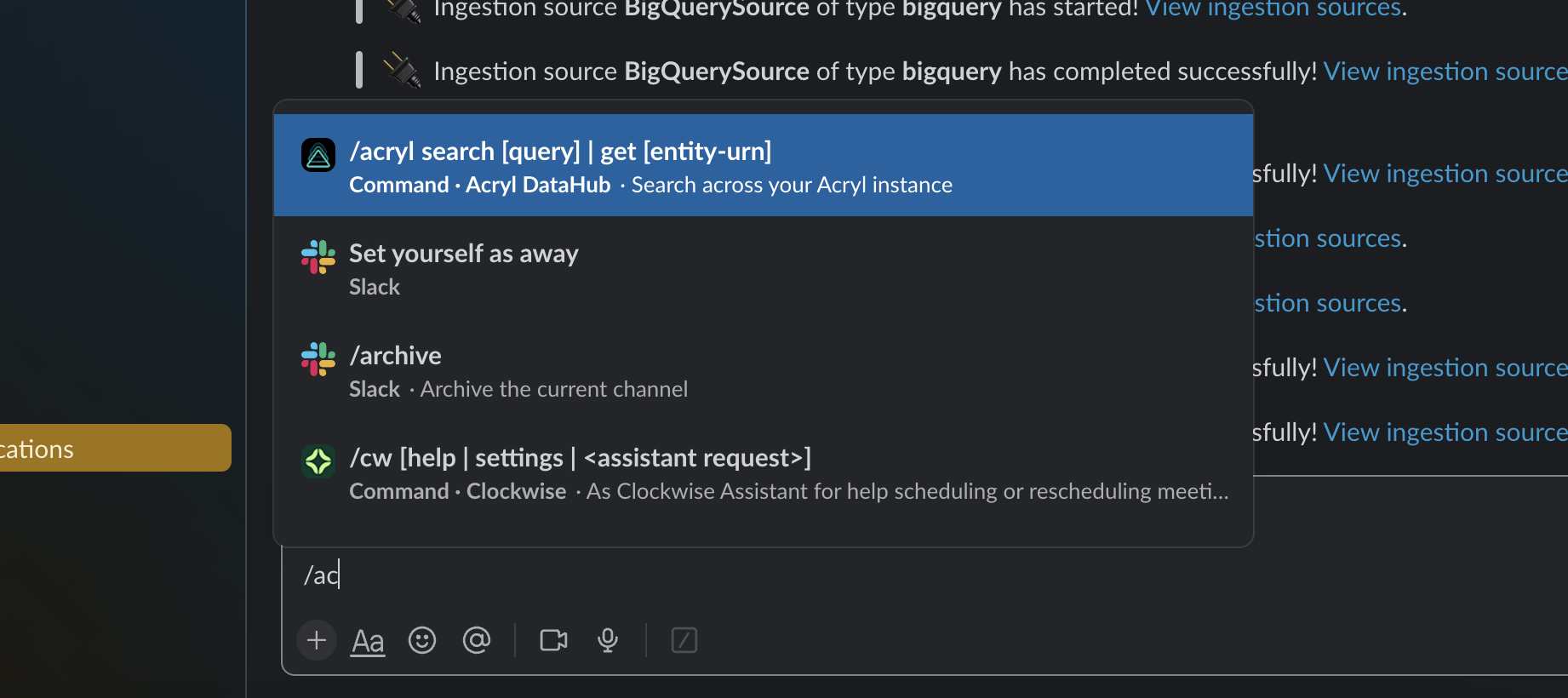

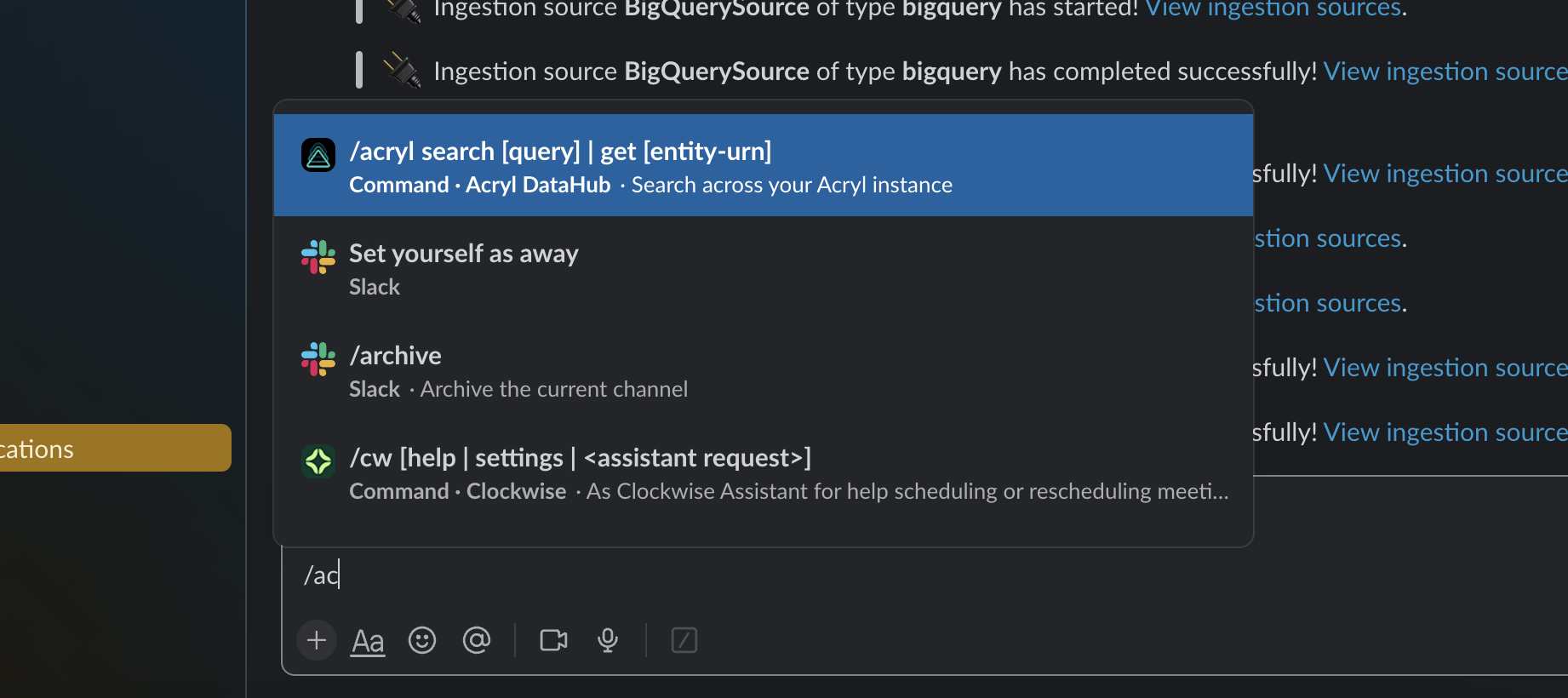

+## Slack App Commands

+The command-based capabilities on the Slack App revolve around search.

+

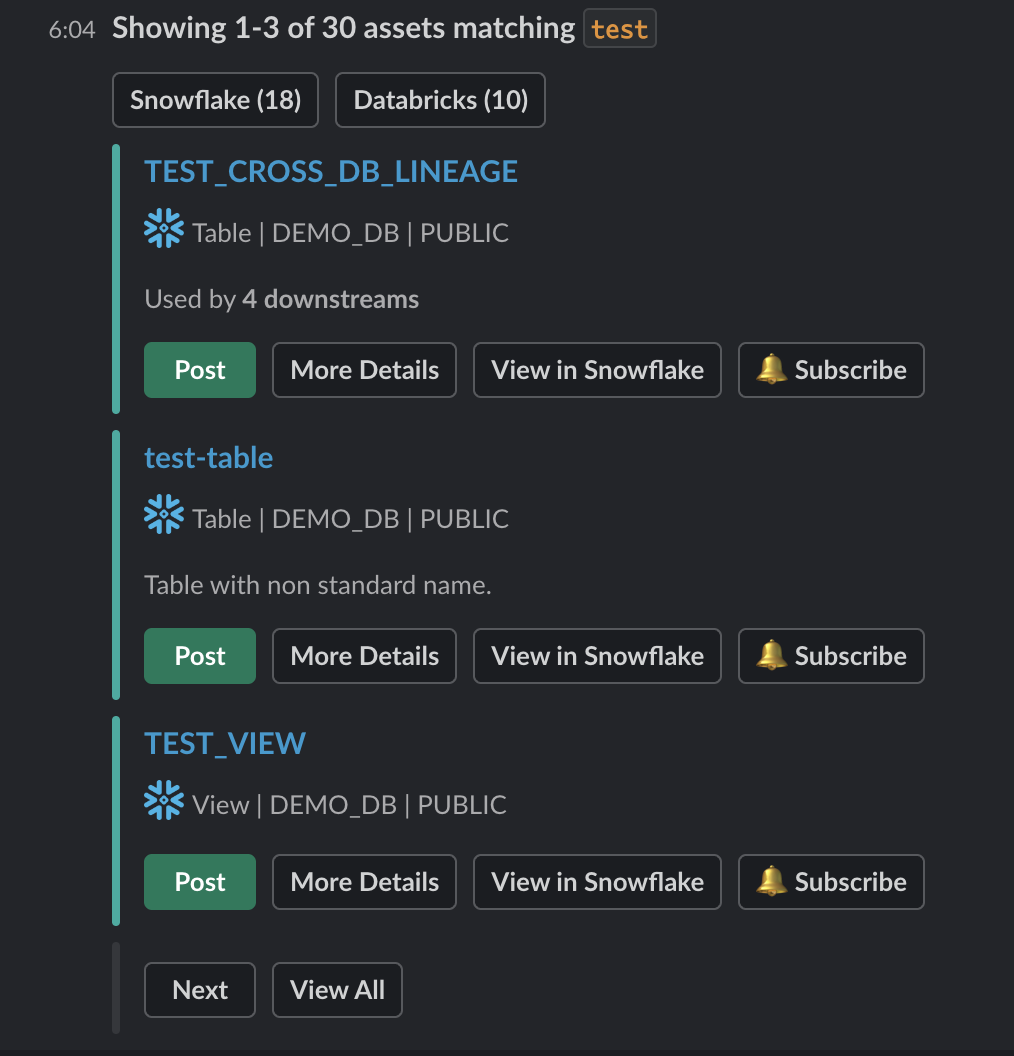

+### Querying for Assets

+You can trigger a search by simplying typing `/acryl my favorite table`.

+

-

-If provided, a custom channel will be used to route notifications of the given type. If not provided, the default channel will be used.

-That's it! You should begin to receive notifications on Slack. Note that it may take up to 1 minute for notification settings to take effect after saving.

-

-## Sending Notifications

-

-For now we support sending notifications to

-- Slack Channel ID (e.g. `C029A3M079U`)

-- Slack Channel Name (e.g. `#troubleshoot`)

-- Specific Users (aka Direct Messages or DMs) via user ID

-

-By default, the Slack app will be able to send notifications to public channels. If you want to send notifications to private channels or DMs, you will need to invite the Slack app to those channels.

-

-## How to find Team ID and Channel ID in Slack

-

-- Go to the Slack channel for which you want to get channel ID

-- Check the URL e.g. for the troubleshoot channel in OSS DataHub slack

-

-

-

-- Notice `TUMKD5EGJ/C029A3M079U` in the URL

- - Team ID = `TUMKD5EGJ` from above

- - Channel ID = `C029A3M079U` from above

-

-## How to find User ID in Slack

-

-- Go to user DM

-- Click on their profile picture

-- Click on View Full Profile

-- Click on “More”

-- Click on “Copy member ID”

-

-

\ No newline at end of file

diff --git a/docs/managed-datahub/slack/saas-slack-app.md b/docs/managed-datahub/slack/saas-slack-app.md

new file mode 100644

index 00000000000000..5e16fed901e723

--- /dev/null

+++ b/docs/managed-datahub/slack/saas-slack-app.md

@@ -0,0 +1,59 @@

+import FeatureAvailability from '@site/src/components/FeatureAvailability';

+

+# Slack App Features

+

+

+

+## Overview

+The DataHub Slack App brings several of DataHub's key capabilities directly into your Slack experience. These include:

+1. Searching for Data Assets

+2. Subscribing to notifications for Data Assets

+3. Managing Data Incidents

+

+*Our goal with the Slack app is to make data discovery easier and more accessible for you.*

+

+## Slack App Commands

+The command-based capabilities on the Slack App revolve around search.

+

+### Querying for Assets

+You can trigger a search by simplying typing `/acryl my favorite table`.

+

+  +

+

+

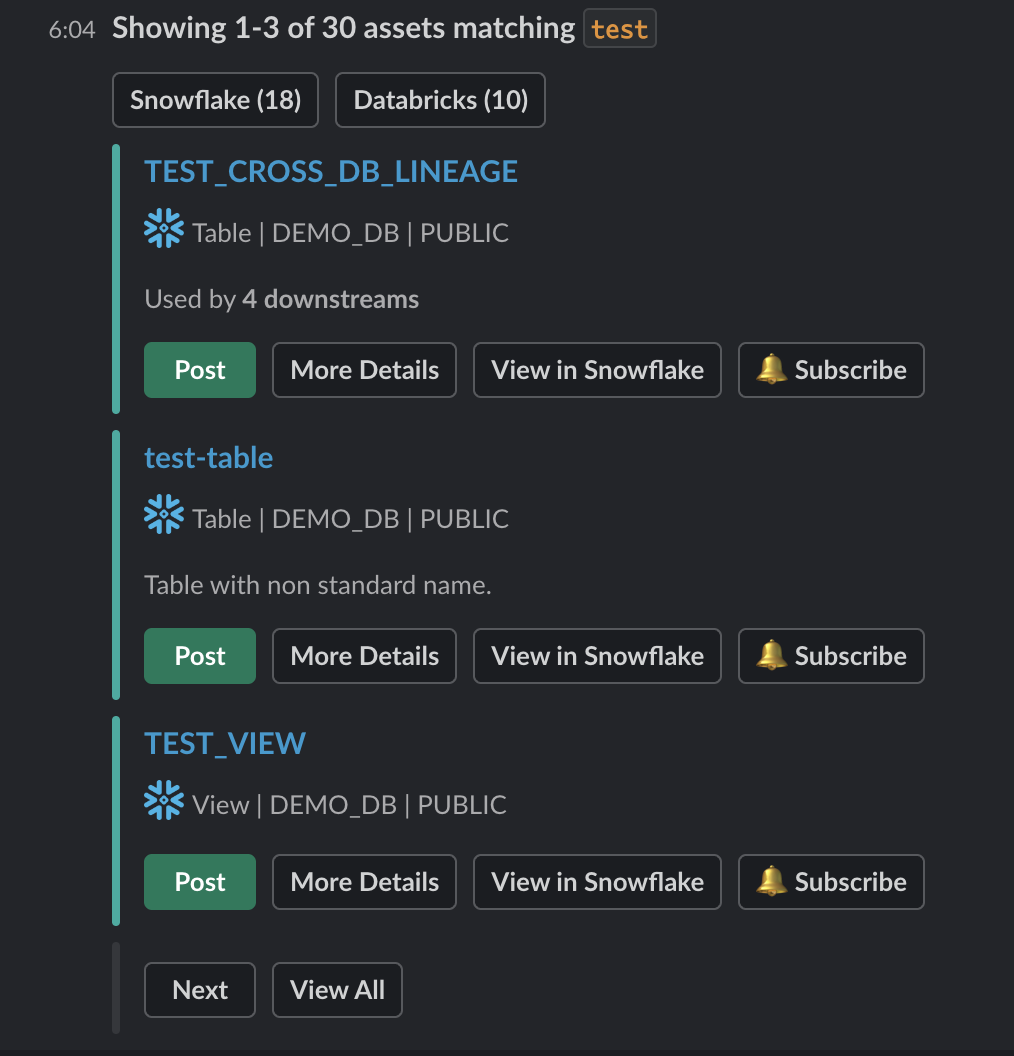

+Right within Slack, you'll be presented with results matching your query, and a handful of quick-actions for your convenience.

+

+  +

+

+

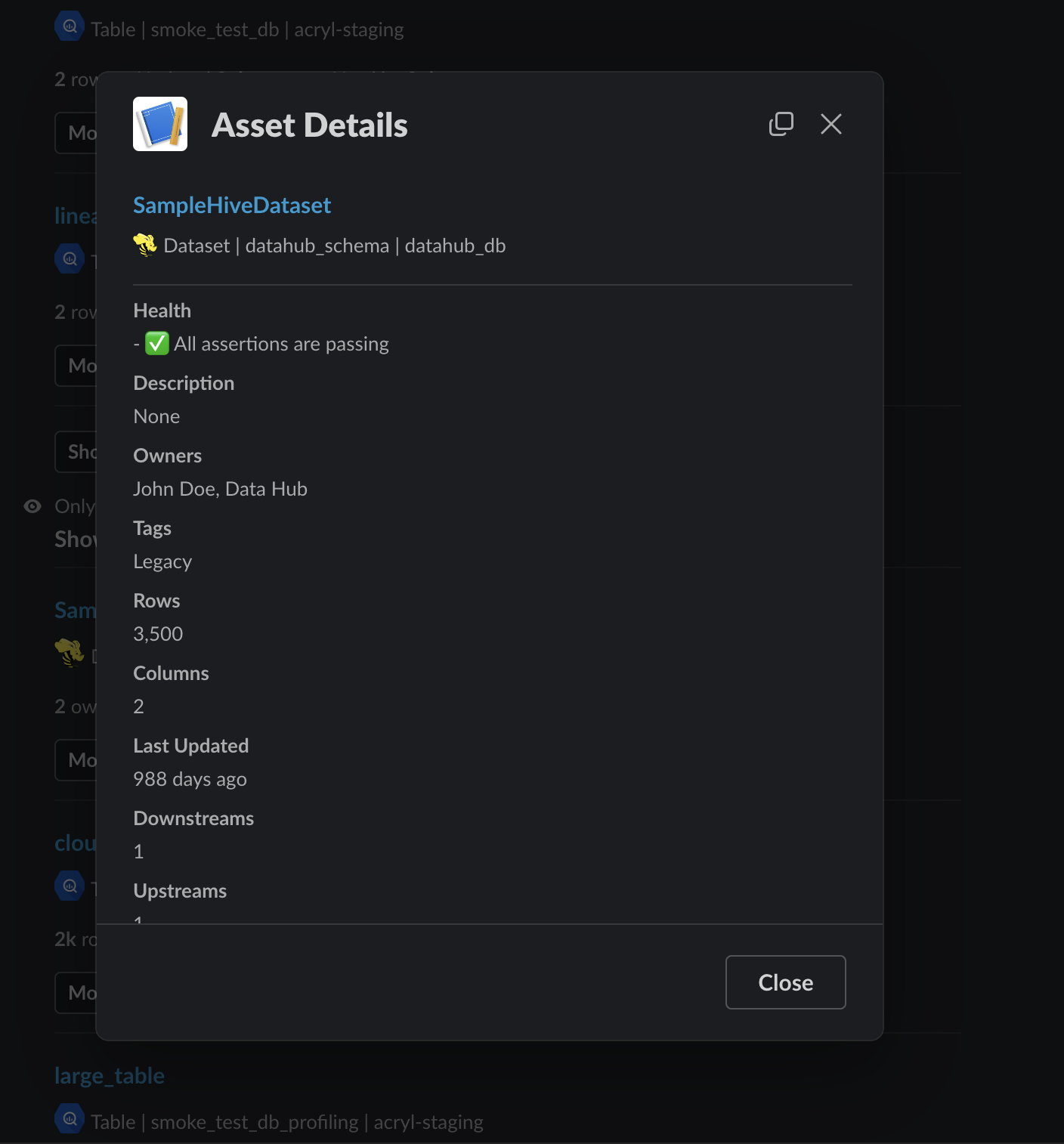

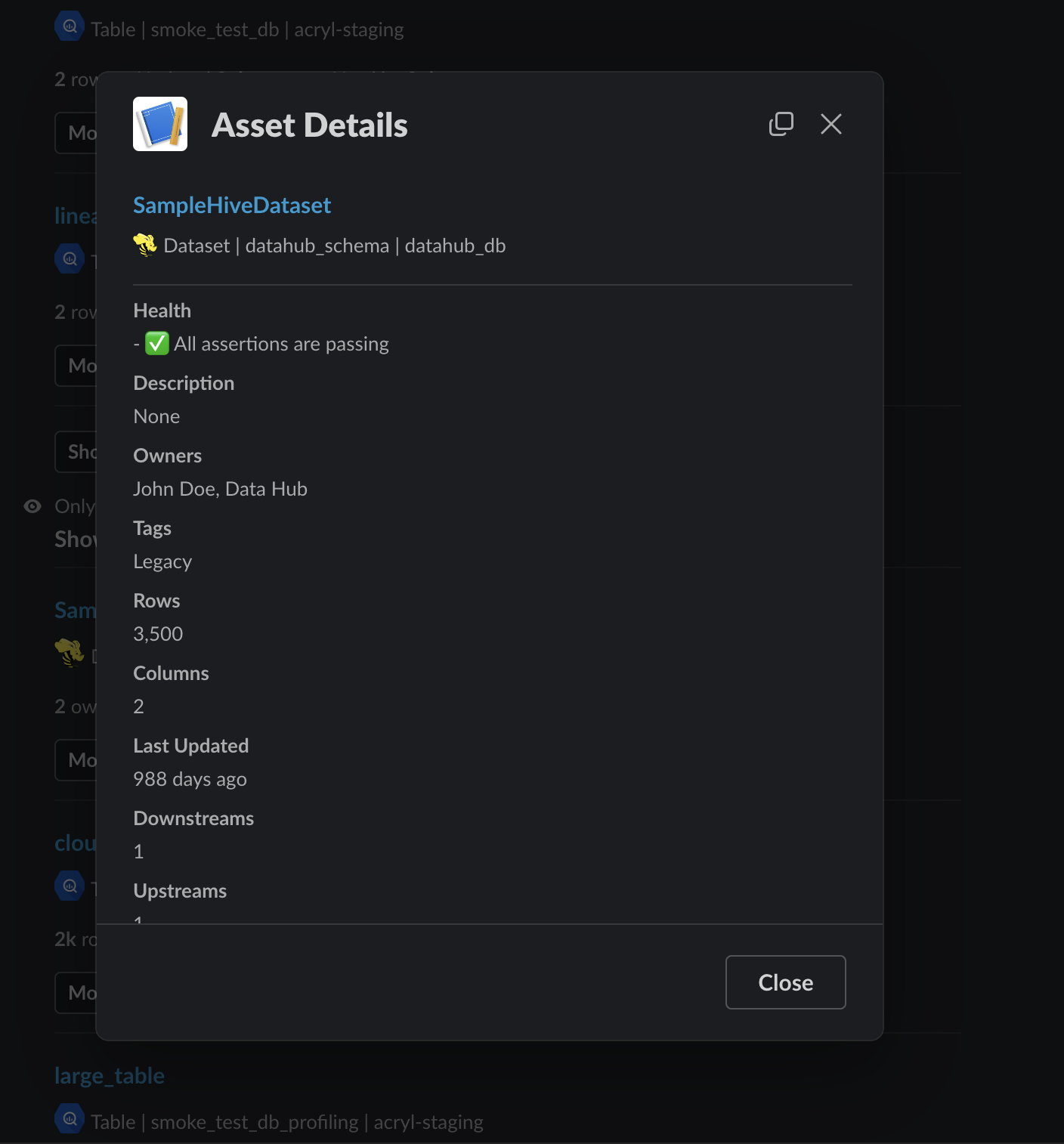

+By selecting **'More Details'** you can preview in-depth information about an asset without leaving Slack.

+

+  +

+

+

+### Subscribing to be notified about an Asset

+You can hit the **'Subscribe'** button on a specific search result to subscribe to it directly from within Slack.

+

+  +

+

+

+

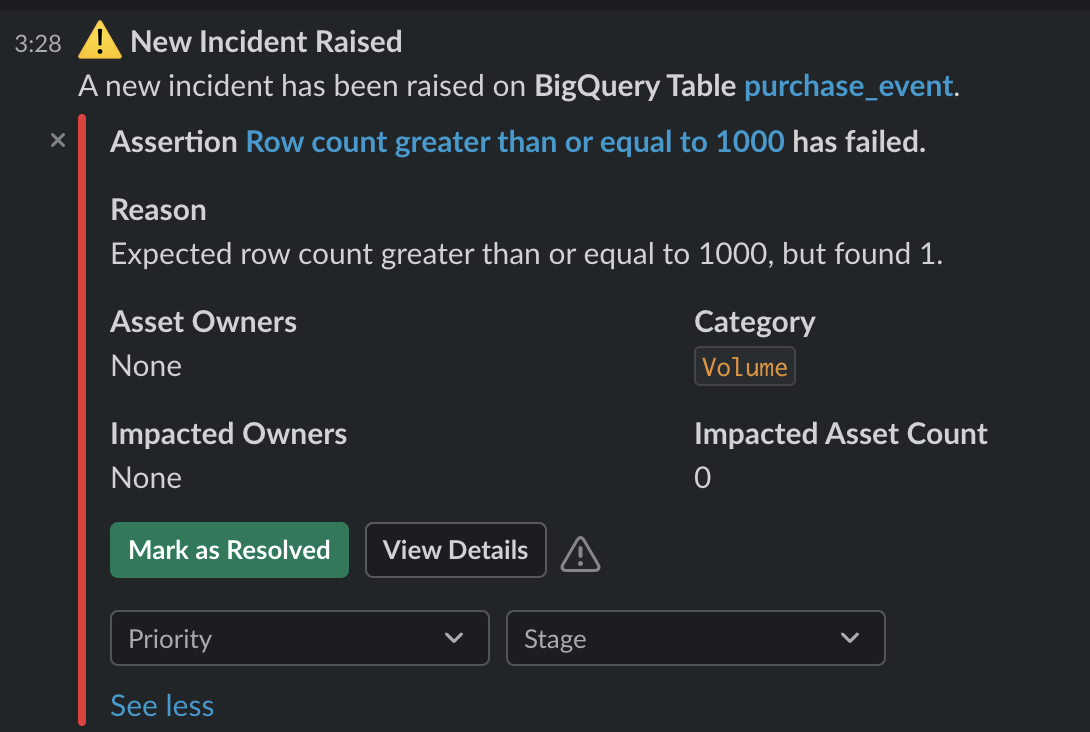

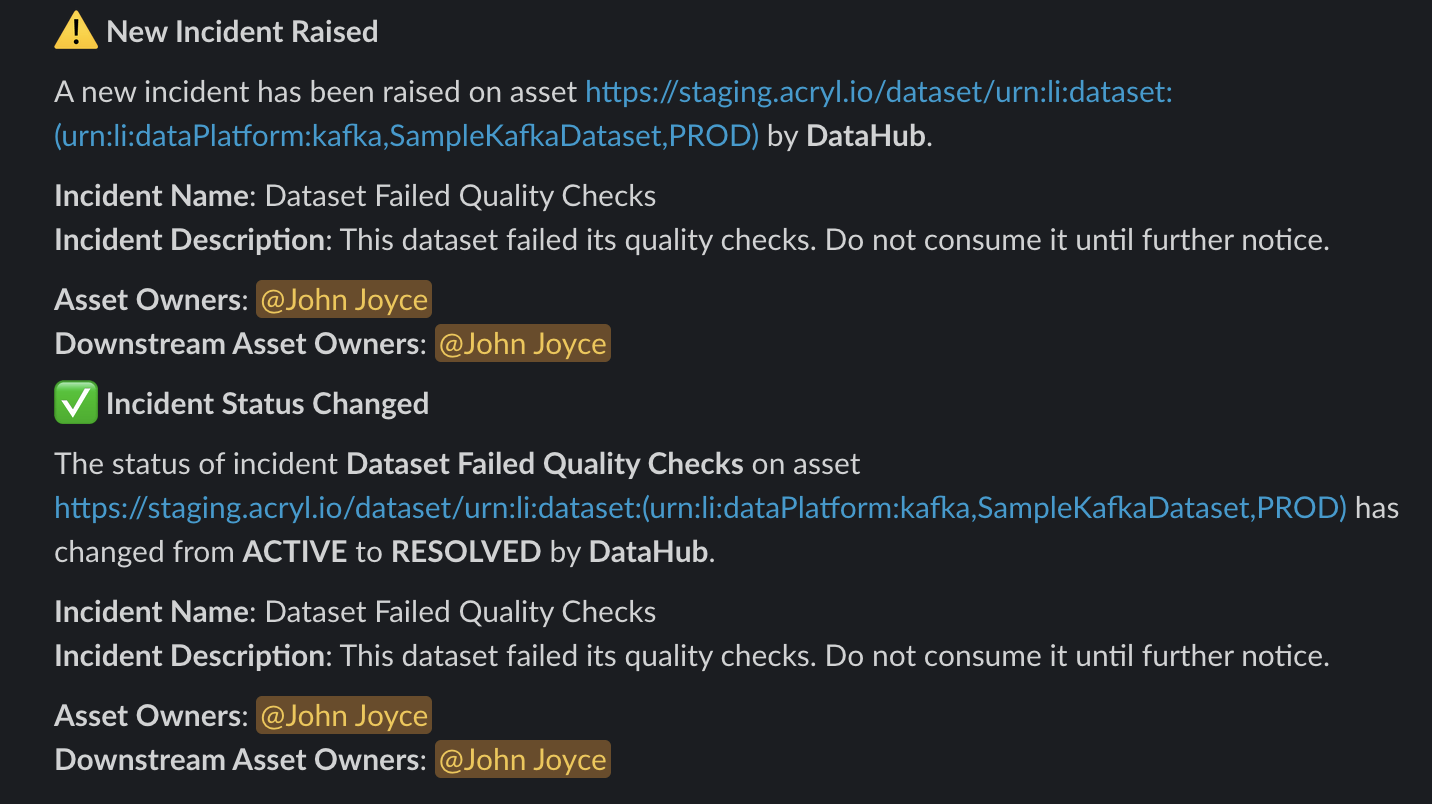

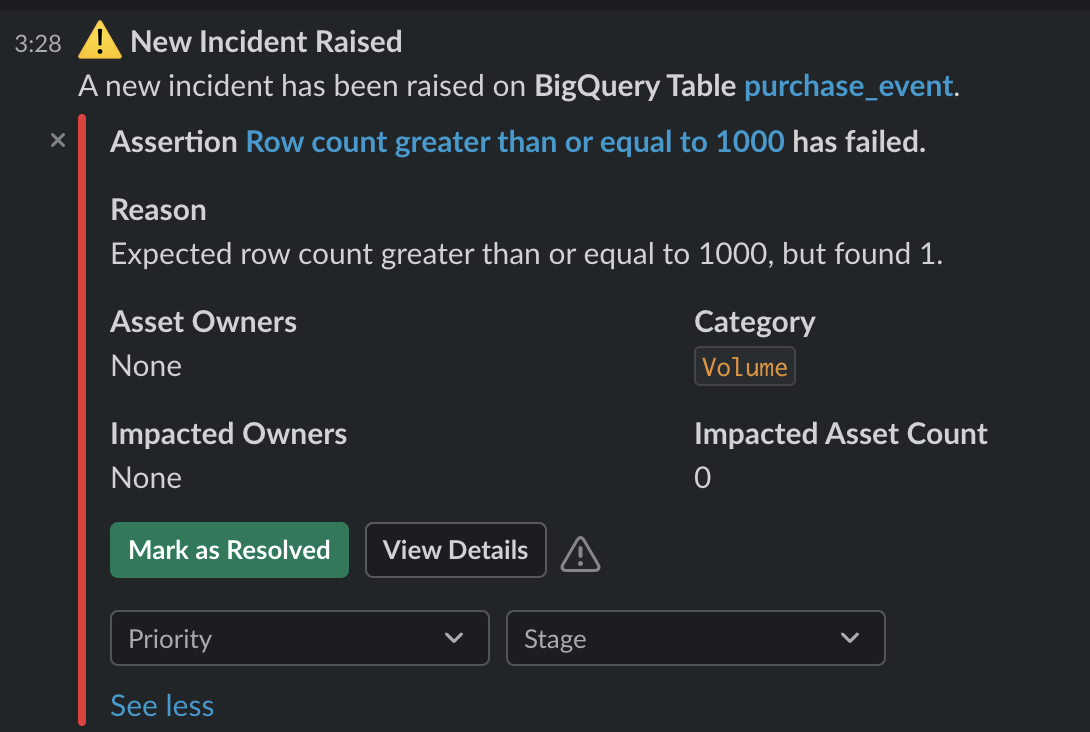

+## Manage Data Incidents

+Some of the most commonly used features within our Slack app are the Incidents management capabilities.

+The DataHub UI offers a rich set of [Incident tracking and management](https://datahubproject.io/docs/incidents/incidents/) features.

+When a Slack member or channel receives notifications about an Incident, many of these features are made accessible right within the Slack app.

+

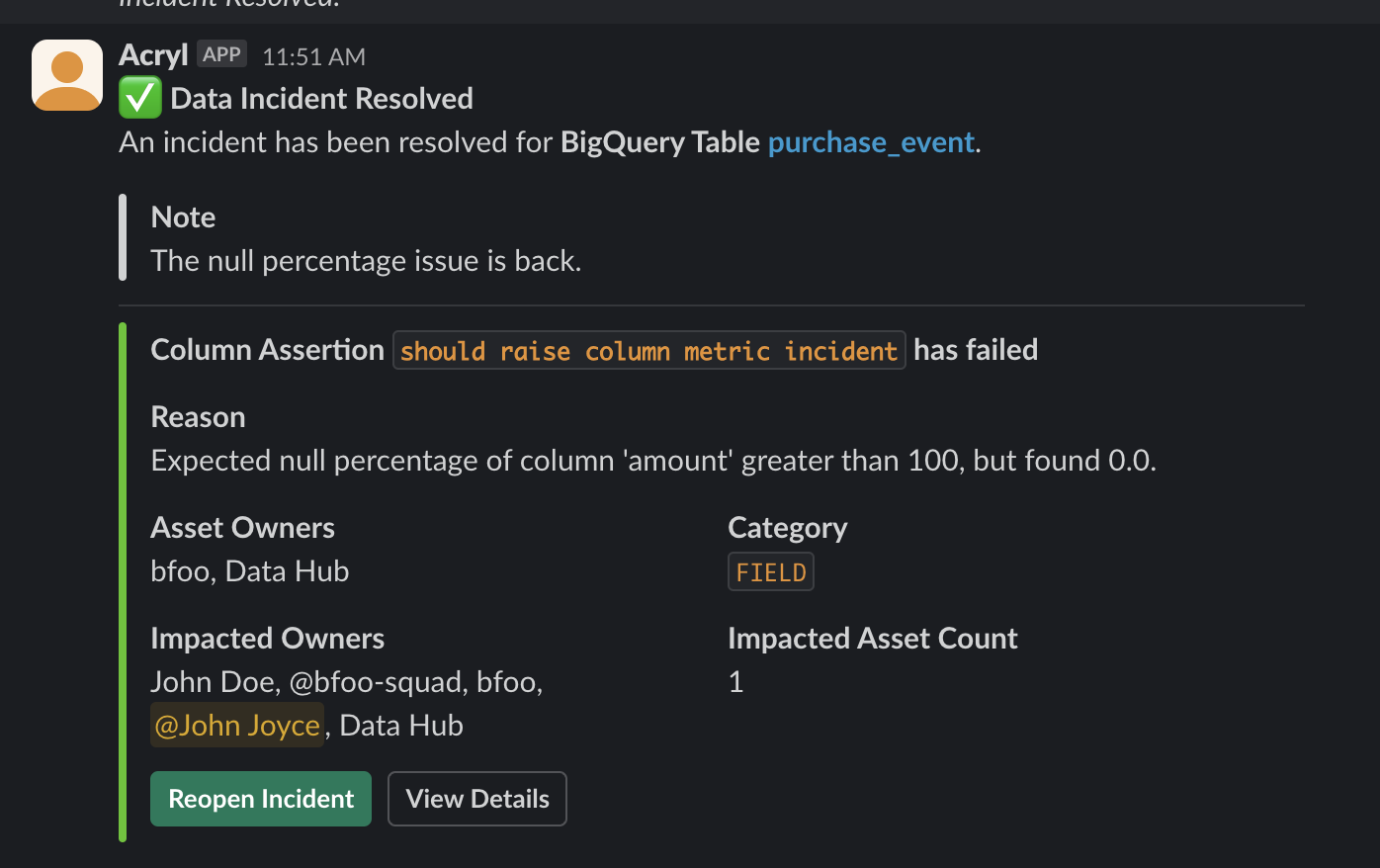

+When an incident is raised, you will recieve rich context about the incident in the Slack message itself. You will also be able to `Mark as Resolved`, update the `Priorty`, set a triage `Stage` and `View Details` - directly from the Slack message.

+

+  +

+

+

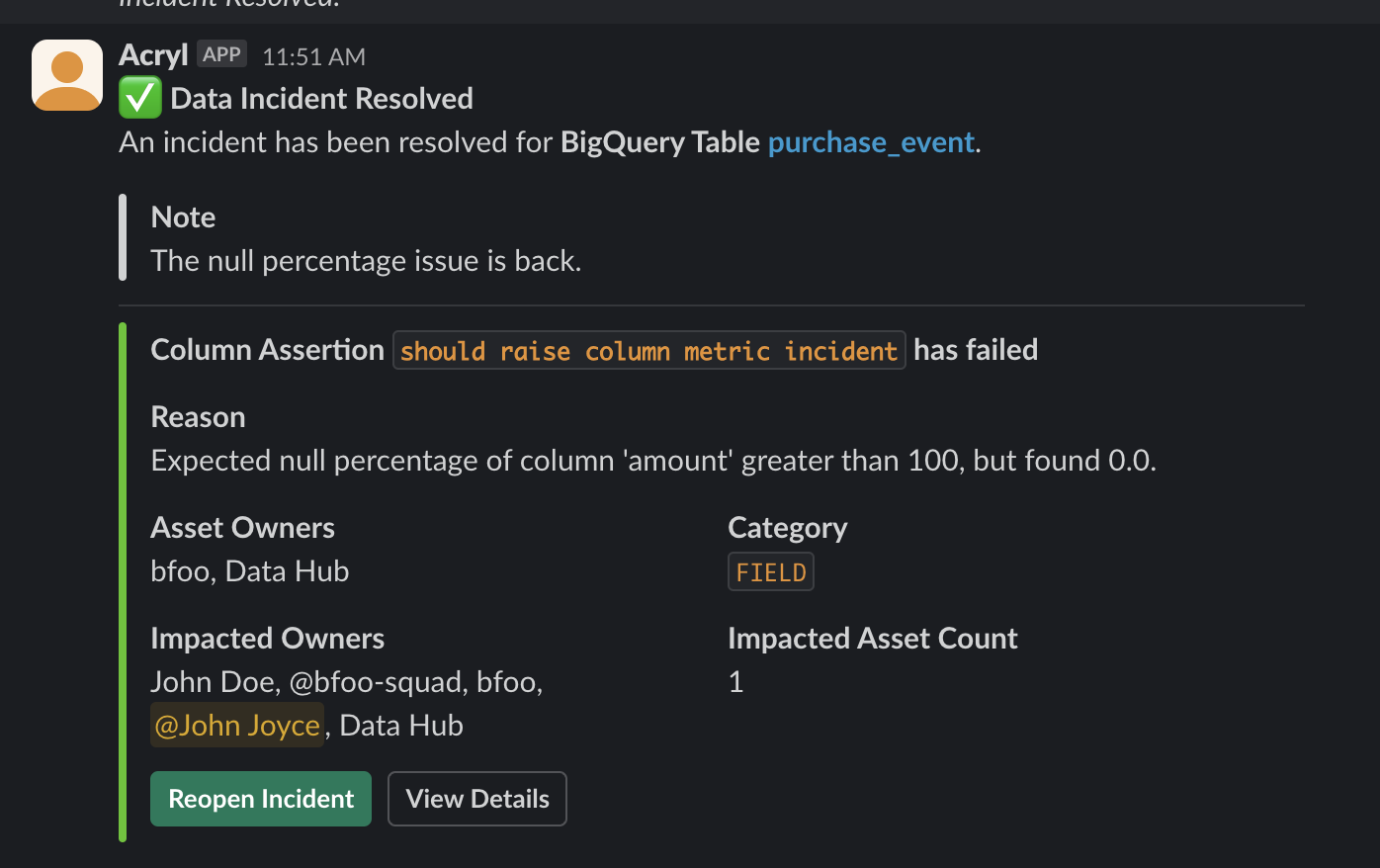

+If you choose to `Mark as Resolved` the message will update in-place, and you will be presented with the ability to `Reopen Incident` should you choose.

+

+  +

+

+

+

+## Coming Soon

+We're constantly working on rolling out new features for the Slack app, stay tuned!

+

diff --git a/docs/managed-datahub/slack/saas-slack-setup.md b/docs/managed-datahub/slack/saas-slack-setup.md

new file mode 100644

index 00000000000000..6db6a77c3a1f39

--- /dev/null

+++ b/docs/managed-datahub/slack/saas-slack-setup.md

@@ -0,0 +1,176 @@

+import FeatureAvailability from '@site/src/components/FeatureAvailability';

+

+# Configure Slack For Notifications

+

+

+

+## Install the DataHub Slack App into your Slack workspace

+

+

+### Video Walkthrough

+

+

+### Step-by-step guide

+The following steps should be performed by a Slack Workspace Admin.

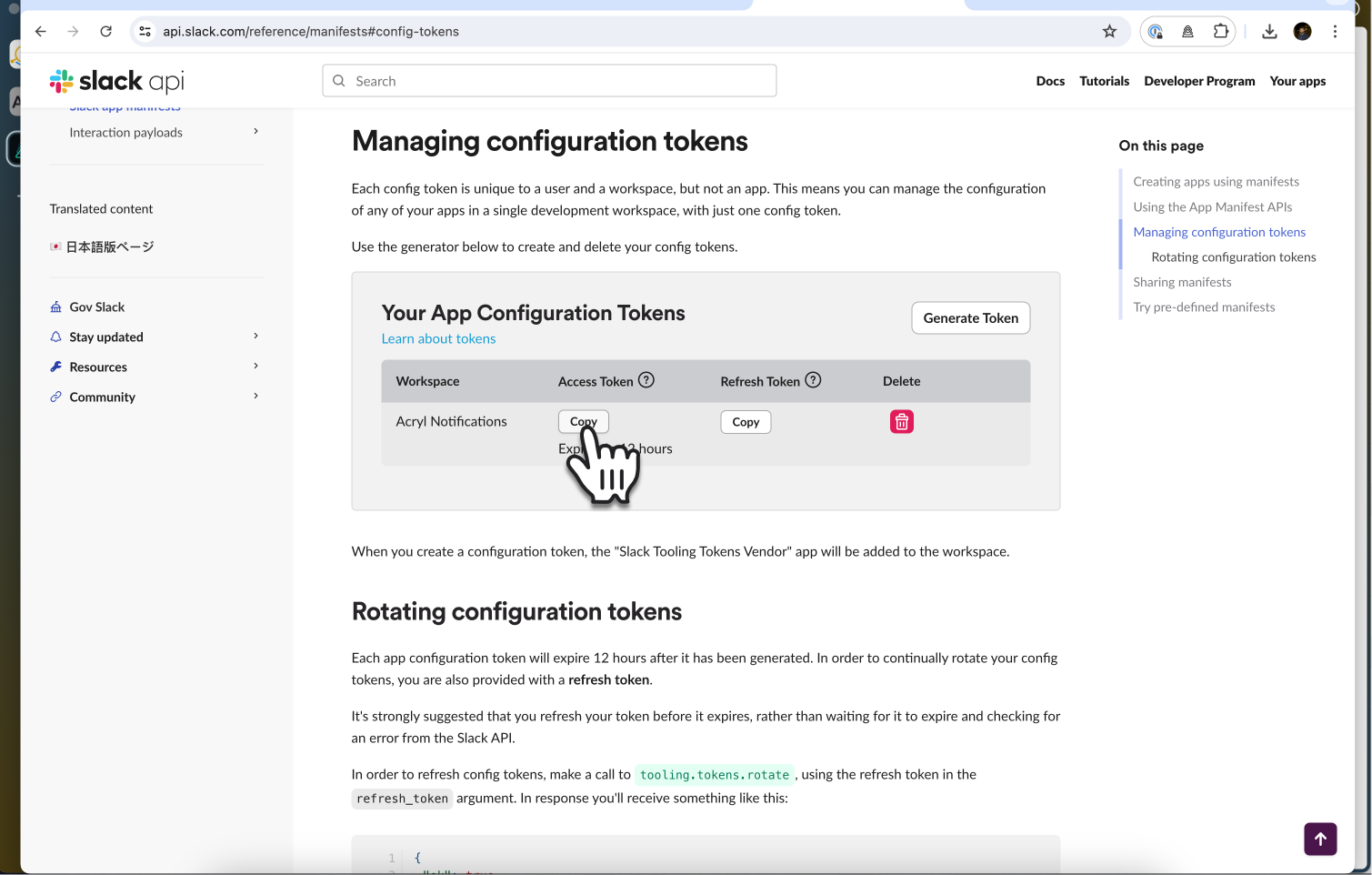

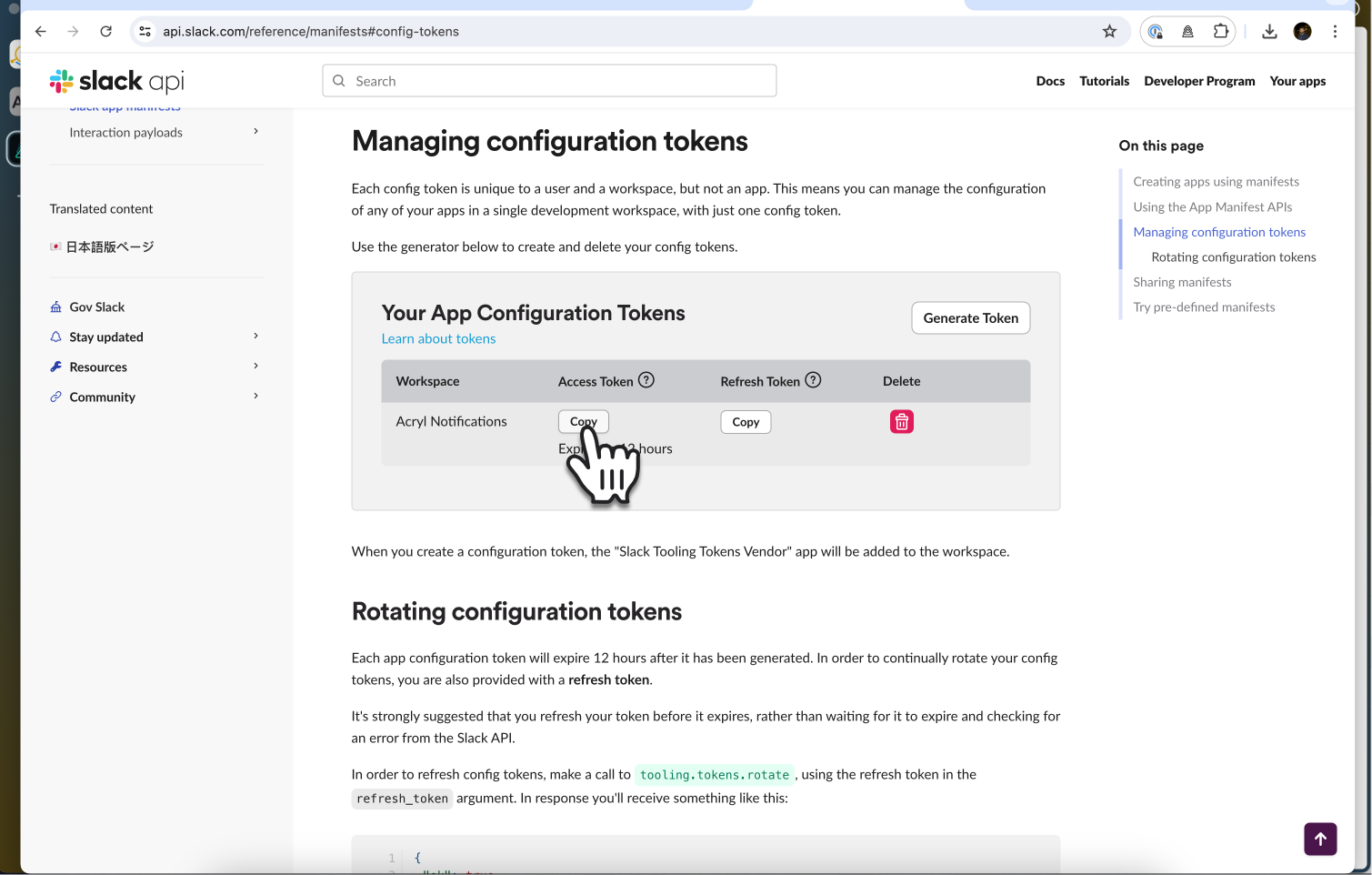

+1. Navigate to [https://api.slack.com/reference/manifests#config-tokens](https://api.slack.com/reference/manifests#config-tokens)

+2. Under **Managing configuration tokens**, select **'Generate Token'**

+

+  +

+

+3. Select your workspace, then hit **'Generate'**

+

+  +

+

+4. Now you will see two tokens available for you to copy, an *Access Token* and a *Refresh Token*

+

+  +

+

+5. Navigate back to your DataHub [Slack Integration setup page](https://longtailcompanions.acryl.io/settings/integrations/slack), and paste the tokens into their respective boxes, and click **'Connect'**.

+

+  +

+

+6. You will be automatically re-directed to Slack to confirm DataHub Slack App's permissions and complete the installation process:

+

+  +

+

+7. Congrats 🎉 Slack is set up! Now try it out by going to the **Platform Notifications** page

+

+  +

+

+8. Enter your channel in, and click **'Send a test notification'**

+

+  +

+

+

+Now proceed to the [Subscriptions and Notifications page](https://datahubproject.io/docs/managed-datahub/subscription-and-notification) to see how you can subscribe to be notified about events on the platform, or visit the [Slack App page](saas-slack-app.md) to see how you can use DataHub's powerful capabilities directly within Slack.

+

+

+

+## Sending Notifications

+

+For now, we support sending notifications to

+- Slack Channel Name (e.g. `#troubleshoot`)

+- Slack Channel ID (e.g. `C029A3M079U`)

+- Specific Users (aka Direct Messages or DMs) via user ID

+

+By default, the Slack app will be able to send notifications to public channels. If you want to send notifications to private channels or DMs, you will need to invite the Slack app to those channels.

+

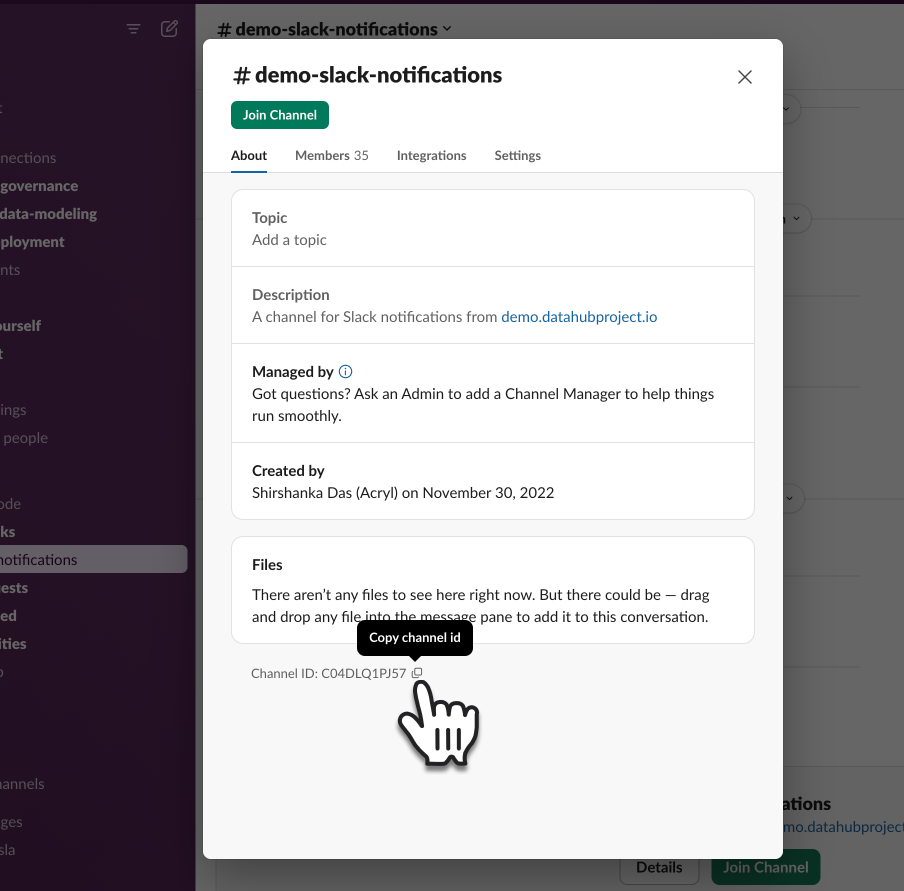

+## How to find Team ID and Channel ID in Slack

+:::note

+We recommend just using the Slack channel name for simplicity (e.g. `#troubleshoot`).

+:::

+

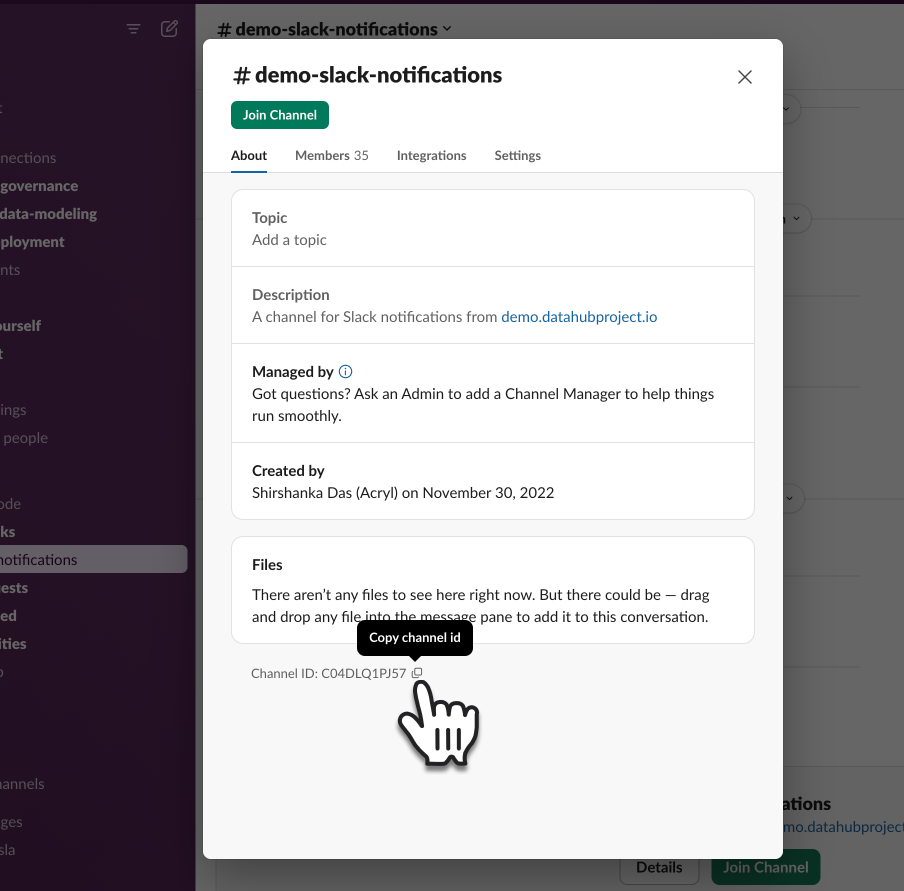

+**Via Slack App:**

+1. Go to the Slack channel for which you want to get a channel ID

+2. Click the channel name at the top

+

+  +

+

+3. At the bottom of the modal that pops up, you will see the Channel ID as well as a button to copy it

+

+  +

+

+

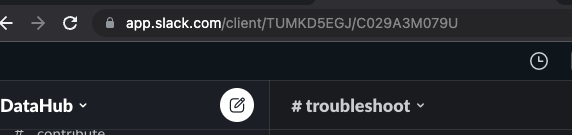

+**Via Web:**

+1. Go to the Slack channel for which you want to get a channel ID

+2. Check the URL e.g. for the troubleshoot channel in OSS DataHub Slack

+

+

+3. Notice `TUMKD5EGJ/C029A3M079U` in the URL

+ - Team ID = `TUMKD5EGJ` from above

+ - Channel ID = `C029A3M079U` from above

+

+## How to find User ID in Slack

+

+**Your User ID**

+1. Click your profile picture, then select **'Profile'**

+

+  +

+

+2. Now hit the **'...'** and select **'Copy member ID'**

+

+  +

+

+

+**Someone else's User ID**

+1. Click their profile picture in the Slack message

+

+  +

+

+2. Now hit the **'...'** and select **'Copy member ID'**

+

+  +

+

diff --git a/docs/managed-datahub/subscription-and-notification.md b/docs/managed-datahub/subscription-and-notification.md

index 81648d4298ec17..0e456fe415b2c3 100644

--- a/docs/managed-datahub/subscription-and-notification.md

+++ b/docs/managed-datahub/subscription-and-notification.md

@@ -5,7 +5,10 @@ import FeatureAvailability from '@site/src/components/FeatureAvailability';

DataHub's Subscriptions and Notifications feature gives you real-time change alerts on data assets of your choice.

-With this feature, you can set up subscriptions to specific changes for an Entity – and DataHub will notify you when those changes happen. Currently, DataHub supports notifications on Slack, with support for Microsoft Teams and email subscriptions forthcoming.

+With this feature, you can set up subscriptions to specific changes for an Entity – and DataHub will notify you when those changes happen. Currently, DataHub supports notifications on Slack and Email, with support for Microsoft Teams forthcoming.

+

+Email will work out of box. For installing the DataHub Slack App, see:

+👉 [Configure Slack for Notifications](slack/saas-slack-setup.md)

@@ -16,7 +19,7 @@ As a user, you can subscribe to and receive notifications about changes such as

## Prerequisites

-Once you have [configured Slack within your DataHub instance](saas-slack-setup.md), you will be able to subscribe to any Entity in DataHub and begin recieving notifications via DM.

+Once you have [configured Slack within your DataHub instance](slack/saas-slack-setup.md), you will be able to subscribe to any Entity in DataHub and begin recieving notifications via DM.

To begin receiving personal notifications, go to Settings > "My Notifications". From here, toggle on Slack Notifications and input your Slack Member ID.

If you want to create and manage group-level Subscriptions for your team, you will need [the following privileges](../../docs/authorization/roles.md#role-privileges):

diff --git a/entity-registry/src/main/java/com/linkedin/metadata/aspect/batch/AspectsBatch.java b/entity-registry/src/main/java/com/linkedin/metadata/aspect/batch/AspectsBatch.java

index 77820948b00cbc..fc4ac90dfabad8 100644

--- a/entity-registry/src/main/java/com/linkedin/metadata/aspect/batch/AspectsBatch.java

+++ b/entity-registry/src/main/java/com/linkedin/metadata/aspect/batch/AspectsBatch.java

@@ -9,6 +9,7 @@

import com.linkedin.util.Pair;

import java.util.ArrayList;

import java.util.Collection;

+import java.util.HashMap;

import java.util.HashSet;

import java.util.List;

import java.util.Map;

@@ -198,16 +199,12 @@ default Map> getNewUrnAspectsMap(

static Map> merge(

@Nonnull Map> a, @Nonnull Map> b) {

- return Stream.concat(a.entrySet().stream(), b.entrySet().stream())

- .flatMap(

- entry ->

- entry.getValue().entrySet().stream()

- .map(innerEntry -> Pair.of(entry.getKey(), innerEntry)))

- .collect(

- Collectors.groupingBy(

- Pair::getKey,

- Collectors.mapping(

- Pair::getValue, Collectors.toMap(Map.Entry::getKey, Map.Entry::getValue))));

+ Map> mergedMap = new HashMap<>();

+ for (Map.Entry> entry :

+ Stream.concat(a.entrySet().stream(), b.entrySet().stream()).collect(Collectors.toList())) {

+ mergedMap.computeIfAbsent(entry.getKey(), k -> new HashMap<>()).putAll(entry.getValue());

+ }

+ return mergedMap;

}

default String toAbbreviatedString(int maxWidth) {

diff --git a/metadata-ingestion-modules/airflow-plugin/src/datahub_airflow_plugin/client/airflow_generator.py b/metadata-ingestion-modules/airflow-plugin/src/datahub_airflow_plugin/client/airflow_generator.py

index 8aa154dc267b60..e9f93c0c1eab0a 100644

--- a/metadata-ingestion-modules/airflow-plugin/src/datahub_airflow_plugin/client/airflow_generator.py

+++ b/metadata-ingestion-modules/airflow-plugin/src/datahub_airflow_plugin/client/airflow_generator.py

@@ -127,6 +127,10 @@ def _get_dependencies(

)

return upstream_tasks

+ @staticmethod

+ def _extract_owners(dag: "DAG") -> List[str]:

+ return [owner.strip() for owner in dag.owner.split(",")]

+

@staticmethod

def generate_dataflow(

config: DatahubLineageConfig,

@@ -175,7 +179,7 @@ def generate_dataflow(

data_flow.url = f"{base_url}/tree?dag_id={dag.dag_id}"

if config.capture_ownership_info and dag.owner:

- owners = [owner.strip() for owner in dag.owner.split(",")]

+ owners = AirflowGenerator._extract_owners(dag)

if config.capture_ownership_as_group:

data_flow.group_owners.update(owners)

else:

@@ -282,10 +286,12 @@ def generate_datajob(

datajob.url = f"{base_url}/taskinstance/list/?flt1_dag_id_equals={datajob.flow_urn.flow_id}&_flt_3_task_id={task.task_id}"

if capture_owner and dag.owner:

- if config and config.capture_ownership_as_group:

- datajob.group_owners.add(dag.owner)

- else:

- datajob.owners.add(dag.owner)

+ if config and config.capture_ownership_info:

+ owners = AirflowGenerator._extract_owners(dag)

+ if config.capture_ownership_as_group:

+ datajob.group_owners.update(owners)

+ else:

+ datajob.owners.update(owners)

if capture_tags and dag.tags:

datajob.tags.update(dag.tags)

diff --git a/metadata-ingestion/docs/sources/abs/README.md b/metadata-ingestion/docs/sources/abs/README.md

new file mode 100644

index 00000000000000..46a234ed305e0b

--- /dev/null

+++ b/metadata-ingestion/docs/sources/abs/README.md

@@ -0,0 +1,40 @@

+This connector ingests Azure Blob Storage (abbreviated to abs) datasets into DataHub. It allows mapping an individual

+file or a folder of files to a dataset in DataHub.

+To specify the group of files that form a dataset, use `path_specs` configuration in ingestion recipe. Refer

+section [Path Specs](https://datahubproject.io/docs/generated/ingestion/sources/s3/#path-specs) for more details.

+

+### Concept Mapping

+

+This ingestion source maps the following Source System Concepts to DataHub Concepts:

+

+| Source Concept | DataHub Concept | Notes |

+|----------------------------------------|--------------------------------------------------------------------------------------------|------------------|

+| `"abs"` | [Data Platform](https://datahubproject.io/docs/generated/metamodel/entities/dataplatform/) | |

+| abs blob / Folder containing abs blobs | [Dataset](https://datahubproject.io/docs/generated/metamodel/entities/dataset/) | |

+| abs container | [Container](https://datahubproject.io/docs/generated/metamodel/entities/container/) | Subtype `Folder` |

+

+This connector supports both local files and those stored on Azure Blob Storage (which must be identified using the

+prefix `http(s)://.blob.core.windows.net/` or `azure://`).

+

+### Supported file types

+

+Supported file types are as follows:

+

+- CSV (*.csv)

+- TSV (*.tsv)

+- JSONL (*.jsonl)

+- JSON (*.json)

+- Parquet (*.parquet)

+- Apache Avro (*.avro)

+

+Schemas for Parquet and Avro files are extracted as provided.

+

+Schemas for schemaless formats (CSV, TSV, JSONL, JSON) are inferred. For CSV, TSV and JSONL files, we consider the first

+100 rows by default, which can be controlled via the `max_rows` recipe parameter (see [below](#config-details))

+JSON file schemas are inferred on the basis of the entire file (given the difficulty in extracting only the first few

+objects of the file), which may impact performance.

+We are working on using iterator-based JSON parsers to avoid reading in the entire JSON object.

+

+### Profiling

+

+Profiling is not available in the current release.

diff --git a/metadata-ingestion/docs/sources/abs/abs.md b/metadata-ingestion/docs/sources/abs/abs.md

new file mode 100644

index 00000000000000..613ace280c8ba0

--- /dev/null

+++ b/metadata-ingestion/docs/sources/abs/abs.md

@@ -0,0 +1,204 @@

+

+### Path Specs

+

+Path Specs (`path_specs`) is a list of Path Spec (`path_spec`) objects, where each individual `path_spec` represents one or more datasets. The include path (`path_spec.include`) represents a formatted path to the dataset. This path must end with `*.*` or `*.[ext]` to represent the leaf level. If `*.[ext]` is provided, then only files with the specified extension type will be scanned. "`.[ext]`" can be any of the [supported file types](#supported-file-types). Refer to [example 1](#example-1---individual-file-as-dataset) below for more details.

+

+All folder levels need to be specified in the include path. You can use `/*/` to represent a folder level and avoid specifying the exact folder name. To map a folder as a dataset, use the `{table}` placeholder to represent the folder level for which the dataset is to be created. For a partitioned dataset, you can use the placeholder `{partition_key[i]}` to represent the name of the `i`th partition and `{partition[i]}` to represent the value of the `i`th partition. During ingestion, `i` will be used to match the partition_key to the partition. Refer to [examples 2 and 3](#example-2---folder-of-files-as-dataset-without-partitions) below for more details.

+

+Exclude paths (`path_spec.exclude`) can be used to ignore paths that are not relevant to the current `path_spec`. This path cannot have named variables (`{}`). The exclude path can have `**` to represent multiple folder levels. Refer to [example 4](#example-4---folder-of-files-as-dataset-with-partitions-and-exclude-filter) below for more details.

+

+Refer to [example 5](#example-5---advanced---either-individual-file-or-folder-of-files-as-dataset) if your container has a more complex dataset representation.

+

+**Additional points to note**

+- Folder names should not contain {, }, *, / in their names.

+- Named variable {folder} is reserved for internal working. please do not use in named variables.

+

+

+### Path Specs - Examples

+#### Example 1 - Individual file as Dataset

+

+Container structure:

+

+```

+test-container

+├── employees.csv

+├── departments.json

+└── food_items.csv

+```

+

+Path specs config to ingest `employees.csv` and `food_items.csv` as datasets:

+```

+path_specs:

+ - include: https://storageaccountname.blob.core.windows.net/test-container/*.csv

+

+```

+This will automatically ignore `departments.json` file. To include it, use `*.*` instead of `*.csv`.

+

+#### Example 2 - Folder of files as Dataset (without Partitions)

+

+Container structure:

+```

+test-container

+└── offers

+ ├── 1.avro

+ └── 2.avro

+

+```

+

+Path specs config to ingest folder `offers` as dataset:

+```

+path_specs:

+ - include: https://storageaccountname.blob.core.windows.net/test-container/{table}/*.avro

+```

+

+`{table}` represents folder for which dataset will be created.

+

+#### Example 3 - Folder of files as Dataset (with Partitions)

+

+Container structure:

+```

+test-container

+├── orders

+│ └── year=2022

+│ └── month=2

+│ ├── 1.parquet

+│ └── 2.parquet

+└── returns

+ └── year=2021

+ └── month=2

+ └── 1.parquet

+

+```

+

+Path specs config to ingest folders `orders` and `returns` as datasets:

+```

+path_specs:

+ - include: https://storageaccountname.blob.core.windows.net/test-container/{table}/{partition_key[0]}={partition[0]}/{partition_key[1]}={partition[1]}/*.parquet

+```

+

+One can also use `include: https://storageaccountname.blob.core.windows.net/test-container/{table}/*/*/*.parquet` here however above format is preferred as it allows declaring partitions explicitly.

+

+#### Example 4 - Folder of files as Dataset (with Partitions), and Exclude Filter

+

+Container structure:

+```

+test-container

+├── orders

+│ └── year=2022

+│ └── month=2

+│ ├── 1.parquet

+│ └── 2.parquet

+└── tmp_orders

+ └── year=2021

+ └── month=2

+ └── 1.parquet

+

+

+```

+

+Path specs config to ingest folder `orders` as dataset but not folder `tmp_orders`:

+```

+path_specs:

+ - include: https://storageaccountname.blob.core.windows.net/test-container/{table}/{partition_key[0]}={partition[0]}/{partition_key[1]}={partition[1]}/*.parquet

+ exclude:

+ - **/tmp_orders/**

+```

+

+

+#### Example 5 - Advanced - Either Individual file OR Folder of files as Dataset

+

+Container structure:

+```

+test-container

+├── customers

+│ ├── part1.json

+│ ├── part2.json

+│ ├── part3.json

+│ └── part4.json

+├── employees.csv

+├── food_items.csv

+├── tmp_10101000.csv

+└── orders

+ └── year=2022

+ └── month=2

+ ├── 1.parquet

+ ├── 2.parquet

+ └── 3.parquet

+

+```

+

+Path specs config:

+```

+path_specs:

+ - include: https://storageaccountname.blob.core.windows.net/test-container/*.csv

+ exclude:

+ - **/tmp_10101000.csv

+ - include: https://storageaccountname.blob.core.windows.net/test-container/{table}/*.json

+ - include: https://storageaccountname.blob.core.windows.net/test-container/{table}/{partition_key[0]}={partition[0]}/{partition_key[1]}={partition[1]}/*.parquet

+```

+

+Above config has 3 path_specs and will ingest following datasets

+- `employees.csv` - Single File as Dataset

+- `food_items.csv` - Single File as Dataset

+- `customers` - Folder as Dataset

+- `orders` - Folder as Dataset

+ and will ignore file `tmp_10101000.csv`

+

+**Valid path_specs.include**

+

+```python

+https://storageaccountname.blob.core.windows.net/my-container/foo/tests/bar.avro # single file table

+https://storageaccountname.blob.core.windows.net/my-container/foo/tests/*.* # mulitple file level tables

+https://storageaccountname.blob.core.windows.net/my-container/foo/tests/{table}/*.avro #table without partition

+https://storageaccountname.blob.core.windows.net/my-container/foo/tests/{table}/*/*.avro #table where partitions are not specified

+https://storageaccountname.blob.core.windows.net/my-container/foo/tests/{table}/*.* # table where no partitions as well as data type specified

+https://storageaccountname.blob.core.windows.net/my-container/{dept}/tests/{table}/*.avro # specifying keywords to be used in display name

+https://storageaccountname.blob.core.windows.net/my-container/{dept}/tests/{table}/{partition_key[0]}={partition[0]}/{partition_key[1]}={partition[1]}/*.avro # specify partition key and value format

+https://storageaccountname.blob.core.windows.net/my-container/{dept}/tests/{table}/{partition[0]}/{partition[1]}/{partition[2]}/*.avro # specify partition value only format

+https://storageaccountname.blob.core.windows.net/my-container/{dept}/tests/{table}/{partition[0]}/{partition[1]}/{partition[2]}/*.* # for all extensions

+https://storageaccountname.blob.core.windows.net/my-container/*/{table}/{partition[0]}/{partition[1]}/{partition[2]}/*.* # table is present at 2 levels down in container

+https://storageaccountname.blob.core.windows.net/my-container/*/*/{table}/{partition[0]}/{partition[1]}/{partition[2]}/*.* # table is present at 3 levels down in container

+```

+

+**Valid path_specs.exclude**

+- \**/tests/**

+- https://storageaccountname.blob.core.windows.net/my-container/hr/**

+- **/tests/*.csv

+- https://storageaccountname.blob.core.windows.net/my-container/foo/*/my_table/**

+

+

+

+If you would like to write a more complicated function for resolving file names, then a {transformer} would be a good fit.

+

+:::caution

+

+Specify as long fixed prefix ( with out /*/ ) as possible in `path_specs.include`. This will reduce the scanning time and cost, specifically on AWS S3

+

+:::

+

+:::caution

+

+Running profiling against many tables or over many rows can run up significant costs.

+While we've done our best to limit the expensiveness of the queries the profiler runs, you

+should be prudent about the set of tables profiling is enabled on or the frequency

+of the profiling runs.

+

+:::

+

+:::caution

+

+If you are ingesting datasets from AWS S3, we recommend running the ingestion on a server in the same region to avoid high egress costs.

+

+:::

+

+### Compatibility

+

+Profiles are computed with PyDeequ, which relies on PySpark. Therefore, for computing profiles, we currently require Spark 3.0.3 with Hadoop 3.2 to be installed and the `SPARK_HOME` and `SPARK_VERSION` environment variables to be set. The Spark+Hadoop binary can be downloaded [here](https://www.apache.org/dyn/closer.lua/spark/spark-3.0.3/spark-3.0.3-bin-hadoop3.2.tgz).

+

+For an example guide on setting up PyDeequ on AWS, see [this guide](https://aws.amazon.com/blogs/big-data/testing-data-quality-at-scale-with-pydeequ/).

+

+:::caution

+

+From Spark 3.2.0+, Avro reader fails on column names that don't start with a letter and contains other character than letters, number, and underscore. [https://github.com/apache/spark/blob/72c62b6596d21e975c5597f8fff84b1a9d070a02/connector/avro/src/main/scala/org/apache/spark/sql/avro/AvroFileFormat.scala#L158]

+Avro files that contain such columns won't be profiled.

+:::

\ No newline at end of file

diff --git a/metadata-ingestion/docs/sources/abs/abs_recipe.yml b/metadata-ingestion/docs/sources/abs/abs_recipe.yml

new file mode 100644

index 00000000000000..4c4e5c678238f7

--- /dev/null

+++ b/metadata-ingestion/docs/sources/abs/abs_recipe.yml

@@ -0,0 +1,13 @@

+source:

+ type: abs

+ config:

+ path_specs:

+ - include: "https://storageaccountname.blob.core.windows.net/covid19-lake/covid_knowledge_graph/csv/nodes/*.*"

+

+ azure_config:

+ account_name: "*****"

+ sas_token: "*****"

+ container_name: "covid_knowledge_graph"

+ env: "PROD"

+

+# sink configs

diff --git a/metadata-ingestion/docs/sources/s3/README.md b/metadata-ingestion/docs/sources/s3/README.md

index b0d354a9b3c2ac..5feda741070240 100644

--- a/metadata-ingestion/docs/sources/s3/README.md

+++ b/metadata-ingestion/docs/sources/s3/README.md

@@ -1,5 +1,5 @@

This connector ingests AWS S3 datasets into DataHub. It allows mapping an individual file or a folder of files to a dataset in DataHub.

-To specify the group of files that form a dataset, use `path_specs` configuration in ingestion recipe. Refer section [Path Specs](https://datahubproject.io/docs/generated/ingestion/sources/s3/#path-specs) for more details.

+Refer to the section [Path Specs](https://datahubproject.io/docs/generated/ingestion/sources/s3/#path-specs) for more details.

:::tip

This connector can also be used to ingest local files.

diff --git a/metadata-ingestion/scripts/modeldocgen.py b/metadata-ingestion/scripts/modeldocgen.py

index ea7813f0ca85bc..ee5f06cb801baa 100644

--- a/metadata-ingestion/scripts/modeldocgen.py

+++ b/metadata-ingestion/scripts/modeldocgen.py

@@ -8,12 +8,12 @@

from dataclasses import Field, dataclass, field

from enum import auto

from pathlib import Path

-from typing import Any, Dict, List, Optional, Tuple, Iterable

+from typing import Any, Dict, Iterable, List, Optional, Tuple

import avro.schema

import click

-from datahub.configuration.common import ConfigEnum, ConfigModel

+from datahub.configuration.common import ConfigEnum, PermissiveConfigModel

from datahub.emitter.mce_builder import make_data_platform_urn, make_dataset_urn

from datahub.emitter.mcp import MetadataChangeProposalWrapper

from datahub.emitter.rest_emitter import DatahubRestEmitter

@@ -22,7 +22,9 @@

from datahub.ingestion.extractor.schema_util import avro_schema_to_mce_fields

from datahub.ingestion.sink.file import FileSink, FileSinkConfig

from datahub.metadata.schema_classes import (

+ BrowsePathEntryClass,

BrowsePathsClass,

+ BrowsePathsV2Class,

DatasetPropertiesClass,

DatasetSnapshotClass,

ForeignKeyConstraintClass,

@@ -34,8 +36,6 @@

StringTypeClass,

SubTypesClass,

TagAssociationClass,

- BrowsePathsV2Class,

- BrowsePathEntryClass,

)

logger = logging.getLogger(__name__)

@@ -493,30 +493,29 @@ def strip_types(field_path: str) -> str:

],

)

+

@dataclass

class EntityAspectName:

entityName: str

aspectName: str

-@dataclass

-class AspectPluginConfig:

+class AspectPluginConfig(PermissiveConfigModel):

className: str

enabled: bool

- supportedEntityAspectNames: List[EntityAspectName]

+ supportedEntityAspectNames: List[EntityAspectName] = []

packageScan: Optional[List[str]] = None

supportedOperations: Optional[List[str]] = None

-@dataclass

-class PluginConfiguration:

+class PluginConfiguration(PermissiveConfigModel):

aspectPayloadValidators: Optional[List[AspectPluginConfig]] = None

mutationHooks: Optional[List[AspectPluginConfig]] = None

mclSideEffects: Optional[List[AspectPluginConfig]] = None

mcpSideEffects: Optional[List[AspectPluginConfig]] = None

-class EntityRegistry(ConfigModel):

+class EntityRegistry(PermissiveConfigModel):

entities: List[EntityDefinition]

events: Optional[List[EventDefinition]]

plugins: Optional[PluginConfiguration] = None

diff --git a/metadata-ingestion/setup.py b/metadata-ingestion/setup.py

index 41c04ca4a433cf..e1a9e6a55909d4 100644

--- a/metadata-ingestion/setup.py

+++ b/metadata-ingestion/setup.py

@@ -258,6 +258,13 @@

*path_spec_common,

}

+abs_base = {

+ "azure-core==1.29.4",

+ "azure-identity>=1.14.0",

+ "azure-storage-blob>=12.19.0",

+ "azure-storage-file-datalake>=12.14.0",

+}

+

data_lake_profiling = {

"pydeequ~=1.1.0",

"pyspark~=3.3.0",

@@ -265,6 +272,7 @@

delta_lake = {

*s3_base,

+ *abs_base,

# Version 0.18.0 broken on ARM Macs: https://github.com/delta-io/delta-rs/issues/2577

"deltalake>=0.6.3, != 0.6.4, < 0.18.0; platform_system == 'Darwin' and platform_machine == 'arm64'",

"deltalake>=0.6.3, != 0.6.4; platform_system != 'Darwin' or platform_machine != 'arm64'",

@@ -407,6 +415,7 @@

| {"cachetools"},

"s3": {*s3_base, *data_lake_profiling},

"gcs": {*s3_base, *data_lake_profiling},

+ "abs": {*abs_base},

"sagemaker": aws_common,

"salesforce": {"simple-salesforce"},

"snowflake": snowflake_common | usage_common | sqlglot_lib,

@@ -686,6 +695,7 @@

"demo-data = datahub.ingestion.source.demo_data.DemoDataSource",

"unity-catalog = datahub.ingestion.source.unity.source:UnityCatalogSource",

"gcs = datahub.ingestion.source.gcs.gcs_source:GCSSource",

+ "abs = datahub.ingestion.source.abs.source:ABSSource",

"sql-queries = datahub.ingestion.source.sql_queries:SqlQueriesSource",

"fivetran = datahub.ingestion.source.fivetran.fivetran:FivetranSource",

"qlik-sense = datahub.ingestion.source.qlik_sense.qlik_sense:QlikSenseSource",

diff --git a/metadata-ingestion/src/datahub/ingestion/api/source.py b/metadata-ingestion/src/datahub/ingestion/api/source.py

index ad1b312ef445c1..788bec97a64884 100644

--- a/metadata-ingestion/src/datahub/ingestion/api/source.py

+++ b/metadata-ingestion/src/datahub/ingestion/api/source.py

@@ -1,3 +1,4 @@

+import contextlib

import datetime

import logging

from abc import ABCMeta, abstractmethod

@@ -10,6 +11,7 @@

Dict,

Generic,

Iterable,

+ Iterator,

List,

Optional,

Sequence,

@@ -97,6 +99,7 @@ def report_log(

context: Optional[str] = None,

exc: Optional[BaseException] = None,

log: bool = False,

+ stacklevel: int = 1,

) -> None:

"""

Report a user-facing warning for the ingestion run.

@@ -109,7 +112,8 @@ def report_log(

exc: The exception associated with the event. We'll show the stack trace when in debug mode.

"""

- stacklevel = 2

+ # One for this method, and one for the containing report_* call.

+ stacklevel = stacklevel + 2

log_key = f"{title}-{message}"

entries = self._entries[level]

@@ -118,6 +122,8 @@ def report_log(

context = f"{context[:_MAX_CONTEXT_STRING_LENGTH]} ..."

log_content = f"{message} => {context}" if context else message

+ if title:

+ log_content = f"{title}: {log_content}"

if exc:

log_content += f"{log_content}: {exc}"

@@ -255,9 +261,10 @@ def report_failure(

context: Optional[str] = None,

title: Optional[LiteralString] = None,

exc: Optional[BaseException] = None,

+ log: bool = True,

) -> None:

self._structured_logs.report_log(

- StructuredLogLevel.ERROR, message, title, context, exc, log=False

+ StructuredLogLevel.ERROR, message, title, context, exc, log=log

)

def failure(

@@ -266,9 +273,10 @@ def failure(

context: Optional[str] = None,

title: Optional[LiteralString] = None,

exc: Optional[BaseException] = None,

+ log: bool = True,

) -> None:

self._structured_logs.report_log(

- StructuredLogLevel.ERROR, message, title, context, exc, log=True

+ StructuredLogLevel.ERROR, message, title, context, exc, log=log

)

def info(

@@ -277,11 +285,30 @@ def info(

context: Optional[str] = None,

title: Optional[LiteralString] = None,

exc: Optional[BaseException] = None,

+ log: bool = True,

) -> None:

self._structured_logs.report_log(

- StructuredLogLevel.INFO, message, title, context, exc, log=True

+ StructuredLogLevel.INFO, message, title, context, exc, log=log

)

+ @contextlib.contextmanager

+ def report_exc(

+ self,

+ message: LiteralString,

+ title: Optional[LiteralString] = None,

+ context: Optional[str] = None,

+ level: StructuredLogLevel = StructuredLogLevel.ERROR,

+ ) -> Iterator[None]:

+ # Convenience method that helps avoid boilerplate try/except blocks.

+ # TODO: I'm not super happy with the naming here - it's not obvious that this

+ # suppresses the exception in addition to reporting it.

+ try:

+ yield

+ except Exception as exc:

+ self._structured_logs.report_log(

+ level, message=message, title=title, context=context, exc=exc

+ )

+

def __post_init__(self) -> None:

self.start_time = datetime.datetime.now()

self.running_time: datetime.timedelta = datetime.timedelta(seconds=0)

diff --git a/metadata-ingestion/src/datahub/ingestion/run/pipeline.py b/metadata-ingestion/src/datahub/ingestion/run/pipeline.py

index e61ffa46b3c107..60930f03763ed9 100644

--- a/metadata-ingestion/src/datahub/ingestion/run/pipeline.py

+++ b/metadata-ingestion/src/datahub/ingestion/run/pipeline.py

@@ -379,13 +379,19 @@ def _notify_reporters_on_ingestion_completion(self) -> None:

for reporter in self.reporters:

try:

reporter.on_completion(

- status="CANCELLED"

- if self.final_status == PipelineStatus.CANCELLED

- else "FAILURE"

- if self.has_failures()

- else "SUCCESS"

- if self.final_status == PipelineStatus.COMPLETED

- else "UNKNOWN",

+ status=(

+ "CANCELLED"

+ if self.final_status == PipelineStatus.CANCELLED

+ else (

+ "FAILURE"

+ if self.has_failures()

+ else (

+ "SUCCESS"

+ if self.final_status == PipelineStatus.COMPLETED

+ else "UNKNOWN"

+ )

+ )

+ ),

report=self._get_structured_report(),

ctx=self.ctx,

)

@@ -425,7 +431,7 @@ def _time_to_print(self) -> bool:

return True

return False

- def run(self) -> None:

+ def run(self) -> None: # noqa: C901

with contextlib.ExitStack() as stack:

if self.config.flags.generate_memory_profiles:

import memray

@@ -436,6 +442,8 @@ def run(self) -> None:

)

)

+ stack.enter_context(self.sink)

+

self.final_status = PipelineStatus.UNKNOWN

self._notify_reporters_on_ingestion_start()

callback = None

@@ -460,7 +468,17 @@ def run(self) -> None:

if not self.dry_run:

self.sink.handle_work_unit_start(wu)

try:

- record_envelopes = self.extractor.get_records(wu)

+ # Most of this code is meant to be fully stream-based instead of generating all records into memory.

+ # However, the extractor in particular will never generate a particularly large list. We want the

+ # exception reporting to be associated with the source, and not the transformer. As such, we

+ # need to materialize the generator returned by get_records().

+ record_envelopes = list(self.extractor.get_records(wu))

+ except Exception as e:

+ self.source.get_report().failure(

+ "Source produced bad metadata", context=wu.id, exc=e

+ )

+ continue

+ try:

for record_envelope in self.transform(record_envelopes):

if not self.dry_run:

try:

@@ -482,9 +500,9 @@ def run(self) -> None:

)

# TODO: Transformer errors should cause the pipeline to fail.

- self.extractor.close()

if not self.dry_run:

self.sink.handle_work_unit_end(wu)

+ self.extractor.close()

self.source.close()

# no more data is coming, we need to let the transformers produce any additional records if they are holding on to state

for record_envelope in self.transform(

@@ -518,8 +536,6 @@ def run(self) -> None:

self._notify_reporters_on_ingestion_completion()

- self.sink.close()

-

def transform(self, records: Iterable[RecordEnvelope]) -> Iterable[RecordEnvelope]:

"""

Transforms the given sequence of records by passing the records through the transformers

diff --git a/metadata-ingestion/src/datahub/ingestion/source/abs/__init__.py b/metadata-ingestion/src/datahub/ingestion/source/abs/__init__.py

new file mode 100644

index 00000000000000..e69de29bb2d1d6

diff --git a/metadata-ingestion/src/datahub/ingestion/source/abs/config.py b/metadata-ingestion/src/datahub/ingestion/source/abs/config.py

new file mode 100644

index 00000000000000..c62239527a1200

--- /dev/null

+++ b/metadata-ingestion/src/datahub/ingestion/source/abs/config.py

@@ -0,0 +1,163 @@

+import logging

+from typing import Any, Dict, List, Optional, Union

+

+import pydantic

+from pydantic.fields import Field

+

+from datahub.configuration.common import AllowDenyPattern

+from datahub.configuration.source_common import DatasetSourceConfigMixin

+from datahub.configuration.validate_field_deprecation import pydantic_field_deprecated

+from datahub.configuration.validate_field_rename import pydantic_renamed_field

+from datahub.ingestion.source.abs.datalake_profiler_config import DataLakeProfilerConfig

+from datahub.ingestion.source.azure.azure_common import AzureConnectionConfig

+from datahub.ingestion.source.data_lake_common.config import PathSpecsConfigMixin

+from datahub.ingestion.source.data_lake_common.path_spec import PathSpec

+from datahub.ingestion.source.state.stale_entity_removal_handler import (

+ StatefulStaleMetadataRemovalConfig,

+)

+from datahub.ingestion.source.state.stateful_ingestion_base import (

+ StatefulIngestionConfigBase,

+)

+from datahub.ingestion.source_config.operation_config import is_profiling_enabled

+

+# hide annoying debug errors from py4j

+logging.getLogger("py4j").setLevel(logging.ERROR)

+logger: logging.Logger = logging.getLogger(__name__)

+

+

+class DataLakeSourceConfig(

+ StatefulIngestionConfigBase, DatasetSourceConfigMixin, PathSpecsConfigMixin

+):

+ platform: str = Field(

+ default="",

+ description="The platform that this source connects to (either 'abs' or 'file'). "

+ "If not specified, the platform will be inferred from the path_specs.",

+ )

+

+ azure_config: Optional[AzureConnectionConfig] = Field(

+ default=None, description="Azure configuration"

+ )

+

+ stateful_ingestion: Optional[StatefulStaleMetadataRemovalConfig] = None

+ # Whether to create Datahub Azure Container properties

+ use_abs_container_properties: Optional[bool] = Field(

+ None,

+ description="Whether to create tags in datahub from the abs container properties",

+ )

+ # Whether to create Datahub Azure blob tags

+ use_abs_blob_tags: Optional[bool] = Field(

+ None,

+ description="Whether to create tags in datahub from the abs blob tags",

+ )

+ # Whether to create Datahub Azure blob properties

+ use_abs_blob_properties: Optional[bool] = Field(

+ None,

+ description="Whether to create tags in datahub from the abs blob properties",

+ )

+

+ # Whether to update the table schema when schema in files within the partitions are updated

+ _update_schema_on_partition_file_updates_deprecation = pydantic_field_deprecated(

+ "update_schema_on_partition_file_updates",

+ message="update_schema_on_partition_file_updates is deprecated. This behaviour is the default now.",

+ )

+

+ profile_patterns: AllowDenyPattern = Field(

+ default=AllowDenyPattern.allow_all(),

+ description="regex patterns for tables to profile ",

+ )

+ profiling: DataLakeProfilerConfig = Field(

+ default=DataLakeProfilerConfig(), description="Data profiling configuration"

+ )

+

+ spark_driver_memory: str = Field(

+ default="4g", description="Max amount of memory to grant Spark."

+ )

+

+ spark_config: Dict[str, Any] = Field(

+ description='Spark configuration properties to set on the SparkSession. Put config property names into quotes. For example: \'"spark.executor.memory": "2g"\'',

+ default={},

+ )

+

+ max_rows: int = Field(

+ default=100,

+ description="Maximum number of rows to use when inferring schemas for TSV and CSV files.",

+ )

+ add_partition_columns_to_schema: bool = Field(

+ default=False,

+ description="Whether to add partition fields to the schema.",

+ )

+ verify_ssl: Union[bool, str] = Field(

+ default=True,

+ description="Either a boolean, in which case it controls whether we verify the server's TLS certificate, or a string, in which case it must be a path to a CA bundle to use.",

+ )

+

+ number_of_files_to_sample: int = Field(

+ default=100,

+ description="Number of files to list to sample for schema inference. This will be ignored if sample_files is set to False in the pathspec.",

+ )

+

+ _rename_path_spec_to_plural = pydantic_renamed_field(

+ "path_spec", "path_specs", lambda path_spec: [path_spec]

+ )

+

+ def is_profiling_enabled(self) -> bool:

+ return self.profiling.enabled and is_profiling_enabled(

+ self.profiling.operation_config

+ )

+

+ @pydantic.validator("path_specs", always=True)

+ def check_path_specs_and_infer_platform(

+ cls, path_specs: List[PathSpec], values: Dict

+ ) -> List[PathSpec]:

+ if len(path_specs) == 0:

+ raise ValueError("path_specs must not be empty")

+

+ # Check that all path specs have the same platform.

+ guessed_platforms = set(

+ "abs" if path_spec.is_abs else "file" for path_spec in path_specs

+ )

+ if len(guessed_platforms) > 1:

+ raise ValueError(

+ f"Cannot have multiple platforms in path_specs: {guessed_platforms}"

+ )

+ guessed_platform = guessed_platforms.pop()

+

+ # Ensure abs configs aren't used for file sources.

+ if guessed_platform != "abs" and (

+ values.get("use_abs_container_properties")

+ or values.get("use_abs_blob_tags")

+ or values.get("use_abs_blob_properties")

+ ):

+ raise ValueError(

+ "Cannot grab abs blob/container tags when platform is not abs. Remove the flag or use abs."

+ )

+

+ # Infer platform if not specified.

+ if values.get("platform") and values["platform"] != guessed_platform:

+ raise ValueError(

+ f"All path_specs belong to {guessed_platform} platform, but platform is set to {values['platform']}"

+ )

+ else:

+ logger.debug(f'Setting config "platform": {guessed_platform}')

+ values["platform"] = guessed_platform

+

+ return path_specs

+

+ @pydantic.validator("platform", always=True)

+ def platform_not_empty(cls, platform: str, values: dict) -> str:

+ inferred_platform = values.get(

+ "platform", None

+ ) # we may have inferred it above

+ platform = platform or inferred_platform

+ if not platform:

+ raise ValueError("platform must not be empty")

+ return platform

+

+ @pydantic.root_validator()

+ def ensure_profiling_pattern_is_passed_to_profiling(

+ cls, values: Dict[str, Any]

+ ) -> Dict[str, Any]:

+ profiling: Optional[DataLakeProfilerConfig] = values.get("profiling")

+ if profiling is not None and profiling.enabled:

+ profiling._allow_deny_patterns = values["profile_patterns"]

+ return values

diff --git a/metadata-ingestion/src/datahub/ingestion/source/abs/datalake_profiler_config.py b/metadata-ingestion/src/datahub/ingestion/source/abs/datalake_profiler_config.py

new file mode 100644

index 00000000000000..9f6d13a08b182e

--- /dev/null

+++ b/metadata-ingestion/src/datahub/ingestion/source/abs/datalake_profiler_config.py

@@ -0,0 +1,92 @@

+from typing import Any, Dict, Optional

+

+import pydantic

+from pydantic.fields import Field

+

+from datahub.configuration import ConfigModel

+from datahub.configuration.common import AllowDenyPattern

+from datahub.ingestion.source_config.operation_config import OperationConfig

+

+

+class DataLakeProfilerConfig(ConfigModel):

+ enabled: bool = Field(

+ default=False, description="Whether profiling should be done."

+ )

+ operation_config: OperationConfig = Field(

+ default_factory=OperationConfig,

+ description="Experimental feature. To specify operation configs.",

+ )

+

+ # These settings will override the ones below.

+ profile_table_level_only: bool = Field(

+ default=False,

+ description="Whether to perform profiling at table-level only or include column-level profiling as well.",

+ )

+

+ _allow_deny_patterns: AllowDenyPattern = pydantic.PrivateAttr(

+ default=AllowDenyPattern.allow_all(),

+ )

+

+ max_number_of_fields_to_profile: Optional[pydantic.PositiveInt] = Field(

+ default=None,

+ description="A positive integer that specifies the maximum number of columns to profile for any table. `None` implies all columns. The cost of profiling goes up significantly as the number of columns to profile goes up.",

+ )

+

+ include_field_null_count: bool = Field(

+ default=True,

+ description="Whether to profile for the number of nulls for each column.",

+ )

+ include_field_min_value: bool = Field(

+ default=True,

+ description="Whether to profile for the min value of numeric columns.",

+ )

+ include_field_max_value: bool = Field(

+ default=True,

+ description="Whether to profile for the max value of numeric columns.",

+ )

+ include_field_mean_value: bool = Field(

+ default=True,

+ description="Whether to profile for the mean value of numeric columns.",

+ )

+ include_field_median_value: bool = Field(

+ default=True,

+ description="Whether to profile for the median value of numeric columns.",

+ )

+ include_field_stddev_value: bool = Field(

+ default=True,

+ description="Whether to profile for the standard deviation of numeric columns.",

+ )

+ include_field_quantiles: bool = Field(

+ default=True,

+ description="Whether to profile for the quantiles of numeric columns.",

+ )

+ include_field_distinct_value_frequencies: bool = Field(

+ default=True, description="Whether to profile for distinct value frequencies."

+ )

+ include_field_histogram: bool = Field(

+ default=True,

+ description="Whether to profile for the histogram for numeric fields.",

+ )

+ include_field_sample_values: bool = Field(

+ default=True,

+ description="Whether to profile for the sample values for all columns.",

+ )

+

+ @pydantic.root_validator()

+ def ensure_field_level_settings_are_normalized(

+ cls: "DataLakeProfilerConfig", values: Dict[str, Any]

+ ) -> Dict[str, Any]:

+ max_num_fields_to_profile_key = "max_number_of_fields_to_profile"

+ max_num_fields_to_profile = values.get(max_num_fields_to_profile_key)

+

+ # Disable all field-level metrics.

+ if values.get("profile_table_level_only"):

+ for field_level_metric in cls.__fields__:

+ if field_level_metric.startswith("include_field_"):

+ values.setdefault(field_level_metric, False)

+

+ assert (

+ max_num_fields_to_profile is None

+ ), f"{max_num_fields_to_profile_key} should be set to None"

+

+ return values

diff --git a/metadata-ingestion/src/datahub/ingestion/source/abs/profiling.py b/metadata-ingestion/src/datahub/ingestion/source/abs/profiling.py

new file mode 100644

index 00000000000000..c969b229989e84

--- /dev/null

+++ b/metadata-ingestion/src/datahub/ingestion/source/abs/profiling.py

@@ -0,0 +1,472 @@

+import dataclasses

+from typing import Any, List, Optional

+

+from pandas import DataFrame

+from pydeequ.analyzers import (

+ AnalysisRunBuilder,

+ AnalysisRunner,

+ AnalyzerContext,

+ ApproxCountDistinct,

+ ApproxQuantile,

+ ApproxQuantiles,

+ Histogram,

+ Maximum,

+ Mean,

+ Minimum,

+ StandardDeviation,

+)

+from pyspark.sql import SparkSession

+from pyspark.sql.functions import col, count, isnan, when

+from pyspark.sql.types import (

+ DataType as SparkDataType,

+ DateType,

+ DecimalType,

+ DoubleType,

+ FloatType,

+ IntegerType,

+ LongType,

+ NullType,

+ ShortType,

+ StringType,

+ TimestampType,

+)

+

+from datahub.emitter.mce_builder import get_sys_time

+from datahub.ingestion.source.profiling.common import (

+ Cardinality,

+ convert_to_cardinality,

+)

+from datahub.ingestion.source.s3.datalake_profiler_config import DataLakeProfilerConfig

+from datahub.ingestion.source.s3.report import DataLakeSourceReport

+from datahub.metadata.schema_classes import (

+ DatasetFieldProfileClass,

+ DatasetProfileClass,

+ HistogramClass,

+ QuantileClass,

+ ValueFrequencyClass,

+)

+from datahub.telemetry import stats, telemetry

+

+NUM_SAMPLE_ROWS = 20

+QUANTILES = [0.05, 0.25, 0.5, 0.75, 0.95]

+MAX_HIST_BINS = 25

+

+

+def null_str(value: Any) -> Optional[str]:

+ # str() with a passthrough for None.

+ return str(value) if value is not None else None

+

+

+@dataclasses.dataclass

+class _SingleColumnSpec:

+ column: str

+ column_profile: DatasetFieldProfileClass

+

+ # if the histogram is a list of value frequencies (discrete data) or bins (continuous data)

+ histogram_distinct: Optional[bool] = None

+

+ type_: SparkDataType = NullType # type:ignore

+

+ unique_count: Optional[int] = None

+ non_null_count: Optional[int] = None

+ cardinality: Optional[Cardinality] = None

+

+

+class _SingleTableProfiler:

+ spark: SparkSession

+ dataframe: DataFrame

+ analyzer: AnalysisRunBuilder

+ column_specs: List[_SingleColumnSpec]

+ row_count: int

+ profiling_config: DataLakeProfilerConfig

+ file_path: str

+ columns_to_profile: List[str]

+ ignored_columns: List[str]

+ profile: DatasetProfileClass

+ report: DataLakeSourceReport

+

+ def __init__(

+ self,

+ dataframe: DataFrame,

+ spark: SparkSession,

+ profiling_config: DataLakeProfilerConfig,

+ report: DataLakeSourceReport,

+ file_path: str,

+ ):

+ self.spark = spark

+ self.dataframe = dataframe

+ self.analyzer = AnalysisRunner(spark).onData(dataframe)

+ self.column_specs = []

+ self.row_count = dataframe.count()

+ self.profiling_config = profiling_config

+ self.file_path = file_path

+ self.columns_to_profile = []

+ self.ignored_columns = []

+ self.profile = DatasetProfileClass(timestampMillis=get_sys_time())

+ self.report = report

+

+ self.profile.rowCount = self.row_count

+ self.profile.columnCount = len(dataframe.columns)

+

+ column_types = {x.name: x.dataType for x in dataframe.schema.fields}

+

+ if self.profiling_config.profile_table_level_only:

+ return

+

+ # get column distinct counts

+ for column in dataframe.columns:

+ if not self.profiling_config._allow_deny_patterns.allowed(column):

+ self.ignored_columns.append(column)

+ continue

+

+ self.columns_to_profile.append(column)

+ # Normal CountDistinct is ridiculously slow

+ self.analyzer.addAnalyzer(ApproxCountDistinct(column))

+

+ if self.profiling_config.max_number_of_fields_to_profile is not None:

+ if (

+ len(self.columns_to_profile)

+ > self.profiling_config.max_number_of_fields_to_profile

+ ):

+ columns_being_dropped = self.columns_to_profile[

+ self.profiling_config.max_number_of_fields_to_profile :

+ ]

+ self.columns_to_profile = self.columns_to_profile[

+ : self.profiling_config.max_number_of_fields_to_profile

+ ]

+

+ self.report.report_file_dropped(

+ f"The max_number_of_fields_to_profile={self.profiling_config.max_number_of_fields_to_profile} reached. Profile of columns {self.file_path}({', '.join(sorted(columns_being_dropped))})"

+ )

+

+ analysis_result = self.analyzer.run()

+ analysis_metrics = AnalyzerContext.successMetricsAsJson(

+ self.spark, analysis_result

+ )

+

+ # reshape distinct counts into dictionary

+ column_distinct_counts = {

+ x["instance"]: int(x["value"])

+ for x in analysis_metrics

+ if x["name"] == "ApproxCountDistinct"

+ }

+

+ select_numeric_null_counts = [

+ count(

+ when(

+ isnan(c) | col(c).isNull(),

+ c,

+ )

+ ).alias(c)

+ for c in self.columns_to_profile

+ if column_types[column] in [DoubleType, FloatType]

+ ]

+

+ # PySpark doesn't support isnan() on non-float/double columns

+ select_nonnumeric_null_counts = [

+ count(

+ when(

+ col(c).isNull(),

+ c,

+ )

+ ).alias(c)

+ for c in self.columns_to_profile

+ if column_types[column] not in [DoubleType, FloatType]

+ ]

+

+ null_counts = dataframe.select(

+ select_numeric_null_counts + select_nonnumeric_null_counts

+ )

+ column_null_counts = null_counts.toPandas().T[0].to_dict()

+ column_null_fractions = {

+ c: column_null_counts[c] / self.row_count if self.row_count != 0 else 0

+ for c in self.columns_to_profile

+ }

+ column_nonnull_counts = {

+ c: self.row_count - column_null_counts[c] for c in self.columns_to_profile

+ }

+

+ column_unique_proportions = {

+ c: (

+ column_distinct_counts[c] / column_nonnull_counts[c]

+ if column_nonnull_counts[c] > 0

+ else 0

+ )

+ for c in self.columns_to_profile

+ }

+

+ if self.profiling_config.include_field_sample_values:

+ # take sample and convert to Pandas DataFrame

+ if self.row_count < NUM_SAMPLE_ROWS:

+ # if row count is less than number to sample, just take all rows

+ rdd_sample = dataframe.rdd.take(self.row_count)

+ else:

+ rdd_sample = dataframe.rdd.takeSample(False, NUM_SAMPLE_ROWS, seed=0)

+

+ # init column specs with profiles

+ for column in self.columns_to_profile:

+ column_profile = DatasetFieldProfileClass(fieldPath=column)

+

+ column_spec = _SingleColumnSpec(column, column_profile)

+

+ column_profile.uniqueCount = column_distinct_counts.get(column)

+ column_profile.uniqueProportion = column_unique_proportions.get(column)

+ column_profile.nullCount = column_null_counts.get(column)

+ column_profile.nullProportion = column_null_fractions.get(column)

+ if self.profiling_config.include_field_sample_values:

+ column_profile.sampleValues = sorted(

+ [str(x[column]) for x in rdd_sample]

+ )

+

+ column_spec.type_ = column_types[column]

+ column_spec.cardinality = convert_to_cardinality(

+ column_distinct_counts[column],

+ column_null_fractions[column],

+ )

+

+ self.column_specs.append(column_spec)

+

+ def prep_min_value(self, column: str) -> None:

+ if self.profiling_config.include_field_min_value:

+ self.analyzer.addAnalyzer(Minimum(column))

+

+ def prep_max_value(self, column: str) -> None:

+ if self.profiling_config.include_field_max_value:

+ self.analyzer.addAnalyzer(Maximum(column))

+

+ def prep_mean_value(self, column: str) -> None:

+ if self.profiling_config.include_field_mean_value:

+ self.analyzer.addAnalyzer(Mean(column))

+

+ def prep_median_value(self, column: str) -> None:

+ if self.profiling_config.include_field_median_value:

+ self.analyzer.addAnalyzer(ApproxQuantile(column, 0.5))

+

+ def prep_stdev_value(self, column: str) -> None:

+ if self.profiling_config.include_field_stddev_value:

+ self.analyzer.addAnalyzer(StandardDeviation(column))

+

+ def prep_quantiles(self, column: str) -> None:

+ if self.profiling_config.include_field_quantiles: