This sample demonstrates the usage of Depth Anything V2 (small) converted to Core ML. It allows for real-time depth estimation on iOS devices.

We leverage coremltools for model conversion and compression. You can read more about it here.

- Download `DepthAnythingV2SmallF16.mlpackage` from the Hugging Face Hub and place it inside the `DepthApp/models` folder.
- Open `DepthSample.xcodeproj` in Xcode.
- Build & run the project!
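Before building, it can help to confirm that the model landed where the project expects it. The helper below is a hypothetical sketch, not part of the sample. It assumes the default path `DepthApp/models/DepthAnythingV2SmallF16.mlpackage` relative to the current directory. Because an `.mlpackage` is a directory, it treats the package as complete when that directory contains a `Manifest.json` file.

```shell
# Hypothetical helper (not part of the sample): check that the Core ML
# package is present before opening the Xcode project.
# An .mlpackage is a directory; a complete one has Manifest.json at its root.
model_is_in_place() {
  # Assumed default location, taken from the setup steps above.
  local pkg="${1:-DepthApp/models/DepthAnythingV2SmallF16.mlpackage}"
  if [ -d "$pkg" ] && [ -f "$pkg/Manifest.json" ]; then
    echo "ok: $pkg"
    return 0
  fi
  echo "missing or incomplete: $pkg" >&2
  return 1
}
```

For example, from a directory that contains both `DepthApp/` and `DepthSample.xcodeproj` (an assumption about the checkout layout), `model_is_in_place && open DepthSample.xcodeproj` opens the project in Xcode on macOS only when the package is in place.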

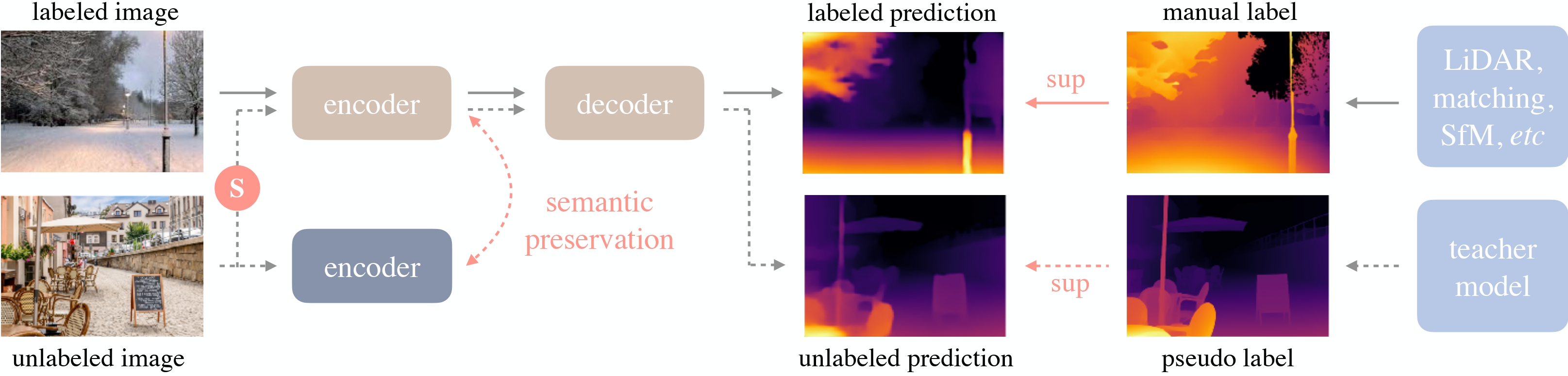

Depth Anything V2 was introduced in the paper of the same name by Lihe Yang et al. It keeps the architecture of the original Depth Anything release but trains with synthetic data and a larger-capacity teacher model, which yields much finer and more robust depth predictions. The original Depth Anything model was introduced in the paper Depth Anything: Unleashing the Power of Large-Scale Unlabeled Data by Lihe Yang et al., and was first released in this repository.

Depth Anything leverages the DPT architecture with a DINOv2 backbone.

The model is trained on ~600K synthetic labeled images and ~62 million real unlabeled images, obtaining state-of-the-art results for both relative and absolute depth estimation.

Depth Anything overview. Taken from the original paper.

Core ML packages are available in the `apple/coreml-depth-anything-v2-small` repository on the Hugging Face Hub.

Install `huggingface-cli`:

```shell
brew install huggingface-cli
```

Download `DepthAnythingV2SmallF16.mlpackage` to the `models` directory:

```shell
huggingface-cli download \
  --local-dir models --local-dir-use-symlinks False \
  apple/coreml-depth-anything-v2-small \
  --include "DepthAnythingV2SmallF16.mlpackage/*"
```

To download all the model versions, including quantized ones, skip the `--include` argument.
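If you download every variant, a small helper can show which packages actually arrived. This is a hypothetical sketch: the name `list_model_variants` is illustrative, and it assumes each variant is stored as `<name>.mlpackage` directly under the `models` directory, the way the F16 package is placed by the download command.

```shell
# Hypothetical helper: print the name of every .mlpackage found in a
# directory (default: models). Returns 1 if none are found.
# Assumes each variant is stored as <name>.mlpackage directly in that directory.
list_model_variants() {
  local dir="${1:-models}"
  local pkg found=1
  for pkg in "$dir"/*.mlpackage; do
    # If nothing matches, the glob stays literal and this test fails.
    if [ -d "$pkg" ]; then
      basename "$pkg" .mlpackage
      found=0
    fi
  done
  return "$found"
}
```

After the single-package download, `list_model_variants models` should print `DepthAnythingV2SmallF16`; after a full download it prints one line per variant.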