-

Notifications

You must be signed in to change notification settings - Fork 28

Adapting the PLE Settings

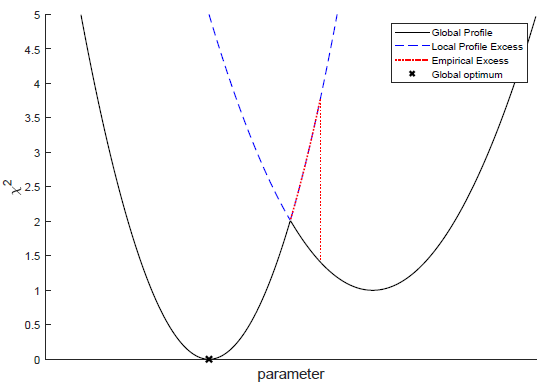

Parameter uncertainty analyses in d2d are mainly handled by exploiting the Profile Likelihood, which is initialized by calling the function arPLEInit which sets up the struct ar.ple containing the default specifications for the Profile Likelihood Algorithm. Subsequently, parameter profiles are calculated by use of the function ple, sampling the profile likelihood by iteratively proposing parameter steps and locally optimizing the objective function with the profile parameter being constrained.

Since the default settings may not always work satisfactorily, this article summarizes the most important algorithm configurations which can be easily modified by the user and some useful functions related to this problem. To this end, a short introduction to how ple actually proposes parameter steps is necessary.

It is usually just required that the likelihood profile crosses a certain objective function threshold if possible, thus these profiles need only to be calculated up until this point. Since it is not previously known for which parameters this threshold is crossed, profiles need to be calculated iteratively, sampling the profile towards the lower and upper parameter threshold by repetition of

- Start from

$P_{Last} = P_{New}$ - Propose a parameter step

$P_{Trial} = P_{Last} + P_{Step}$ - Constrained Fit to new optimum:

$P_{New} \gets P_{Trial}$

where

For such an iterative method to be applicable over a broad range of problems, the step choice needs to adapt to many possible forms of the profile. Since the objective function threshold is unchanged over all possible cases, the step size adaption should be related to the changes in the objective function values. This is implemented by adapting the step size until the criterion

is met. Since

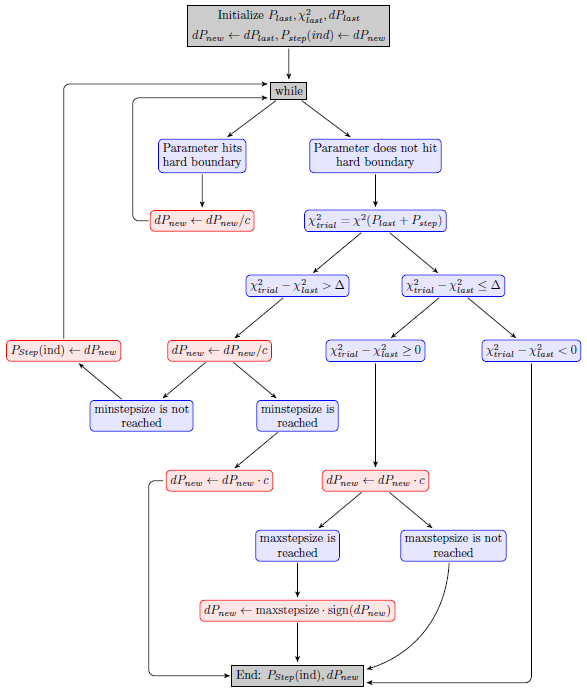

In the current implementation, the default step choice algorithm proposes steps only in the profile parameter, leaving all other parameters unchanged.

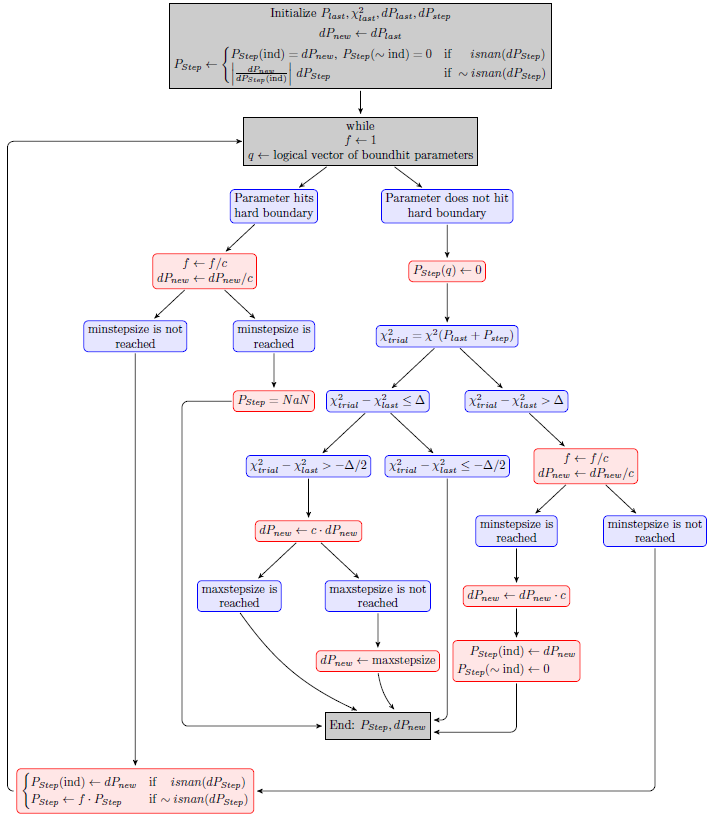

From theoretical considerations it seems promising to use prior information on the trajectory of the profile through parameter space to propose the next step. Such an alternative step choice is implemented simply by using the direction of the previously made step.

The fact that this flowchart looks more involved than the last one is due to stability issues arising from changing many parameters at once in each step. Hence, although in theory such a step choice is simple, the implementation gets messy and this is bound to happen to any other algorithm proposing steps in various directions.

Understanding the basic principle of these methods allows us to understand and modify various settings of the profile likelihood algorithm. Note that most options have to be set after arPLEInit is called, since the defaults are set by this function.

-

Changing the step choice algorithm is done by setting the argument

modeinarPLEInit([],[],mode).mode = 1corresponds to the direct step choice (default), whilemode = 2switches to the inert choice. Extensive testing of the inert step choice in comparison to the direct choice revealed a massive increase in performance as measured by timing and whether the profiles actually reach the threshold if possible. This is a result of the proposed steps changing the objective function in a manner which is closer to the upper limit in most cases, making sampling only as dense as it needs to be. However in a tiny subset of cases, the inert step choice may behave less stably than the direct step choice in models with poorly specified integration or fit tolerances or for profiles with too many peaks. Nevertheless, use ofmode = 2is encouraged and if it proves its worth over time, this should be used as a new default. -

Default maximal and minimal stepsizes are stored in

ar.ple.maxstepsizeandar.ple.minstepsize. While the maximal stepsize is proportional to the range of the parameter space of the profile parameter, the minimal stepsize is a default constant. Thus, changing the minstepsize might be necessary for some parameters, while the maximal stepsize is usually roughly satisfactory. Note that these stepsizes exclusively correspond to the size of the step in the profile parameter. -

ar.ple.samplesizespecifies the maximal number of steps attempted in the negative and positive direction from the starting point and is set to 100 by default. If the algorithm terminates prematurely because of this condition, the functionpleExtendcan be used to recover without recalculation of the whole profile. -

The upper limit

$\Delta$ is set inar.ple.relchi2stepincreaseas a relative factor (0.1) with respect to the profile thresholdicdf('chi2',0.95,1)$\approx 3.84$ (which in theory is used to construct 95%-confidence intervals) and this can be modified to influence sampling sparsity. Note that increasing this factor might empirically make the sampling density more appropriate, but it makes the algorithm more prone to errors since the theoretical upper limit for each step is increased simultaneously. -

While searching for the optimal step size, the proposed step

$P_{Step}$ is varied byar.ple.stepfaktor(default = 1.5) and the objective function needs to be re-evaluated for the new$P_{Trial}$ . Looking for a step factor value which maximizes performance in a general setting may be worthwile, although not relevant for the day-to-day user.

- Due to optimization after each step being local, the existance of local optima may lead to peaks or jumps in the sampled profile, although a profile should always be continuous by definition. This can be fixed by use of the function

pleSmooth, which smoothens these peaks to the true profile form.

-

In order to recover plots for individual profiles after they have already been calculated, use the function

plePlot. -

To suppress the plotting which happens in parallel to the profile sampling, set the flag

ar.ple.showCalculation = 0. Especially for less time intensive models the relative difference in run time is appreciable. -

In order to get a feeling of how parameter changes along the profile influence predicted model trajectories, use

arPLETrajectories. -

By setting the flag

ar.ple.allowbetteroptimum = 1the profile calculation sets a new optimum if an optimum with smaller objective function value is found while scanning the parameter space by virtue of the profile calculation. In this context, setting the new optimum means that the corresponding parameters ar stored inar.pand automatically used as the initials for the following profiles. Caution: A new optimum is only recognized if decreases the objective function byar.ple.optimset_tol, which takes the value 0.1 by default. -

By default, integration of the model trajectories in the ple-setting is performed without relying on sensitivities to decrease computational effort. This works reasonable enough in most instances, although it can be a severe source of problems in models with complicated objective function landscapes. Switching to

ar.ple.usesensis = trueforces the integrator to use sensitivites, yielding more accurate predictions. Hint: Ifar.ple.chi2sinit - ar.ple.chi2s < 0for any value of the index, this is a good indicator that integration might be problematic, since in theory this is prohibited. -

For a pool of various reasons, it might not be desirable to specify a saving directory every time

arPLEInitis called. By setting the fourth argument in this function tofalse, automatic saving is turned off. In default settings, the progress is saved after calculation of a profile is finished.

- Installation and system requirements

- Setting up models

- First steps

- Advanced events and pre-equilibration

- Computation of integration-based prediction bands

- How is the architecture of the code and the most important commands?

- What are the most important fields of the global variable ar?

- What are the most important functions?

- Optimization algorithms available in the d2d-framework

- Objective function, likelhood and chi-square in the d2d framework

- How to set up priors?

- How to set up steady state constraints?

- How do I restart the solver upon a step input?

- How to deal with integrator tolerances?

- How to implement a bolus injection?

- How to implement washing and an injection?

- How to implement a moment ODE model?

- How to run PLE calculations on a Cluster?